Chris Percy

Initial results of the Digital Consciousness Model

Jan 22, 2026

Abstract: Artificially intelligent systems have become remarkably sophisticated. They hold conversations, write essays, and seem to understand context in ways that surprise even their creators. This raises a crucial question: Are we creating systems that are conscious? The Digital Consciousness Model (DCM) is a first attempt to assess the evidence for consciousness in AI systems in a systematic, probabilistic way. It provides a shared framework for comparing different AIs and biological organisms, and for tracking how the evidence changes over time as AI develops. Instead of adopting a single theory of consciousness, it incorporates a range of leading theories and perspectives - acknowledging that experts disagree fundamentally about what consciousness is and what conditions are necessary for it. This report describes the structure and initial results of the Digital Consciousness Model. Overall, we find that the evidence is against 2024 LLMs being conscious, but that it is not decisive. The evidence against LLM consciousness is much weaker than the evidence against consciousness in simpler AI systems.

Accountability in AI: From Principles to Industry-specific Accreditation

Oct 08, 2021

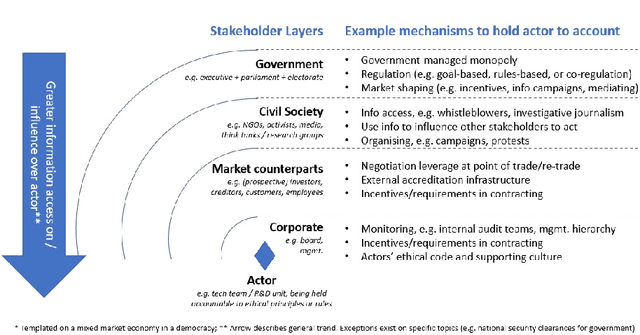

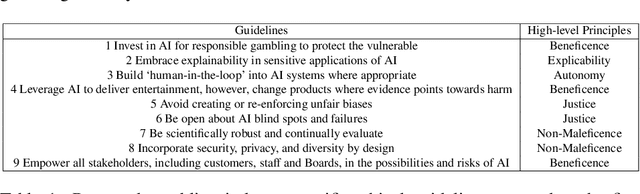

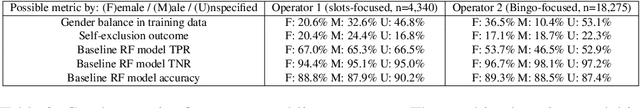

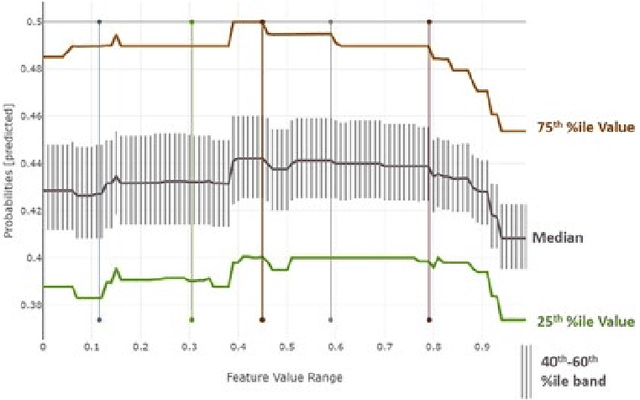

Abstract: Recent AI-related scandals have put a spotlight on accountability in AI, with increasing public interest and concern. This paper draws on literature from public policy and governance to make two contributions. First, we propose an AI accountability ecosystem as a useful lens on the system, with different stakeholders requiring and contributing to specific accountability mechanisms. We argue that the present ecosystem is unbalanced, with a need for improved transparency via AI explainability and adequate documentation and process formalisation to support internal audit, leading up eventually to external accreditation processes. Second, we use a case study in the gambling sector to illustrate, within a subset of the overall ecosystem, the need for industry-specific accountability principles and processes. We define and critically evaluate the implementation of key accountability principles in the gambling industry, namely addressing algorithmic bias and model explainability, before concluding and discussing directions for future work based on our findings. Keywords: Accountability, Explainable AI, Algorithmic Bias, Regulation.
