Artjom Zern

Assignment Flows for Data Labeling on Graphs: Convergence and Stability

Feb 26, 2020

Abstract: The assignment flow, recently introduced in J. Math. Imaging and Vision 58/2 (2017), constitutes a high-dimensional dynamical system that evolves on an elementary statistical manifold and performs contextual labeling (classification) of data given in any metric space. Vertices of a given graph index the data points and define a system of neighborhoods. These neighborhoods, together with nonnegative weight parameters, define the regularization of the evolution of label assignments to data points through geometric averaging induced by the affine e-connection of information geometry. From the viewpoint of evolutionary game dynamics, the assignment flow may be characterized as a large system of replicator equations that are coupled by geometric averaging. This paper establishes conditions on the weight parameters that guarantee convergence of the continuous-time assignment flow to integral assignments (labelings), up to a negligible subset of situations that will not be encountered when working with real data in practice. Furthermore, we classify attractors of the flow and quantify the corresponding basins of attraction. This provides convergence guarantees for the assignment flow, which are extended to the discrete-time assignment flow that results from applying a Runge-Kutta-Munthe-Kaas scheme for numerical geometric integration of the assignment flow. Several counter-examples illustrate that violating the conditions may entail unfavorable behavior of the assignment flow regarding contextual data classification.
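The characterization above (replicator equations coupled by geometric averaging) can be illustrated with a minimal NumPy sketch. It is a simplified toy, not the paper's implementation: it omits the likelihood map built from data distances, assumes the initial assignments `W0` already encode the data, assumes row-normalized weights, and uses a first-order multiplicative (geometric Euler) update rather than a higher-order Runge-Kutta-Munthe-Kaas scheme. All function names, the graph, and the parameter values are hypothetical.

```python
import numpy as np

def normalize_rows(M):
    # Project each row back onto the probability simplex.
    return M / M.sum(axis=1, keepdims=True)

def geometric_average(W, weights, eps=1e-12):
    # Weighted geometric mean of neighboring assignment vectors,
    # renormalized per row. weights[i, k] > 0 iff k is a neighbor of i;
    # rows of `weights` are assumed to sum to 1.
    # eps guards against log(0) once assignments become nearly integral.
    log_mean = weights @ np.log(np.clip(W, eps, None))
    log_mean -= log_mean.max(axis=1, keepdims=True)
    return normalize_rows(np.exp(log_mean))

def replicator_field(W, F):
    # Continuous-time replicator vector field per vertex:
    # dW_i/dt = W_i * (F_i - <W_i, F_i>), shown for reference.
    return W * (F - (W * F).sum(axis=1, keepdims=True))

def geometric_euler_step(W, weights, h):
    # Multiplicative update; its derivative with respect to h at h = 0
    # equals replicator_field(W, S), and rows stay on the simplex.
    S = geometric_average(W, weights)
    return normalize_rows(W * np.exp(h * S))

def run_flow(W0, weights, h=0.5, steps=200):
    W = W0.copy()
    for _ in range(steps):
        W = geometric_euler_step(W, weights, h)
    return W

# Toy example: path graph with 3 vertices and 2 labels.
# Each neighborhood contains the vertex itself and its adjacent vertices.
adjacency = np.array([[1, 1, 0],
                      [1, 1, 1],
                      [0, 1, 1]], dtype=float)
weights = normalize_rows(adjacency)  # uniform weights (an assumption)
W0 = np.array([[0.7, 0.3],
               [0.4, 0.6],
               [0.8, 0.2]])
W = run_flow(W0, weights)
labels = W.argmax(axis=1)  # labeling read off after rounding
```

In this toy setup the middle vertex initially prefers the second label, while its neighbors prefer the first; the averaging over neighborhoods is what lets context override the local preference. How the weights must be chosen for convergence to integral assignments in general is the subject of the paper and is not captured by this sketch.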

Self-Assignment Flows for Unsupervised Data Labeling on Graphs

Nov 08, 2019

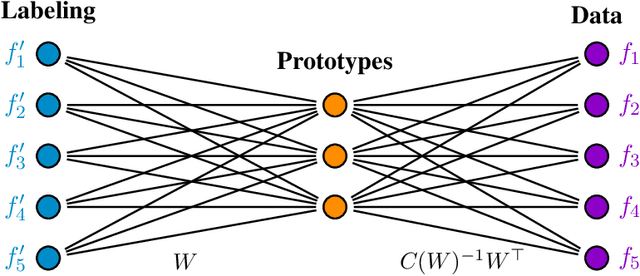

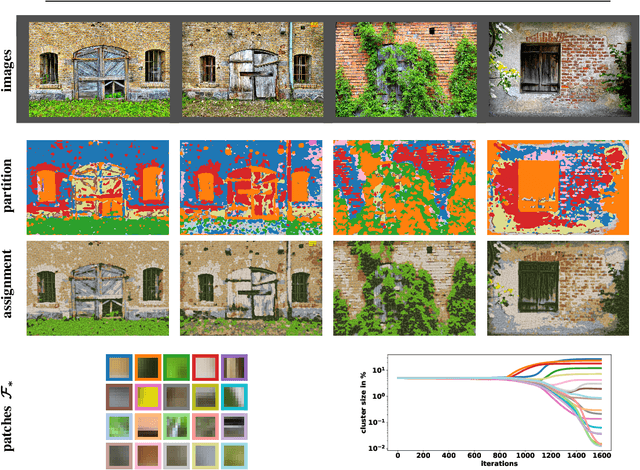

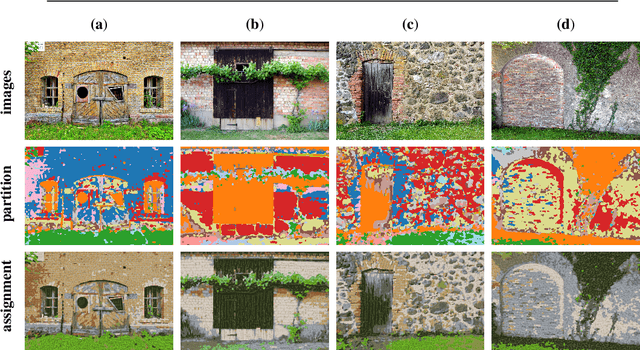

Abstract: This paper extends the recently introduced assignment flow approach for supervised image labeling to unsupervised scenarios where no labels are given. The resulting self-assignment flow takes a pairwise data affinity matrix as input data and maximizes the correlation with a low-rank matrix that is parametrized by the variables of the assignment flow, which entails an assignment of the data to themselves through the formation of latent labels (feature prototypes). A single user parameter, the neighborhood size for the geometric regularization of assignments, drives the entire process. By smooth geodesic interpolation between different normalizations of self-assignment matrices on the positive definite matrix manifold, a one-parameter family of self-assignment flows is defined. Accordingly, our approach can be characterized from different viewpoints, e.g. as performing spatially regularized, rank-constrained discrete optimal transport, or as computing spatially regularized normalized spectral cuts. Regarding combinatorial optimization, our approach successfully determines completely positive factorizations of self-assignments in large-scale scenarios, subject to spatial regularization. Various experiments, including the unsupervised learning of patch dictionaries using a locally invariant distance function, illustrate the properties of the approach.

Unsupervised Assignment Flow: Label Learning on Feature Manifolds by Spatially Regularized Geometric Assignment

Apr 24, 2019

Abstract: This paper introduces the unsupervised assignment flow that couples the assignment flow for supervised image labeling with Riemannian gradient flows for label evolution on feature manifolds. The latter component of the approach encompasses extensions of state-of-the-art clustering approaches to manifold-valued data. Coupling label evolution with the spatially regularized assignment flow induces a sparsifying effect that enables learning compact label dictionaries in an unsupervised manner. Our approach alleviates the requirement for supervised labeling to have proper labels at hand, because an initial set of labels can evolve and adapt to better values while being assigned to given data. The separation between feature and assignment manifolds enables flexible application, which is demonstrated for three scenarios with manifold-valued features. Experiments demonstrate a beneficial effect in both directions: adaptivity of labels improves image labeling, and steering label evolution by spatially regularized assignments leads to proper labels, because the assignment flow for supervised labeling is used exactly, without any approximation, for label learning.
