Artem Lensky

Detecting Blinks in Healthy and Parkinson's EEG: A Deep Learning Perspective

Sep 05, 2025

Abstract: Blinks in electroencephalography (EEG) are often treated as unwanted artifacts. However, recent studies have demonstrated that blink rate and its variability are important physiological markers for monitoring cognitive load, attention, and potential neurological disorders. This paper addresses the task of accurate blink detection by evaluating various deep learning models for segmenting EEG signals into involuntary blinks and non-blinks. We present a pipeline for blink detection using 1, 3, or 5 frontal EEG electrodes. The problem is formulated as a sequence-to-sequence task and tested on various deep learning architectures, including standard recurrent neural networks, convolutional neural networks (both standard and depth-wise), temporal convolutional networks (TCN), transformer-based models, and hybrid architectures. The models were trained on raw EEG signals with minimal pre-processing. Training and testing were carried out on a public dataset of 31 subjects collected at UCSD. The dataset consists of 15 healthy participants and 16 patients with Parkinson's disease (PD), allowing us to verify the models' robustness to tremor. Of all models, the CNN-RNN hybrid consistently outperformed the others, achieving the best blink detection accuracy of 93.8%, 95.4%, and 95.8% with 1, 3, and 5 channels in the healthy cohort and, correspondingly, 73.8%, 75.4%, and 75.8% in patients with PD. The paper compares neural networks for the task of segmenting EEG recordings into involuntary blinks and non-blinks, which allows blink rate and other statistics to be computed.
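As a rough illustration of the last step described in this abstract, the sketch below turns a per-sample blink/non-blink segmentation into blink events and a blink rate. It is a minimal sketch under assumptions, not the authors' pipeline: the function names, the 0/1 label encoding, and the sampling rate argument `fs` are all hypothetical.

```python
from typing import List, Tuple


def blink_events(mask: List[int]) -> List[Tuple[int, int]]:
    """Return (start, end) sample indices of each run of 1s; end is exclusive.

    Assumes a hypothetical encoding where 1 marks a blink sample and 0 a non-blink sample.
    """
    events = []
    start = None
    for i, label in enumerate(mask):
        if label == 1 and start is None:
            start = i  # a blink run begins here
        elif label != 1 and start is not None:
            events.append((start, i))  # the run ended at the previous sample
            start = None
    if start is not None:
        events.append((start, len(mask)))  # run extends to the end of the recording
    return events


def blink_rate_per_minute(mask: List[int], fs: float) -> float:
    """Number of blink events divided by the recording duration in minutes."""
    duration_min = len(mask) / fs / 60.0
    if duration_min == 0:
        return 0.0
    return len(blink_events(mask)) / duration_min


# Usage: a 60 s recording at an assumed 10 Hz label rate with two blinks.
mask = [0] * 600
mask[10:15] = [1] * 5
mask[100:105] = [1] * 5
rate = blink_rate_per_minute(mask, fs=10.0)
```

Other statistics, such as blink duration or inter-blink interval variability, could be derived from the same `(start, end)` pairs.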

Central and Central-Parietal EEG Signatures of Parkinson's Disease

Mar 16, 2025

Abstract: This study investigates EEG as a potential early biomarker by applying deep learning techniques to resting-state EEG recordings from 31 subjects (15 with PD and 16 healthy controls). EEG signals were rigorously preprocessed to remove tremor artifacts, then converted to wavelet-based images by grouping spatially adjacent electrodes into triplets for convolutional neural network (CNN) classification. Our analysis across different brain regions and frequency bands showed distinct spatial-spectral patterns of PD-related neural oscillations. We obtained a classification accuracy of 74% in the gamma band (40-62.4 Hz) for central-parietal electrodes (CP1, Pz, CP2), and 76% using central electrodes (C3, Cz, C4) with the full 0.4-62.4 Hz spectrum. In particular, we observed pronounced right-hemisphere involvement, specifically in parieto-occipital regions. Unlike previous studies that achieved higher accuracies by potentially including tremor artifacts, our approach isolates genuine neurophysiological alterations in cortical activity. These findings suggest that specific EEG-based oscillatory patterns, especially central-parietal gamma activity, may provide diagnostic information for PD, potentially before the onset of motor symptoms.

Zero- and Few-Shots Knowledge Graph Triplet Extraction with Large Language Models

Dec 04, 2023

Abstract: In this work, we tested the Triplet Extraction (TE) capabilities of a variety of Large Language Models (LLMs) of different sizes in the Zero- and Few-Shots settings. In detail, we proposed a pipeline that dynamically gathers contextual information from a Knowledge Base (KB), both in the form of context triplets and of (sentence, triplets) pairs as examples, and provides it to the LLM through a prompt. The additional context allowed the LLMs to be competitive with all the older fully trained baselines based on the Bidirectional Long Short-Term Memory (BiLSTM) Network architecture. We further conducted a detailed analysis of the quality of the gathered KB context, finding it to be strongly correlated with the final TE performance of the model. In contrast, the size of the model appeared to only logarithmically improve the TE capabilities of the LLMs.
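The prompt-construction idea in this abstract, where context triplets and (sentence, triplets) example pairs gathered from a KB are passed to the LLM, might look roughly like the sketch below. The prompt wording, the `build_prompt` helper, and the triplet formatting are illustrative assumptions; the abstract does not specify the actual template or retrieval method.

```python
from typing import List, Tuple

Triplet = Tuple[str, str, str]  # (subject, predicate, object)


def format_triplets(triplets: List[Triplet]) -> str:
    """Render triplets in a hypothetical '(s, p, o); (s, p, o)' text form."""
    return "; ".join(f"({s}, {p}, {o})" for s, p, o in triplets)


def build_prompt(
    sentence: str,
    context_triplets: List[Triplet],
    examples: List[Tuple[str, List[Triplet]]],
) -> str:
    """Assemble a zero-shot (no examples) or few-shot prompt for triplet extraction."""
    lines = [
        "Extract knowledge graph triplets (subject, predicate, object) from the sentence."
    ]
    if context_triplets:
        # Context triplets retrieved from the KB (retrieval itself is out of scope here).
        lines.append("Context triplets: " + format_triplets(context_triplets))
    for ex_sentence, ex_triplets in examples:
        # Each example is a (sentence, triplets) pair shown before the query.
        lines.append(f"Sentence: {ex_sentence}")
        lines.append("Triplets: " + format_triplets(ex_triplets))
    lines.append(f"Sentence: {sentence}")
    lines.append("Triplets:")
    return "\n".join(lines)


# Usage: a one-shot prompt with a single context triplet (all data made up for illustration).
prompt = build_prompt(
    "Canberra is the capital of Australia.",
    context_triplets=[("Australia", "continent", "Oceania")],
    examples=[("Paris is the capital of France.", [("France", "capital", "Paris")])],
)
```

Passing an empty `examples` list gives the zero-shot variant; the returned string would then be sent to whichever LLM is being evaluated.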

Comparing Deep Learning Models for the Task of Volatility Prediction Using Multivariate Data

Jun 23, 2023

Abstract: This study aims to compare multiple deep learning-based forecasters for the task of predicting volatility using multivariate data. The paper evaluates a range of models, starting from simpler and shallower ones and progressing to deeper and more complex architectures. Additionally, the performance of these models is compared against naive predictions and variations of classical GARCH models. The prediction of volatility for five assets, namely S&P500, NASDAQ100, gold, silver, and oil, is specifically addressed using GARCH models, Multi-Layer Perceptrons, Recurrent Neural Networks, Temporal Convolutional Networks, and the Temporal Fusion Transformer. In the majority of cases, the Temporal Fusion Transformer, followed by variants of the Temporal Convolutional Network, outperformed classical approaches and shallow networks. These experiments were repeated, and the differences observed between the competing models were found to be statistically significant, thus providing strong encouragement for their practical application.

kNN-Res: Residual Neural Network with kNN-Graph coherence for point cloud registration

Mar 31, 2023

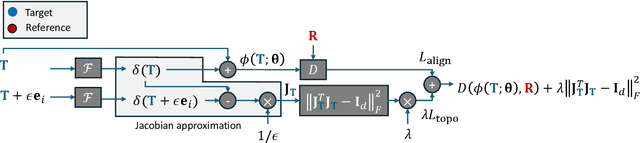

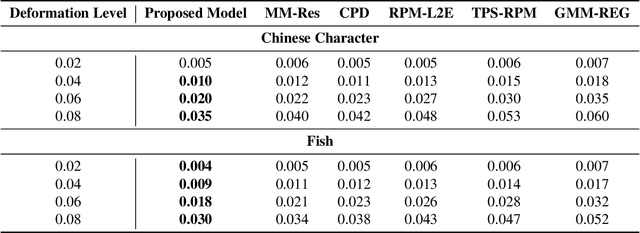

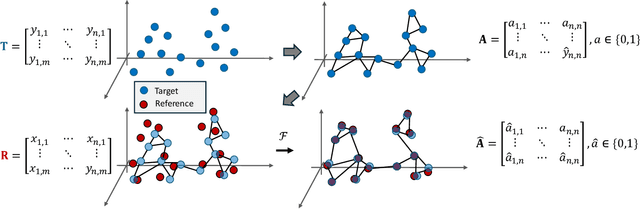

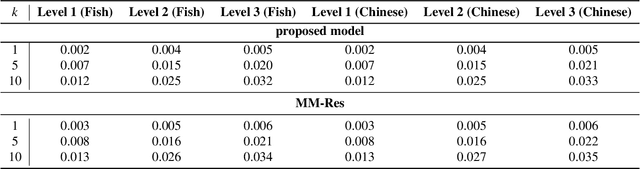

Abstract: In this paper, we present a residual neural network-based method for point set registration. Given a target and a reference point cloud, the goal is to learn a minimal transformation that aligns the target to the reference under the constraint that the topological structure of the target point cloud is preserved. Similar to coherent point drift (CPD), the registration (alignment) problem is viewed as the movement of data points sampled from a target distribution along a regularized displacement vector field. While the coherence constraint in CPD is stated in terms of local motion coherence, the proposed regularization term relies on a global smoothness constraint as a proxy for preserving local topology. This makes CPD less flexible when the deformation is locally rigid but globally non-rigid as in the case of multiple objects and articulate pose registration. To mitigate these issues, a Jacobian-based cost function along with geometric-aware statistical distances is proposed. The latter allows for measuring misalignment between the target and the reference. The justification for the kNN-graph preservation of target data, when the Jacobian cost is used, is also provided. Further, a stochastic approximation for high dimensional registration is introduced to make a high-dimensional alignment feasible. The proposed method is tested on high-dimensional Flow Cytometry to align two data distributions whilst preserving the kNN-graph of the data. The implementation of the proposed approach is available at https://github.com/MuhammadSaeedBatikh/kNN-Res_Demo/ under the MIT license.
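To make the notion of kNN-graph preservation concrete, the sketch below measures what fraction of each point's k nearest neighbours survive a transformation. This is only a diagnostic under assumptions, not the Jacobian-based cost or the residual network from the paper; the function names are hypothetical and the brute-force neighbour search is chosen for clarity over speed.

```python
import math
from typing import List, Sequence, Set


def knn_sets(points: Sequence[Sequence[float]], k: int) -> List[Set[int]]:
    """Brute-force k nearest neighbours (by Euclidean distance) of every point."""
    result = []
    for i, p in enumerate(points):
        # Sort all other points by distance to p; ties are broken by index.
        dists = sorted((math.dist(p, q), j) for j, q in enumerate(points) if j != i)
        result.append({j for _, j in dists[:k]})
    return result


def knn_preservation(
    before: Sequence[Sequence[float]], after: Sequence[Sequence[float]], k: int
) -> float:
    """Fraction of kNN-graph edges of `before` that are still present in `after`.

    Returns 1.0 when every point keeps exactly the same k neighbours.
    """
    if k == 0 or len(before) == 0:
        return 1.0
    shared = sum(len(a & b) for a, b in zip(knn_sets(before, k), knn_sets(after, k)))
    return shared / (k * len(before))


# Usage: a rigid translation keeps the kNN graph intact.
target = [(0.0, 0.0), (1.0, 0.0), (3.0, 0.0), (7.0, 0.0)]
translated = [(x + 5.0, y + 5.0) for x, y in target]
score = knn_preservation(target, translated, k=1)
```

A score well below 1.0 after registration would suggest that the transformation rearranged local neighbourhoods in the target data.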
