Muhammad S. Battikh

kNN-Res: Residual Neural Network with kNN-Graph coherence for point cloud registration

Mar 31, 2023

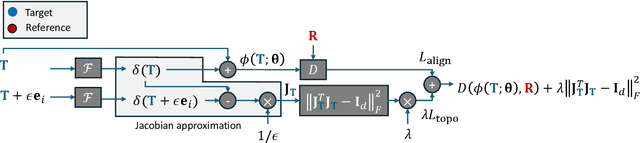

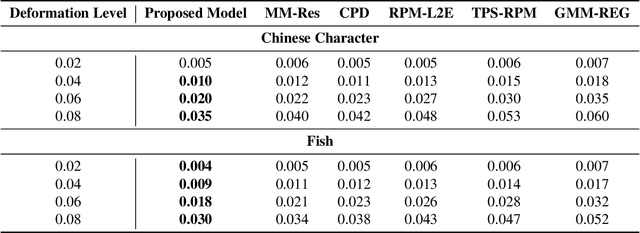

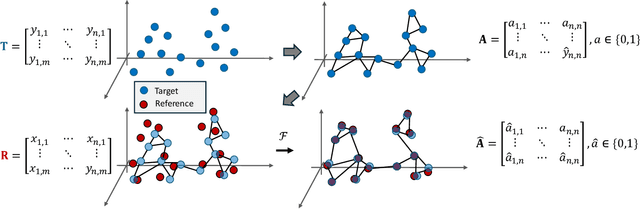

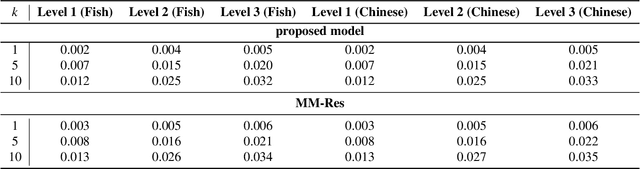

Abstract: In this paper, we present a residual neural network-based method for point set registration. Given a target and a reference point cloud, the goal is to learn a minimal transformation that aligns the target to the reference under the constraint that the topological structure of the target point cloud is preserved. Similar to coherent point drift (CPD), the registration (alignment) problem is viewed as the movement of data points sampled from a target distribution along a regularized displacement vector field. While the coherence constraint in CPD is stated in terms of local motion coherence, its regularization term relies on a global smoothness constraint as a proxy for preserving local topology. This makes CPD less flexible when the deformation is locally rigid but globally non-rigid, as in the case of multiple objects and articulated pose registration. To mitigate these issues, a Jacobian-based cost function is proposed along with geometry-aware statistical distances. The latter measure the misalignment between the target and the reference. A justification for why the Jacobian cost preserves the kNN-graph of the target data is also provided. Further, a stochastic approximation is introduced to make high-dimensional registration feasible. The proposed method is tested on high-dimensional flow cytometry data, aligning two data distributions while preserving the kNN-graph of the data. The implementation of the proposed approach is available at https://github.com/MuhammadSaeedBatikh/kNN-Res_Demo/ under the MIT license.
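To make the kNN-graph constraint mentioned in the abstract concrete, the following is a minimal sketch of how one might measure whether a transformation keeps each point's nearest neighbours. It is not taken from the linked repository: the function names `knn_graph` and `knn_preservation`, the brute-force neighbour search, and the toy point sets are all assumptions made for illustration.

```python
import math

def knn_graph(points, k):
    """Return, for each point index, the set of indices of its k nearest neighbours."""
    graph = []
    for i, p in enumerate(points):
        # Brute-force search: sort the other points by Euclidean distance to p
        # (ties broken by index so the result is deterministic).
        others = sorted(
            (j for j in range(len(points)) if j != i),
            key=lambda j: (math.dist(p, points[j]), j),
        )
        graph.append(set(others[:k]))
    return graph

def knn_preservation(before, after, k):
    """Fraction of kNN edges of `before` that are still kNN edges in `after`."""
    g0, g1 = knn_graph(before, k), knn_graph(after, k)
    kept = sum(len(a & b) for a, b in zip(g0, g1))
    return kept / (k * len(before))

# Toy 2-D target cloud with two clusters (illustrative data only).
target = [(0.0, 0.0), (1.0, 0.0), (0.0, 2.0), (5.0, 5.0), (7.0, 5.0)]

# A rigid shift moves every point but leaves all neighbourhoods intact.
shifted = [(x + 3.0, y - 2.0) for x, y in target]

# Hypothetical map that drags point 0 into the other cluster.
torn = [(6.0, 5.5) if i == 0 else p for i, p in enumerate(target)]

print(knn_preservation(target, shifted, k=2))  # 1.0
print(knn_preservation(target, torn, k=2))     # below 1.0
```

A score of 1.0 means every neighbour relation survived the map, while the second map scores lower because it moves one point into a different neighbourhood. The quadratic-time search is only suitable for small examples; a spatial index would be the usual choice for larger clouds.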

Latent-Insensitive autoencoders for Anomaly Detection

Nov 14, 2021

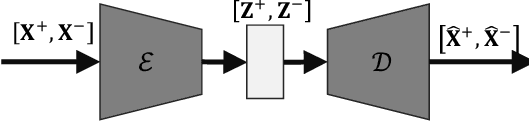

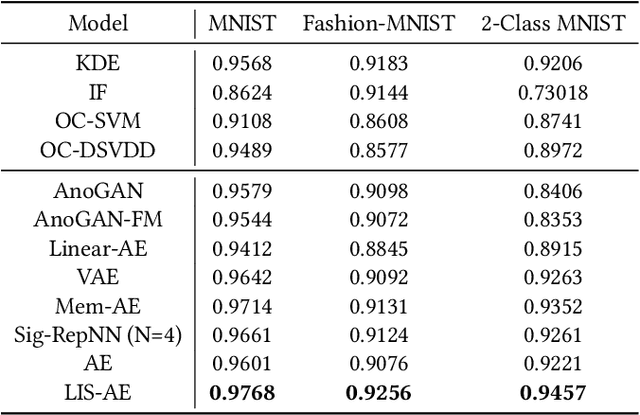

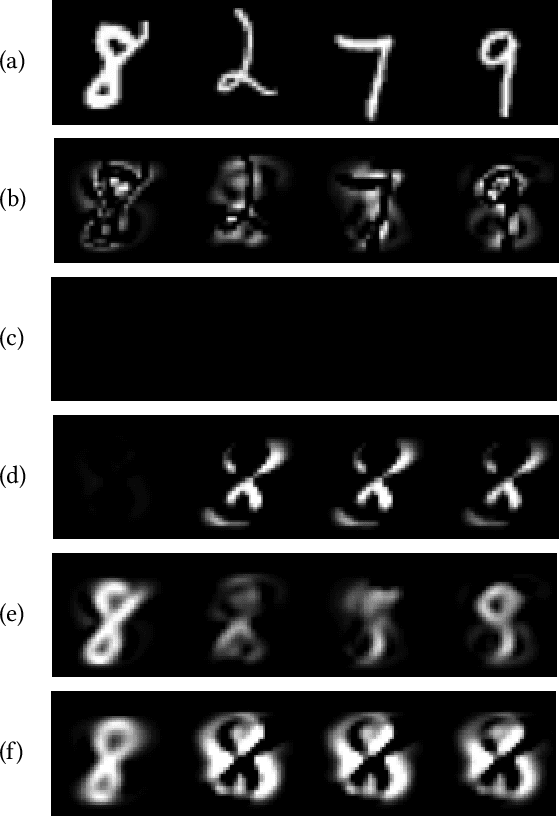

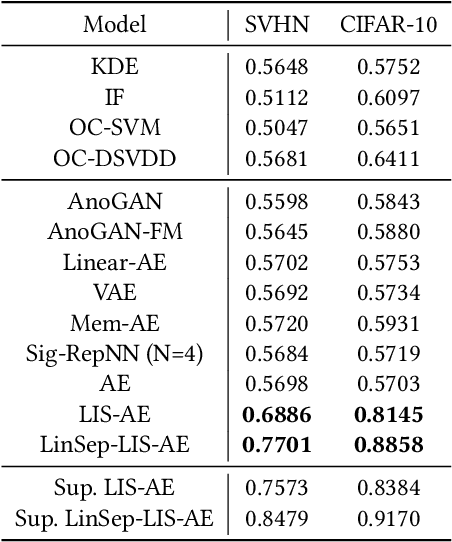

Abstract: Reconstruction-based approaches to anomaly detection tend to fall short when applied to complex datasets with target classes that possess high inter-class variance. Similar to the idea of self-taught learning used in transfer learning, many domains are rich with similar unlabeled datasets that could be leveraged as a proxy for out-of-distribution samples. In this paper, we introduce the Latent-Insensitive autoencoder (LIS-AE), where unlabeled data from a similar domain is used as negative examples to shape the latent layer (bottleneck) of a regular autoencoder such that it is only capable of reconstructing one task. We provide theoretical justification for the proposed training process and loss functions, along with an extensive ablation study highlighting important aspects of our model. We test our model in multiple anomaly detection settings, presenting quantitative and qualitative analysis that showcases the significant performance improvement of our model on anomaly detection tasks.
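As background for the term "reconstruction-based", the sketch below shows the general scoring idea only: a sample is flagged as anomalous when a model with a narrow bottleneck reconstructs it poorly. It does not implement LIS-AE or its training procedure. The fixed linear map with a one-dimensional bottleneck is a hypothetical stand-in for a trained autoencoder, and all names (`axis`, `encode`, `decode`, `anomaly_score`) are assumptions for illustration.

```python
import math

# Hypothetical stand-in for a trained autoencoder: a fixed linear map with a
# one-dimensional bottleneck along the unit direction `axis` (the line y = x).
axis = (1 / math.sqrt(2), 1 / math.sqrt(2))

def encode(x):
    """Project a 2-D point onto the bottleneck axis; the latent code is one number."""
    return x[0] * axis[0] + x[1] * axis[1]

def decode(z):
    """Map a latent code back to the 2-D input space."""
    return (z * axis[0], z * axis[1])

def anomaly_score(x):
    """Squared reconstruction error; larger values mean more anomalous."""
    r = decode(encode(x))
    return (x[0] - r[0]) ** 2 + (x[1] - r[1]) ** 2

normal = (2.0, 2.1)    # close to the line the bottleneck can represent
anomaly = (2.0, -2.0)  # far from that line

print(anomaly_score(normal))
print(anomaly_score(anomaly))
```

The point near the representable line gets a small score and the point far from it gets a large one. In practice the threshold separating the two would be chosen on held-out data.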
