Arman Bolatov

Where Does Warm-Up Come From? Adaptive Scheduling for Norm-Constrained Optimizers

Feb 05, 2026

Abstract: We study adaptive learning rate scheduling for norm-constrained optimizers (e.g., Muon and Lion). We introduce a generalized smoothness assumption under which local curvature decreases with the suboptimality gap and empirically verify that this behavior holds along optimization trajectories. Under this assumption, we establish convergence guarantees under an appropriate choice of learning rate, for which warm-up followed by decay arises naturally from the proof rather than being imposed heuristically. Building on this theory, we develop a practical learning rate scheduler that relies only on standard hyperparameters and adapts the warm-up duration automatically at the beginning of training. We evaluate this method on large language model pretraining with LLaMA architectures and show that our adaptive warm-up selection consistently outperforms or at least matches the best manually tuned warm-up schedules across all considered setups, without additional hyperparameter search. Our source code is available at https://github.com/brain-lab-research/llm-baselines/tree/warmup
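For orientation, the kind of hand-tuned "warm-up followed by decay" schedule that the abstract uses as its baseline can be sketched as below. This is not the adaptive scheduler proposed in the paper: the linear warm-up, the cosine decay shape, and the function and parameter names (`warmup_cosine_lr`, `warmup_steps`, `peak_lr`, `final_lr`) are all assumptions chosen for illustration. The point is only that `warmup_steps` is the extra hyperparameter such a schedule requires.

```python
import math


def warmup_cosine_lr(step, total_steps, warmup_steps, peak_lr, final_lr=0.0):
    """Learning rate at a 0-indexed step: linear warm-up, then cosine decay.

    Hypothetical helper for illustration; the shape of both phases is assumed.
    """
    if step < warmup_steps:
        # Ramp linearly so that the last warm-up step reaches peak_lr.
        return peak_lr * (step + 1) / warmup_steps
    # Fraction of the decay phase completed, clipped to [0, 1].
    decay_steps = max(1, total_steps - warmup_steps)
    progress = min(1.0, (step - warmup_steps) / decay_steps)
    return final_lr + 0.5 * (peak_lr - final_lr) * (1.0 + math.cos(math.pi * progress))


if __name__ == "__main__":
    for s in (0, 5, 9, 10, 55, 100):
        print(s, warmup_cosine_lr(s, total_steps=100, warmup_steps=10, peak_lr=1.0))
```

With a manual schedule of this form, `warmup_steps` has to be searched per setup, which is the tuning cost the abstract says the adaptive method avoids.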

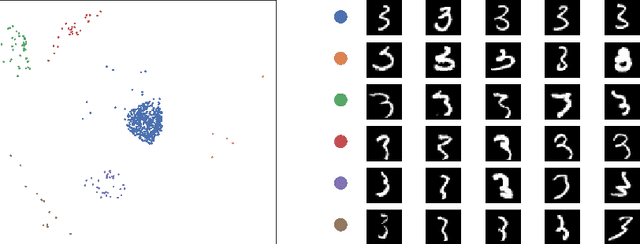

Simplex Deep Linear Discriminant Analysis

Jan 04, 2026

Abstract: We revisit Deep Linear Discriminant Analysis (Deep LDA) from a likelihood-based perspective. While classical LDA is a simple Gaussian model with linear decision boundaries, attaching an LDA head to a neural encoder raises the question of how to train the resulting deep classifier by maximum likelihood estimation (MLE). We first show that end-to-end MLE training of an unconstrained Deep LDA model ignores discrimination: when both the LDA parameters and the encoder parameters are learned jointly, the likelihood admits a degenerate solution in which some of the class clusters may heavily overlap or even collapse, and classification performance deteriorates. Batchwise moment re-estimation of the LDA parameters does not remove this failure mode. We then propose a constrained Deep LDA formulation that fixes the class means to the vertices of a regular simplex in the latent space and restricts the shared covariance to be spherical, leaving only the priors and a single variance parameter to be learned along with the encoder. Under these geometric constraints, MLE becomes stable and yields well-separated class clusters in the latent space. On images (Fashion-MNIST, CIFAR-10, CIFAR-100), the resulting Deep LDA models achieve accuracy competitive with softmax baselines while offering a simple, interpretable latent geometry that is clearly visible in two-dimensional projections.
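The geometric constraint described in the abstract can be illustrated with a minimal sketch. The abstract does not give the paper's exact construction, scale, or latent dimension, so the following assumes one standard choice: centre the standard basis vectors and rescale them to unit norm. The function names `simplex_vertices` and `lda_log_posterior` are hypothetical. With a shared spherical covariance, the Gaussian normalising constant is the same for every class and cancels, so the class log-posterior depends only on the log-prior and the squared distance to each fixed mean.

```python
import math


def simplex_vertices(num_classes):
    """Return num_classes unit vectors in R^num_classes forming a regular simplex.

    Assumed construction: centred standard basis vectors, rescaled to norm 1.
    """
    k = num_classes
    if k < 2:
        raise ValueError("need at least two classes")
    # e_i minus the centroid has squared norm 1 - 1/k, so divide by its root.
    scale = 1.0 / math.sqrt(1.0 - 1.0 / k)
    return [
        [scale * ((1.0 if i == j else 0.0) - 1.0 / k) for j in range(k)]
        for i in range(k)
    ]


def lda_log_posterior(z, means, priors, variance):
    """Class log-posteriors for latent point z under N(mean_k, variance * I)."""
    scores = [
        math.log(p) - sum((a - b) ** 2 for a, b in zip(z, m)) / (2.0 * variance)
        for m, p in zip(means, priors)
    ]
    # Normalise with a numerically stable log-sum-exp.
    top = max(scores)
    log_norm = top + math.log(sum(math.exp(s - top) for s in scores))
    return [s - log_norm for s in scores]


if __name__ == "__main__":
    means = simplex_vertices(3)
    print(lda_log_posterior(means[0], means, [1 / 3] * 3, variance=0.5))
```

In this sketch only `priors` and `variance` would be trainable alongside the encoder, matching the abstract's description of what remains to be learned once the means are fixed.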

Deep Linear Discriminant Analysis Revisited

Jan 04, 2026

Abstract: We show that for unconstrained Deep Linear Discriminant Analysis (LDA) classifiers, maximum-likelihood training admits pathological solutions in which class means drift together, covariances collapse, and the learned representation becomes almost non-discriminative. Conversely, cross-entropy training yields excellent accuracy but decouples the head from the underlying generative model, leading to highly inconsistent parameter estimates. To reconcile generative structure with discriminative performance, we introduce the *Discriminative Negative Log-Likelihood* (DNLL) loss, which augments the LDA log-likelihood with a simple penalty on the mixture density. DNLL can be interpreted as standard LDA NLL plus a term that explicitly discourages regions where several classes are simultaneously likely. Deep LDA trained with DNLL produces clean, well-separated latent spaces, matches the test accuracy of softmax classifiers on synthetic data and standard image benchmarks, and yields substantially better calibrated predictive probabilities, restoring a coherent probabilistic interpretation to deep discriminant models.

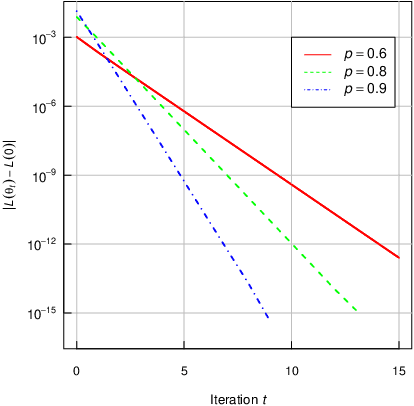

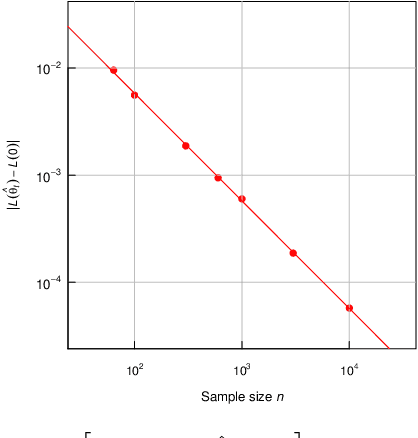

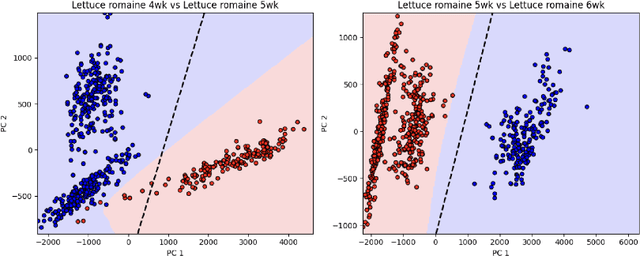

Overspecified Mixture Discriminant Analysis: Exponential Convergence, Statistical Guarantees, and Remote Sensing Applications

Oct 30, 2025

Abstract: This study explores the classification error of Mixture Discriminant Analysis (MDA) in scenarios where the number of mixture components exceeds the number present in the actual data distribution, a condition known as overspecification. We use a two-component Gaussian mixture model within each class to fit data generated from a single Gaussian, analyzing both the algorithmic convergence of the Expectation-Maximization (EM) algorithm and the statistical classification error. We demonstrate that, with suitable initialization, the EM algorithm converges exponentially fast to the Bayes risk at the population level. Further, we extend our results to finite samples, showing that the classification error converges to the Bayes risk at a rate of $n^{-1/2}$ under mild conditions on the initial parameter estimates and sample size. This work provides a rigorous theoretical framework for understanding the performance of overspecified MDA, which is often used empirically in complex data settings, such as image and text classification. To validate our theory, we conduct experiments on remote sensing datasets.

Gradient Descent Fails to Learn High-frequency Functions and Modular Arithmetic

Oct 19, 2023

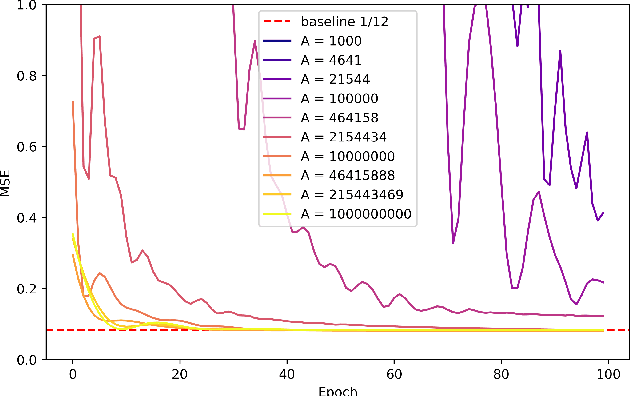

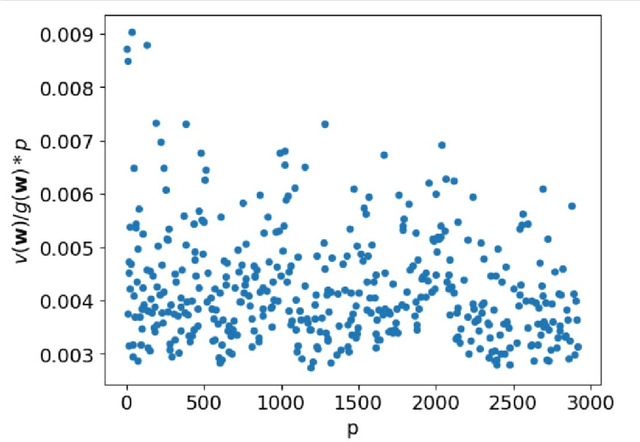

Abstract: Classes of target functions containing a large number of approximately orthogonal elements are known to be hard to learn by Statistical Query algorithms. Recently this classical fact re-emerged in the theory of gradient-based optimization of neural networks. In this newer framework, the hardness of a class is usually quantified by the variance of the gradient with respect to a random choice of a target function. The set of functions of the form $x\to ax \bmod p$, where $a$ is taken from ${\mathbb Z}_p$, has recently attracted some attention from deep learning theorists and cryptographers. This class can be understood as a subset of $p$-periodic functions on ${\mathbb Z}$ and is tightly connected with a class of high-frequency periodic functions on the real line. We present a mathematical analysis of limitations and challenges associated with using gradient-based learning techniques to learn a high-frequency periodic function or modular multiplication from examples. We highlight that the variance of the gradient is negligibly small in both cases when either the frequency or the prime base $p$ is large. This in turn prevents such a learning algorithm from being successful.
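The orthogonality phenomenon the abstract starts from can be checked numerically on a closely related family. The sketch below does not reproduce the paper's gradient-variance analysis. It assumes, for illustration only, the periodic functions $x \to e^{2\pi i a x / p}$ on ${\mathbb Z}_p$, which are exactly orthogonal for distinct frequencies $a$. The helper names `character` and `inner_product` are hypothetical.

```python
import cmath


def character(a, p):
    """Values of x -> exp(2*pi*i*a*x/p) for x = 0, ..., p - 1."""
    return [cmath.exp(2j * cmath.pi * ((a * x) % p) / p) for x in range(p)]


def inner_product(f, g):
    """Normalised Hermitian inner product of two functions on Z_p."""
    return sum(u * v.conjugate() for u, v in zip(f, g)) / len(f)


if __name__ == "__main__":
    p = 97
    f3, f5 = character(3, p), character(5, p)
    # Distinct frequencies: the inner product vanishes up to rounding error.
    print(abs(inner_product(f3, f5)))
    # Same frequency: the normalised inner product is 1.
    print(abs(inner_product(f3, f3)))
```

A class of size $p$ with pairwise orthogonal members is the kind of structure under which, per the abstract, a randomly chosen target leaves very little signal in the gradient.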

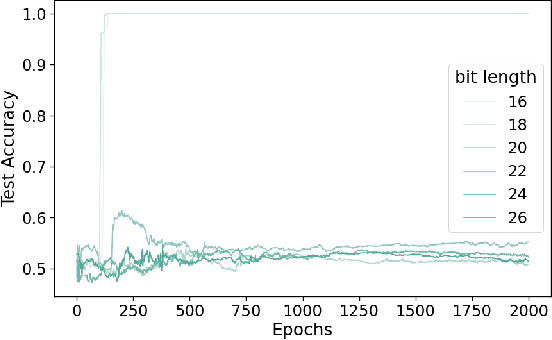

Intractability of Learning the Discrete Logarithm with Gradient-Based Methods

Oct 02, 2023

Abstract: The discrete logarithm problem is a fundamental challenge in number theory with significant implications for cryptographic protocols. In this paper, we investigate the limitations of gradient-based methods for learning the parity bit of the discrete logarithm in finite cyclic groups of prime order. Our main result, supported by theoretical analysis and empirical verification, reveals the concentration of the gradient of the loss function around a fixed point, independent of the logarithm's base used. This concentration property leads to a restricted ability to learn the parity bit efficiently using gradient-based methods, irrespective of the complexity of the network architecture being trained. Our proof relies on the Boas-Bellman inequality in inner product spaces and involves establishing approximate orthogonality of the discrete logarithm's parity bit functions through the spectral norm of certain matrices. Empirical experiments using a neural network-based approach further verify the limitations of gradient-based learning, demonstrating a decreasing success rate in predicting the parity bit as the group order increases.

Long-Tail Theory under Gaussian Mixtures

Jul 24, 2023

Abstract: We suggest a simple Gaussian mixture model for data generation that complies with Feldman's long tail theory (2020). We demonstrate that a linear classifier cannot decrease the generalization error below a certain level in the proposed model, whereas a nonlinear classifier with a memorization capacity can. This confirms that for long-tailed distributions, rare training examples must be considered for optimal generalization to new data. Finally, we show that the performance gap between linear and nonlinear models can be lessened as the tail becomes shorter in the subpopulation frequency distribution, as confirmed by experiments on synthetic and real data.

A Central Asian Food Dataset for Personalized Dietary Interventions, Extended Abstract

May 12, 2023

Abstract: Nowadays, it is common for people to take photographs of every beverage, snack, or meal they eat and then post these photographs on social media platforms. Leveraging these social trends, real-time food recognition and reliable classification of these captured food images can potentially help replace some of the tedious recording and coding of food diaries to enable personalized dietary interventions. Although Central Asian cuisine is culturally and historically distinct, there has been little published data on the food and dietary habits of people in this region. To fill this gap, we aim to create a reliable dataset of regional foods that is easily accessible to both public consumers and researchers. To the best of our knowledge, this is the first work on creating a Central Asian Food Dataset (CAFD). The final dataset contains 42 food categories and over 16,000 images of national dishes unique to this region. We achieved a classification accuracy of 88.70% (42 classes) on the CAFD using the ResNet152 neural network model. The food recognition models trained on the CAFD demonstrate computer vision's effectiveness and high accuracy for dietary assessment.

Empirical Analysis of the AdaBoost's Error Bound

Feb 02, 2023

Abstract: Understanding the accuracy limits of machine learning algorithms is essential for data scientists to properly measure performance so they can continually improve their models' predictive capabilities. This study empirically verifies the error bound of the AdaBoost algorithm for both synthetic and real-world data. The results show that the error bound holds up in practice, demonstrating its efficiency and importance to a variety of applications. The corresponding source code is available at https://github.com/armanbolatov/adaboost_error_bound.
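The abstract does not say which AdaBoost bound is checked. As an assumption, the sketch below uses the classical training-error bound: if round $t$ has weighted error $\epsilon_t$ and edge $\gamma_t = 1/2 - \epsilon_t$, the training error of the combined classifier is at most $\prod_t 2\sqrt{\epsilon_t(1-\epsilon_t)} \le \exp(-2\sum_t \gamma_t^2)$. The function name `adaboost_training_error_bounds` is hypothetical and is not taken from the linked repository.

```python
import math


def adaboost_training_error_bounds(weighted_errors):
    """Return (product bound, exponential bound) from per-round weighted errors.

    Assumes the classical training-error bound; each error must lie in [0, 1].
    """
    product_bound = 1.0
    edge_sq_sum = 0.0
    for eps in weighted_errors:
        if not 0.0 <= eps <= 1.0:
            raise ValueError("weighted errors must lie in [0, 1]")
        # Per-round normaliser Z_t = 2 * sqrt(eps_t * (1 - eps_t)).
        product_bound *= 2.0 * math.sqrt(eps * (1.0 - eps))
        # Edge over random guessing, gamma_t = 1/2 - eps_t.
        edge_sq_sum += (0.5 - eps) ** 2
    return product_bound, math.exp(-2.0 * edge_sq_sum)


if __name__ == "__main__":
    tight, loose = adaboost_training_error_bounds([0.30, 0.40, 0.45])
    print(tight, loose)
```

An empirical check in this spirit would record each round's weighted error during training and compare the measured training error against the first returned value.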
