Arindam Paul

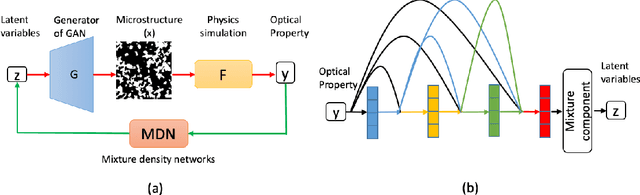

A General Framework Combining Generative Adversarial Networks and Mixture Density Networks for Inverse Modeling in Microstructural Materials Design

Jan 26, 2021

Abstract: Microstructural materials design is one of the most important applications of inverse modeling in materials science. Generally speaking, there are two broad modeling paradigms in scientific applications: forward and inverse. Forward modeling estimates the observations from known parameters, whereas inverse modeling attempts to infer the parameters from the observations. Inverse problems are usually both more critical and more difficult in scientific applications, because they seek parameters that cannot be directly observed. They arise extensively in scientific fields such as geophysics, healthcare, and materials science. However, inverse problems are challenging to solve: they usually require learning a one-to-many non-linear mapping, and they demand significant computing time, especially for a high-dimensional parameter space. They become even more difficult when the dimension of the input (i.e., the observation) is much lower than that of the output (i.e., the parameters). In this work, we propose a framework consisting of generative adversarial networks and mixture density networks for inverse modeling, and we evaluate it on a materials science dataset for microstructural materials design. Compared with baseline methods, the results demonstrate that the proposed framework can overcome the above-mentioned challenges and efficiently produce multiple promising solutions.
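The one-to-many nature of an inverse problem can be illustrated with a small sketch. This is not the paper's model: the forward function `forward`, the helper `inverse_mixture` (a hand-written stand-in for what a trained mixture density network might output), and all parameter values are assumptions chosen only for illustration.

```python
import math
import random

def forward(x):
    """Toy forward model (an assumption): parameter x -> observation y."""
    return x * x

def inverse_mixture(y, sigma=0.05):
    """Hypothetical stand-in for a trained mixture density network.

    Returns (weights, means, std devs) of a Gaussian mixture over the
    parameter x given the observation y. Two components are needed because
    both +sqrt(y) and -sqrt(y) reproduce the same observation.
    """
    root = math.sqrt(y)
    return [0.5, 0.5], [-root, root], [sigma, sigma]

def sample_parameters(y, n, rng):
    """Draw n candidate parameters from the mixture for observation y."""
    weights, means, sigmas = inverse_mixture(y)
    samples = []
    for _ in range(n):
        # Pick a mixture component, then sample from its Gaussian.
        k = rng.choices(range(len(weights)), weights=weights)[0]
        samples.append(rng.gauss(means[k], sigmas[k]))
    return samples

rng = random.Random(0)
candidates = sample_parameters(4.0, 200, rng)
```

A single-valued regressor trained on such data would tend to average the two branches and predict a parameter near zero, which does not reproduce the observation; a mixture keeps both branches as separate candidate solutions.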

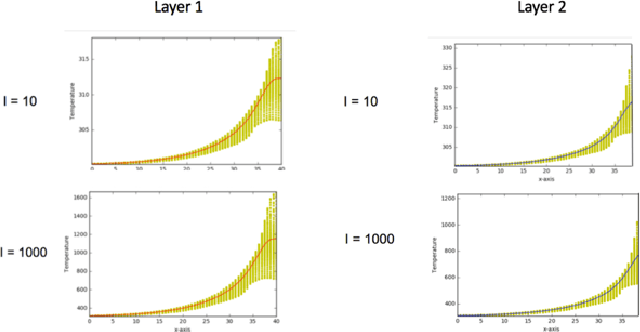

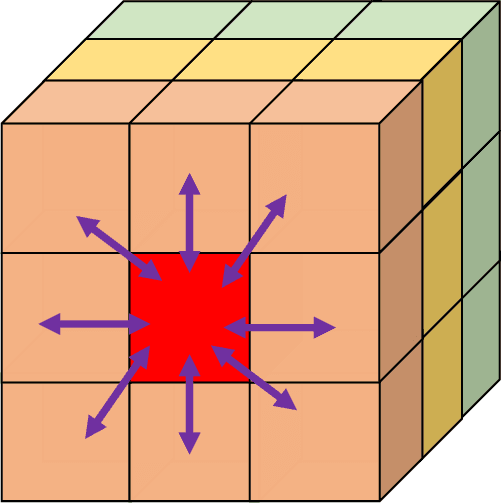

A real-time iterative machine learning approach for temperature profile prediction in additive manufacturing processes

Aug 09, 2019

Abstract: Additive Manufacturing (AM) is a manufacturing paradigm that builds three-dimensional objects from a computer-aided design model by successively adding material layer by layer. AM has become very popular in the past decade due to its utility for fast prototyping, such as 3D printing, as well as for manufacturing functional parts with complex geometries, using processes such as laser metal deposition, that would be difficult to create using traditional machining. Because creating an intricate part from an expensive metal such as titanium is cost-prohibitive, computational models are used to simulate the behavior of AM processes before the experimental run. However, as the simulations are computationally costly and time-consuming for predicting multiscale multi-physics phenomena in AM, physics-informed data-driven machine-learning systems for predicting the behavior of AM processes are immensely beneficial. Such models not only accelerate multiscale simulation tools but also empower real-time control systems using in-situ data. In this paper, we design and develop essential components of a scientific framework for developing a data-driven model-based real-time control system. Finite element methods are employed for solving time-dependent heat equations and developing the database. The proposed framework uses extremely randomized trees, an ensemble of bagged decision trees, as the regression algorithm, iteratively using temperatures of prior voxels and laser information as inputs to predict temperatures of subsequent voxels. The models achieve mean absolute percentage errors below 1% for predicting temperature profiles for AM processes. The code is made available for the research community at https://github.com/paularindam/ml-iter-additive.
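The iterative scheme described above, in which each predicted temperature becomes an input for the next voxel, can be sketched roughly as follows. This is a minimal illustration and not the code in the linked repository: `rollout`, `toy_model`, the window size, and the single laser feature are all hypothetical, and `toy_model` is a placeholder for a trained regressor rather than extremely randomized trees.

```python
from collections import deque

def rollout(model, seed_temps, laser_features, window=3):
    """Predict one temperature per entry of laser_features.

    Each prediction is appended to the history, so later predictions
    depend on earlier ones (iterative / autoregressive prediction).
    """
    history = deque(seed_temps[-window:], maxlen=window)
    predictions = []
    for laser in laser_features:
        # Inputs: temperatures of prior voxels followed by laser information.
        features = list(history) + list(laser)
        temp = model(features)
        predictions.append(temp)
        history.append(temp)  # oldest temperature drops out of the window
    return predictions

def toy_model(features):
    """Placeholder regressor (an assumption): mean of the prior
    temperatures plus a term proportional to laser power."""
    *prior, power = features
    return sum(prior) / len(prior) + 10.0 * power

def mape(actual, predicted):
    """Mean absolute percentage error, in percent."""
    return 100.0 * sum(abs((a - p) / a) for a, p in zip(actual, predicted)) / len(actual)

# Three known temperatures, then three voxels with laser power 1, 1, 0.
profile = rollout(toy_model, [300.0, 300.0, 300.0], [(1.0,), (1.0,), (0.0,)])
```

In practice `toy_model` would be replaced by the `predict` call of a fitted regressor, and `mape` would compare the rolled-out profile against simulated temperatures.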

* 10 pages, 8 figures

Transfer Learning Using Ensemble Neural Networks for Organic Solar Cell Screening

Mar 30, 2019

Abstract: Organic Solar Cells are a promising technology for solving the clean energy crisis in the world. However, generating candidate chemical compounds for solar cells is a time-consuming process requiring thousands of hours of laboratory analysis. For a solar cell, the most important property is the power conversion efficiency, which depends on the highest occupied molecular orbital (HOMO) values of the donor molecules. Recently, machine learning techniques have proved to be very useful in building predictive models for HOMO values of donor structures of Organic Photovoltaic Cells (OPVs). Since experimental datasets are limited in size, current machine learning models are trained on data derived from calculations based on density functional theory (DFT). Molecular line notations such as SMILES or InChI are popular input representations for describing the molecular structure of donor molecules. The two types of line representations encode different information: for example, SMILES defines the bond types while InChI defines protonation. In this work, we present an ensemble deep neural network architecture, called SINet, which harnesses both the SMILES and InChI molecular representations to predict HOMO values and leverages the potential of transfer learning from a sizeable DFT-computed dataset, Harvard CEP, to build more robust predictive models for the relatively smaller HOPV datasets. The Harvard CEP dataset contains molecular structures and properties for 2.3 million candidate donor structures for OPV, while HOPV contains DFT-computed and experimental values of 350 and 243 molecules, respectively. Our results demonstrate significant performance improvement from the use of transfer learning and from leveraging both molecular representations.

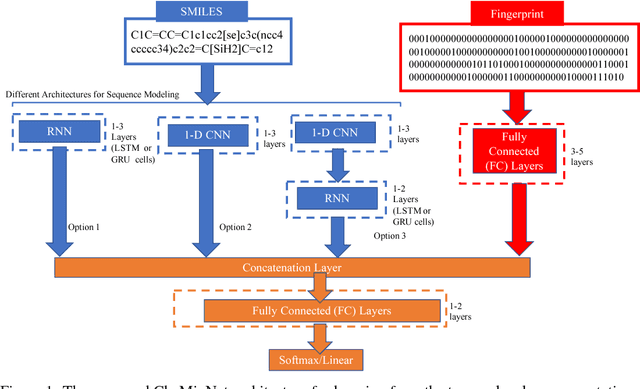

CheMixNet: Mixed DNN Architectures for Predicting Chemical Properties using Multiple Molecular Representations

Nov 30, 2018

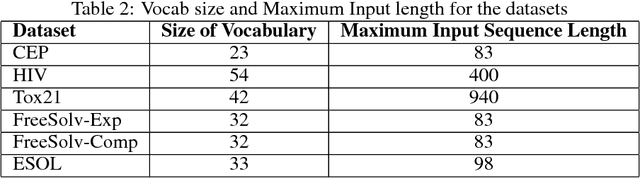

Abstract: SMILES is a linear representation of chemical structures which encodes the connection table and the stereochemistry of a molecule as a line of text with a grammar structure denoting atoms, bonds, rings and chains, and this information can be used to predict chemical properties. Molecular fingerprints are representations of chemical structures, successfully used in similarity search, clustering, classification, drug discovery, and virtual screening, and are a standard and computationally efficient abstract representation where structural features are represented as a bit string. SMILES and molecular fingerprints are two different representations for describing the structure of a molecule. There exist several predictive models for learning chemical properties based on either SMILES or molecular fingerprints. Here, our goal is to build predictive models that can leverage both these molecular representations. In this work, we present CheMixNet -- a set of neural networks for predicting chemical properties from a mixture of features learned from the two molecular representations -- SMILES as sequences and molecular fingerprints as vector inputs. We demonstrate the efficacy of CheMixNet architectures by evaluating on six different datasets. The proposed CheMixNet models not only outperform the candidate neural architectures, such as contemporary fully connected networks that use molecular fingerprints and 1-D CNN and RNN models trained on SMILES sequences, but also other state-of-the-art architectures such as Chemception and Molecular Graph Convolutions.
