Aravind K. Joshi

University of Pennsylvania

Anchoring a Lexicalized Tree-Adjoining Grammar for Discourse

Jun 24, 1998

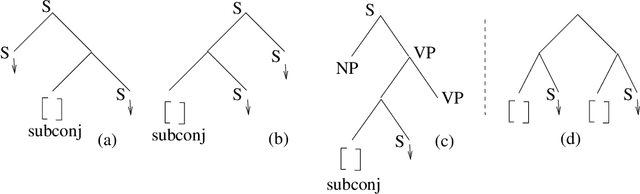

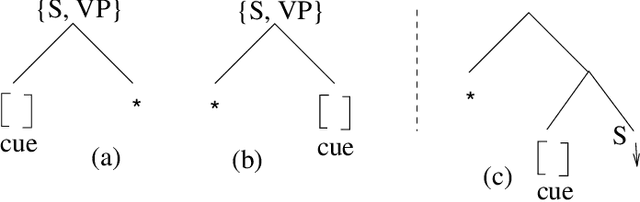

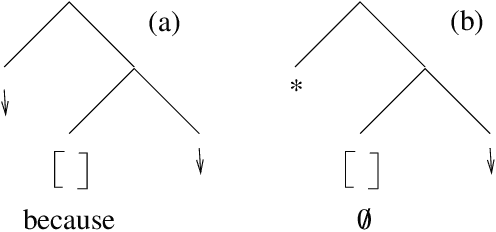

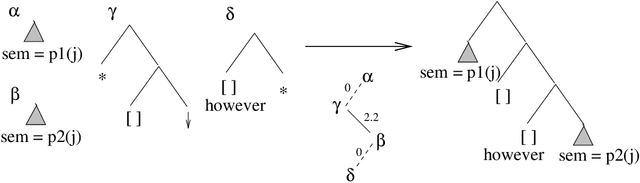

Abstract:We here explore a ``fully'' lexicalized Tree-Adjoining Grammar for discourse that takes the basic elements of a (monologic) discourse to be not simply clauses, but larger structures that are anchored on variously realized discourse cues. This link with intra-sentential grammar suggests an account for different patterns of discourse cues, while the different structures and operations suggest three separate sources for elements of discourse meaning: (1) a compositional semantics tied to the basic trees and operations; (2) a presuppositional semantics carried by cue phrases that freely adjoin to trees; and (3) general inference, that draws additional, defeasible conclusions that flesh out what is conveyed compositionally.

* 7 pages, uses aclcol.sty

A Processing Model for Free Word Order Languages

Apr 15, 1995Abstract:Like many verb-final languages, Germn displays considerable word-order freedom: there is no syntactic constraint on the ordering of the nominal arguments of a verb, as long as the verb remains in final position. This effect is referred to as ``scrambling'', and is interpreted in transformational frameworks as leftward movement of the arguments. Furthermore, arguments from an embedded clause may move out of their clause; this effect is referred to as ``long-distance scrambling''. While scrambling has recently received considerable attention in the syntactic literature, the status of long-distance scrambling has only rarely been addressed. The reason for this is the problematic status of the data: not only is long-distance scrambling highly dependent on pragmatic context, it also is strongly subject to degradation due to processing constraints. As in the case of center-embedding, it is not immediately clear whether to assume that observed unacceptability of highly complex sentences is due to grammatical restrictions, or whether we should assume that the competence grammar does not place any restrictions on scrambling (and that, therefore, all such sentences are in fact grammatical), and the unacceptability of some (or most) of the grammatically possible word orders is due to processing limitations. In this paper, we will argue for the second view by presenting a processing model for German.

Disambiguation of Super Parts of Speech (or Supertags): Almost Parsing

Oct 26, 1994

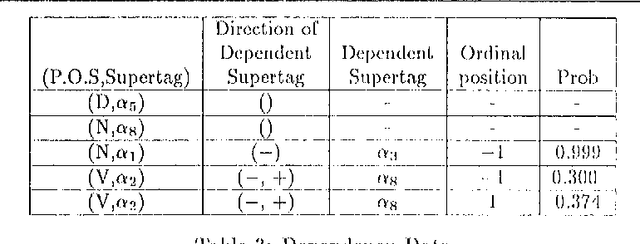

Abstract:In a lexicalized grammar formalism such as Lexicalized Tree-Adjoining Grammar (LTAG), each lexical item is associated with at least one elementary structure (supertag) that localizes syntactic and semantic dependencies. Thus a parser for a lexicalized grammar must search a large set of supertags to choose the right ones to combine for the parse of the sentence. We present techniques for disambiguating supertags using local information such as lexical preference and local lexical dependencies. The similarity between LTAG and Dependency grammars is exploited in the dependency model of supertag disambiguation. The performance results for various models of supertag disambiguation such as unigram, trigram and dependency-based models are presented.

* ps file. 8 pages

Korean to English Translation Using Synchronous TAGs

Oct 24, 1994

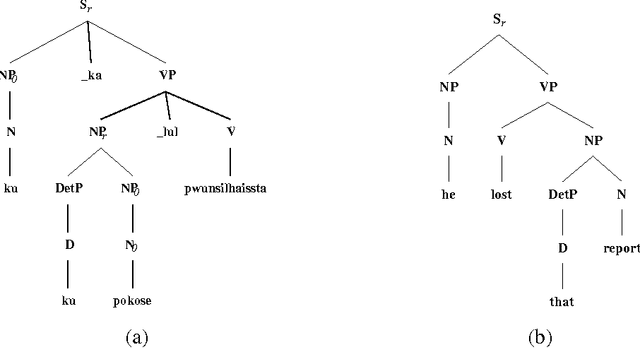

Abstract:It is often argued that accurate machine translation requires reference to contextual knowledge for the correct treatment of linguistic phenomena such as dropped arguments and accurate lexical selection. One of the historical arguments in favor of the interlingua approach has been that, since it revolves around a deep semantic representation, it is better able to handle the types of linguistic phenomena that are seen as requiring a knowledge-based approach. In this paper we present an alternative approach, exemplified by a prototype system for machine translation of English and Korean which is implemented in Synchronous TAGs. This approach is essentially transfer based, and uses semantic feature unification for accurate lexical selection of polysemous verbs. The same semantic features, when combined with a discourse model which stores previously mentioned entities, can also be used for the recovery of topicalized arguments. In this paper we concentrate on the translation of Korean to English.

* ps file. 8 pages

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge