Anurag Katakkar

Rethinking Distance Metrics for Counterfactual Explainability

Oct 18, 2024

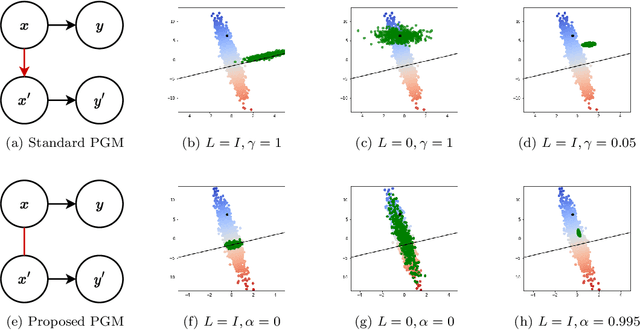

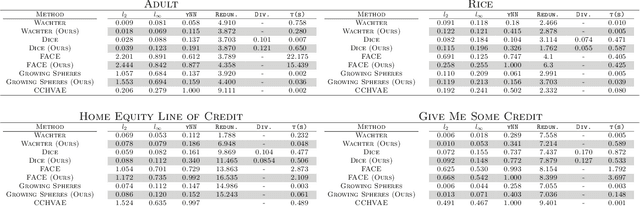

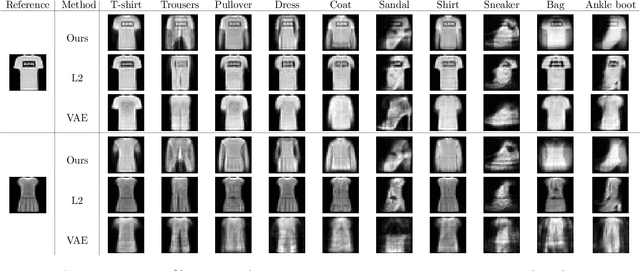

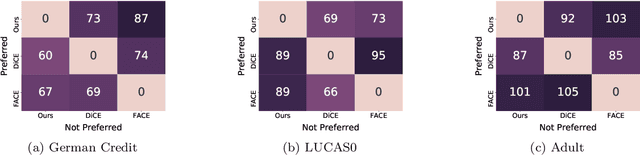

Abstract: Counterfactual explanations have been a popular method of post-hoc explainability for a variety of settings in Machine Learning. Such methods focus on explaining classifiers by generating new data points that are similar to a given reference, while receiving a more desirable prediction. In this work, we investigate a framing for counterfactual generation methods that considers counterfactuals not as independent draws from a region around the reference, but as jointly sampled with the reference from the underlying data distribution. Through this framing, we derive a distance metric tailored for counterfactual similarity that can be applied to a broad range of settings. Through both quantitative and qualitative analyses of counterfactual generation methods, we show that this framing allows us to express more nuanced dependencies among the covariates.

Towards Language Modelling in the Speech Domain Using Sub-word Linguistic Units

Oct 31, 2021

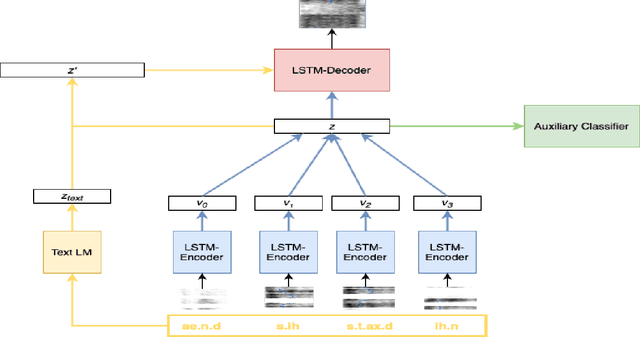

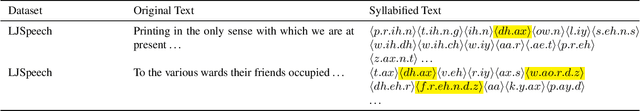

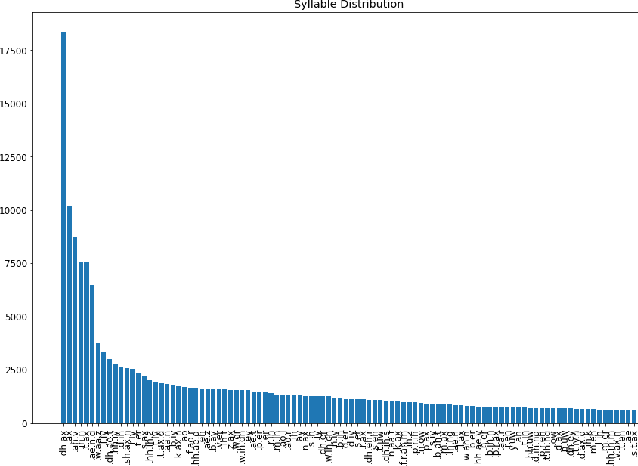

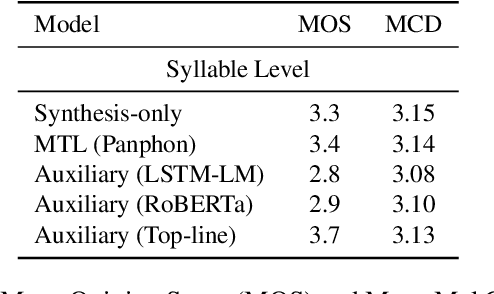

Abstract: Language models (LMs) for text data have been studied extensively for their usefulness in language generation and other downstream tasks. However, language modelling purely in the speech domain is still a relatively unexplored topic, with traditional speech LMs often depending on auxiliary text LMs for learning distributional aspects of the language. For the English language, these LMs treat words as atomic units, which presents inherent challenges to language modelling in the speech domain. In this paper, we propose a novel LSTM-based generative speech LM that is inspired by the CBOW model and built on linguistic units including syllables and phonemes. This offers better acoustic consistency across utterances in the dataset, as opposed to single mel-spectrogram frames or whole words. With a limited dataset, orders of magnitude smaller than that required by contemporary generative models, our model closely approximates babbling speech. We show the effect of training with auxiliary text LMs, multitask learning objectives, and auxiliary articulatory features. Through our experiments, we also highlight some well-known but poorly documented challenges in training generative speech LMs, including the mismatch between the supervised learning objective with which these models are trained, such as Mean Squared Error (MSE), and the true objective, which is speech quality. Our experiments provide an early indication that while validation loss and Mel Cepstral Distortion (MCD) are not strongly correlated with generated speech quality, traditional text language modelling metrics like perplexity and next-token-prediction accuracy might be.

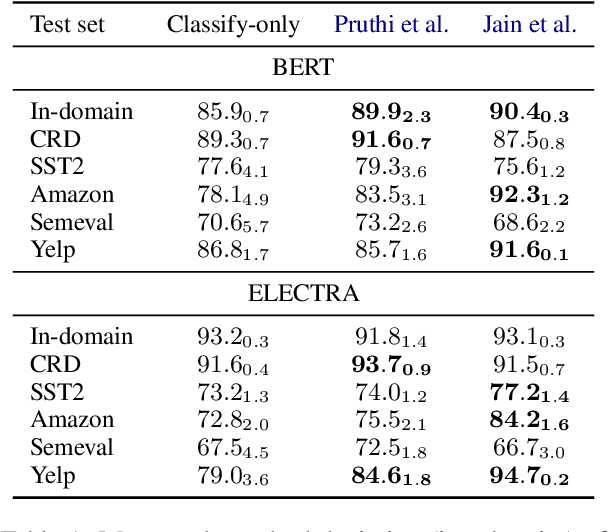

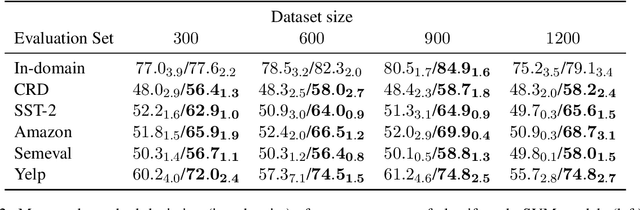

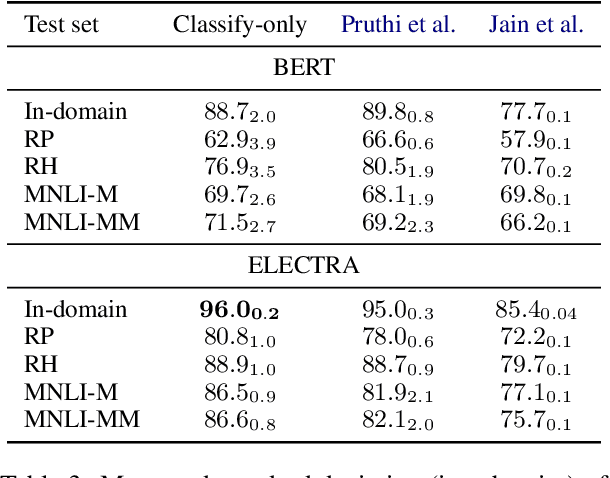

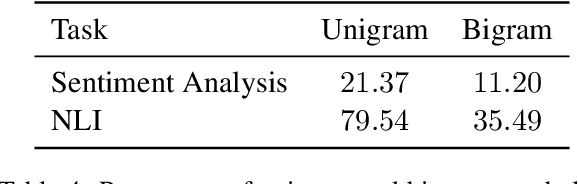

Practical Benefits of Feature Feedback Under Distribution Shift

Oct 14, 2021

Abstract: In attempts to develop sample-efficient algorithms, researchers have explored myriad mechanisms for collecting and exploiting feature feedback, auxiliary annotations provided for training (but not test) instances that highlight salient evidence. Examples include bounding boxes around objects and salient spans in text. Despite its intuitive appeal, feature feedback has not delivered significant gains in practical problems as assessed on iid holdout sets. However, recent works on counterfactually augmented data suggest an alternative benefit of supplemental annotations: lessening sensitivity to spurious patterns and consequently delivering gains in out-of-domain evaluations. Inspired by these findings, we hypothesize that while the numerous existing methods for incorporating feature feedback have delivered negligible in-sample gains, they may nevertheless generalize better out-of-domain. In experiments addressing sentiment analysis, we show that feature feedback methods perform significantly better on various natural out-of-domain datasets even absent differences on in-domain evaluation. By contrast, on natural language inference tasks, performance remains comparable. Finally, we compare those tasks where feature feedback does (and does not) help.
