Antti Kangasrääsiö

ELFI: Engine for Likelihood-Free Inference

Jul 05, 2018

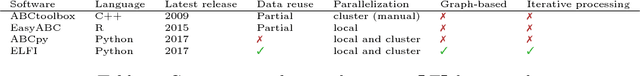

Abstract: Engine for Likelihood-Free Inference (ELFI) is a Python software library for performing likelihood-free inference (LFI). ELFI provides a convenient syntax for arranging the components of LFI, such as priors, simulators, summaries, and distances, into a network called the ELFI graph. The components can be implemented in a wide variety of languages. The stand-alone ELFI graph can be used with any of the available inference methods without modification. A central method implemented in ELFI is Bayesian Optimization for Likelihood-Free Inference (BOLFI), which has recently been shown to accelerate likelihood-free inference by up to several orders of magnitude by surrogate-modelling the distance. ELFI also has built-in support for storing output data for reuse and analysis, and supports parallelization of computation from multiple cores up to a cluster environment. ELFI is designed to be extensible and provides interfaces for widening its functionality. This makes adding new inference methods to ELFI straightforward, and new methods are automatically compatible with the built-in features.
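To make the idea of arranging priors, simulators, summaries, and distances into a graph concrete, here is a minimal sketch in plain Python. It does not use ELFI's own API: the `evaluate` helper, the node names, the toy Gaussian simulator, and the observed values are all assumptions made for illustration only.

```python
import random
import statistics


def evaluate(graph, target, rng, cache=None):
    """Evaluate `target` after recursively evaluating its parent nodes.

    `graph` maps a node name to (function, list of parent names).
    Results are cached so each node is computed once per evaluation.
    """
    cache = {} if cache is None else cache
    if target not in cache:
        func, parents = graph[target]
        inputs = [evaluate(graph, p, rng, cache) for p in parents]
        cache[target] = func(rng, *inputs)
    return cache[target]


# Hypothetical observed data for the toy model.
observed = [1.9, 2.1, 2.0, 1.8, 2.2]

# Hypothetical graph: prior -> simulator -> summary -> distance.
graph = {
    "mu": (lambda rng: rng.uniform(0.0, 4.0), []),
    "sim": (lambda rng, mu: [rng.gauss(mu, 1.0) for _ in range(5)], ["mu"]),
    "summary": (lambda rng, data: statistics.mean(data), ["sim"]),
    "distance": (
        lambda rng, s: abs(s - statistics.mean(observed)),
        ["summary"],
    ),
}

rng = random.Random(0)
d = evaluate(graph, "distance", rng)
print(f"distance for one prior draw: {d:.3f}")
```

Because the graph is only a description of how nodes depend on each other, any inference routine that can call `evaluate(graph, "distance", ...)` repeatedly could use it unchanged, which is the property the abstract attributes to the ELFI graph.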

Inverse Reinforcement Learning from Summary Data

Jun 17, 2018

Abstract: Inverse reinforcement learning (IRL) aims to explain observed strategic behavior by fitting reinforcement learning models to behavioral data. However, traditional IRL methods are only applicable when the observations are in the form of state-action paths. This assumption may not hold in many real-world modeling settings, where only partial or summarized observations are available. In general, we may assume that there is a summarizing function $\sigma$, which acts as a filter between us and the true state-action paths that constitute the demonstration. Some initial approaches to extending IRL to such situations have been presented, but with very specific assumptions about the structure of $\sigma$, such as that only certain state observations are missing. This paper instead focuses on the most general case of the problem, where no assumptions are made about the summarizing function, except that it can be evaluated. We demonstrate that inference is still possible. The paper presents exact and approximate inference algorithms that allow full posterior inference, which is particularly important for assessing parameter uncertainty in this challenging inference situation. Empirical scalability is demonstrated on reasonably sized problems, and practical applicability is demonstrated by estimating the posterior for a cognitive science RL model based only on an observed user's task completion time.

Inferring Cognitive Models from Data using Approximate Bayesian Computation

Jan 13, 2017

Abstract: An important problem for HCI researchers is to estimate the parameter values of a cognitive model from behavioral data. This is a difficult problem because of the substantial complexity and variety in human behavioral strategies. We report an investigation into a new approach using approximate Bayesian computation (ABC) to condition model parameters on data and prior knowledge. As a case study we examine menu interaction, where only click time data are available for inferring a cognitive model that implements a search behaviour with parameters such as fixation duration and recall probability. Our results demonstrate that ABC (i) improves estimates of model parameter values, (ii) enables meaningful comparisons between model variants, and (iii) supports fitting models to individual users. ABC provides ample opportunities for theoretical HCI research by allowing principled inference of model parameter values and their uncertainty.
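As a rough illustration of how ABC conditions parameters on data without a likelihood, the sketch below runs basic rejection ABC on a toy model. It is not the paper's method or its menu-search model: the exponential "click time" simulator, the uniform prior bounds, the mean summary statistic, and the acceptance threshold are all assumptions chosen to keep the example small.

```python
import random
import statistics


def simulate(mean_time, n, rng):
    """Toy simulator: n click times drawn from an exponential distribution."""
    return [rng.expovariate(1.0 / mean_time) for _ in range(n)]


def abc_rejection(observed, n_draws, threshold, rng):
    """Keep prior draws whose simulated summary is close to the observed one."""
    obs_summary = statistics.mean(observed)
    accepted = []
    for _ in range(n_draws):
        theta = rng.uniform(0.1, 5.0)  # assumed prior over the mean time
        simulated = simulate(theta, len(observed), rng)
        if abs(statistics.mean(simulated) - obs_summary) <= threshold:
            accepted.append(theta)
    return accepted


rng = random.Random(42)
observed = simulate(2.0, 50, rng)  # synthetic "observed" data, true mean 2.0
posterior = abc_rejection(observed, n_draws=5000, threshold=0.1, rng=rng)

print(f"accepted {len(posterior)} of 5000 draws")
print(f"posterior mean estimate: {statistics.mean(posterior):.2f}")
```

The accepted draws approximate the posterior over the parameter, so their spread gives a direct view of parameter uncertainty. A smaller threshold tightens the approximation at the cost of fewer accepted samples.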
