Antoine Serrurier

FAIR4Cov: Fused Audio Instance and Representation for COVID-19 Detection

Apr 22, 2022

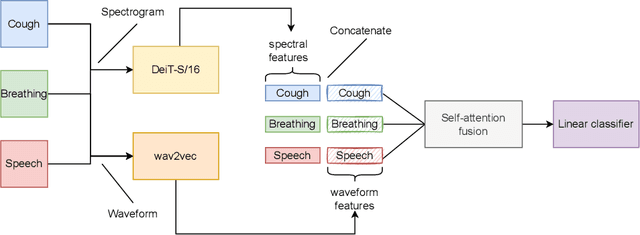

Abstract: Audio-based classification of body sounds has long been studied to support diagnostic decisions, particularly for pulmonary diseases. In response to the urgency of the COVID-19 pandemic, a growing number of models have been developed to identify COVID-19 patients from acoustic input. Most models focus on cough because dry cough is the best-known symptom of COVID-19. However, other body sounds, such as breath and speech, have also been shown to correlate with COVID-19. In this work, rather than relying on a specific body sound, we propose Fused Audio Instance and Representation for COVID-19 Detection (FAIR4Cov). It constructs a joint feature vector from multiple body sounds in both waveform and spectrogram representations. The core component of FAIR4Cov is a self-attention fusion unit that is trained to capture the relations among the body sounds and audio representations and to integrate them into a compact feature vector. We run experiments on different combinations of body sounds using waveform only, spectrogram only, and a joint representation of waveform and spectrogram. Our findings show that using self-attention to combine features extracted from cough, breath, and speech sounds yields the best performance, with an Area Under the Receiver Operating Characteristic Curve (AUC) of 0.8658, a sensitivity of 0.8057, and a specificity of 0.7958. This AUC is 0.0227 higher than that of the models trained on spectrograms only and 0.0847 higher than that of the models trained on waveforms only. The results demonstrate that combining spectrogram and waveform representations enriches the extracted features and outperforms models that use a single representation.
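The fusion idea in the abstract above can be illustrated with a minimal sketch. It assumes one feature vector per (body sound, representation) pair and applies plain scaled dot-product self-attention followed by mean pooling. The function names, the feature values, and the absence of learned projection weights are all assumptions made for illustration; this is not the FAIR4Cov architecture itself.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention_fuse(tokens):
    """Fuse equal-length feature vectors into one compact vector.

    Illustrative only: queries, keys and values are the tokens themselves
    (no trained projections), and the attended tokens are mean-pooled.
    """
    dim = len(tokens[0])
    scale = math.sqrt(dim)
    attended = []
    for query in tokens:
        # Similarity of this token to every token, including itself.
        scores = [sum(q * k for q, k in zip(query, key)) / scale for key in tokens]
        weights = softmax(scores)
        # Attention output: weighted sum of all tokens.
        attended.append(
            [sum(w * tok[j] for w, tok in zip(weights, tokens)) for j in range(dim)]
        )
    # Mean-pool the attended tokens into a single fused vector.
    return [sum(row[j] for row in attended) / len(attended) for j in range(dim)]

# Placeholder features (made-up numbers): 3 body sounds x 2 representations.
features = {
    ("cough", "waveform"): [0.2, 0.1, 0.7, 0.0],
    ("cough", "spectrogram"): [0.3, 0.0, 0.6, 0.1],
    ("breath", "waveform"): [0.1, 0.5, 0.2, 0.2],
    ("breath", "spectrogram"): [0.0, 0.6, 0.1, 0.3],
    ("speech", "waveform"): [0.4, 0.4, 0.1, 0.1],
    ("speech", "spectrogram"): [0.5, 0.3, 0.0, 0.2],
}
fused = self_attention_fuse(list(features.values()))
print(fused)
```

In a trained model the fused vector would feed a classifier, and the attention weights would come from learned projections rather than raw dot products.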

Automatic vocal tract landmark localization from midsagittal MRI data

Jul 18, 2019

Abstract: The various speech sounds of a language are obtained by varying the shape and position of the articulators surrounding the vocal tract. Analyzing their variability is crucial for understanding speech production, diagnosing speech and swallowing disorders, and building intuitive applications for rehabilitation. Magnetic Resonance Imaging (MRI) is currently the least harmful of the powerful imaging modalities used for this purpose. Identifying key anatomical landmarks in MRI images is a prerequisite for further analyses. This is a challenging task given the high inter- and intra-speaker variability and the mutual interaction between the articulators. This study aims to solve this problem automatically for the first time. For this purpose, midsagittal anatomical MRI images of 9 speakers sustaining 62 articulations, annotated with the locations of 21 key anatomical landmarks, are considered. Four state-of-the-art methods, including deep learning methods, are adapted from the literature on facial landmark localization and human pose estimation and evaluated. Furthermore, an approach is proposed in which each landmark location is described as a heat-map image stored in one channel of a single multi-channel image that embeds all landmarks. The generation of such a multi-channel image from an input MRI image is tested with two deep learning networks: one taken from the literature and one designed specifically for this study, the flat-net. Results show that the flat-net approach outperforms the other methods, with an overall Root Mean Square Error of 3.4 pixels (0.34 cm) obtained in a leave-one-out procedure over the speakers. All the code is publicly available on GitHub.
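The heat-map representation described in the abstract above can be sketched as follows: each landmark becomes one channel holding a Gaussian blob centred on its position, and a predicted position is read back as the location of the channel's maximum. The Gaussian shape, the `sigma` value, the image size, the example coordinates, and the RMSE definition (root of the mean squared Euclidean distance) are assumptions made for illustration. The 0.1 cm per pixel factor is simply the ratio of the two error values reported in the abstract.

```python
import math

def landmark_to_heatmap(x, y, width, height, sigma=2.0):
    """Encode one landmark as a height x width Gaussian heat-map (list of rows)."""
    return [
        [math.exp(-((c - x) ** 2 + (r - y) ** 2) / (2 * sigma ** 2)) for c in range(width)]
        for r in range(height)
    ]

def heatmap_to_landmark(heatmap):
    """Decode a heat-map back to (x, y) by taking the position of its maximum."""
    value, row, col = max(
        ((v, r, c) for r, line in enumerate(heatmap) for c, v in enumerate(line)),
        key=lambda item: item[0],
    )
    return col, row

def rmse(predicted, reference):
    """Root mean square of the Euclidean distances between paired points."""
    squared = [(px - rx) ** 2 + (py - ry) ** 2
               for (px, py), (rx, ry) in zip(predicted, reference)]
    return math.sqrt(sum(squared) / len(squared))

# Hypothetical landmark coordinates in a small 16 x 16 image.
landmarks = [(5, 7), (12, 3)]
channels = [landmark_to_heatmap(x, y, 16, 16) for x, y in landmarks]  # multi-channel image
decoded = [heatmap_to_landmark(channel) for channel in channels]

CM_PER_PIXEL = 0.1  # implied by the reported 3.4 pixels / 0.34 cm
error_px = rmse(decoded, landmarks)
print(decoded, error_px, error_px * CM_PER_PIXEL)
```

A network trained on this representation would predict the multi-channel image from the MRI slice, and the same decoding step would recover the landmark coordinates from its output.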
