Anna Esposito

Exploring Emotion Expression Recognition in Older Adults Interacting with a Virtual Coach

Nov 09, 2023

Abstract:The EMPATHIC project aimed to design an emotionally expressive virtual coach capable of engaging healthy seniors to improve well-being and promote independent aging. One of the core aspects of the system is its human sensing capabilities, allowing for the perception of emotional states to provide a personalized experience. This paper outlines the development of the emotion expression recognition module of the virtual coach, encompassing data collection, annotation design, and a first methodological approach, all tailored to the project requirements. With the latter, we investigate the role of various modalities, individually and combined, for discrete emotion expression recognition in this context: speech from audio, and facial expressions, gaze, and head dynamics from video. The collected corpus includes users from Spain, France, and Norway, and was annotated separately for the audio and video channels with distinct emotional labels, allowing for a performance comparison across cultures and label types. Results confirm the informative power of the modalities studied for the emotional categories considered, with multimodal methods generally outperforming others (around 68% accuracy with audio labels and 72-74% with video labels). The findings are expected to contribute to the limited literature on emotion recognition applied to older adults in conversational human-machine interaction.

The Relationship Between Speech Features Changes When You Get Depressed: Feature Correlations for Improving Speed and Performance of Depression Detection

Jul 07, 2023

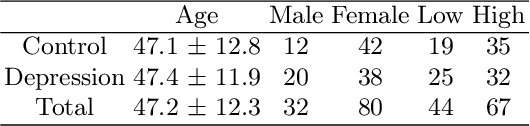

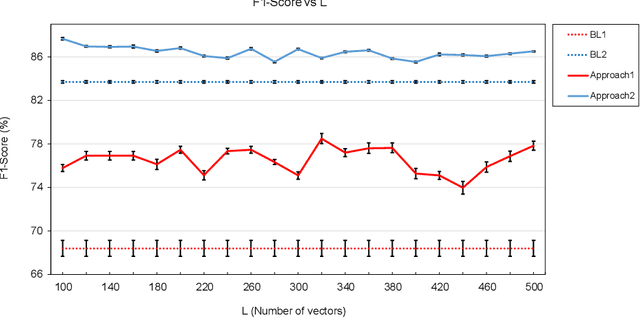

Abstract:This work shows that depression changes the correlation between features extracted from speech. Furthermore, it shows that using such an insight can improve the training speed and performance of depression detectors based on SVMs and LSTMs. The experiments were performed over the Androids Corpus, a publicly available dataset involving 112 speakers, including 58 people diagnosed with depression by professional psychiatrists. The results show that the models used in the experiments improve in terms of training speed and performance when fed with feature correlation matrices rather than with feature vectors. The relative reduction of the error rate ranges between 23.1% and 26.6% depending on the model. The probable explanation is that feature correlation matrices appear to be more variable in the case of depressed speakers. Correspondingly, such a phenomenon can be thought of as a depression marker.

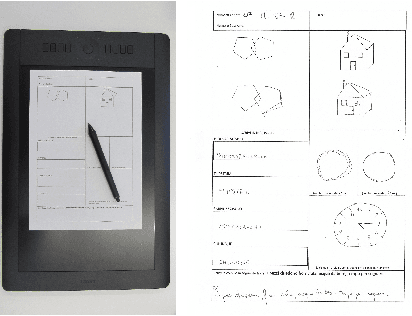
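The idea of feeding a classifier feature correlation matrices rather than feature vectors can be illustrated with a minimal sketch. It assumes that each recording is already represented as a list of per-window feature vectors; the function names `pearson` and `correlation_matrix` and the toy values are hypothetical and are not taken from the paper or from the Androids Corpus.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation between two equally long sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        # Assumption: a constant feature is treated as uncorrelated.
        return 0.0
    return cov / sqrt(var_x * var_y)


def correlation_matrix(frames):
    """Turn a list of feature vectors (one per window) into a D x D matrix."""
    columns = list(zip(*frames))  # one tuple of values per feature
    d = len(columns)
    return [[pearson(columns[i], columns[j]) for j in range(d)] for i in range(d)]


# Toy recording: four analysis windows, three features each (made-up values).
frames = [
    [1.0, 2.0, 5.0],
    [2.0, 4.0, 3.0],
    [3.0, 6.0, 4.0],
    [4.0, 8.0, 1.0],
]
matrix = correlation_matrix(frames)
```

In this sketch the matrix, not the individual vectors, would be the input passed to a downstream model; how the original experiments flatten or segment such matrices is not stated in the abstract.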

Handwriting and Drawing for Depression Detection: A Preliminary Study

Feb 05, 2023

Abstract:The events of the past 2 years related to the pandemic have shown that it is increasingly important to find new tools to help mental health experts in diagnosing mood disorders. Leaving aside the cognitive (e.g., difficulty in concentration) and bodily (e.g., loss of smell) effects of long COVID, the short-term effects of COVID on mental health were a significant increase in anxiety and depressive symptoms. The aim of this study is to use a new tool, online handwriting and drawing analysis, to discriminate between healthy individuals and depressed patients. To this aim, patients with clinical depression (n = 14), individuals with high sub-clinical (diagnosed by a test rather than a doctor) depressive traits (n = 15) and healthy individuals (n = 20) were recruited and asked to perform four online drawing/handwriting tasks using a digitizing tablet and a special writing device. From the raw online data collected, seventeen drawing/writing features (grouped into five categories) were extracted and compared among the three groups of participants through repeated-measures ANOVA. Results show that Time features are more effective in discriminating between healthy participants and those with sub-clinical depressive characteristics. On the other hand, Ductus and Pressure features are more effective in discriminating between clinically depressed and healthy participants.

A Naturalistic Database of Thermal Emotional Facial Expressions and Effects of Induced Emotions on Memory

Mar 29, 2022

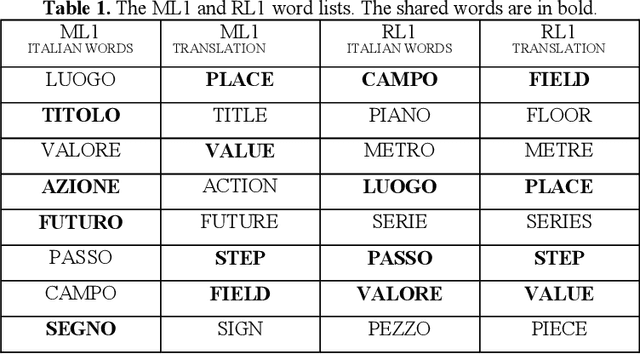

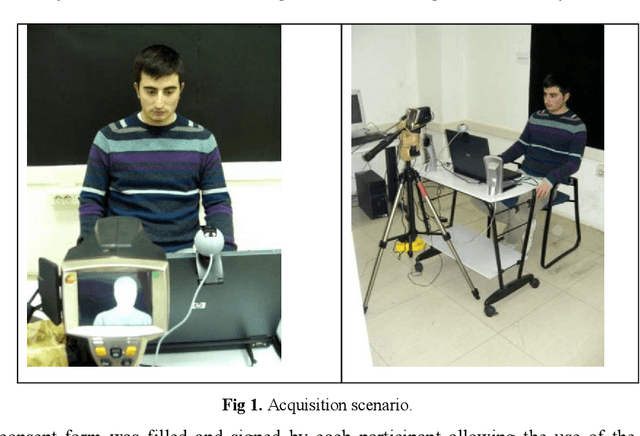

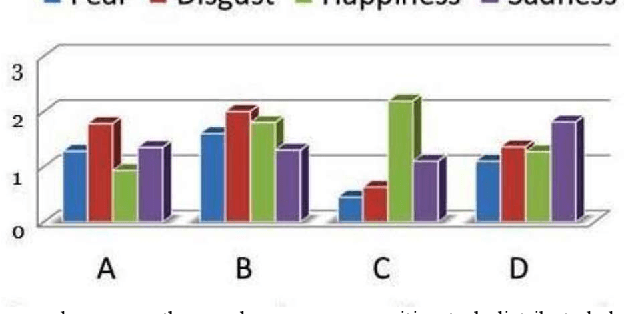

Abstract:This work defines a procedure for collecting naturally induced emotional facial expressions through the viewing of movie excerpts with high emotional content and reports experimental data ascertaining the effects of emotions on memory word recognition tasks. The induced emotional states include the four basic emotions of sadness, disgust, happiness, and surprise, as well as the neutral emotional state. The resulting database contains both thermal and visible emotional facial expressions, portrayed by forty Italian subjects and simultaneously acquired by appropriately synchronizing a thermal and a standard visible camera. Each subject's recording session lasted 45 minutes, which made it possible to collect, for each mode (thermal or visible), a minimum of 2000 facial expressions, from which a minimum of 400 were selected as highly expressive of each emotion category. The database is available to the scientific community and can be obtained by contacting one of the authors. For this pilot study, it was found that emotions and/or emotion categories do not affect individual performance on memory word recognition tasks, and that temperature changes in the face or in some regions of it do not discriminate among emotional states.

* 15 pages published in Esposito, A., Esposito, A.M., Vinciarelli, A., Hoffmann, R., Müller, V.C. (eds) Cognitive Behavioural Systems. Lecture Notes in Computer Science, vol 7403. Springer, Berlin, Heidelberg

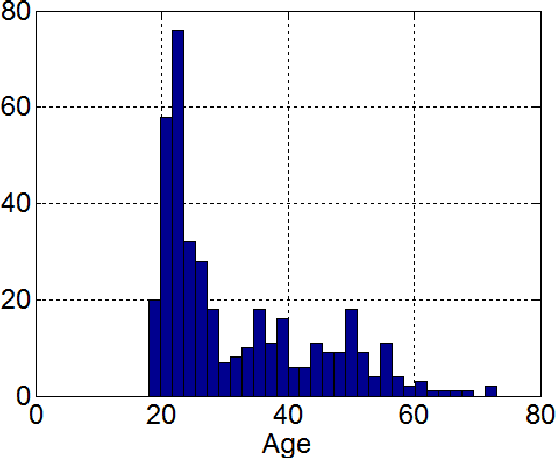

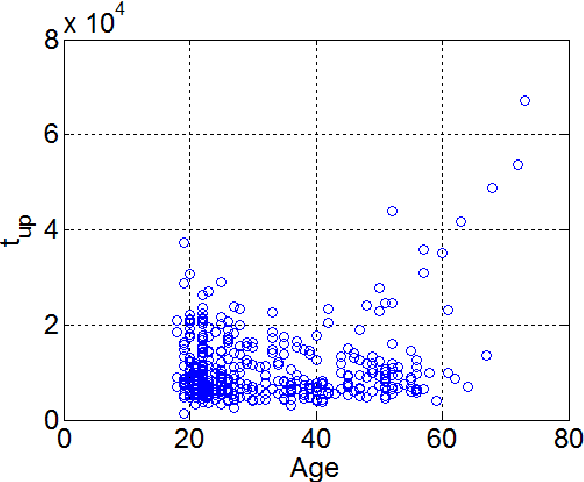

A Preliminary Study on Aging Examining Online Handwriting

Mar 08, 2022

Abstract:A deeper knowledge of aging is necessary in order to develop infocommunications devices that allow the capabilities of the human brain to interact with those of any artificially cognitive system. This is especially true if society does not want to exclude older people and wants to develop automatic systems able to help and improve the quality of life of this population group, both healthy individuals and those with cognitive decline or other pathologies. This paper tries to establish the variations in handwriting tasks with the goal of obtaining a better knowledge of aging. We present the correlation results between several parameters extracted from online handwriting and the age of the writers. The study is based on the BIOSECURID database, which consists of 400 people who provided several biometric traits, including online handwriting. The main idea is to identify those parameters that are more stable and those that are more age dependent. One challenging topic for disease diagnosis is the differentiation between healthy and pathological aging. For this purpose, it is necessary to be aware of which handwriting parameters are, in general, not affected by aging and which ones change, increasing or decreasing their values, because of it. This paper contributes to this research line by analyzing a selected set of online handwriting parameters provided by a healthy population group aged from 18 to 70 years. Preliminary results show that these parameters are not affected by aging and therefore changes in their values can only be attributed to motor or cognitive disorders.

* 4 pages

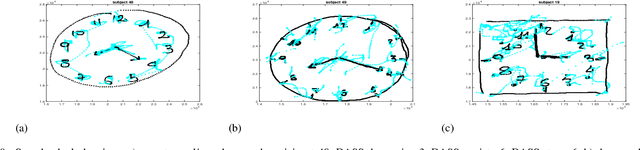

EMOTHAW: A novel database for emotional state recognition from handwriting

Feb 23, 2022

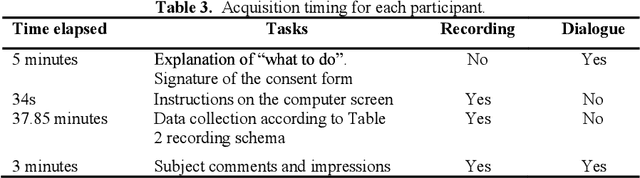

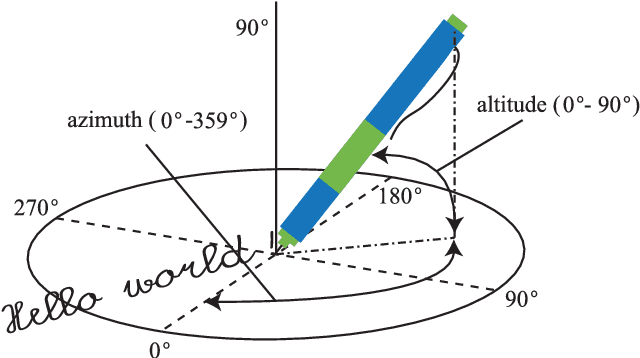

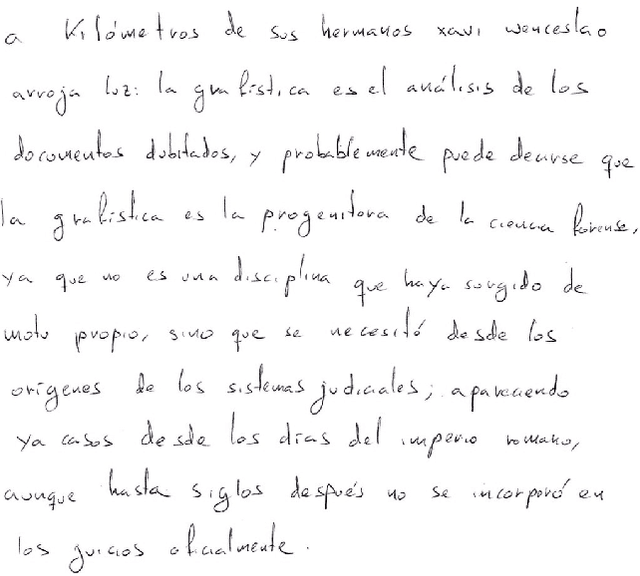

Abstract:The detection of negative emotions through daily activities such as handwriting is useful for promoting well-being. The spread of human-machine interfaces such as tablets makes the collection of handwriting samples easier. In this context, we present a first publicly available handwriting database which relates emotional states to handwriting, which we call EMOTHAW. This database includes samples from 129 participants whose emotional states, namely anxiety, depression and stress, are assessed by the Depression Anxiety Stress Scales (DASS) questionnaire. Seven tasks are recorded through a digitizing tablet: pentagons and house drawing, words copied in handprint, circles and clock drawing, and one sentence copied in cursive writing. Records consist of pen positions, on-paper and in-air, time stamp, pressure, pen azimuth and altitude. We report our analysis of this database. From the collected data, we first compute measurements related to timing and ductus. We compute separate measurements according to the position of the writing device: on paper or in-air. We analyse and classify this set of measurements (referred to as features) using a random forest approach. The latter is a machine learning method [2], based on an ensemble of decision trees, which includes a feature ranking process. We use this ranking process to identify the features which best reveal a targeted emotional state. We then build random forest classifiers associated with each emotional state. Our results, obtained from cross-validation experiments, show that the targeted emotional states can be identified with accuracies ranging from 60% to 71%.

* 31 pages
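The separation of measurements by pen position described in the EMOTHAW abstract can be sketched as follows. The record layout (`PenSample` with a timestamp in milliseconds, coordinates, and an on-paper flag), the function `timing_features`, and the sample values are all assumptions made for illustration; the database's actual file format and feature definitions are not given here. Stroke counts are used only as a stand-in for a ductus-style measurement.

```python
from typing import NamedTuple


class PenSample(NamedTuple):
    """Hypothetical tablet record: time stamp, position, and pen state."""
    t_ms: int
    x: int
    y: int
    on_paper: bool


def timing_features(samples):
    """Compute on-paper and in-air durations and stroke counts."""
    on_paper_ms = 0
    in_air_ms = 0
    # Attribute each interval to the pen state at the start of the interval.
    for prev, cur in zip(samples, samples[1:]):
        dt = cur.t_ms - prev.t_ms
        if prev.on_paper:
            on_paper_ms += dt
        else:
            in_air_ms += dt

    # A new stroke starts whenever the pen state changes.
    on_paper_strokes = 0
    in_air_strokes = 0
    prev_state = None
    for s in samples:
        if s.on_paper != prev_state:
            if s.on_paper:
                on_paper_strokes += 1
            else:
                in_air_strokes += 1
            prev_state = s.on_paper

    return {
        "on_paper_ms": on_paper_ms,
        "in_air_ms": in_air_ms,
        "total_ms": on_paper_ms + in_air_ms,
        "on_paper_strokes": on_paper_strokes,
        "in_air_strokes": in_air_strokes,
    }


# Made-up trace: two on-paper strokes separated by one in-air movement.
samples = [
    PenSample(0, 10, 10, True),
    PenSample(10, 12, 11, True),
    PenSample(20, 14, 12, False),
    PenSample(30, 20, 15, False),
    PenSample(40, 25, 18, True),
    PenSample(50, 27, 19, True),
]
features = timing_features(samples)
```

Features of this kind, computed per task, would then form the input to whatever classifier is chosen; the random forest ranking step itself is not reproduced in this sketch.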
