Angela Yi

FactorSim: Generative Simulation via Factorized Representation

Sep 26, 2024

Abstract: Generating simulations to train intelligent agents in game-playing and robotics from natural language input, such as user input or task documentation, remains an open-ended challenge. Existing approaches focus on parts of this challenge, such as generating reward functions or task hyperparameters. Unlike previous work, we introduce FACTORSIM, which generates full simulations in code from language input that can be used to train agents. Exploiting the structural modularity specific to coded simulations, we propose to use a factored partially observable Markov decision process representation that allows us to reduce context dependence during each step of the generation. For evaluation, we introduce a generative simulation benchmark that assesses the generated simulation code's accuracy and effectiveness in facilitating zero-shot transfer in reinforcement learning settings. We show that FACTORSIM outperforms existing methods in generating simulations with respect to prompt alignment (e.g., accuracy), zero-shot transfer abilities, and human evaluation. We also demonstrate its effectiveness in generating robotic tasks.

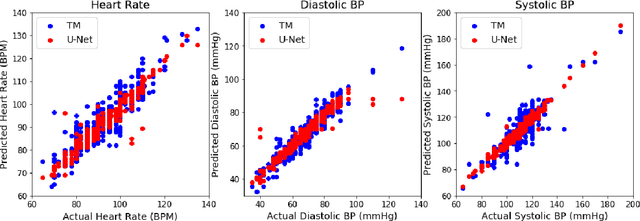

Hand-drawn Symbol Recognition of Surgical Flowsheet Graphs with Deep Image Segmentation

Jun 30, 2020

Abstract: Perioperative data are essential to investigating the causes of adverse surgical outcomes. In some low- to middle-income countries, these data are computationally inaccessible due to a lack of digitization of surgical flowsheets. In this paper, we present a deep image segmentation approach using a U-Net architecture that can detect hand-drawn symbols on a flowsheet graph. The segmentation mask outputs are post-processed with techniques unique to each symbol to convert them into numeric values. The U-Net method can detect, at the appropriate time intervals, the symbols for heart rate and blood pressure with over 99 percent accuracy. Over 95 percent of the predictions fall within an absolute error of five when compared to the actual value. The deep learning model outperformed template matching even with only a small number of annotated images available for the training set.
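The "within an absolute error of five" figure can be read as the share of predictions whose distance from the true value is at most five. The sketch below illustrates that reading only; the function name `fraction_within_tolerance`, the inclusive `<=` comparison, and the sample readings are assumptions made for illustration and are not taken from the paper.

```python
def fraction_within_tolerance(predicted, actual, tolerance=5.0):
    """Return the share of predictions with |predicted - actual| <= tolerance.

    Assumption: the bound is inclusive; the abstract does not say which.
    """
    if len(predicted) != len(actual):
        raise ValueError("predicted and actual must have the same length")
    if not predicted:
        raise ValueError("at least one prediction is required")
    hits = sum(1 for p, a in zip(predicted, actual) if abs(p - a) <= tolerance)
    return hits / len(predicted)


# Hypothetical heart-rate readings, invented purely for illustration.
predicted = [72, 80, 118, 65, 90]
actual = [70, 86, 120, 65, 84]

# Absolute errors are 2, 6, 2, 0, 6, so three of five fall within five.
fraction = fraction_within_tolerance(predicted, actual)
print(f"{fraction:.0%} of predictions fall within an absolute error of five")
```

A result above 0.95 on a held-out set would correspond to the "over 95 percent" statement in the abstract, under the assumptions stated above.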
