Angela Violi

Joint Optimization of Piecewise Linear Ensembles

May 01, 2024

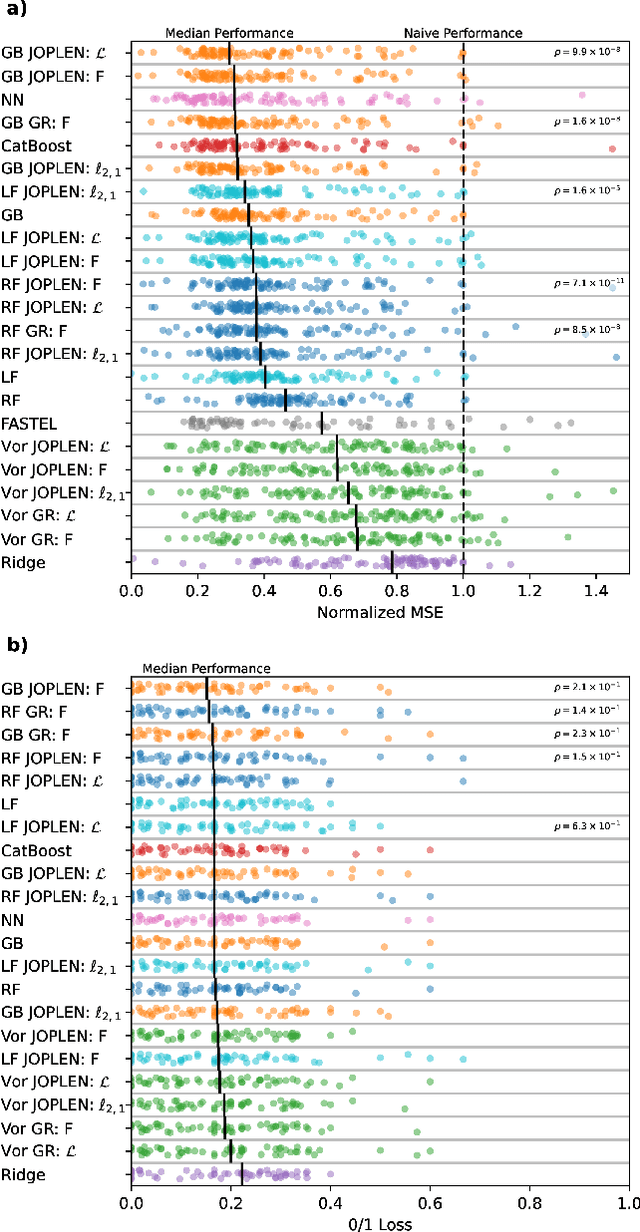

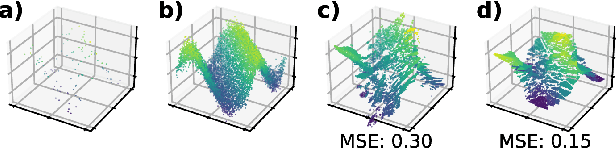

Abstract: Tree ensembles achieve state-of-the-art performance despite being greedily optimized. Global refinement (GR) reduces greediness by jointly and globally optimizing all constant leaves. We propose Joint Optimization of Piecewise Linear ENsembles (JOPLEN), a piecewise-linear extension of GR. Compared to GR, JOPLEN improves model flexibility and can apply common penalties, including sparsity-promoting matrix norms and subspace-norms, to nonlinear prediction. We evaluate the Frobenius norm, $\ell_{2,1}$ norm, and Laplacian regularization on 146 regression and classification datasets; JOPLEN, combined with GB trees and RF, achieves superior performance in both settings. Additionally, JOPLEN with a nuclear norm penalty empirically learns smooth and subspace-aligned functions. Finally, we perform multitask feature selection by extending the Dirty LASSO. JOPLEN Dirty LASSO achieves a superior feature sparsity/performance tradeoff to linear and gradient-boosted approaches. We anticipate that JOPLEN will improve regression, classification, and feature selection across many fields.
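The contrast between constant leaves (as in GR) and piecewise-linear leaves can be illustrated with a toy example. The sketch below is not the authors' implementation. It assumes a 1-D input, two hypothetical single-split "trees" with fixed thresholds, a squared loss, and a Frobenius-norm (ridge) penalty, and it refits all leaf parameters jointly by solving the ridge normal equations. All function names and data are made up for illustration.

```python
# Illustrative sketch only: NOT the authors' JOPLEN implementation.
# Each hypothetical "tree" is a single split on a 1-D input.

def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [b[i]] for i, row in enumerate(A)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = sum(M[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (M[r][n] - s) / M[r][r]
    return x

def features(x, thresholds, linear):
    """One block per (tree, cell): [1] for constant leaves, [x, 1] for linear leaves."""
    phi = []
    for t in thresholds:
        for cell in (0, 1):  # cell 0: x < t, cell 1: x >= t
            active = 1.0 if (x >= t) == bool(cell) else 0.0
            phi += [active * x, active] if linear else [active]
    return phi

def fit_jointly(xs, ys, thresholds, linear, ridge=1e-3):
    """Jointly refit every leaf of every tree: minimize squared error + ridge * ||w||^2."""
    Phi = [features(x, thresholds, linear) for x in xs]
    d = len(Phi[0])
    A = [[sum(p[i] * p[j] for p in Phi) + (ridge if i == j else 0.0)
          for j in range(d)] for i in range(d)]
    b = [sum(p[i] * y for p, y in zip(Phi, ys)) for i in range(d)]
    return solve(A, b)

def mse(xs, ys, w, thresholds, linear):
    errs = [(sum(p * wi for p, wi in zip(features(x, thresholds, linear), w)) - y) ** 2
            for x, y in zip(xs, ys)]
    return sum(errs) / len(errs)

# Toy data: a function with a kink at x = 0.5.
xs = [i / 39 for i in range(40)]
ys = [abs(x - 0.5) for x in xs]
thresholds = (0.5, 0.3)  # hypothetical split points of the two trees

w_const = fit_jointly(xs, ys, thresholds, linear=False)
w_lin = fit_jointly(xs, ys, thresholds, linear=True)
mse_constant = mse(xs, ys, w_const, thresholds, linear=False)
mse_linear = mse(xs, ys, w_lin, thresholds, linear=True)
print("constant leaves:", mse_constant, "linear leaves:", mse_linear)
```

On this toy target the linear leaves can represent the function exactly, so their training error is far lower than that of constant leaves on the same partitions. The other penalties named in the abstract ($\ell_{2,1}$, nuclear norm, Laplacian) would need an iterative solver instead of the closed-form ridge solve used here.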

Universal Feature Selection for Simultaneous Interpretability of Multitask Datasets

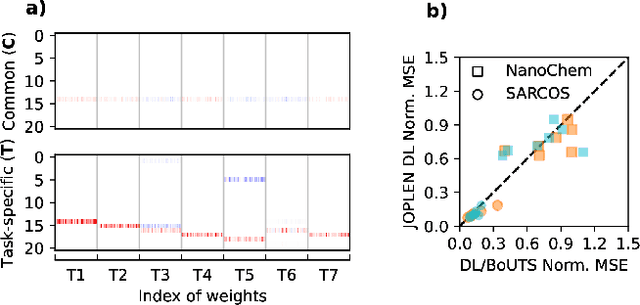

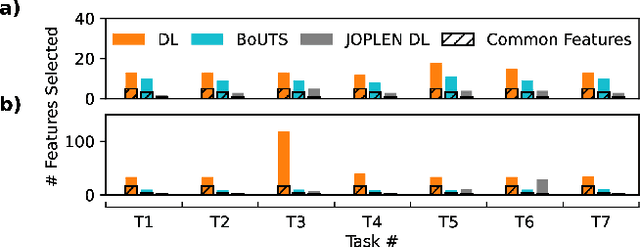

Mar 21, 2024

Abstract: Extracting meaningful features from complex, high-dimensional datasets across scientific domains remains challenging. Current methods often struggle with scalability, limiting their applicability to large datasets, or make restrictive assumptions about feature-property relationships, hindering their ability to capture complex interactions. BoUTS's general and scalable feature selection algorithm surpasses these limitations to identify both universal features relevant to all datasets and task-specific features predictive for specific subsets. Evaluated on seven diverse chemical regression datasets, BoUTS achieves state-of-the-art feature sparsity while maintaining prediction accuracy comparable to specialized methods. Notably, BoUTS's universal features enable domain-specific knowledge transfer between datasets, and suggest deep connections in seemingly disparate chemical datasets. We expect these results to have important repercussions in manually guided inverse problems. Beyond its current application, BoUTS holds immense potential for elucidating data-poor systems by leveraging information from similar data-rich systems. BoUTS represents a significant leap in cross-domain feature selection, potentially leading to advancements in various scientific fields.

Model Reduction in Chemical Reaction Networks: A Data-Driven Sparse-Learning Approach

Dec 22, 2017

Abstract: The reduction of large kinetic mechanisms is a crucial step for fluid dynamics simulations of combustion systems. In this paper, we introduce a novel approach for mechanism reduction that presents unique features. We propose an unbiased reaction-based method that exploits an optimization-based sparse-learning approach to identify the set of most influential reactions in a chemical reaction network. The problem is first formulated as a mixed-integer linear program, and then a relaxation method is leveraged to reduce its computational complexity. Not only does this method calculate the minimal set of reactions subject to the user-specified error tolerance bounds, but it also incorporates a bound on the propagation of error over a time horizon caused by reducing the mechanism. The method is unbiased toward the optimization of any characteristic of the system, such as ignition delay, since it is assembled based on the identification of a reduced mechanism that fits the species concentrations and reaction rates generated by the full mechanism. Qualitative and quantitative validations of the sparse encoding approach demonstrate that the reduced model captures important network structural properties.

A Data-Driven Sparse-Learning Approach to Model Reduction in Chemical Reaction Networks

Dec 12, 2017

Abstract: In this paper, we propose an optimization-based sparse learning approach to identify the set of most influential reactions in a chemical reaction network. This reduced set of reactions is then employed to construct a reduced chemical reaction mechanism, which is relevant to chemical interaction network modeling. The problem of identifying influential reactions is first formulated as a mixed-integer quadratic program, and then a relaxation method is leveraged to reduce the computational complexity of our approach. Qualitative and quantitative validation of the sparse encoding approach demonstrates that the model captures important network structural properties with moderate computational load.
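The abstract above does not spell out the formulation, so the following is only a sketch of how a relaxed sparse selection of reactions might look under stated assumptions. Binary keep/drop indicators are relaxed to weights in [0, 1], the fit to the full mechanism's species production rates is measured by a squared error, and an $\ell_1$ penalty encourages dropped reactions. The stoichiometric matrix `S`, the `rates` table, the penalty `lam`, and the function `select_reactions` are all hypothetical, and projected gradient descent stands in for whatever solver the authors used.

```python
# Illustrative sketch only: the formulation is an assumption, not taken from the paper.

# Hypothetical stoichiometric matrix (3 species x 4 reactions).
S = [[-1, 0, -1, 0],
     [1, -1, 0, 1],
     [0, 1, 1, -1]]
# Hypothetical reaction rates at 3 time samples (one row per reaction).
rates = [[2.0, 1.5, 1.0],
         [1.0, 1.2, 0.9],
         [0.01, 0.02, 0.01],
         [0.02, 0.01, 0.01]]

n_rxn = len(rates)
# Row (t, s) of A holds each reaction's contribution to species s at time t.
A = [[S[s][j] * rates[j][t] for j in range(n_rxn)]
     for t in range(3) for s in range(3)]
b = [sum(row) for row in A]  # production rates of the full mechanism

def select_reactions(A, b, lam=0.05, iters=2000):
    """Minimize 0.5 * ||A w - b||^2 + lam * sum(w) over w in [0, 1]^n."""
    n = len(A[0])
    # The trace of A^T A upper-bounds its largest eigenvalue, so 1/trace is a safe step.
    step = 1.0 / sum(a * a for row in A for a in row)
    w = [1.0] * n  # start from the full mechanism
    for _ in range(iters):
        resid = [sum(a * wj for a, wj in zip(row, w)) - bk for row, bk in zip(A, b)]
        grad = [sum(row[j] * r for row, r in zip(A, resid)) + lam for j in range(n)]
        # Gradient step followed by projection onto the box [0, 1].
        w = [min(1.0, max(0.0, wj - step * g)) for wj, g in zip(w, grad)]
    return w

weights = select_reactions(A, b)
selected = [j for j, wj in enumerate(weights) if wj > 0.5]  # round the relaxed weights
print("weights:", weights)
print("selected reactions:", selected)
```

In this toy network the two slow reactions contribute almost nothing to the species production rates, so the penalty drives their weights to zero while the two fast reactions are retained. Rounding relaxed weights with a fixed threshold is a simplification; a real workflow would also check the reduced mechanism against an error tolerance.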
