Andrew Wan

Mining Measured Information from Text

May 05, 2015

Abstract: We present an approach to extract measured information from text (e.g., a 1370 degrees C melting point, a BMI greater than 29.9 kg/m^2). Such extractions are critically important across a wide range of domains, especially those involving search and exploration of scientific and technical documents. We first propose a rule-based entity extractor to mine measured quantities (i.e., a numeric value paired with a measurement unit), which supports a comprehensive set of both common and obscure measurement units. Our method is highly robust and can correctly recover valid measured quantities even when significant errors are introduced through the process of converting document formats like PDF to plain text. Next, we describe an approach to extracting the properties being measured (e.g., the property "pixel pitch" in the phrase "a pixel pitch as high as 352 µm"). Finally, we present MQSearch: the realization of a search engine with full support for measured information.
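
As a rough illustration of what "a numeric value paired with a measurement unit" could look like in practice, here is a minimal regex-based sketch. It is not the paper's extractor: the unit list `UNITS` and the function name `extract_quantities` are hypothetical, and the sketch makes no attempt at the PDF-conversion robustness or property extraction the abstract describes.

```python
import re

# Hypothetical, tiny unit inventory for illustration only.
UNITS = ["kg/m^2", "degrees C", "µm", "mm", "kg"]

# Try longer units first so that "kg/m^2" is preferred over "kg".
_unit_alt = "|".join(re.escape(u) for u in sorted(UNITS, key=len, reverse=True))

# A number (optionally with a decimal part), optional whitespace, then a known unit.
# The lookbehind avoids starting in the middle of a number or word;
# the lookahead avoids matching a unit that is only a prefix of a longer word.
_PATTERN = re.compile(
    r"(?<![\w.])(\d+(?:\.\d+)?)\s*(" + _unit_alt + r")(?![A-Za-z])"
)

def extract_quantities(text):
    """Return (value, unit) pairs for each number directly followed by a known unit."""
    return [(float(m.group(1)), m.group(2)) for m in _PATTERN.finditer(text)]

example = "a 1370 degrees C melting point, a BMI greater than 29.9 kg/m^2"
print(extract_quantities(example))
# prints [(1370.0, 'degrees C'), (29.9, 'kg/m^2')]
```

Sorting the unit alternatives by length is the one design choice worth noting: without it, a short unit such as "kg" could shadow a compound unit that begins with the same characters.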

Approximate resilience, monotonicity, and the complexity of agnostic learning

Jul 09, 2014

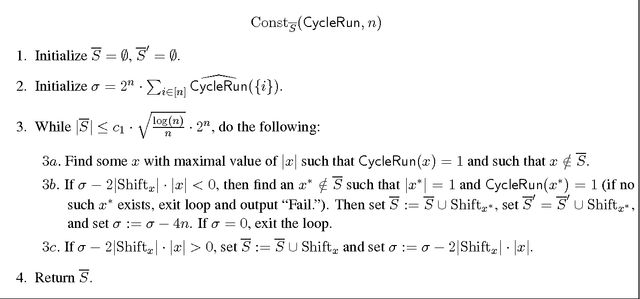

Abstract: A function $f$ is $d$-resilient if all its Fourier coefficients of degree at most $d$ are zero, i.e., $f$ is uncorrelated with all low-degree parities. We study the notion of $\mathit{approximate}$ $\mathit{resilience}$ of Boolean functions, where we say that $f$ is $\alpha$-approximately $d$-resilient if $f$ is $\alpha$-close to a $[-1,1]$-valued $d$-resilient function in $\ell_1$ distance. We show that approximate resilience essentially characterizes the complexity of agnostic learning of a concept class $C$ over the uniform distribution. Roughly speaking, if all functions in a class $C$ are far from being $d$-resilient then $C$ can be learned agnostically in time $n^{O(d)}$, and conversely, if $C$ contains a function close to being $d$-resilient then agnostic learning of $C$ in the statistical query (SQ) framework of Kearns has complexity of at least $n^{\Omega(d)}$. This characterization is based on the duality between $\ell_1$ approximation by degree-$d$ polynomials and approximate $d$-resilience that we establish. In particular, it implies that $\ell_1$ approximation by low-degree polynomials, known to be sufficient for agnostic learning over product distributions, is in fact necessary. Focusing on monotone Boolean functions, we exhibit the existence of near-optimal $\alpha$-approximately $\widetilde{\Omega}(\alpha\sqrt{n})$-resilient monotone functions for all $\alpha>0$. Prior to our work, it was conceivable even that every monotone function is $\Omega(1)$-far from any $1$-resilient function. Furthermore, we construct simple, explicit monotone functions based on ${\sf Tribes}$ and ${\sf CycleRun}$ that are close to highly resilient functions. Our constructions are based on a fairly general resilience analysis and amplification. These structural results, together with the characterization, imply nearly optimal lower bounds for agnostic learning of monotone juntas.
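
The definition of exact $d$-resilience at the start of the abstract can be checked by brute force on small examples. The sketch below assumes the usual $\{-1,1\}^n$ encoding with Fourier coefficients $\hat{f}(S) = \mathbb{E}_x[f(x)\prod_{i \in S} x_i]$ under the uniform distribution; the function names are hypothetical, the running time is exponential in $n$, and it does not address approximate resilience or any of the paper's constructions.

```python
from itertools import combinations, product
from math import prod

def fourier_coefficient(f, n, S):
    """Compute E_x[f(x) * prod_{i in S} x_i] over uniform x in {-1, 1}^n."""
    total = sum(f(x) * prod(x[i] for i in S) for x in product((-1, 1), repeat=n))
    return total / 2 ** n

def is_resilient(f, n, d, tol=1e-12):
    """True if every Fourier coefficient of degree at most d is (numerically) zero."""
    return all(
        abs(fourier_coefficient(f, n, S)) <= tol
        for k in range(d + 1)
        for S in combinations(range(n), k)
    )

# Parity on 3 bits: its only nonzero coefficient has degree 3.
parity3 = lambda x: x[0] * x[1] * x[2]
# Majority on 3 bits: monotone and balanced, but correlated with each single bit.
majority3 = lambda x: 1 if sum(x) > 0 else -1

print(is_resilient(parity3, 3, 2))    # prints True
print(is_resilient(majority3, 3, 0))  # prints True
print(is_resilient(majority3, 3, 1))  # prints False
```

Majority on three bits has degree-1 coefficients equal to $1/2$, which is why it fails to be $1$-resilient even though its mean is zero.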
