Andrew Walenstein

Dynamic Neural Control Flow Execution: An Agent-Based Deep Equilibrium Approach for Binary Vulnerability Detection

Apr 03, 2024

Abstract: Software vulnerabilities are a challenge in cybersecurity. Manual security patches are often difficult and slow to deploy, while new vulnerabilities continue to be created. Binary code vulnerability detection is less studied and more complex than source code vulnerability detection, and this has important practical implications. Deep learning has become an efficient and powerful tool in the security domain, where it provides end-to-end and accurate prediction. Modern deep learning approaches learn program semantics through sequence and graph neural networks, using various intermediate representations of programs, such as abstract syntax trees (AST) or control flow graphs (CFG). Due to the complex nature of program execution, the output of an execution depends on many program states and inputs. Also, a CFG generated from static analysis can be an overestimation of the true program flow. Moreover, the size of programs often does not allow a graph neural network with a fixed number of layers to aggregate global information. To address these issues, we propose DeepEXE, an agent-based implicit neural network that mimics the execution path of a program. We use reinforcement learning to enhance the branching decision at every program state transition and create a dynamic environment to learn the dependency between a vulnerability and certain program states. An implicitly defined neural network enables nearly infinite state transitions until convergence, which captures the structural information at a higher level. The experiments are conducted on two semi-synthetic and two real-world datasets. We show that DeepEXE is an accurate and efficient method that outperforms state-of-the-art vulnerability detection methods.
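The idea of an implicitly defined network that applies state transitions "until convergence" can be illustrated with a generic fixed-point iteration. The sketch below is not DeepEXE itself: the update rule `z <- tanh(W z + U x)`, the function name `fixed_point_layer`, and the small weight matrices are all assumptions chosen for illustration, with `W` picked so that the update is a contraction and the iteration is guaranteed to converge.

```python
import math

def fixed_point_layer(W, U, x, tol=1e-8, max_iter=1000):
    """Iterate z <- tanh(W z + U x) until successive states differ by less than tol.

    Hypothetical illustration of an implicit (equilibrium) layer; the update
    rule and parameters are assumptions, not the method from the abstract.
    """
    n = len(W)
    z = [0.0] * n  # initial hidden state
    for step in range(1, max_iter + 1):
        z_next = [
            math.tanh(
                sum(W[i][j] * z[j] for j in range(n))
                + sum(U[i][k] * x[k] for k in range(len(x)))
            )
            for i in range(n)
        ]
        # Largest change in any coordinate between two successive states.
        diff = max(abs(a - b) for a, b in zip(z_next, z))
        z = z_next
        if diff < tol:
            return z, step
    return z, max_iter

# Illustrative weights: every row of W has absolute sum below 1, so the
# update is a contraction and a unique equilibrium state exists.
W = [[0.2, -0.1], [0.05, 0.3]]
U = [[1.0, 0.0], [0.0, 1.0]]
x = [0.5, -0.25]

z_star, steps = fixed_point_layer(W, U, x)
print(z_star, steps)
```

The number of iterations is not fixed in advance; it is determined by the tolerance, which is what distinguishes this style of layer from a network with a fixed depth.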

Risk-Aware Fine-Grained Access Control in Cyber-Physical Contexts

Aug 29, 2021

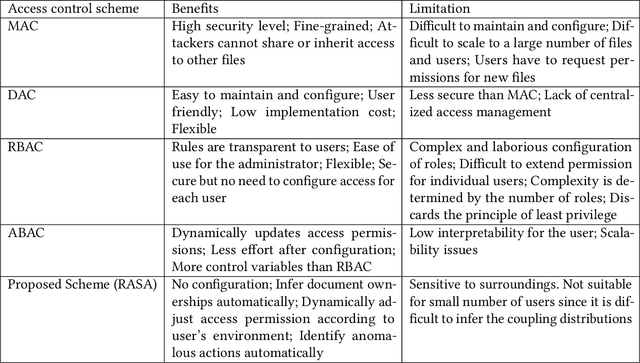

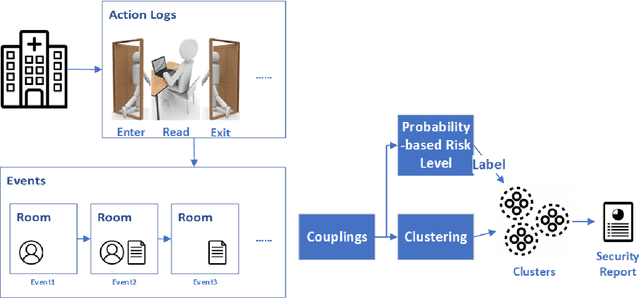

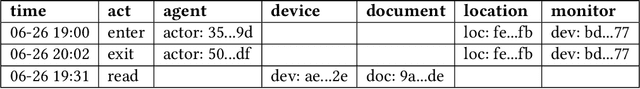

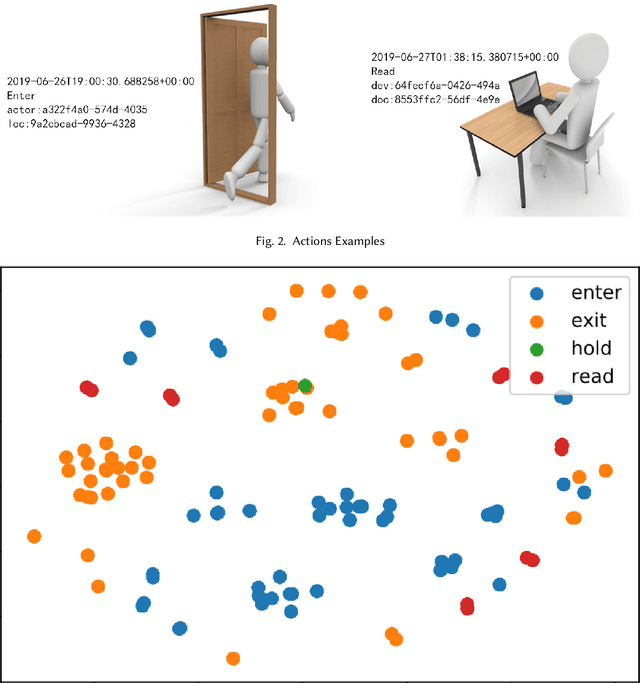

Abstract: Access to resources by users may need to be granted only under certain conditions and in certain contexts, perhaps particularly in cyber-physical settings. Unfortunately, creating and modifying context-sensitive access control solutions in dynamic environments creates ongoing challenges in managing the authorization contexts. This paper proposes RASA, a context-sensitive access authorization approach and mechanism that leverages unsupervised machine learning to automatically infer risk-based authorization decision boundaries. We explore RASA in a healthcare usage environment, wherein cyber and physical conditions create context-specific risks for protecting private health information. The risk levels are associated with access control decisions recommended by a security policy. A coupling method is introduced to track the coexistence of objects within a context using the frequency and duration of coexistence; these features are clustered to reveal sets of actions with common risk levels, which are then used to create authorization decision boundaries. In addition, we propose a method for assessing the risk level and labelling the clusters with respect to their corresponding risk levels. We evaluate the promise of RASA-generated policies against a heuristic rule-based policy. With each of three different coupling features (frequency-based, duration-based, and combined), the decisions of the unsupervised method and those of the policy are more than 99% consistent.
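One way to picture frequency-based and duration-based coupling features is as per-pair aggregates over observed coexistence intervals. The sketch below is only an assumption about how such features might be computed: the event format `(object_a, object_b, start, end)`, the function name `coupling_features`, and the sample objects are hypothetical, and the clustering and risk-labelling steps described in the abstract are not shown.

```python
from collections import defaultdict

def coupling_features(events):
    """Aggregate coexistence events into (frequency, total duration) per object pair.

    Each event is a hypothetical tuple (object_a, object_b, start, end) that
    records one interval during which the two objects shared a context.
    """
    freq = defaultdict(int)
    dur = defaultdict(float)
    for a, b, start, end in events:
        pair = tuple(sorted((a, b)))  # treat (a, b) and (b, a) as the same pair
        freq[pair] += 1               # frequency-based feature
        dur[pair] += end - start      # duration-based feature
    # The combined feature is simply the (frequency, duration) vector.
    return {pair: (freq[pair], dur[pair]) for pair in freq}

# Illustrative data only; object names and timings are invented.
events = [
    ("nurse", "record_17", 0.0, 4.0),
    ("record_17", "nurse", 10.0, 12.5),
    ("visitor", "record_17", 3.0, 3.5),
]

features = coupling_features(events)
for pair, (count, total) in sorted(features.items()):
    print(pair, count, total)
```

Feature vectors of this kind could then be passed to any unsupervised clustering routine; which algorithm and distance measure RASA uses is not stated in the abstract.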
