Andrew R. Sedler

Diffusion-Based Generation of Neural Activity from Disentangled Latent Codes

Jul 30, 2024

Abstract:Recent advances in recording technology have allowed neuroscientists to monitor activity from thousands of neurons simultaneously. Latent variable models are increasingly valuable for distilling these recordings into compact and interpretable representations. Here we propose a new approach to neural data analysis that leverages advances in conditional generative modeling to enable the unsupervised inference of disentangled behavioral variables from recorded neural activity. Our approach builds on InfoDiffusion, which augments diffusion models with a set of latent variables that capture important factors of variation in the data. We apply our model, called Generating Neural Observations Conditioned on Codes with High Information (GNOCCHI), to time series neural data and test its application to synthetic and biological recordings of neural activity during reaching. In comparison to a VAE-based sequential autoencoder, GNOCCHI learns higher-quality latent spaces that are more clearly structured and more disentangled with respect to key behavioral variables. These properties enable accurate generation of novel samples (unseen behavioral conditions) through simple linear traversal of the latent spaces produced by GNOCCHI. Our work demonstrates the potential of unsupervised, information-based models for the discovery of interpretable latent spaces from neural data, enabling researchers to generate high-quality samples from unseen conditions.

lfads-torch: A modular and extensible implementation of latent factor analysis via dynamical systems

Sep 03, 2023

Abstract:Latent factor analysis via dynamical systems (LFADS) is an RNN-based variational sequential autoencoder that achieves state-of-the-art performance in denoising high-dimensional neural activity for downstream applications in science and engineering. Recently introduced variants and extensions continue to demonstrate the applicability of the architecture to a wide variety of problems in neuroscience. Since the development of the original implementation of LFADS, new technologies have emerged that use dynamic computation graphs, minimize boilerplate code, compose model configuration files, and simplify large-scale training. Building on these modern Python libraries, we introduce lfads-torch -- a new open-source implementation of LFADS that unifies existing variants and is designed to be easier to understand, configure, and extend. Documentation, source code, and issue tracking are available at https://github.com/arsedler9/lfads-torch .

Expressive architectures enhance interpretability of dynamics-based neural population models

Dec 07, 2022

Abstract:Artificial neural networks that can recover latent dynamics from recorded neural activity may provide a powerful avenue for identifying and interpreting the dynamical motifs underlying biological computation. Given that neural variance alone does not uniquely determine a latent dynamical system, interpretable architectures should prioritize accurate and low-dimensional latent dynamics. In this work, we evaluated the performance of sequential autoencoders (SAEs) in recovering three latent chaotic attractors from simulated neural datasets. We found that SAEs with widely-used recurrent neural network (RNN)-based dynamics were unable to infer accurate rates at the true latent state dimensionality, and that larger RNNs relied upon dynamical features not present in the data. On the other hand, SAEs with neural ordinary differential equation (NODE)-based dynamics inferred accurate rates at the true latent state dimensionality, while also recovering latent trajectories and fixed point structure. We attribute this finding to the fact that NODEs allow use of multi-layer perceptrons (MLPs) of arbitrary capacity to model the vector field. Decoupling the expressivity of the dynamics model from its latent dimensionality enables NODEs to learn the requisite low-D dynamics where RNN cells fail. The suboptimal interpretability of widely-used RNN-based dynamics may motivate substitution for alternative architectures, such as NODE, that enable learning of accurate dynamics in low-dimensional latent spaces.

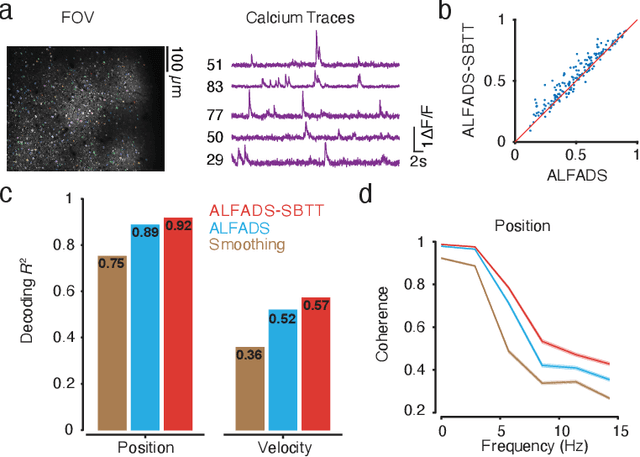

Deep inference of latent dynamics with spatio-temporal super-resolution using selective backpropagation through time

Oct 29, 2021

Abstract:Modern neural interfaces allow access to the activity of up to a million neurons within brain circuits. However, bandwidth limits often create a trade-off between greater spatial sampling (more channels or pixels) and the temporal frequency of sampling. Here we demonstrate that it is possible to obtain spatio-temporal super-resolution in neuronal time series by exploiting relationships among neurons, embedded in latent low-dimensional population dynamics. Our novel neural network training strategy, selective backpropagation through time (SBTT), enables learning of deep generative models of latent dynamics from data in which the set of observed variables changes at each time step. The resulting models are able to infer activity for missing samples by combining observations with learned latent dynamics. We test SBTT applied to sequential autoencoders and demonstrate more efficient and higher-fidelity characterization of neural population dynamics in electrophysiological and calcium imaging data. In electrophysiology, SBTT enables accurate inference of neuronal population dynamics with lower interface bandwidths, providing an avenue to significant power savings for implanted neuroelectronic interfaces. In applications to two-photon calcium imaging, SBTT accurately uncovers high-frequency temporal structure underlying neural population activity, substantially outperforming the current state-of-the-art. Finally, we demonstrate that performance could be further improved by using limited, high-bandwidth sampling to pretrain dynamics models, and then using SBTT to adapt these models for sparsely-sampled data.
