Andrew P. Leynes

Physics-driven Deep Learning for PET/MRI

Jun 11, 2022

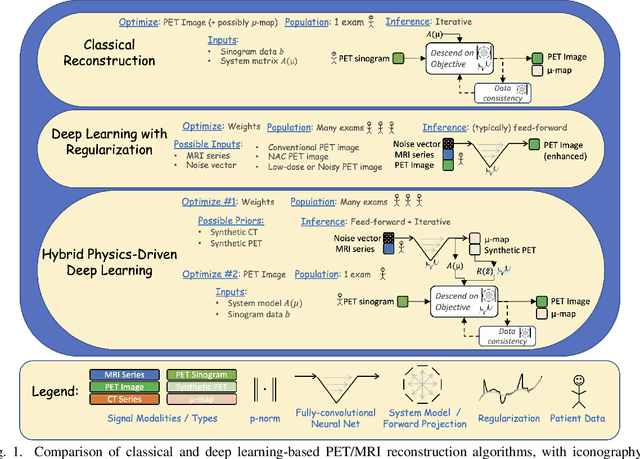

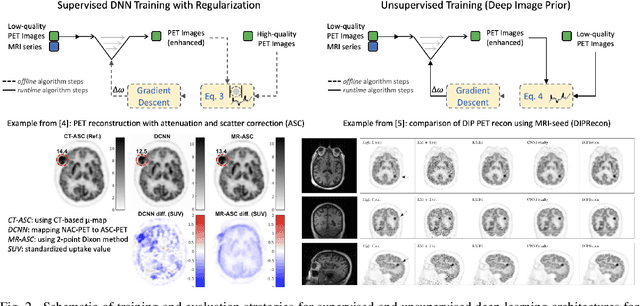

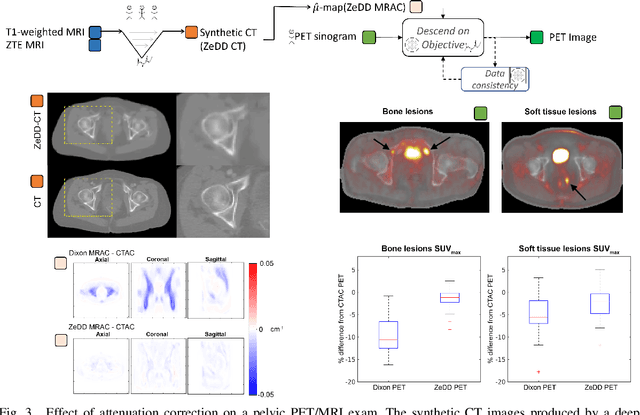

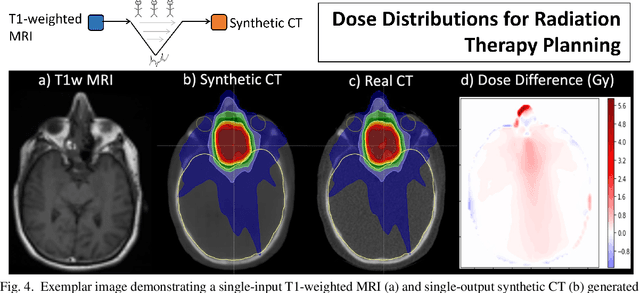

Abstract: In this paper, we review physics- and data-driven reconstruction techniques for simultaneous positron emission tomography (PET) / magnetic resonance imaging (MRI) systems, which have significant advantages for clinical imaging of cancer, neurological disorders, and heart disease. These reconstruction approaches utilize priors, either structural or statistical, together with a physics-based description of the PET system response. However, due to the nested representation of the forward problem, direct PET/MRI reconstruction is a nonlinear problem. We elucidate how a multi-faceted approach accommodates hybrid data- and physics-driven machine learning for reconstruction of 3D PET/MRI, summarizing important deep learning developments made in the last 5 years to address attenuation correction, scattering, low photon counts, and data consistency. We also describe how applications of these multi-modality approaches extend beyond PET/MRI to improving accuracy in radiation therapy planning. We conclude by discussing opportunities for extending the current state-of-the-art following the latest trends in physics- and deep learning-based computational imaging and next-generation detector hardware.

Scan-specific Self-supervised Bayesian Deep Non-linear Inversion for Undersampled MRI Reconstruction

Mar 01, 2022

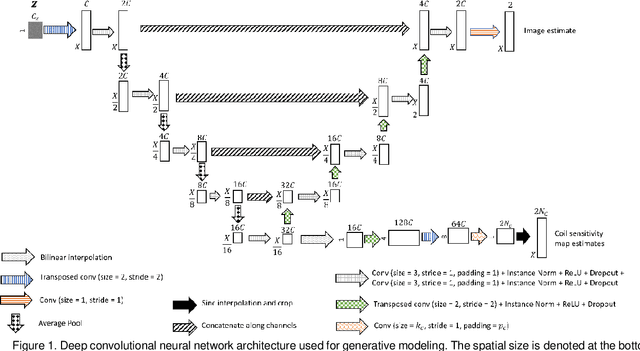

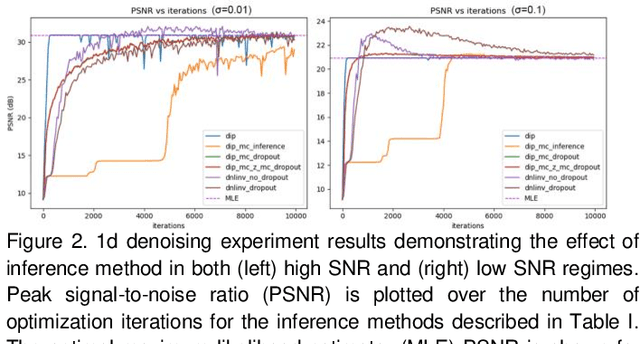

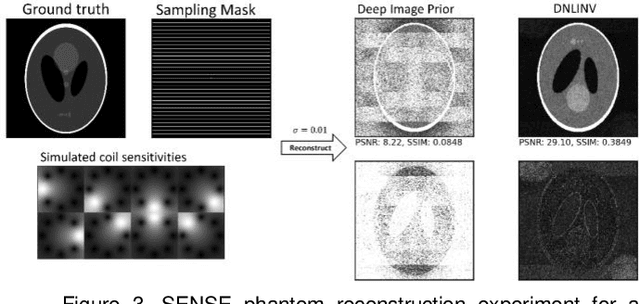

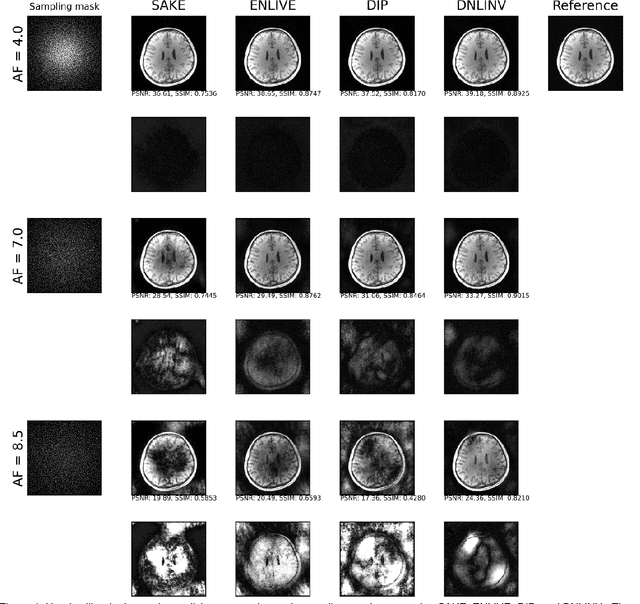

Abstract: Magnetic resonance imaging is subject to slow acquisition times due to the inherent limitations in data sampling. Recently, supervised deep learning has emerged as a promising technique for reconstructing sub-sampled MRI. However, supervised deep learning requires a large dataset of fully-sampled data. Although unsupervised or self-supervised deep learning methods have emerged to address the limitations of supervised deep learning approaches, they still require a database of images. In contrast, scan-specific deep learning methods learn and reconstruct using only the sub-sampled data from a single scan. Current scan-specific approaches require a fully-sampled auto calibration scan region in k-space that costs additional scan time. Here, we introduce Scan-Specific Self-Supervised Bayesian Deep Non-Linear Inversion (DNLINV), which does not require an auto calibration scan region. DNLINV utilizes a deep image prior-type generative modeling approach and relies on approximate Bayesian inference to regularize the deep convolutional neural network. We demonstrate our approach on several anatomies, contrasts, and sampling patterns and show improved performance over existing approaches in scan-specific calibrationless parallel imaging and compressed sensing.
