Andrew Michelson

Multimodal hierarchical multi-task deep learning framework for jointly predicting and explaining Alzheimer disease progression

Apr 04, 2024

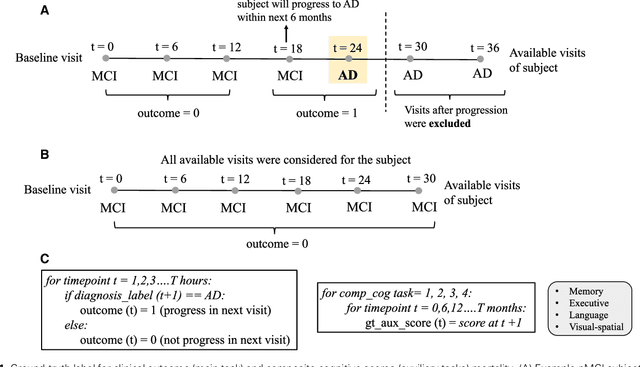

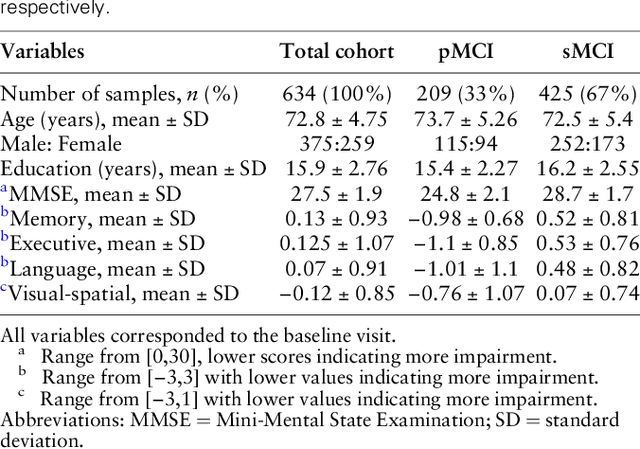

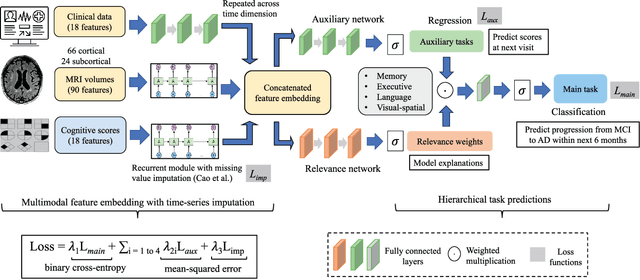

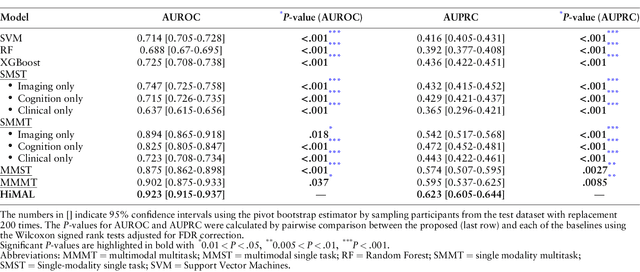

Abstract: Early identification of Mild Cognitive Impairment (MCI) subjects who will eventually progress to Alzheimer Disease (AD) is challenging. Existing deep learning models are mostly single-modality, single-task models that predict the risk of disease progression at a fixed timepoint. We proposed a multimodal hierarchical multi-task learning approach that can monitor the risk of disease progression at each timepoint of the visit trajectory. Longitudinal visit data from multiple modalities (MRI, cognition, and clinical data) were collected from MCI individuals in the Alzheimer Disease Neuroimaging Initiative (ADNI) dataset. At every timepoint, our hierarchical model predicted a set of neuropsychological composite cognitive function scores as auxiliary tasks and used the forecasted scores to predict the future risk of disease. Relevance weights for each composite function provided explanations about potential factors for disease progression. Our proposed model performed better than state-of-the-art baselines in predicting both AD progression risk and the composite scores. An ablation study on the number of modalities demonstrated that imaging and cognition data contributed most towards the outcome. Model explanations at each timepoint can inform clinicians, 6 months in advance, of the potential cognitive function decline that can lead to future progression to AD. Our model monitored individuals' risk of AD progression every 6 months throughout their visit trajectory. The hierarchical learning of auxiliary tasks allowed better optimization and provided longitudinal explanations for the outcome. Our framework is flexible in the number of input modalities and the selection of auxiliary tasks, and hence can be generalized to other clinical problems.
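The hierarchical step described in the abstract, where forecasted composite scores feed a risk prediction and relevance weights act as explanations, can be illustrated with a minimal sketch. This is not the authors' implementation: the function names, the sigmoid risk head, the number of composite scores, and all numeric values below are assumptions made for illustration only.

```python
import math


def sigmoid(x):
    """Squash a real-valued score into the (0, 1) interval."""
    return 1.0 / (1.0 + math.exp(-x))


def hierarchical_risk(predicted_scores, relevance_weights, bias=0.0):
    """Combine forecasted composite scores into a risk for one timepoint.

    predicted_scores: auxiliary-task outputs at one visit (hypothetical values).
    relevance_weights: one weight per composite score (hypothetical values).
    Returns the risk and the per-score contributions that serve as the explanation.
    """
    if len(predicted_scores) != len(relevance_weights):
        raise ValueError("one relevance weight is needed per composite score")
    contributions = [w * s for w, s in zip(relevance_weights, predicted_scores)]
    risk = sigmoid(sum(contributions) + bias)
    return risk, contributions


def monitor_trajectory(score_trajectory, relevance_trajectory):
    """Apply the risk head at every visit of a trajectory."""
    return [
        hierarchical_risk(scores, weights)
        for scores, weights in zip(score_trajectory, relevance_trajectory)
    ]


# Hypothetical two-visit trajectory with three unnamed composite scores.
scores = [[0.5, -0.2, 0.1], [-0.8, -0.5, 0.0]]
weights = [[-1.0, -0.5, -0.2], [-1.5, -0.5, -0.2]]
results = monitor_trajectory(scores, weights)

for visit, (risk, contributions) in enumerate(results):
    print(f"visit {visit}: risk={risk:.3f}, contributions={contributions}")
```

In this toy setup, the contributions list plays the role of the per-timepoint explanation: the score with the largest contribution is the one driving the predicted risk at that visit.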

Self-explaining Neural Network with Plausible Explanations

Oct 09, 2021

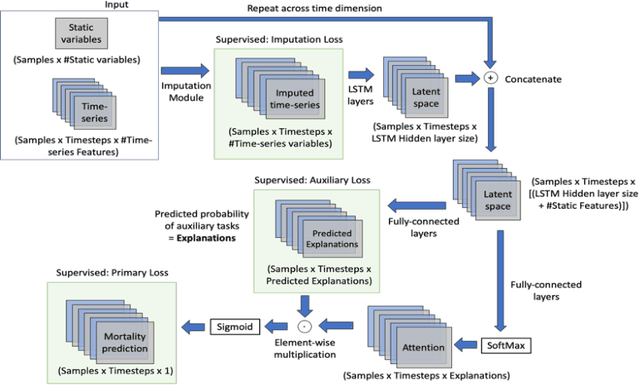

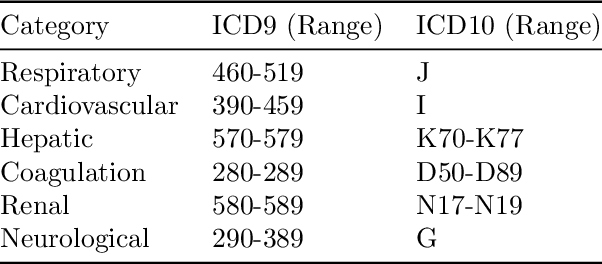

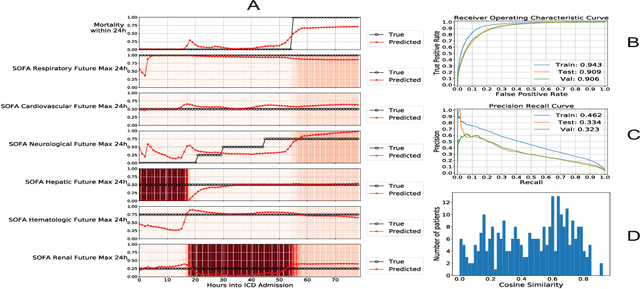

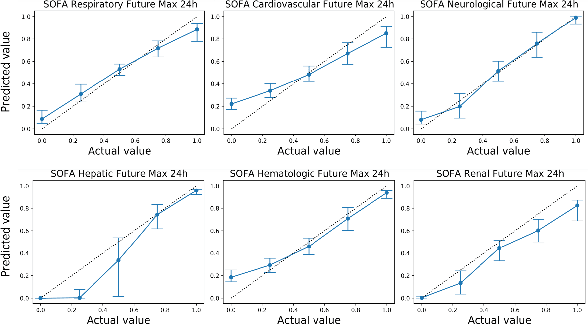

Abstract: Explaining the predictions of complex deep learning models, often referred to as black boxes, is critical in high-stakes domains like healthcare. However, post-hoc model explanations are often not understandable by clinicians and are difficult to integrate into clinical workflows. Further, while most explainable models use individual clinical variables as units of explanation, human understanding often relies on higher-level concepts or feature representations. In this paper, we propose a novel, self-explaining neural network for longitudinal in-hospital mortality prediction using domain-knowledge-driven Sequential Organ Failure Assessment (SOFA) organ-specific scores as the atomic units of explanation. We also design a novel procedure to quantitatively validate the model explanations against gold-standard discharge diagnosis information of patients. Our results provide interesting insights into how each of the SOFA organ scores contributes to mortality at different timesteps within the longitudinal patient trajectory.
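To make the idea of organ scores as "atomic units of explanation" concrete, the sketch below ranks the six SOFA organ systems by their contribution at a single timestep and checks the top-ranked organs against a set of diagnosed organ systems. The abstract does not describe the paper's validation procedure, so the top-k check, the function names, the relevance values, and the example scores are all hypothetical.

```python
# The six organ systems scored by SOFA (each scored 0 to 4).
SOFA_ORGANS = ["respiration", "coagulation", "liver", "cardiovascular", "cns", "renal"]


def rank_organ_contributions(organ_scores, relevance):
    """Rank organ systems by the magnitude of relevance * score at one timestep."""
    contributions = {organ: relevance[organ] * organ_scores[organ] for organ in SOFA_ORGANS}
    return sorted(contributions.items(), key=lambda item: abs(item[1]), reverse=True)


def explanation_hit(ranked, diagnosed_organs, k=2):
    """Hypothetical check: is any of the top-k organs among the diagnosed systems?"""
    top_organs = [organ for organ, _ in ranked[:k]]
    return any(organ in diagnosed_organs for organ in top_organs)


# Hypothetical organ scores and relevance weights for one timestep.
organ_scores = {"respiration": 3, "coagulation": 0, "liver": 1,
                "cardiovascular": 2, "cns": 0, "renal": 4}
relevance = {"respiration": 0.4, "coagulation": 0.1, "liver": 0.2,
             "cardiovascular": 0.3, "cns": 0.5, "renal": 0.25}

ranked = rank_organ_contributions(organ_scores, relevance)
print(ranked[:2])
print(explanation_hit(ranked, {"renal"}))
```

Repeating the ranking at every timestep would give a longitudinal view of which organ systems dominate the prediction, which is the kind of insight the abstract reports.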
