Andreas Uhl

Temporal Image Forensics: A Review and Critical Evaluation

Sep 09, 2025

Abstract: Temporal image forensics is the science of estimating the age of a digital image. Usually, time-dependent traces (age traces) introduced by the image acquisition pipeline are exploited for this purpose. In this review, a comprehensive overview of the field of temporal image forensics based on time-dependent traces from the image acquisition pipeline is given. This includes a detailed insight into the properties of known age traces (i.e., in-field sensor defects and sensor dust) and temporal image forensics techniques. Another key aspect of this work is to highlight the problem of content bias and to illustrate how important eXplainable Artificial Intelligence methods are to verify the reliability of temporal image forensics techniques. Apart from reviewing material presented in previous works, in this review: (i) a new (probably more realistic) forensic setting is proposed; (ii) the main properties (growth rate and spatial distribution) of in-field sensor defects are verified; (iii) it is shown that a method proposed to utilize in-field sensor defects for image age approximation actually exploits other traces (most likely content bias); (iv) the features learned by a neural network dating palmprint images are further investigated; (v) it is shown how easily a neural network can be distracted from learning age traces. For this purpose, previous work is analyzed, re-implemented if required and experiments are conducted.

FaceSORT: a Multi-Face Tracking Method based on Biometric and Appearance Features

Jan 20, 2025

Abstract: Tracking multiple faces is a difficult problem, as there may be partially occluded or lateral faces. In multiple face tracking, association is typically based on (biometric) face features. However, the models used to extract these face features usually require frontal face images, which can limit the tracking performance. In this work, FaceSORT, a multi-face tracking method inspired by StrongSort, is proposed. To mitigate the problem of partially occluded or lateral faces, biometric face features are combined with visual appearance features (i.e., generated by a generic object classifier), with both features extracted from the same face patch. A comprehensive experimental evaluation is performed, including a comparison of different face descriptors, an evaluation of different parameter settings, and the application of a different similarity metric. All experiments are conducted with a new multi-face tracking dataset and a subset of the ChokePoint dataset. The 'Paris Lodron University Salzburg Faces in a Queue' dataset consists of a total of seven fully annotated sequences (12730 frames) and is made publicly available as part of this work. Together with this dataset, annotations of 6 sequences from the ChokePoint dataset are also provided.

Device (In)Dependence of Deep Learning-based Image Age Approximation

Apr 18, 2024

Abstract: The goal of temporal image forensics is to approximate the age of a digital image relative to images from the same device. Usually, this is based on traces left during the image acquisition pipeline. For example, several methods exist that exploit the presence of in-field sensor defects for this purpose. In addition to these 'classical' methods, there is also an approach in which a Convolutional Neural Network (CNN) is trained to approximate the image age. One advantage of a CNN is that it independently learns the age features used. This would make it possible to exploit other (different) age traces in addition to the known ones (i.e., in-field sensor defects). In a previous work, we have shown that the presence of strong in-field sensor defects is irrelevant for a CNN to predict the age class. Based on this observation, the question arises how device (in)dependent the learned features are. In this work, we empirically assess this by training a network on images from a single device and then applying the trained model to images from different devices. This evaluation is performed on 14 different devices, including 10 devices from the publicly available 'Northumbria Temporal Image Forensics' database. These 10 devices form five device pairs (i.e., both devices in a pair are of the identical camera model).

Exploring Deep Learning Image Super-Resolution for Iris Recognition

Nov 02, 2023

Abstract: In this work we test the ability of deep learning methods to provide an end-to-end mapping between low and high resolution images and apply it to the iris recognition problem. Here, we propose the use of two deep learning single-image super-resolution approaches: Stacked Auto-Encoders (SAE) and Convolutional Neural Networks (CNN), with the most lightweight structure possible, to achieve fast speed, preserve local information and reduce artifacts at the same time. We validate the methods on a database of 1,872 near-infrared iris images with quality assessment and recognition experiments, showing the superiority of deep learning approaches over the compared algorithms.

Content Bias in Deep Learning Age Approximation: A new Approach Towards more Explainability

Oct 03, 2023

Abstract: In the context of temporal image forensics, it is not evident that a neural network trained on images from different time-slots (classes) exploits solely age-related features. Usually, images taken in close temporal proximity (e.g., belonging to the same age class) share some common content properties. Such content bias can be exploited by a neural network. In this work, a novel approach that evaluates the influence of image content is proposed. This approach is verified using synthetic images (where content bias can be ruled out) with an age signal embedded. Based on the proposed approach, it is shown that a 'standard' neural network trained in the context of age classification is strongly dependent on image content. As a potential countermeasure, two different techniques are applied to mitigate the influence of the image content during training, and they are also evaluated by the proposed method.

Deep Learning in the Field of Biometric Template Protection: An Overview

Mar 05, 2023

Abstract: Today, deep learning represents the most popular and successful form of machine learning. Deep learning has revolutionised the field of pattern recognition, including biometric recognition. Biometric systems utilising deep learning have been shown to achieve auspicious recognition accuracy, surpassing human performance. Apart from said breakthrough advances in terms of biometric performance, the use of deep learning was reported to impact different covariates of biometrics such as algorithmic fairness, vulnerability to attacks, or template protection. Technologies of biometric template protection are designed to enable a secure and privacy-preserving deployment of biometrics. In the recent past, deep learning techniques have been frequently applied in biometric template protection systems for various purposes. This work provides an overview of how advances in deep learning influence the field of biometric template protection. The interrelation between improved biometric performance rates and security in biometric template protection is elaborated. Further, the use of deep learning for obtaining feature representations that are suitable for biometric template protection is discussed. Novel methods that apply deep learning to achieve various goals of biometric template protection are surveyed along with deep learning-based attacks.

Advanced Image Quality Assessment for Hand- and Fingervein Biometrics

Feb 21, 2023

Abstract: Natural Scene Statistics commonly used in non-reference image quality measures and a deep learning based quality assessment approach are proposed as biometric quality indicators for vasculature images. While NIQE and BRISQUE, if trained on common images with usual distortions, do not work well for assessing the quality of vasculature pattern samples, their variants trained on high and low quality vasculature sample data behave as expected from a biometric quality estimator in most cases (deviations from the overall trend occur for certain datasets or feature extraction methods). The proposed deep learning based quality metric is capable of assigning the correct quality class to the vasculature pattern samples in most cases, independent of whether finger or hand vein patterns are being assessed. The experiments were conducted on a total of 13 publicly available finger and hand vein datasets and involve three distinct template representations (two of them especially designed for vascular biometrics). The proposed (trained) quality measures are compared to several classical quality metrics, with the achieved results underlining their promising behaviour.

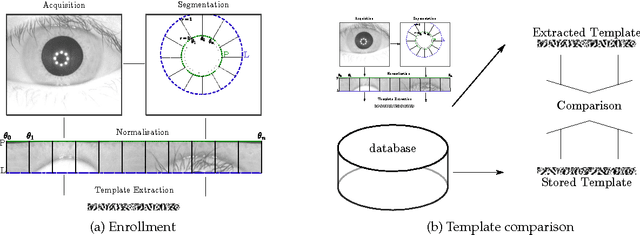

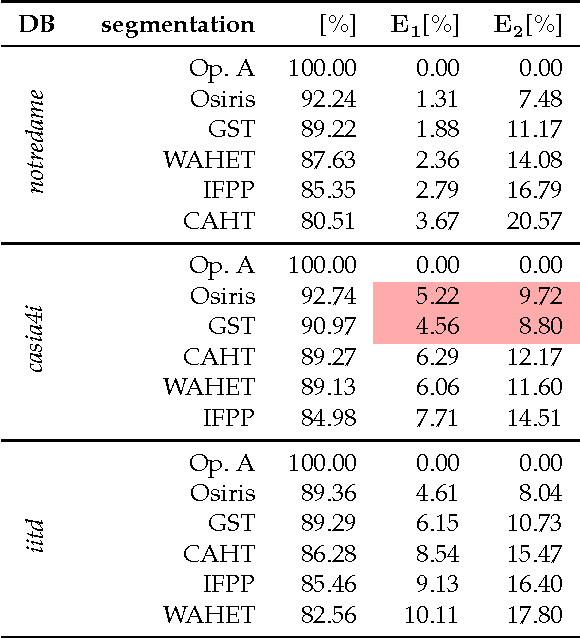

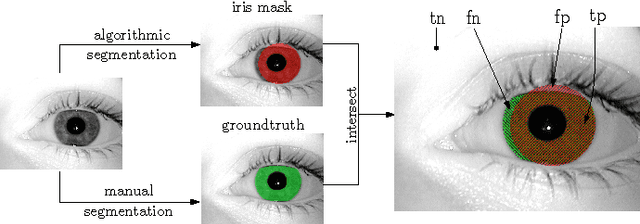

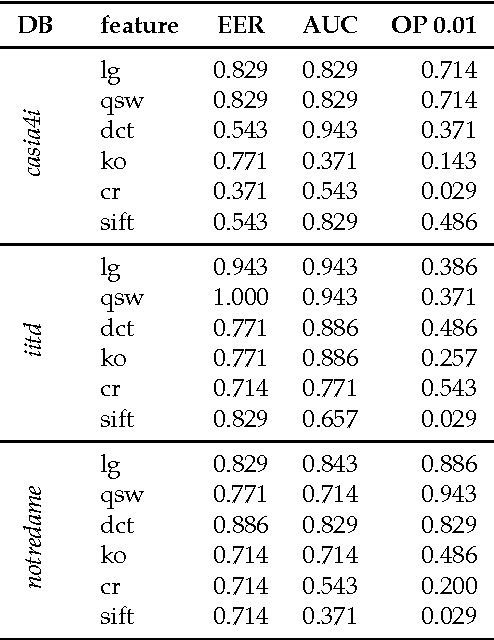

Experimental analysis regarding the influence of iris segmentation on the recognition rate

Nov 10, 2022

Abstract: In this study the authors will look at the detection and segmentation of the iris and its influence on the overall performance of the iris-biometric tool chain. The authors will examine whether the segmentation accuracy, based on conformance with a ground truth, can serve as a predictor for the overall performance of the iris-biometric tool chain. That is, if the segmentation accuracy is improved, will this always improve the overall performance? Furthermore, the authors will systematically evaluate the influence of segmentation parameters, pupillary and limbic boundary and normalisation centre (based on Daugman's rubbersheet model), on the rest of the iris-biometric tool chain. The authors will investigate if accurately finding these parameters is important and how consistency, that is, extracting the same exact region of the iris during segmentation, influences the overall performance.

Iris super-resolution using CNNs: is photo-realism important to iris recognition?

Oct 24, 2022

Abstract: The use of low-resolution images acquired under more relaxed conditions, such as from mobile phones and surveillance videos, is becoming increasingly common in iris recognition. Concurrently, a great variety of single image super-resolution techniques are emerging, especially with the use of convolutional neural networks (CNNs). The main objective of these methods is to recover finer texture details, generating more photo-realistic images based on the optimisation of an objective function that depends mainly on the CNN architecture and training approach. In this work, the authors explore single image super-resolution using CNNs for iris recognition. For this, they test different CNN architectures and use different training databases, validating their approach on a database of 1,872 near-infrared iris images and on a mobile phone image database. They also use quality assessment, visual results and recognition experiments to verify whether the photo-realism provided by the CNNs, which have already proven to be effective for natural images, translates into a better recognition rate for iris recognition. The results show that using deeper architectures trained with texture databases that provide a balance between edge preservation and the smoothness of the method can lead to good results in the iris recognition process.

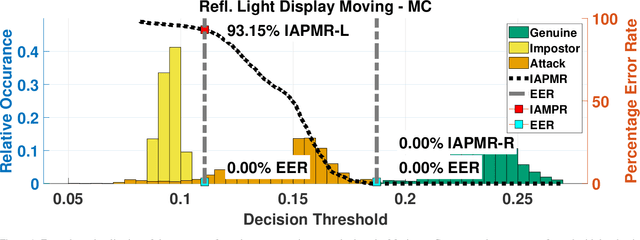

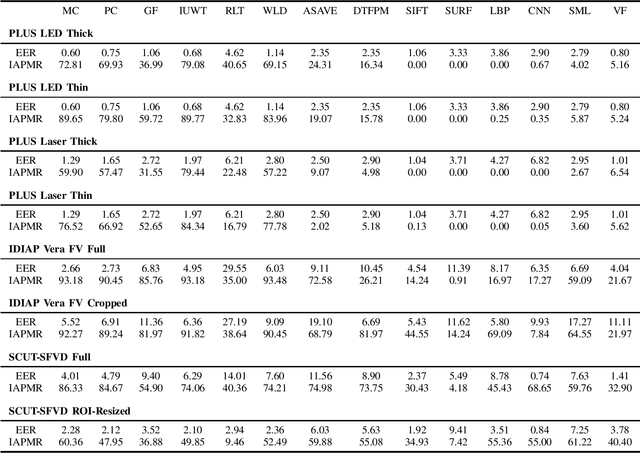

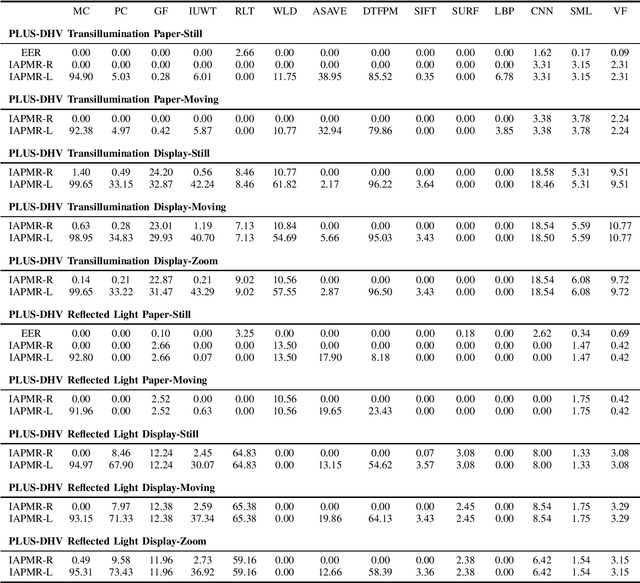

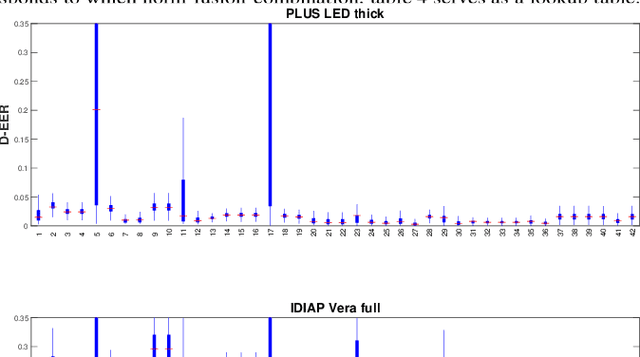

Extensive Threat Analysis of Vein Attack Databases and Attack Detection by Fusion of Comparison Scores

Mar 16, 2022

Abstract: The last decade has brought forward many great contributions regarding presentation attack detection for the domain of finger and hand vein biometrics. Among those contributions, one is able to find a variety of different attack databases that are either private or made publicly available to the research community. However, it is not always shown whether the used attack samples hold the capability to actually deceive a realistic vein recognition system. Inspired by previous works, this study provides a systematic threat evaluation including three publicly available finger vein attack databases and one private dorsal hand vein database. To do so, 14 distinct vein recognition schemes are confronted with attack samples and the percentage of wrongly accepted attack samples is then reported as the Impostor Attack Presentation Match Rate. As a second step, comparison scores from different recognition schemes are combined using score level fusion with the goal of performing presentation attack detection.
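
As a rough illustration of the metric named in the abstract above, the Impostor Attack Presentation Match Rate can be sketched as the share of attack samples that a recognition scheme wrongly accepts. The function name `iapmr`, the convention that higher comparison scores mean greater similarity, and the decision threshold are assumptions made for this sketch; they are not taken from the paper.

```python
from typing import Sequence


def iapmr(attack_scores: Sequence[float], threshold: float) -> float:
    """Return the percentage of attack samples accepted at the given threshold.

    Assumption: a comparison score at or above `threshold` counts as a match,
    i.e. higher scores mean greater similarity.
    """
    if not attack_scores:
        raise ValueError("at least one attack score is required")
    # Count attack presentations that the system would wrongly accept.
    accepted = sum(1 for score in attack_scores if score >= threshold)
    return 100.0 * accepted / len(attack_scores)


# Hypothetical scores for four attack samples against one recognition scheme.
example_rate = iapmr([0.1, 0.9, 0.8, 0.3], threshold=0.5)
```

In practice the threshold would typically be fixed beforehand on bona fide comparisons (for example at a chosen operating point), so that the rate reflects how the system would behave under attack.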
