Andrea Virgilio Monti-Guarnieri

Cooperative Coherent Multistatic Imaging and Phase Synchronization in Networked Sensing

Nov 13, 2023

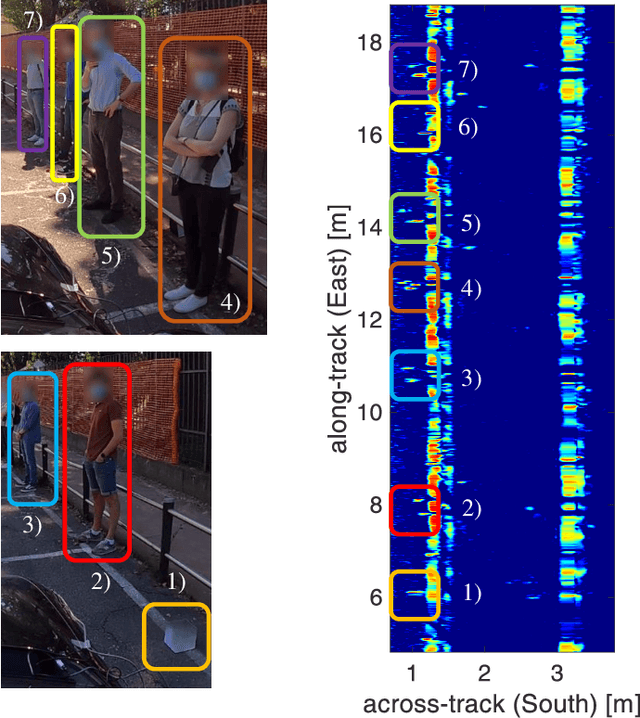

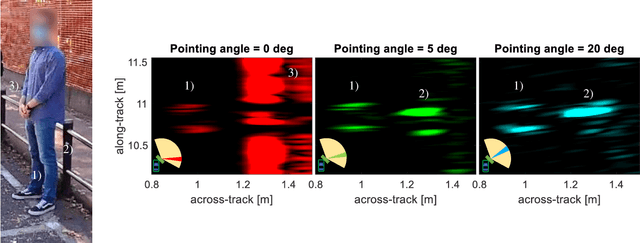

Abstract: Coherent multistatic radio imaging represents a pivotal opportunity for forthcoming wireless networks, in which distributed nodes cooperate to achieve accurate sensing resolution and robustness. This paper delves into cooperative coherent imaging for vehicular radar networks, where multiple radar-equipped vehicles cooperate to improve collective sensing capabilities and address the fundamental issue of distinguishing weak targets in close proximity to strong ones, a critical challenge for the protection of vulnerable road users. We prove the significant benefits of cooperative coherent imaging in the considered automotive scenario in terms of both the probability of correct detection, evaluated over several system parameters, and resolution capabilities, showcased by a dedicated experimental campaign in which the collaboration between two vehicles enables the detection of the legs of a pedestrian close to a parked car. Moreover, since *coherent* processing of several sensors' data requires very tight accuracy on clock synchronization and sensor positioning (referred to as *phase synchronization*), so as to predict sensor-target distances up to a fraction of the carrier wavelength, we present a general three-step cooperative multistatic phase synchronization procedure, detailing the required information exchange among vehicles in the specific automotive radar context and assessing its feasibility and performance by means of the hybrid Cramér-Rao bound.
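To give a sense of scale for "a fraction of the carrier wavelength", the minimal sketch below converts a carrier-phase error budget into a clock-offset tolerance and a path-length tolerance. It assumes a 77 GHz carrier (the band used in the experiments listed further down; the abstract above does not state it) and a budget of π/4 rad, i.e. one eighth of a wavelength. Both numbers and all helper names are illustrative assumptions, and the model keeps only the carrier phase term 2π f_c Δt, ignoring every other effect of a clock offset.

```python
import math

C = 299_792_458.0  # speed of light in vacuum [m/s]


def wavelength(fc_hz: float) -> float:
    """Carrier wavelength [m]."""
    return C / fc_hz


def phase_from_clock_offset(dt_s: float, fc_hz: float) -> float:
    """Carrier phase error [rad] from a residual time offset dt (phase = 2*pi*fc*dt)."""
    return 2.0 * math.pi * fc_hz * dt_s


def phase_from_path_error(dr_m: float, fc_hz: float) -> float:
    """Carrier phase error [rad] from an error dr on the Tx -> target -> Rx path length."""
    return 2.0 * math.pi * dr_m / wavelength(fc_hz)


def sync_tolerances(fc_hz: float, phase_budget_rad: float) -> tuple[float, float]:
    """Largest clock offset [s] and path-length error [m] that stay within the phase budget."""
    dt_max = phase_budget_rad / (2.0 * math.pi * fc_hz)
    dr_max = phase_budget_rad * wavelength(fc_hz) / (2.0 * math.pi)
    return dt_max, dr_max


if __name__ == "__main__":
    fc = 77e9  # assumed carrier frequency [Hz]
    dt_max, dr_max = sync_tolerances(fc, math.pi / 4)  # assumed budget: lambda / 8
    print(f"wavelength      = {wavelength(fc) * 1e3:.3f} mm")
    print(f"max clock error = {dt_max * 1e12:.2f} ps")
    print(f"max path error  = {dr_max * 1e3:.3f} mm")
```

Under these assumptions the wavelength is about 3.9 mm, so the budget corresponds to roughly 1.6 ps of clock offset or about 0.49 mm of path-length error. This illustrates why phase synchronization calls for a dedicated procedure rather than ordinary positioning.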

Wavefield Networked Sensing: Principles, Algorithms and Applications

May 17, 2023

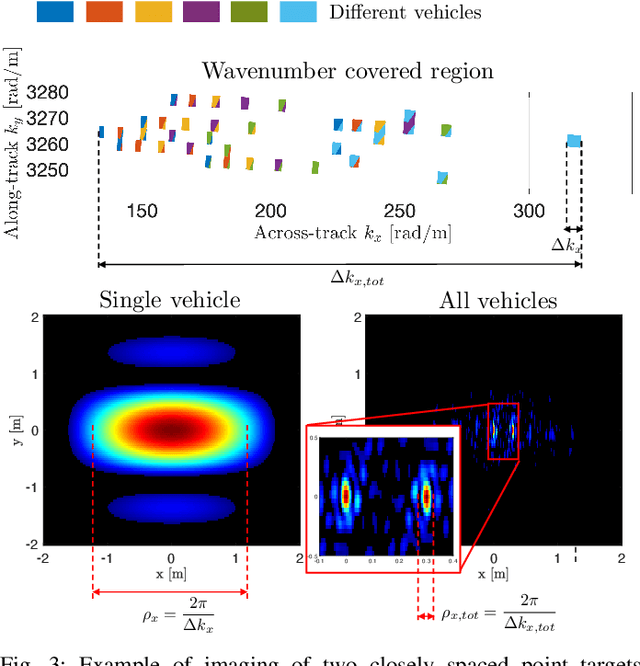

Abstract: Networked sensing refers to the capability of properly orchestrating multiple sensing terminals to enhance specific figures of merit, e.g., positioning accuracy or imaging resolution. For radio-based sensing, it is essential to understand *when* and *how* sensing terminals should be orchestrated, namely the best cooperation that trades off performance against cost (e.g., energy consumption, communication overhead, and complexity). This paper addresses networked sensing from a physics-driven perspective, aiming to provide a general theoretical benchmark to evaluate its *imaging* performance bounds and to guide the sensing orchestration accordingly. Diffraction tomography theory (DTT) is the tool for quantifying the imaging resolution of any radio sensing experiment from inspection of its spectral (or wavenumber) content. In networked sensing, image formation is based on the back-projection integral, which is valid for any network topology and physical configuration of the terminals. *Wavefield networked sensing* is a framework in which multiple sensing terminals are orchestrated during the acquisition process to maximize imaging quality (resolution and grating-lobe suppression) by pursuing the deceptively simple *wavenumber tessellation principle*. We discuss all the cooperation possibilities between sensing terminals and possible killer applications. Remarkably, we show that the proposed method yields high-quality images of the environment under limited-bandwidth conditions by coherently combining multiple low-resolution multistatic images.
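The minimal sketch below shows the diffraction-tomography view of a single Tx/Rx pair. It assumes free-space propagation, a point p in the scene, and the usual far-field convention in which the pair probes the wavenumber vector k0 (u_t + u_r), where u_t and u_r are the unit vectors from p towards the transmitter and the receiver. The magnitude of this vector is 2 k0 cos(β/2), with β the bistatic angle. A bandwidth B therefore spans a wavenumber extent of (2πB/c) · 2 cos(β/2) along the bisector, which gives a resolution of c / (2B cos(β/2)). Different pairs probe differently oriented wavenumber regions, which is the intuition behind tessellating the wavenumber domain. The function names and numbers are hypothetical.

```python
import math

C = 299_792_458.0  # speed of light [m/s]


def _unit(v):
    n = math.hypot(*v)
    return tuple(x / n for x in v)


def _los(terminal, p):
    """Unit vector from the scene point p towards a terminal."""
    return _unit(tuple(t - q for t, q in zip(terminal, p)))


def bistatic_wavenumber(tx, rx, p, f_hz):
    """Wavenumber vector [rad/m] probed at p by the (tx, rx) pair at frequency f."""
    k0 = 2.0 * math.pi * f_hz / C
    ut, ur = _los(tx, p), _los(rx, p)
    return tuple(k0 * (a + b) for a, b in zip(ut, ur))


def bistatic_range_resolution(tx, rx, p, bandwidth_hz):
    """Resolution [m] along the bistatic bisector: c / (2 B cos(beta / 2))."""
    ut, ur = _los(tx, p), _los(rx, p)
    two_cos_half_beta = math.hypot(*(a + b for a, b in zip(ut, ur)))
    if two_cos_half_beta < 1e-12:
        raise ValueError("forward-scatter geometry (beta = 180 deg): no range resolution")
    return C / (bandwidth_hz * two_cos_half_beta)


if __name__ == "__main__":
    p = (0.0, 0.0)
    B = 1e9  # assumed bandwidth [Hz]
    mono = bistatic_range_resolution((10.0, 0.0), (10.0, 0.0), p, B)
    bi = bistatic_range_resolution((10.0, 0.0), (0.0, 10.0), p, B)
    print(f"monostatic: {mono * 100:.1f} cm, 90-degree bistatic: {bi * 100:.1f} cm")
```

With these assumed numbers, the monostatic pair resolves about 15 cm along its line of sight. The 90-degree bistatic pair resolves about 21 cm, but along a different direction, so it fills a different patch of the wavenumber domain.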

Motion Estimation and Compensation in Automotive MIMO SAR

Jan 25, 2022

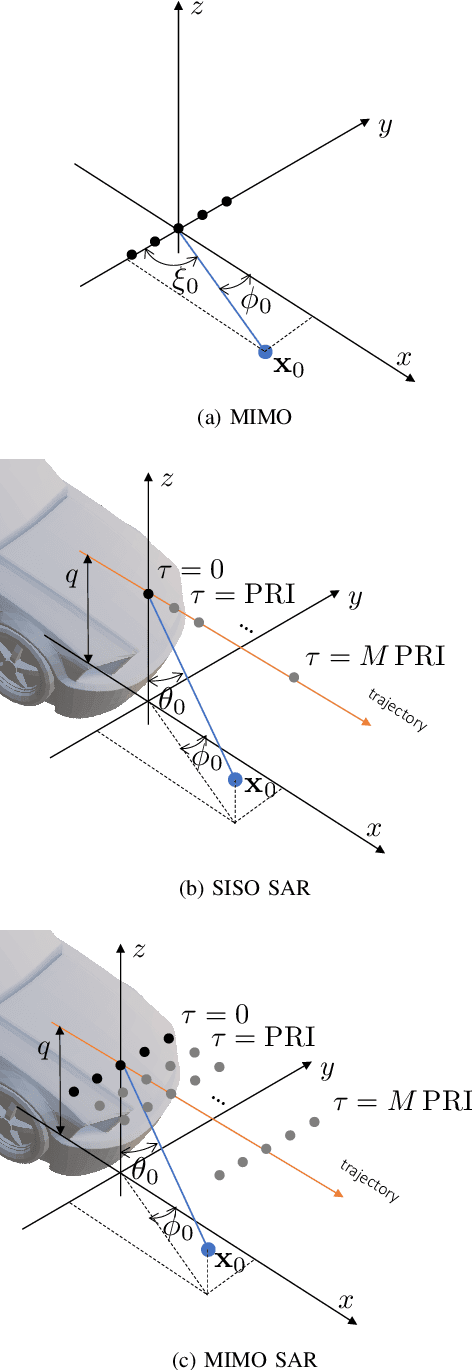

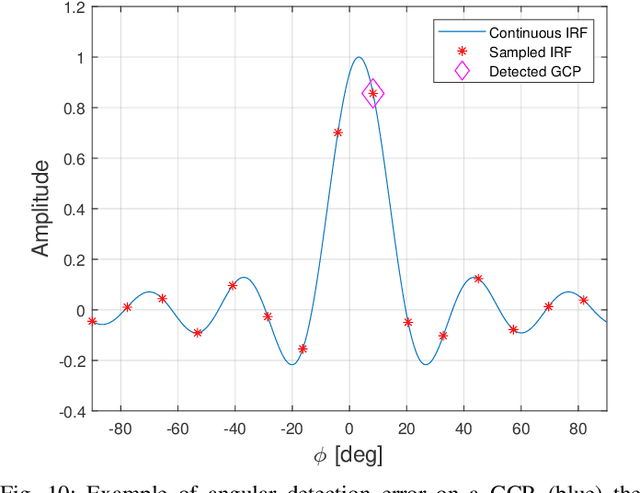

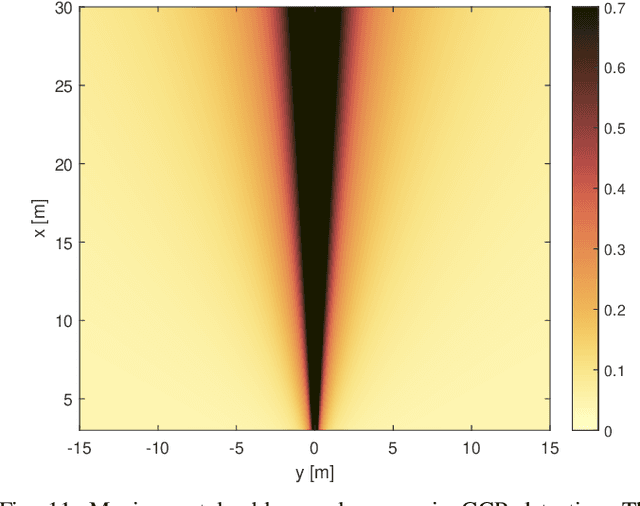

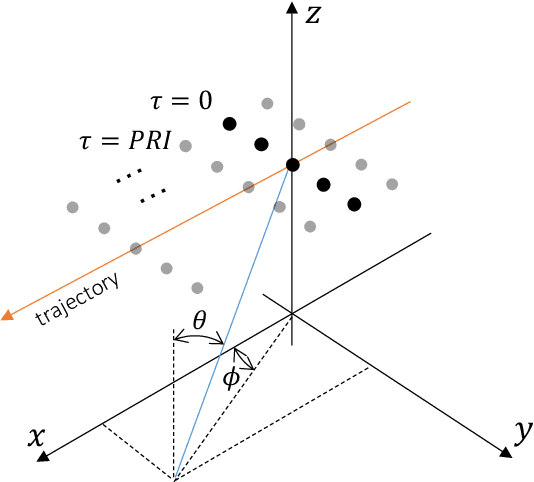

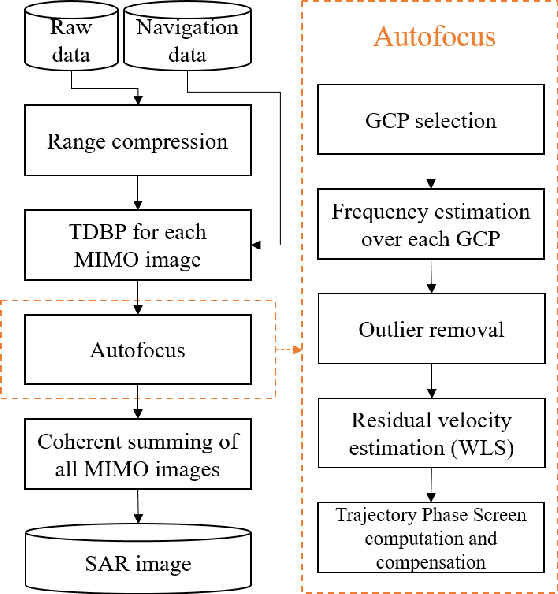

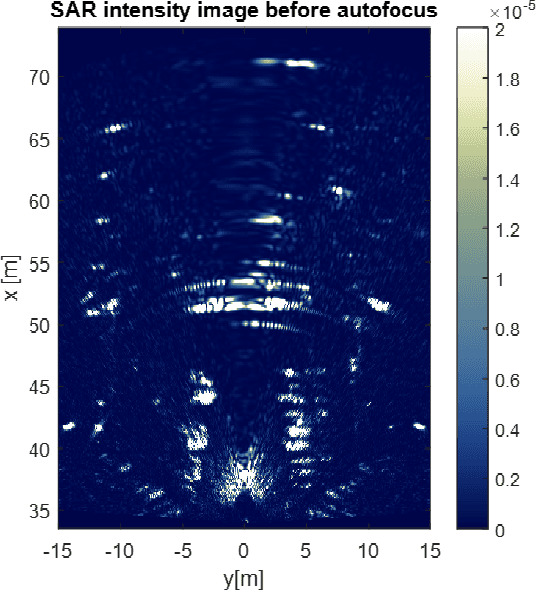

Abstract: With the advent of self-driving vehicles, autonomous driving systems will have to rely on a vast number of heterogeneous sensors to perform dynamic perception of the surrounding environment. Synthetic Aperture Radar (SAR) systems increase the resolution of conventional mass-market radars by exploiting the vehicle's ego-motion, but they require very accurate knowledge of the trajectory, usually beyond what automotive-grade navigation systems can provide. This paper therefore deals with the analysis, estimation, and compensation of trajectory estimation errors in automotive SAR systems, proposing a complete residual motion estimation and compensation workflow. We start by defining the acquisition geometry and the basic processing steps of Multiple-Input Multiple-Output (MIMO) SAR systems. Then, we analytically derive the effects of typical motion errors in automotive SAR imaging. Based on the derived models, we detail the procedure and outline guidelines for its practical implementation. We show the effectiveness of the proposed technique by means of experimental data gathered by a 77 GHz radar mounted in a forward-looking configuration.
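As a first-order illustration of why trajectory knowledge matters, consider a straight trajectory and a stationary target at angle θ from the direction of motion, with the focusing using a navigation speed v_nav instead of the true speed v. The target's Doppler, 2 v cos θ / λ, is then matched at an angle θ' with cos θ' = (v / v_nav) cos θ, so the target is displaced in angle. Defocusing from higher-order terms is ignored. This is a simple Doppler-cone argument, not the analytical model derived in the paper, and the function name is hypothetical.

```python
import math


def apparent_angle(theta_rad: float, v_true: float, v_nav: float):
    """Angle [rad] at which a stationary target at theta appears when focusing uses v_nav.

    First-order Doppler-cone model for a straight trajectory:
    cos(theta') = (v_true / v_nav) * cos(theta). The wavelength cancels out.
    Returns None when no real angle matches the observed Doppler.
    """
    c = (v_true / v_nav) * math.cos(theta_rad)
    if abs(c) > 1.0:
        return None
    return math.acos(c)


if __name__ == "__main__":
    v = 10.0      # assumed true speed [m/s]
    v_nav = 10.1  # assumed navigation speed, 1% too high
    for deg in (5, 20, 45, 90):
        th = math.radians(deg)
        th_app = apparent_angle(th, v, v_nav)
        print(f"{deg:>3} deg -> {math.degrees(th_app):7.3f} deg")
```

Linearizing the model gives an angular displacement of about (Δv / v) cot θ, with Δv = v_nav - v. The error therefore vanishes at broadside and grows towards the direction of motion, which is where a forward-looking radar operates.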

Residual Motion Compensation in Automotive MIMO SAR Imaging

Oct 28, 2021

Abstract: This paper deals with the analysis, estimation, and compensation of trajectory errors in automotive Synthetic Aperture Radar (SAR) systems. First, we define the acquisition geometry and the model of the received signal. We then analytically evaluate the effect of an error in the vehicle's trajectory. Based on the derived model, we introduce a motion compensation (MoCo) procedure capable of estimating and compensating constant-velocity motion errors, leading to a well-focused and well-localized SAR image. The procedure is validated using real data gathered by a 77 GHz automotive SAR with MIMO capabilities.
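One simple way to picture the estimation of a constant-velocity error is a least-squares fit on stationary scatterers. After compensation with the navigation velocity, a stationary target seen along the unit line of sight u_i (pointing from the radar to the target, at angle φ_i) retains a residual Doppler of 2 (Δv · u_i) / λ, where Δv is the velocity error. Stacking several such targets gives a linear system in the two components of Δv. This is an illustrative sketch under stated assumptions (2-D geometry, known directions, stationary targets, noise-free data, 77 GHz carrier). It is not the MoCo procedure of the paper, and the function name is hypothetical.

```python
import math

C = 299_792_458.0  # speed of light [m/s]


def estimate_velocity_error(residual_doppler_hz, los_angles_rad, fc_hz):
    """Least-squares estimate of a constant 2-D velocity error (dvx, dvy) [m/s].

    Model per stationary target i:
        f_i = (2 / lambda) * (dvx * cos(phi_i) + dvy * sin(phi_i)).
    Solves the 2x2 normal equations in closed form.
    """
    lam = C / fc_hz
    a11 = a12 = a22 = b1 = b2 = 0.0
    for f, phi in zip(residual_doppler_hz, los_angles_rad):
        ax, ay = 2.0 * math.cos(phi) / lam, 2.0 * math.sin(phi) / lam
        a11 += ax * ax
        a12 += ax * ay
        a22 += ay * ay
        b1 += ax * f
        b2 += ay * f
    det = a11 * a22 - a12 * a12
    if det <= 1e-12 * a11 * a22:
        raise ValueError("line-of-sight angles too similar to resolve both components")
    return (a22 * b1 - a12 * b2) / det, (a11 * b2 - a12 * b1) / det


if __name__ == "__main__":
    fc = 77e9                # carrier frequency [Hz], as in the abstract
    true_dv = (0.20, -0.05)  # assumed velocity error [m/s]
    angles = [math.radians(a) for a in (-40, -15, 0, 10, 30, 50)]
    lam = C / fc
    doppler = [2.0 / lam * (true_dv[0] * math.cos(p) + true_dv[1] * math.sin(p)) for p in angles]
    print(estimate_velocity_error(doppler, angles, fc))
```

The angular spread of the scatterers matters. If all targets lie along nearly the same direction, only the velocity component along that direction is observable, and the sketch refuses to return an estimate.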

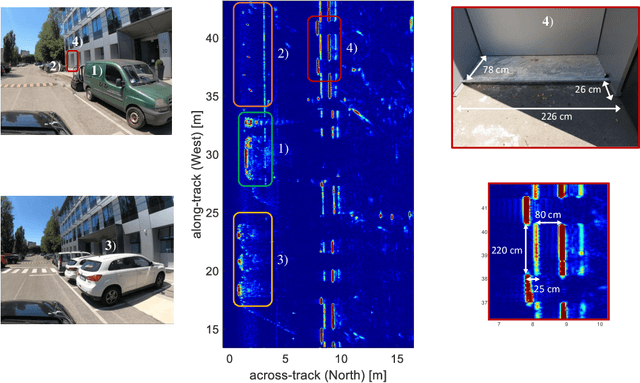

Multi-Beam Automotive SAR Imaging in Urban Scenarios

Oct 28, 2021

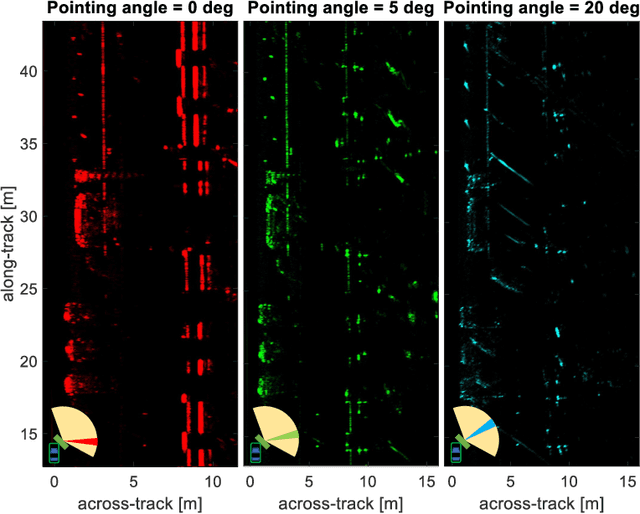

Abstract: Automotive synthetic aperture radar (SAR) systems are rapidly emerging as a candidate technological solution to enable high-resolution environment mapping for autonomous driving. Compared to lidars and cameras, legacy automotive radars can work in any weather condition and without an external source of illumination, but they are limited in either range or angular resolution. SAR offers a significant increase in angular resolution, provided that the ego-motion of the radar platform is known along the synthetic aperture. In this paper, we present the results of an experimental campaign aimed at assessing the potential of multi-beam SAR imaging in an urban scenario composed of various targets (buildings, cars, pedestrians, etc.), employing a 77 GHz multiple-input multiple-output (MIMO) radar platform based on mass-market automotive-grade technology. The results highlight centimeter-level accuracy of the SAR images in realistic driving conditions, showing that a multi-angle focusing approach can detect and discriminate between different targets based on their angular scattering response.
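To connect aperture length with centimeter-level figures, the sketch below uses the standard SAR cross-range resolution ρ ≈ λ / (4 sin(Δθ/2)), where Δθ is the angular span over which a target is observed. For a short aperture of length L at range R, this reduces to about λR / (2L). The sketch then splits the aperture into equal sub-apertures, each seeing the target from a different look angle, as a simple stand-in for multi-angle focusing. The straight trajectory, broadside target, and all numbers are illustrative assumptions, and the function names are hypothetical.

```python
import math

C = 299_792_458.0  # speed of light [m/s]


def cross_range_resolution(fc_hz: float, angular_span_rad: float) -> float:
    """Cross-range resolution [m] for a target observed over the given angular span."""
    lam = C / fc_hz
    return lam / (4.0 * math.sin(angular_span_rad / 2.0))


def sub_aperture_looks(aperture_m: float, range_m: float, n_beams: int):
    """(look angle, angular span) [rad] of n equal sub-apertures for a broadside target.

    The radar moves along x from -L/2 to +L/2; the target sits at (0, range_m).
    Look angles are measured from broadside.
    """
    edges = [-aperture_m / 2 + k * aperture_m / n_beams for k in range(n_beams + 1)]
    angles = [math.atan2(x, range_m) for x in edges]
    return [((a0 + a1) / 2.0, a1 - a0) for a0, a1 in zip(angles, angles[1:])]


if __name__ == "__main__":
    fc, L, R = 77e9, 1.0, 10.0  # assumed carrier, aperture length, range
    for n in (1, 4):
        for look, span in sub_aperture_looks(L, R, n):
            res = cross_range_resolution(fc, span)
            print(f"n={n}: look {math.degrees(look):+6.2f} deg, resolution {res * 100:.2f} cm")
```

With a 1 m aperture at 10 m, the full aperture gives roughly 2 cm. Each of four sub-apertures gives a coarser resolution, roughly four times worse, but observes the target from a distinct angle. That distinct view is what allows targets to be discriminated by their angular scattering response.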
