Anchen Sun

Deep Semi-Supervised Survival Analysis for Predicting Cancer Prognosis

Jan 28, 2026

Abstract:The Cox Proportional Hazards (PH) model is widely used in survival analysis. Recently, artificial neural network (ANN)-based Cox-PH models have been developed. However, training these Cox models with high-dimensional features typically requires a substantial number of labeled samples containing time-to-event information. The limited availability of labeled training data often constrains the performance of ANN-based Cox models. To address this issue, we employed a deep semi-supervised learning (DSSL) approach to develop single- and multi-modal ANN-based Cox models based on the Mean Teacher (MT) framework, which utilizes both labeled and unlabeled data for training. We applied our model, named Cox-MT, to predict the prognosis of several types of cancer using data from The Cancer Genome Atlas (TCGA). Our single-modal Cox-MT models, utilizing TCGA RNA-seq data or whole slide images, significantly outperformed the existing ANN-based Cox model, Cox-nnet, on the same data set across the four types of cancer considered. For a given set of labeled data, the performance of Cox-MT improved significantly as the number of unlabeled samples increased. Furthermore, our multi-modal Cox-MT model demonstrated considerably better performance than the single-modal model. In summary, the Cox-MT model effectively leverages both labeled and unlabeled data to significantly enhance prediction accuracy compared to existing ANN-based Cox models trained solely on labeled data.
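The abstract names two ingredients: a Cox loss on labeled samples and a Mean Teacher scheme that also uses unlabeled samples. The sketch below illustrates the generic forms of both in plain Python. It is not the Cox-MT implementation: the function names, the `alpha` decay value, and the squared-error consistency term are assumptions chosen for illustration.

```python
import math

def cox_neg_log_partial_likelihood(risk_scores, times, events):
    """Average negative Cox partial log-likelihood over observed events.

    risk_scores: predicted log hazard ratios, one per labeled sample
    times:       observed follow-up times
    events:      1 if the event was observed, 0 if the sample is censored
    """
    loss, n_events = 0.0, 0
    for i, (t_i, e_i) in enumerate(zip(times, events)):
        if not e_i:
            continue  # censored samples only appear in risk sets
        # Risk set: every sample still under observation at time t_i.
        log_sum = math.log(
            sum(math.exp(r) for r, t in zip(risk_scores, times) if t >= t_i)
        )
        loss -= risk_scores[i] - log_sum
        n_events += 1
    return loss / max(n_events, 1)

def ema_update(teacher_weights, student_weights, alpha=0.99):
    """Mean Teacher step: teacher weights track an exponential moving
    average of the student weights (alpha is an assumed decay rate)."""
    return [alpha * t + (1 - alpha) * s
            for t, s in zip(teacher_weights, student_weights)]

def consistency_loss(student_outputs, teacher_outputs):
    """Mean squared difference between student and teacher predictions.
    It needs no labels, so it can be computed on unlabeled samples."""
    n = len(student_outputs)
    return sum((s - t) ** 2 for s, t in zip(student_outputs, teacher_outputs)) / n
```

In a training loop of this kind, the student would typically minimize the Cox loss on labeled samples plus a weighted consistency loss on all samples, with `ema_update` applied to the teacher after each student step.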

Who Said What WSW 2.0? Enhanced Automated Analysis of Preschool Classroom Speech

May 15, 2025

Abstract:This paper introduces an automated framework, WSW2.0, for analyzing vocal interactions in preschool classrooms, enhancing both accuracy and scalability through the integration of wav2vec2-based speaker classification and Whisper (large-v2 and large-v3) speech transcription. A total of 235 minutes of audio recordings (160 minutes from 12 children and 75 minutes from 5 teachers) were used to compare system outputs to expert human annotations. WSW2.0 achieves a weighted F1 score of .845, accuracy of .846, and an error-corrected kappa of .672 for speaker classification (child vs. teacher). Transcription quality is moderate to high, with word error rates of .119 for teachers and .238 for children. WSW2.0 exhibits relatively high absolute agreement intraclass correlations (ICCs) with expert transcriptions for a range of classroom language features. These include teacher and child mean utterance length, lexical diversity, question asking, and responses to questions and other utterances, with ICCs between .64 and .98. To establish scalability, we apply the framework to an extensive dataset spanning two years and over 1,592 hours of classroom audio recordings, demonstrating the framework's robustness for broad real-world applications. These findings highlight the potential of deep learning and natural language processing techniques to revolutionize educational research by providing accurate measures of key features of preschool classroom speech, ultimately guiding more effective intervention strategies and supporting early childhood language development.
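To make the speaker-classification agreement statistic concrete, the sketch below computes Cohen's kappa for two label sequences. It assumes the kappa reported in the abstract is the standard chance-corrected Cohen's kappa; the function name and the toy labels are illustrative and do not come from the paper.

```python
from collections import Counter

def cohens_kappa(labels_a, labels_b):
    """Chance-corrected agreement between two equal-length label sequences."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("label sequences must be non-empty and equal in length")
    n = len(labels_a)
    # Observed agreement: fraction of items given the same label by both raters.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Expected agreement if the raters labeled independently at their own base rates.
    counts_a, counts_b = Counter(labels_a), Counter(labels_b)
    expected = sum(counts_a[c] * counts_b[c] for c in counts_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both raters used a single identical label throughout
    return (observed - expected) / (1 - expected)

# Toy example: expert annotations vs. system output for four utterances.
expert = ["child", "child", "teacher", "teacher"]
system = ["child", "teacher", "teacher", "teacher"]
kappa = cohens_kappa(expert, system)
```

Here the raters agree on three of four utterances (.75), but half of that agreement is expected by chance, which is why kappa is lower than raw accuracy.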

Who Said What? An Automated Approach to Analyzing Speech in Preschool Classrooms

Jan 14, 2024

Abstract:Young children spend substantial portions of their waking hours in noisy preschool classrooms. In these environments, children's vocal interactions with teachers are critical contributors to their language outcomes, but manually transcribing these interactions is prohibitive. Using audio from child- and teacher-worn recorders, we propose an automated framework that uses open source software both to classify speakers (ALICE) and to transcribe their utterances (Whisper). We compare results from our framework to those from a human expert for 110 minutes of classroom recordings, including 85 minutes from child-worn microphones (n=4 children) and 25 minutes from teacher-worn microphones (n=2 teachers). The overall proportion of agreement, that is, the proportion of correctly classified teacher and child utterances, was .76, with an error-corrected kappa of .50 and a weighted F1 of .76. The word error rate for both teacher and child transcriptions was .15, meaning that 15% of words would need to be deleted, added, or changed to equate the Whisper and expert transcriptions. Moreover, speech features such as the mean length of utterances in words, the proportion of teacher and child utterances that were questions, and the proportion of utterances that were responded to within 2.5 seconds were similar when calculated separately from expert and automated transcriptions. The results suggest substantial progress in analyzing classroom speech that may support children's language development. Future research using natural language processing is underway to improve speaker classification and to analyze results from the application of the automated framework to a larger dataset containing classroom recordings from 13 children and 4 teachers observed on 17 occasions over one year.
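The word error rate described above (the share of words that must be deleted, added, or changed) is conventionally computed as a word-level edit distance divided by the length of the reference transcript. The following is a minimal sketch of that standard definition; the function name, whitespace tokenization, and example sentences are assumptions, and the paper's own text normalization may differ.

```python
def word_error_rate(reference, hypothesis):
    """Word-level Levenshtein distance divided by the reference length."""
    ref, hyp = reference.split(), hypothesis.split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")
    # prev[j] holds the edit distance between the reference words processed
    # so far and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = prev[j - 1] + (ref_word != hyp_word)
            deletion = prev[j] + 1       # reference word missing from hypothesis
            insertion = curr[j - 1] + 1  # extra word in hypothesis
            curr.append(min(substitution, deletion, insertion))
        prev = curr
    return prev[-1] / len(ref)

# One substitution ("sat" -> "sit") and one deletion ("the") over six words.
wer = word_error_rate("the cat sat on the mat", "the cat sit on mat")
```

Because insertions count as errors while the denominator is the reference length, this measure can exceed 1.0 when the hypothesis is much longer than the reference.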

Dynamic Analysis of Corporate ESG Reports: A Model of Evolutionary Trends

Sep 13, 2023

Abstract:Environmental, social, and governance (ESG) reports are globally recognized as a keystone in sustainable enterprise development. This study aims to map the changing landscape of ESG topics within firms in the global market. A dynamic framework is developed to analyze ESG strategic management for individual classes, across multiple classes, and in alignment with a specific sustainability index. The output of these analytical processes forms the foundation of an ESG strategic model. Utilizing a rich collection of 21st-century ESG reports from technology companies, our experiment elucidates the changes in ESG perspectives by incorporating analytical keywords into the proposed framework. This work thus provides an empirical method that reveals the concurrent evolution of ESG topics over recent years.

Contrastive Learning for Predicting Cancer Prognosis Using Gene Expression Values

Jun 09, 2023

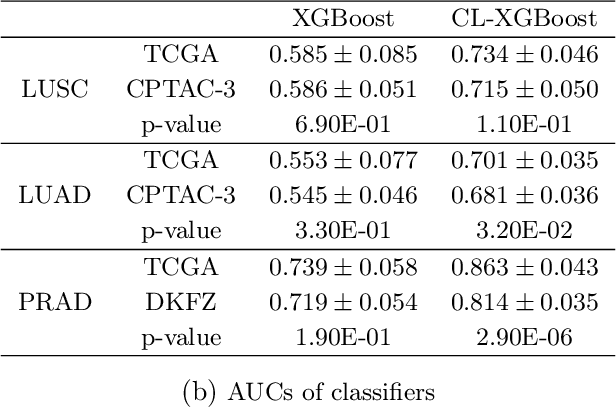

Abstract:Several artificial neural networks (ANNs) have recently been developed as the Cox proportional hazard model for predicting cancer prognosis based on tumor transcriptome. However, they have not demonstrated significantly better performance than the traditional Cox regression with regularization. Training an ANN with high prediction power is challenging in the presence of a limited number of data samples and a high-dimensional feature space. Recent advancements in image classification have shown that contrastive learning can facilitate further learning tasks by learning good feature representation from a limited number of data samples. In this paper, we applied supervised contrastive learning to tumor gene expression and clinical data to learn feature representations in a low-dimensional space. We then used these learned features to train the Cox model for predicting cancer prognosis. Using data from The Cancer Genome Atlas (TCGA), we demonstrated that our contrastive learning-based Cox model (CLCox) significantly outperformed existing methods in predicting the prognosis of 18 types of cancer under consideration. We also developed contrastive learning-based classifiers to classify tumors into different risk groups and showed that contrastive learning can significantly improve classification accuracy.
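As an illustration of the supervised contrastive objective mentioned above, the sketch below computes a loss of the commonly used form in which embeddings sharing a label are pulled together and all others are pushed apart. It is a plain-Python sketch and not the CLCox implementation: the use of risk-group labels, the `temperature` value, and the function name are assumptions.

```python
import math

def supervised_contrastive_loss(embeddings, labels, temperature=0.1):
    """Average supervised contrastive loss over anchors that have a positive."""
    # L2-normalize so that dot products are cosine similarities.
    normed = []
    for vec in embeddings:
        norm = math.sqrt(sum(x * x for x in vec))
        normed.append([x / norm for x in vec])

    n = len(normed)
    total, n_anchors = 0.0, 0
    for i in range(n):
        positives = [j for j in range(n) if j != i and labels[j] == labels[i]]
        if not positives:
            continue  # an anchor with no same-label partner contributes nothing
        # Scaled similarity of the anchor to every other sample.
        sims = {
            j: sum(a * b for a, b in zip(normed[i], normed[j])) / temperature
            for j in range(n) if j != i
        }
        log_denominator = math.log(sum(math.exp(s) for s in sims.values()))
        # Mean negative log-probability of picking each positive.
        total -= sum(sims[p] - log_denominator for p in positives) / len(positives)
        n_anchors += 1
    return total / max(n_anchors, 1)

# Toy 2-D embeddings with hypothetical risk-group labels.
points = [[1.0, 0.0], [1.0, 0.0], [0.0, 1.0], [0.0, 1.0]]
well_separated = supervised_contrastive_loss(points, [0, 0, 1, 1])
poorly_separated = supervised_contrastive_loss(points, [0, 1, 0, 1])
```

The loss is near zero when same-label samples coincide and other samples are orthogonal, and it is large when each sample's nearest neighbor carries a different label, which is the property that makes the learned low-dimensional features useful for a downstream Cox model or classifier.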

UAV-Video-Based Rip Current Detection in Nearshore Areas

Apr 24, 2023

Abstract:Rip currents pose a significant danger to those who visit beaches, as they can swiftly pull swimmers away from shore. Detecting these currents currently relies on costly equipment and is challenging to implement on a larger scale. The advent of unmanned aerial vehicles (UAVs) and camera technology, however, has made monitoring near-shore regions more accessible and scalable. This paper proposes a new framework for detecting rip currents using video-based methods that leverage optical flow estimation, offshore direction calculation, and temporal data fusion techniques. Through the analysis of videos from multiple beaches, including Palm Beach, Haulover, Ocean Reef Park, and South Beach, as well as YouTube footage, we demonstrate the efficacy of our approach, which aligns with human experts' annotations.
