Ananth Hari

PettingZoo: Gym for Multi-Agent Reinforcement Learning

Sep 30, 2020

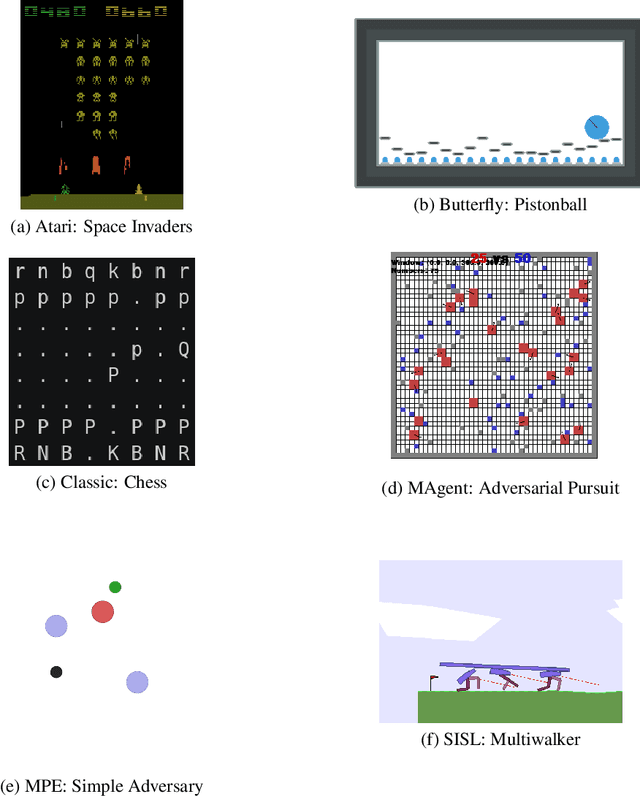

Abstract: OpenAI's Gym library contains a large, diverse set of environments that are useful benchmarks in reinforcement learning, under a single elegant Python API (with tools to develop new compliant environments). The introduction of this library has proven a watershed moment for the reinforcement learning community, because it created an accessible set of benchmark environments that everyone could use (including wrappers for important existing libraries), and because a standardized API let RL methods and environments from anywhere be trivially exchanged. This paper similarly introduces PettingZoo, a library of diverse sets of multi-agent environments under a single elegant Python API, with tools to easily make new compliant environments.

Agent Environment Cycle Games

Sep 28, 2020

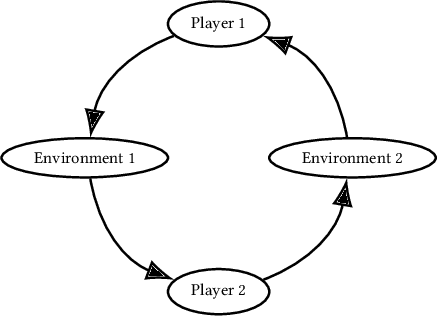

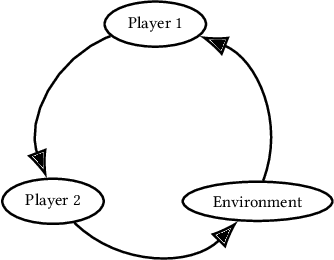

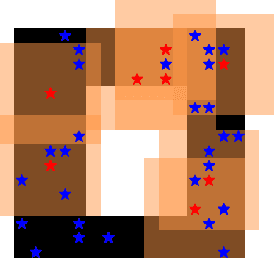

Abstract: Partially Observable Stochastic Games (POSGs) are the most general model of games used in Multi-Agent Reinforcement Learning (MARL), modeling actions and observations as happening simultaneously for all agents. We introduce Agent Environment Cycle Games (AEC Games), a model of games based on sequential agent actions and observations. AEC Games can be thought of as sequential versions of POSGs, and we prove that they are equally powerful. We argue conceptually and through case studies that the AEC Games model is useful in important scenarios in MARL for which the POSG model is not well suited. We additionally introduce "cyclically expansive curriculum learning," a new MARL curriculum learning method motivated by the AEC Games model. It can be applied "for free," and experimentally we show that this technique achieves up to 35.1% more total reward on average.
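To make the sequential idea concrete, here is a minimal sketch of an agent-by-agent interaction loop. It is not the formal AEC Games definition and not the PettingZoo API; `ToyAECGame`, `play`, and the counting game itself are hypothetical names and rules invented for illustration. Only one agent observes and acts at a time, and the environment updates between turns.

```python
from itertools import cycle


class ToyAECGame:
    """Hypothetical turn-based game: agents take turns adding 1 or 2 to a
    shared total; whoever reaches the target first gets a reward of 1."""

    def __init__(self, agents, target=5):
        self.agents = list(agents)
        self.target = target
        self.total = 0
        self.rewards = {agent: 0.0 for agent in self.agents}
        self._turns = cycle(self.agents)
        self.current_agent = next(self._turns)
        self.done = False

    def observe(self, agent):
        # Every agent sees the shared total when its turn comes up.
        return self.total

    def step(self, action):
        # Applies the action of the agent whose turn it is, then advances.
        if self.done:
            raise RuntimeError("game is over")
        if action not in (1, 2):
            raise ValueError("action must be 1 or 2")
        self.total += action
        if self.total >= self.target:
            self.rewards[self.current_agent] += 1.0
            self.done = True
        else:
            self.current_agent = next(self._turns)


def play(game, policies):
    """Run the observe -> act -> environment-update cycle one agent at a time."""
    history = []
    while not game.done:
        agent = game.current_agent
        obs = game.observe(agent)
        action = policies[agent](obs)
        history.append((agent, obs, action))
        game.step(action)
    return history


game = ToyAECGame(["agent_0", "agent_1"], target=5)
policies = {"agent_0": lambda obs: 1, "agent_1": lambda obs: 2}
history = play(game, policies)
```

Note that each agent's observation already reflects the previous agent's action, which a simultaneous-move model would not express directly.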

SuperSuit: Simple Microwrappers for Reinforcement Learning Environments

Aug 17, 2020

Abstract: In reinforcement learning, wrappers are universally used to transform the information that passes between a model and an environment. Despite their ubiquity, no library exists with reasonable implementations of all popular preprocessing methods. This leads to unnecessary bugs, code inefficiencies, and wasted developer time. Accordingly, we introduce SuperSuit, a Python library that includes all popular wrappers, as well as wrappers that can easily apply lambda functions to the observations, actions, or rewards. It is compatible with the standard Gym environment specification, as well as the PettingZoo specification for multi-agent environments. The library is available at https://github.com/PettingZoo-Team/SuperSuit, and can be installed via pip.
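The lambda-wrapper idea can be sketched in plain Python without any library. The classes below are hypothetical and are not SuperSuit's actual API; `ToyEnv` is a made-up stand-in that assumes a Gym-style `reset()` and a four-value `step()` return.

```python
class ToyEnv:
    """Hypothetical stand-in environment with a Gym-style reset/step interface."""

    def __init__(self):
        self.t = 0

    def reset(self):
        self.t = 0
        return [0, 255]

    def step(self, action):
        self.t += 1
        obs = [self.t, 255 - self.t]
        reward = float(action)
        done = self.t >= 3
        return obs, reward, done, {}


class ObservationLambda:
    """Applies a user-supplied function to every observation."""

    def __init__(self, env, fn):
        self.env = env
        self.fn = fn

    def reset(self):
        return self.fn(self.env.reset())

    def step(self, action):
        obs, reward, done, info = self.env.step(action)
        return self.fn(obs), reward, done, info


class RewardLambda:
    """Applies a user-supplied function to every reward."""

    def __init__(self, env, fn):
        self.env = env
        self.fn = fn

    def reset(self):
        return self.env.reset()

    def step(self, action):
        obs, reward, done, info = self.env.step(action)
        return obs, self.fn(reward), done, info


# Scale observations to [0, 1], then clip rewards to [-1, 1].
env = ObservationLambda(ToyEnv(), lambda obs: [x / 255 for x in obs])
env = RewardLambda(env, lambda r: max(-1.0, min(1.0, r)))

first_obs = env.reset()
obs, reward, done, info = env.step(5)
```

Because each wrapper exposes the same interface as the environment it wraps, wrappers compose by nesting, and each one stays small enough to check by eye.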

Parameter Sharing is Surprisingly Useful for Multi-Agent Deep Reinforcement Learning

Jun 05, 2020

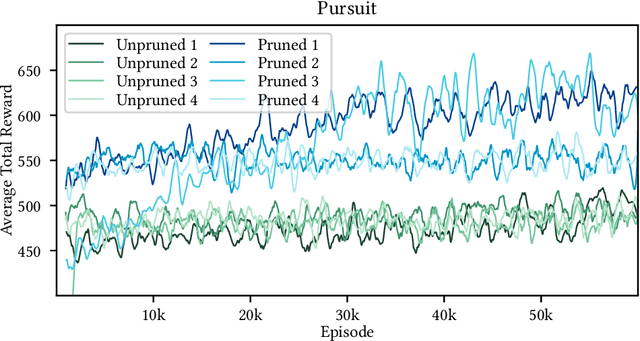

Abstract: "Nonstationarity" is a fundamental problem in cooperative multi-agent reinforcement learning (MARL)--each agent must relearn information about the other agents' policies as those agents learn, causing information to "ring" between agents and convergence to be slow. The MAILP model, introduced by Terry and Grammel (2020), is a novel model of information transfer during multi-agent learning. We use the MAILP model to show that increasing training centralization arbitrarily mitigates the slowing of convergence due to nonstationarity. The most centralized case of learning is parameter sharing, an uncommonly used MARL method, specific to environments with homogeneous agents, that bootstraps a single-agent reinforcement learning (RL) method and learns an identical policy for each agent. We experimentally replicate the result of increased learning centralization leading to better performance on the MARL benchmark set from Gupta et al. (2017). We further apply parameter sharing to 8 "more modern" single-agent deep RL (DRL) methods for the first time in the literature. With this, we achieved the best documented performance on a set of MARL benchmarks and achieved up to 38 times more average reward in as little as 7% as many episodes compared to the documented parameter sharing arrangement. We finally offer a formal proof of a set of methods that allow parameter sharing to serve in environments with heterogeneous agents.
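As a rough illustration of what "an identical policy for each agent" means in code, the sketch below has every agent hold a reference to one parameter vector, which is updated from all agents' pooled experience. The class, the agent names, and the data are hypothetical, and a toy regression-style update stands in for a real single-agent RL algorithm; this is not the paper's implementation.

```python
class SharedLinearPolicy:
    """Toy linear policy (hypothetical); one instance is shared by all agents."""

    def __init__(self, n_features, lr=0.1):
        self.w = [0.0] * n_features
        self.lr = lr

    def score(self, obs):
        return sum(wi * xi for wi, xi in zip(self.w, obs))

    def act(self, obs):
        # Binary action chosen from the sign of the linear score.
        return 1 if self.score(obs) >= 0 else 0

    def update(self, obs, target):
        # Toy stand-in for an RL update: nudge the score toward a target value.
        err = target - self.score(obs)
        self.w = [wi + self.lr * err * xi for wi, xi in zip(self.w, obs)]


agents = ["agent_0", "agent_1", "agent_2"]
policy = SharedLinearPolicy(n_features=2)

# Parameter sharing: every agent maps to the same policy object.
policies = {agent: policy for agent in agents}

# Made-up experience (observation, target) gathered by each agent.
batch = {
    "agent_0": ([1.0, 0.0], 1.0),
    "agent_1": ([0.0, 1.0], -1.0),
    "agent_2": ([1.0, 1.0], 0.0),
}

# Every agent's experience updates the single shared parameter vector.
for agent, (obs, target) in batch.items():
    policies[agent].update(obs, target)
```

Since all agents read and write the same weights, what one agent learns is immediately available to the others, which is the sense in which this is the most centralized case of learning.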
