Ananda Pascual

Bounded nonlinear forecasts of partially observed geophysical systems with physics-constrained deep learning

Mar 02, 2022

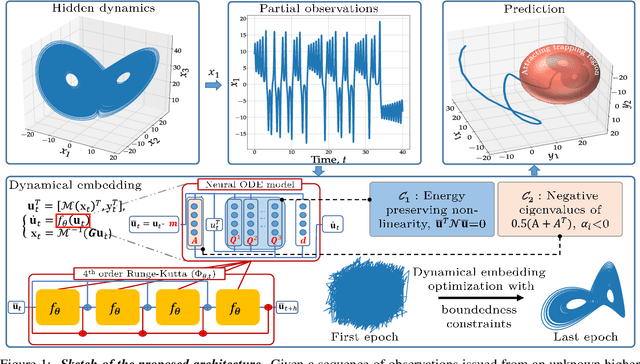

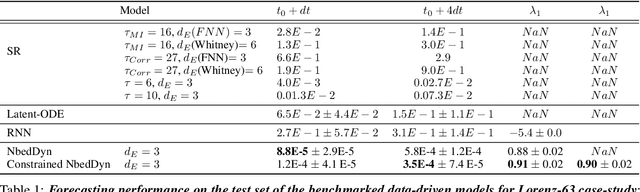

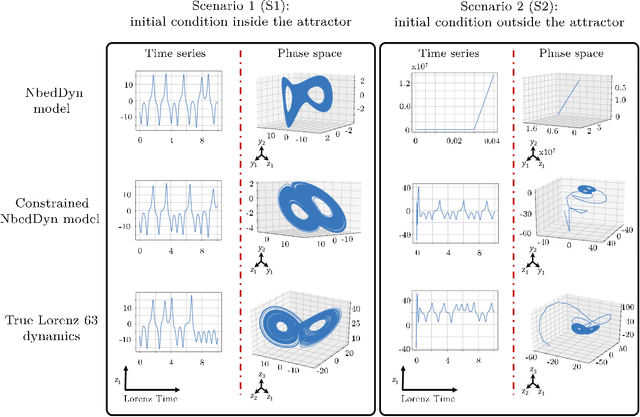

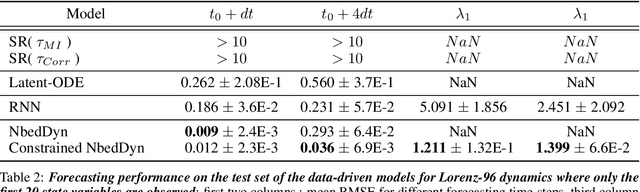

Abstract: The complexity of real-world geophysical systems is often compounded by the fact that the observed measurements depend on hidden variables. These latent variables include unresolved small scales and/or rapidly evolving processes, partially observed couplings, or forcings in coupled systems. This is the case in ocean-atmosphere dynamics, for which unknown interior dynamics can affect surface observations. The identification of computationally relevant representations of such partially observed and highly nonlinear systems is thus challenging and often limited to short-term forecast applications. Here, we investigate the physics-constrained learning of implicit dynamical embeddings, leveraging neural ordinary differential equation (NODE) representations. A key objective is to constrain their boundedness, which promotes the generalization of the learned dynamics to arbitrary initial conditions. The proposed architecture is implemented within a deep learning framework, and its relevance is demonstrated with respect to state-of-the-art schemes for different case studies representative of geophysical dynamics.
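
To illustrate what a boundedness constraint on a learned vector field can look like, here is a minimal sketch. It is not the architecture of the paper: it assumes a simple hypothetical form, linear damping plus a `tanh` network with untrained random weights, for which a bounding radius can be computed in closed form. All names (`vector_field`, `radius`, `gamma`, the layer sizes) are illustrative assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)
dim, hidden, gamma = 3, 16, 1.0           # hypothetical sizes and damping rate
W = rng.normal(size=(hidden, dim))        # untrained weights, for illustration only
c = rng.normal(size=hidden)
V = rng.normal(size=(dim, hidden))

def vector_field(z):
    """dz/dt = -gamma * z + V tanh(W z + c): damping plus a bounded neural term."""
    return -gamma * z + V @ np.tanh(W @ z + c)

# Since |V tanh(.)| <= ||V||_2 * sqrt(hidden), the squared norm of z decreases
# whenever |z| exceeds this radius, so trajectories are attracted to that ball.
radius = np.linalg.norm(V, 2) * np.sqrt(hidden) / gamma

def integrate(f, z, h, n_steps):
    """Classical fourth-order Runge-Kutta integration with a fixed step h."""
    for _ in range(n_steps):
        k1 = f(z)
        k2 = f(z + 0.5 * h * k1)
        k3 = f(z + 0.5 * h * k2)
        k4 = f(z + h * k3)
        z = z + (h / 6.0) * (k1 + 2.0 * k2 + 2.0 * k3 + k4)
    return z

# Start far outside the ball and check that the state is pulled back inside.
z0 = 100.0 * radius * np.ones(dim) / np.sqrt(dim)
z_end = integrate(vector_field, z0, h=0.01, n_steps=2000)
print(np.linalg.norm(z0) > radius, np.linalg.norm(z_end) <= radius)
```

The point of the sketch is that the bound holds for any weights and any initial condition, which is the kind of property the abstract ties to generalization beyond the training trajectories.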

Learning Runge-Kutta Integration Schemes for ODE Simulation and Identification

May 11, 2021

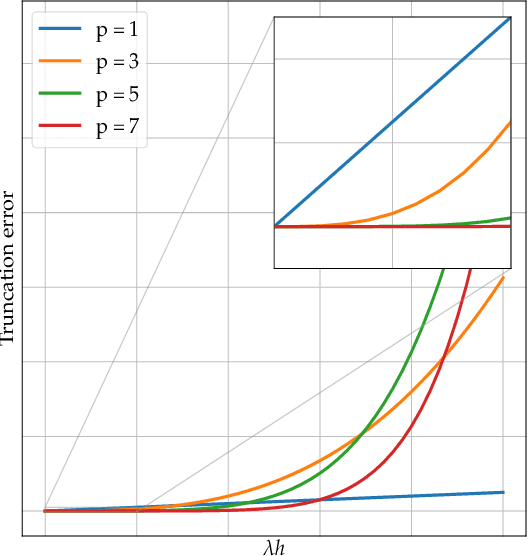

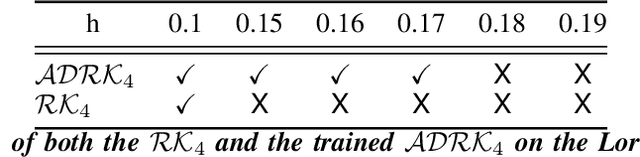

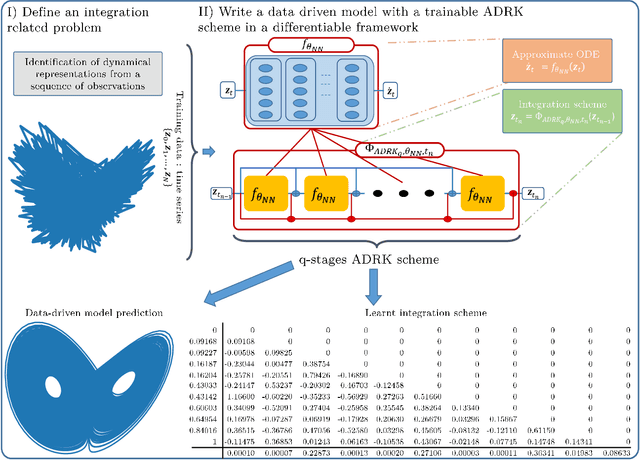

Abstract: Deriving analytical solutions of ordinary differential equations is usually restricted to a small subset of problems, so numerical techniques are used instead. Inevitably, a numerical simulation of a differential equation will then always be distinct from a true analytical solution. An efficient integration scheme should further not only provide a trajectory through a given state, but also be derived to ensure that the generated simulation is close to the analytical one. Consequently, several integration schemes were developed for different classes of differential equations. Unfortunately, when considering the integration of complex non-linear systems, as well as the identification of non-linear equations from data, the choice of the integration scheme is often far from trivial. In this paper, we propose a novel framework to learn integration schemes that minimize an integration-related cost function. We demonstrate the relevance of the proposed learning-based approach for non-linear equations and include a quantitative analysis w.r.t. classical state-of-the-art integration techniques, especially where the latter may not apply.
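
The idea of choosing Runge-Kutta coefficients by minimizing an integration-related cost can be sketched on a toy problem. The example below is an assumption-laden illustration, not the framework of the paper: it uses a two-stage explicit scheme with a single free weight `b2`, the linear test equation dx/dt = -x (whose exact solution is known), and a plain grid search standing in for gradient-based learning. The names `rk_step`, `integration_cost`, and `b2_learned` are hypothetical.

```python
import numpy as np

def rk_step(f, x, h, a, b):
    """One explicit Runge-Kutta step defined by stage matrix a and weights b."""
    k = []
    for i in range(len(b)):
        xi = x + h * sum(a[i][j] * k[j] for j in range(i))
        k.append(f(xi))
    return x + h * sum(bi * ki for bi, ki in zip(b, k))

def integration_cost(b2, h=0.1, lam=-1.0):
    """Mean squared one-step error against the exact solution of dx/dt = lam * x."""
    f = lambda x: lam * x
    x0 = np.linspace(-1.0, 1.0, 11)       # batch of initial states
    a = [[0.0, 0.0], [1.0, 0.0]]          # second stage uses a full Euler predictor
    b = [1.0 - b2, b2]                    # weights sum to one (consistency)
    predicted = rk_step(f, x0, h, a, b)
    exact = x0 * np.exp(lam * h)
    return float(np.mean((predicted - exact) ** 2))

# Grid search over the free weight (a stand-in for a learned optimisation).
candidates = np.linspace(0.0, 1.0, 1001)
costs = [integration_cost(w) for w in candidates]
b2_learned = float(candidates[int(np.argmin(costs))])

print("Euler (b2=0):", integration_cost(0.0))
print("Heun (b2=0.5):", integration_cost(0.5))
print("tuned b2:", b2_learned, integration_cost(b2_learned))
```

With `b2 = 0` the scheme reduces to explicit Euler and with `b2 = 0.5` to Heun's method; the tuned weight lands close to, but not exactly at, the Heun value because it is fitted to this particular equation and step size.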

Learning Latent Dynamics for Partially-Observed Chaotic Systems

Jul 04, 2019

Abstract: This paper addresses the data-driven identification of latent dynamical representations of partially-observed systems, i.e., dynamical systems for which some components are never observed, with an emphasis on forecasting applications, including long-term asymptotic patterns. Whereas state-of-the-art data-driven approaches rely on delay embeddings and linear decompositions of the underlying operators, we introduce a framework based on the data-driven identification of an augmented state-space model using a neural-network-based representation. For a given training dataset, this amounts to jointly learning an ODE (Ordinary Differential Equation) representation in the latent space and reconstructing the latent states. Through numerical experiments, we demonstrate the relevance of the proposed framework w.r.t. state-of-the-art approaches in terms of short-term forecasting performance and long-term behaviour. We further discuss how the proposed framework relates to Koopman operator theory and Takens' embedding theorem.
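
The joint learning of an ODE and of unobserved states can be illustrated with a deliberately small example. This is a sketch under strong assumptions rather than the method of the paper: the observed series is `cos(t)`, the augmented state is two-dimensional (one observed and one latent component), the dynamics are linear instead of a neural network, and the optimiser is finite-difference gradient descent with backtracking. The parameter vector `theta` (four matrix entries plus the latent initial value) and all function names are hypothetical.

```python
import numpy as np

h, n_steps = 0.1, 50
x_obs = np.cos(h * np.arange(n_steps + 1))   # only the first component is observed

def rollout(theta):
    """Integrate dz/dt = A z with RK4 and return the observed component of z."""
    A = theta[:4].reshape(2, 2)               # learnable dynamics
    z = np.array([x_obs[0], theta[4]])        # theta[4] is the learnable latent initial state
    xs = [z[0]]
    for _ in range(n_steps):
        k1 = A @ z
        k2 = A @ (z + 0.5 * h * k1)
        k3 = A @ (z + 0.5 * h * k2)
        k4 = A @ (z + h * k3)
        z = z + (h / 6.0) * (k1 + 2.0 * k2 + 2.0 * k3 + k4)
        xs.append(z[0])
    return np.array(xs)

def loss(theta):
    """Forecasting error measured on the observed component only."""
    return float(np.mean((rollout(theta) - x_obs) ** 2))

def num_grad(theta, eps=1e-6):
    """Central finite-difference gradient (stand-in for automatic differentiation)."""
    g = np.zeros_like(theta)
    for i in range(theta.size):
        e = np.zeros_like(theta)
        e[i] = eps
        g[i] = (loss(theta + e) - loss(theta - e)) / (2.0 * eps)
    return g

rng = np.random.default_rng(0)
theta = rng.normal(scale=0.1, size=5)         # non-zero start so the latent state matters
initial_loss = loss(theta)
for _ in range(100):
    g, current, step = num_grad(theta), loss(theta), 1.0
    while step > 1e-8:                        # backtracking: accept only improving steps
        candidate = theta - step * g
        if loss(candidate) < current:
            theta = candidate
            break
        step *= 0.5
final_loss = loss(theta)
print(initial_loss, final_loss)
```

A one-dimensional autonomous ODE on the observed variable alone cannot reproduce an oscillation, whereas the augmented model can: the parameters `[0, 1, -1, 0, 0]` encode the harmonic oscillator and give a near-zero loss. The latent component is identified only up to a change of coordinates, so the optimiser need not recover those exact values.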
