Amogh Gudi

Proximally Sensitive Error for Anomaly Detection and Feature Learning

Jun 01, 2022

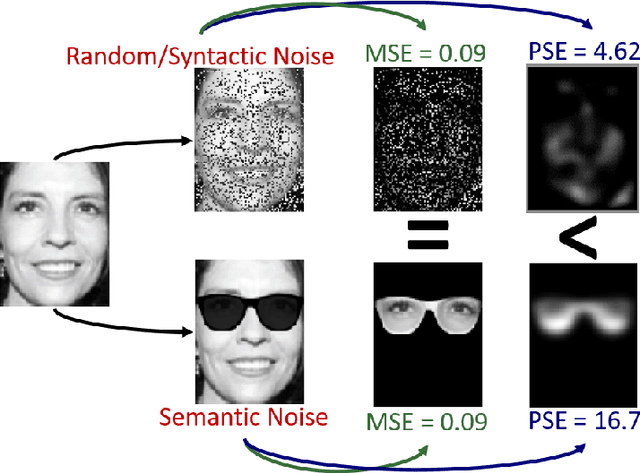

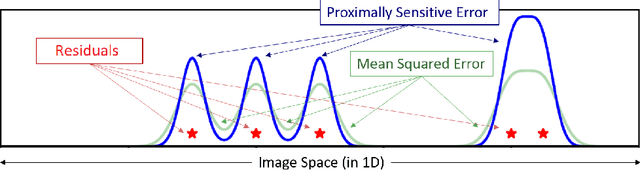

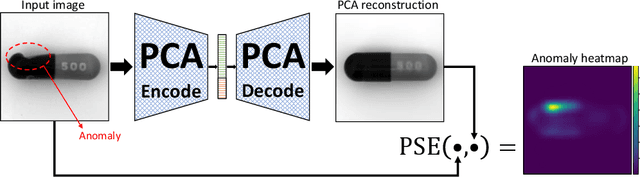

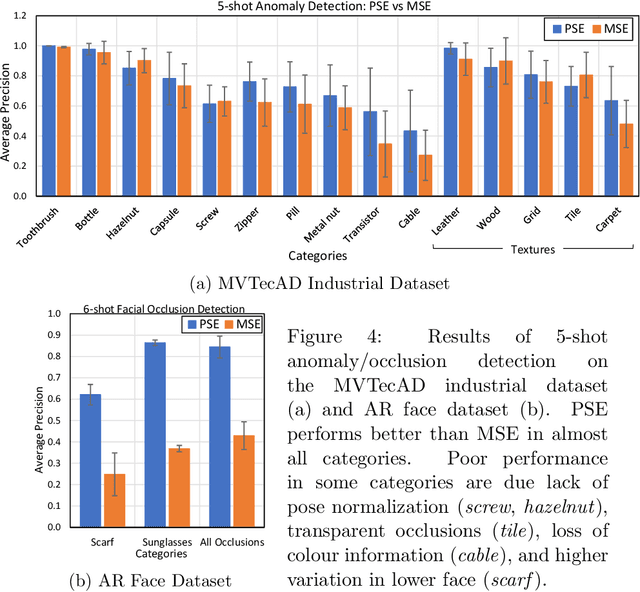

Abstract: Mean squared error (MSE) is one of the most widely used metrics to express differences between multi-dimensional entities, including images. However, MSE is not locally sensitive as it does not take into account the spatial arrangement of the (pixel) differences, which matters for structured data types like images. Such spatial arrangements carry information about the source of the differences; therefore, an error function that also incorporates the location of errors can lead to a more meaningful distance measure. We introduce Proximally Sensitive Error (PSE), through which we suggest that a regional emphasis in the error measure can 'highlight' semantic differences between images over syntactic/random deviations. We demonstrate that this emphasis can be leveraged for the task of anomaly/occlusion detection. We further explore its utility as a loss function to help a model focus on learning representations of semantic objects instead of minimizing syntactic reconstruction noise.
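
The point about spatial arrangement can be illustrated with a small sketch. The function `locally_weighted_error` below is a hypothetical stand-in, not the PSE definition from the paper: it simply assumes that averaging the absolute difference map over a small window before squaring is one way to give errors that sit close together more weight than isolated ones.

```python
import numpy as np

def mse(a, b):
    """Plain mean squared error; blind to where the differences are."""
    return float(np.mean((a - b) ** 2))

def box_smooth(x, k=3):
    """Mean filter with a k x k window and zero padding."""
    pad = k // 2
    padded = np.pad(x, pad, mode="constant")
    h, w = x.shape
    out = np.zeros((h, w), dtype=float)
    for dy in range(k):
        for dx in range(k):
            out += padded[dy:dy + h, dx:dx + w]
    return out / (k * k)

def locally_weighted_error(a, b, k=3):
    """Illustrative only (an assumption, not the paper's PSE):
    smooth the absolute error map, then square and average."""
    diff = np.abs(a - b)
    return float(np.mean(box_smooth(diff, k) ** 2))

ref = np.zeros((8, 8))

clustered = ref.copy()
clustered[2:4, 2:4] = 1.0                      # four errors in one block

scattered = ref.copy()
scattered[[0, 0, 7, 7], [0, 7, 0, 7]] = 1.0    # four isolated errors

print(mse(ref, clustered), mse(ref, scattered))
print(locally_weighted_error(ref, clustered),
      locally_weighted_error(ref, scattered))
```

Both images have the same MSE, since each contains four unit errors, while the locally weighted score is larger for the clustered errors, which is the kind of regional emphasis the abstract describes.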

Real-time Webcam Heart-Rate and Variability Estimation with Clean Ground Truth for Evaluation

Dec 31, 2020

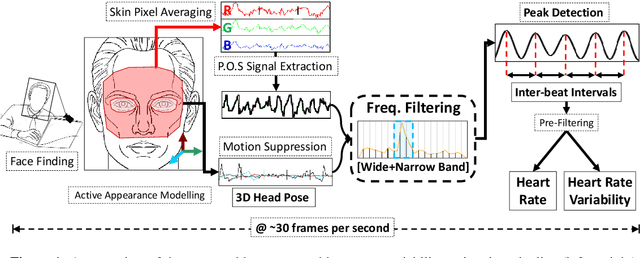

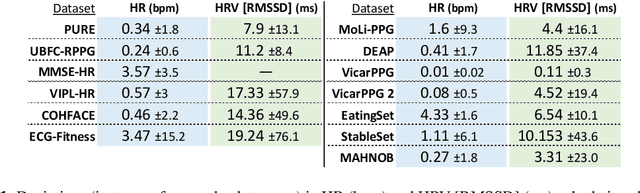

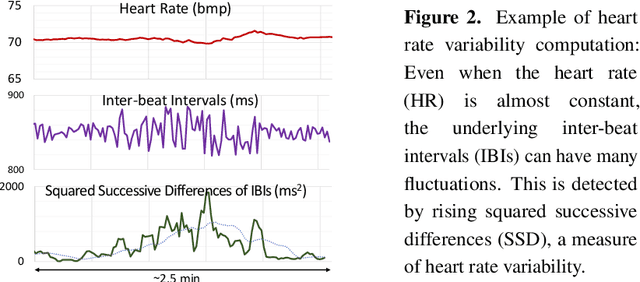

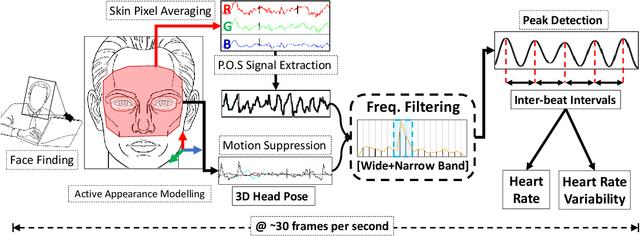

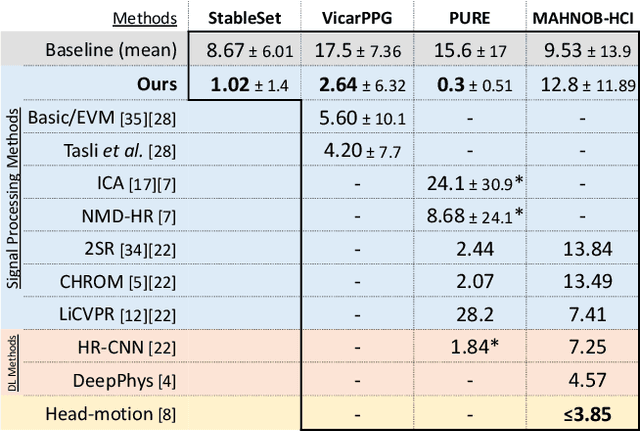

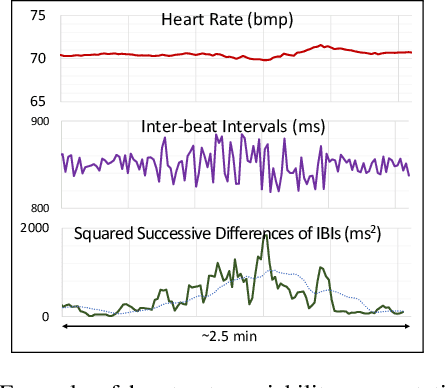

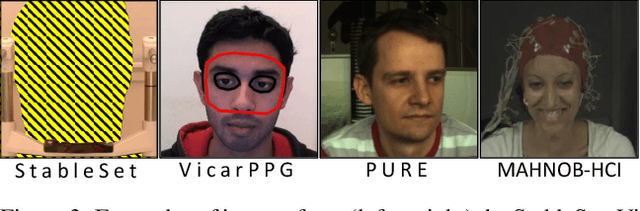

Abstract: Remote photo-plethysmography (rPPG) uses a camera to estimate a person's heart rate (HR). Similar to how heart rate can provide useful information about a person's vital signs, insights about the underlying physio/psychological conditions can be obtained from heart rate variability (HRV). HRV is a measure of the fine fluctuations in the intervals between heart beats. However, this measure requires temporally locating heart beats with a high degree of precision. We introduce a refined and efficient real-time rPPG pipeline with novel filtering and motion suppression that not only estimates heart rates, but also extracts the pulse waveform to time heart beats and measure heart rate variability. This unsupervised method requires no rPPG-specific training and is able to operate in real-time. We also introduce a new multi-modal video dataset, VicarPPG 2, specifically designed to evaluate rPPG algorithms on HR and HRV estimation. We validate and study our method under various conditions on a comprehensive range of public and self-recorded datasets, showing state-of-the-art results and providing useful insights into some unique aspects. Lastly, we make available CleanerPPG, a collection of human-verified ground truth peak/heart-beat annotations for existing rPPG datasets. These verified annotations should make future evaluations and benchmarking of rPPG algorithms more accurate, standardized and fair.

* Published in the MDPI Applied Sciences journal special issue Video Analysis for Health Monitoring on December 2, 2020. arXiv admin note: text overlap with arXiv:1909.01206
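
To make the link between beat timing and HRV concrete, here is a minimal sketch that turns a list of beat timestamps into a mean heart rate and two common time-domain HRV statistics (SDNN and RMSSD). The abstract does not say which HRV measures the pipeline reports, so the choice of these two, and the function name `hr_and_hrv`, are assumptions made for illustration.

```python
import numpy as np

def hr_and_hrv(beat_times_s):
    """Compute mean HR and simple HRV statistics from beat timestamps.

    beat_times_s: beat (pulse peak) times in seconds, in increasing order.
    """
    beats = np.asarray(beat_times_s, dtype=float)
    ibi_ms = np.diff(beats) * 1000.0            # inter-beat intervals in ms
    if ibi_ms.size < 2:
        raise ValueError("need at least three beats")
    return {
        "mean_hr_bpm": 60000.0 / ibi_ms.mean(),
        # SDNN: standard deviation of the inter-beat intervals
        "sdnn_ms": float(ibi_ms.std(ddof=1)),
        # RMSSD: root mean square of successive interval differences
        "rmssd_ms": float(np.sqrt(np.mean(np.diff(ibi_ms) ** 2))),
    }

stats = hr_and_hrv([0.0, 0.8, 1.7, 2.5, 3.4])
print(stats)
```

Because both statistics depend on differences between intervals of a few hundred milliseconds, a timing error of even tens of milliseconds per beat changes them noticeably, which is why precise beat localization matters.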

Efficiency in Real-time Webcam Gaze Tracking

Sep 02, 2020

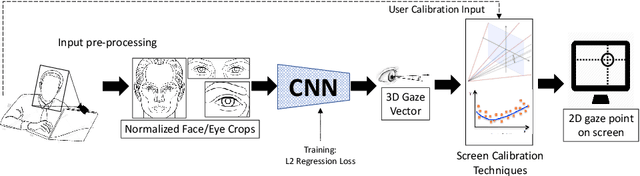

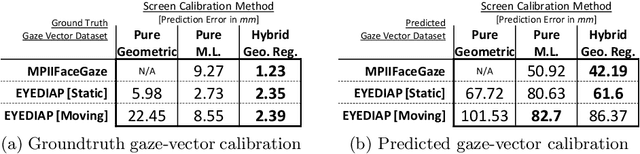

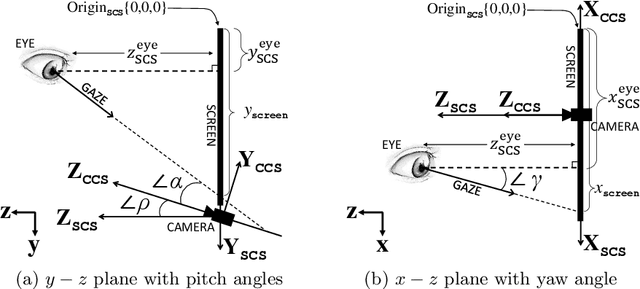

Abstract: Efficiency and ease of use are essential for practical applications of camera-based eye/gaze-tracking. Gaze tracking involves estimating where a person is looking on a screen based on face images from a computer-facing camera. In this paper we investigate two complementary forms of efficiency in gaze tracking: (1) the computational efficiency of the system, which is dominated by the inference speed of a CNN predicting gaze-vectors; (2) the usability efficiency, which is determined by the tediousness of the mandatory calibration of the gaze-vector to a computer screen. To do so, we evaluate the computational speed/accuracy trade-off for the CNN and the calibration effort/accuracy trade-off for screen calibration. For the CNN, we evaluate the full-face, two-eyes, and single-eye input. For screen calibration, we measure the number of calibration points needed and evaluate three types of calibration: (1) pure geometry, (2) pure machine learning, and (3) hybrid geometric regression. Results suggest that a single-eye input and geometric regression calibration achieve the best trade-off.
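
As a rough illustration of what calibrating gaze-vectors to a screen can involve, the sketch below fits an affine least-squares map from two gaze angles to screen coordinates using a handful of calibration points. This is a generic regression baseline under assumed inputs (yaw/pitch angles, pixel targets); it is not the paper's hybrid geometric regression, and the names `fit_calibration` and `apply_calibration` are hypothetical.

```python
import numpy as np

def fit_calibration(gaze, screen):
    """Fit an affine map from gaze angles (N, 2) to screen points (N, 2)."""
    gaze = np.asarray(gaze, dtype=float)
    screen = np.asarray(screen, dtype=float)
    X = np.hstack([gaze, np.ones((len(gaze), 1))])   # add bias column
    W, *_ = np.linalg.lstsq(X, screen, rcond=None)
    return W                                         # shape (3, 2)

def apply_calibration(W, gaze):
    """Map gaze angles to screen coordinates with a fitted matrix W."""
    gaze = np.atleast_2d(np.asarray(gaze, dtype=float))
    X = np.hstack([gaze, np.ones((len(gaze), 1))])
    return X @ W

# Synthetic calibration session: the user looks at four known targets.
W_true = np.array([[2000.0, 0.0], [0.0, -1500.0], [960.0, 540.0]])
calib_gaze = np.array([[-0.2, -0.1], [0.2, -0.1], [-0.2, 0.1], [0.2, 0.1]])
calib_screen = apply_calibration(W_true, calib_gaze)

W = fit_calibration(calib_gaze, calib_screen)
print(apply_calibration(W, [0.05, 0.02]))
```

An affine map has six free parameters, so three non-collinear calibration points are the minimum; each extra point trades user effort for robustness to noise, which is the effort/accuracy trade-off the abstract refers to.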

Efficient Real-Time Camera Based Estimation of Heart Rate and Its Variability

Sep 03, 2019

Abstract: Remote photo-plethysmography (rPPG) uses a remotely placed camera to estimate a person's heart rate (HR). Similar to how heart rate can provide useful information about a person's vital signs, insights about the underlying physio/psychological conditions can be obtained from heart rate variability (HRV). HRV is a measure of the fine fluctuations in the intervals between heart beats. However, this measure requires temporally locating heart beats with a high degree of precision. We introduce a refined and efficient real-time rPPG pipeline with novel filtering and motion suppression that not only estimates heart rate more accurately, but also extracts the pulse waveform to time heart beats and measure heart rate variability. This method requires no rPPG-specific training and is able to operate in real-time. We validate our method on a self-recorded dataset under an idealized lab setting, and show state-of-the-art results on two public datasets with realistic conditions (VicarPPG and PURE).

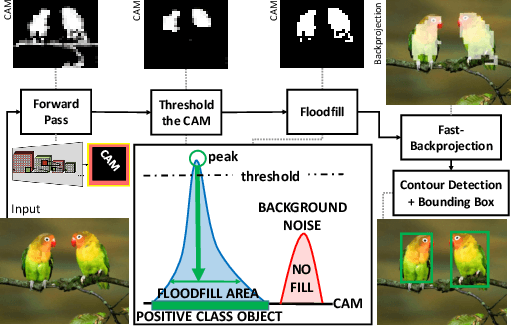

Object-Extent Pooling for Weakly Supervised Single-Shot Localization

Jul 19, 2017

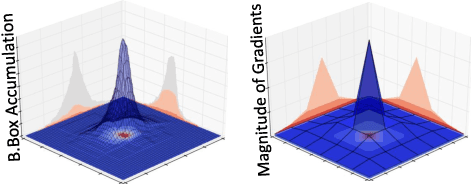

Abstract: In the face of scarcity in detailed training annotations, the ability to perform object localization tasks in real-time with weak supervision is very valuable. However, the computational cost of generating and evaluating region proposals is heavy. We adapt the concept of Class Activation Maps (CAM) into the very first weakly-supervised 'single-shot' detector that does not require the use of region proposals. To facilitate this, we propose a novel global pooling technique called Spatial Pyramid Averaged Max (SPAM) pooling for training this CAM-based network for object extent localization with only weak image-level supervision. We show that this global pooling layer possesses a near-ideal flow of gradients for extent localization, offering a good trade-off between the extremes of max and average pooling. Our approach only requires a single network pass and uses a fast-backprojection technique, completely omitting any region proposal steps. To the best of our knowledge, this is the first approach to do so. Due to this, we are able to perform inference in real-time at 35fps, which is an order of magnitude faster than all previous weakly supervised object localization frameworks.
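
The abstract does not spell out how SPAM pooling is computed, so the following is only a sketch of one reading of the name: at each pyramid level the activation map is split into a finer grid, the maximum is taken in every cell, and the maxima are averaged within and then across levels. The function `spam_pool` and its `levels` parameter are assumptions for illustration, not the authors' layer.

```python
import numpy as np

def spam_pool(fmap, levels=3):
    """Pool a 2D activation map to one scalar (illustrative sketch).

    Level l splits the map into a 2**l x 2**l grid, takes the max of
    each cell and averages those maxima; the level results are averaged.
    """
    h, w = fmap.shape
    finest = 2 ** (levels - 1)
    if h < finest or w < finest:
        raise ValueError("map too small for the requested pyramid depth")
    level_means = []
    for level in range(levels):
        n = 2 ** level
        rows = np.linspace(0, h, n + 1).astype(int)
        cols = np.linspace(0, w, n + 1).astype(int)
        maxima = [fmap[rows[i]:rows[i + 1], cols[j]:cols[j + 1]].max()
                  for i in range(n) for j in range(n)]
        level_means.append(np.mean(maxima))
    return float(np.mean(level_means))

fmap = np.zeros((4, 4))
fmap[0, 0] = 1.0                    # a single strong activation
print(fmap.max(), spam_pool(fmap), fmap.mean())
```

Under this reading the coarsest level equals global max pooling and, on a 4 x 4 map with three levels, the finest level equals global average pooling, so the result lies between the two extremes.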

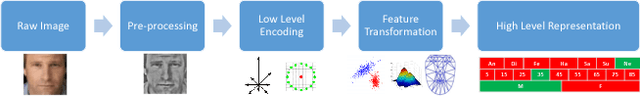

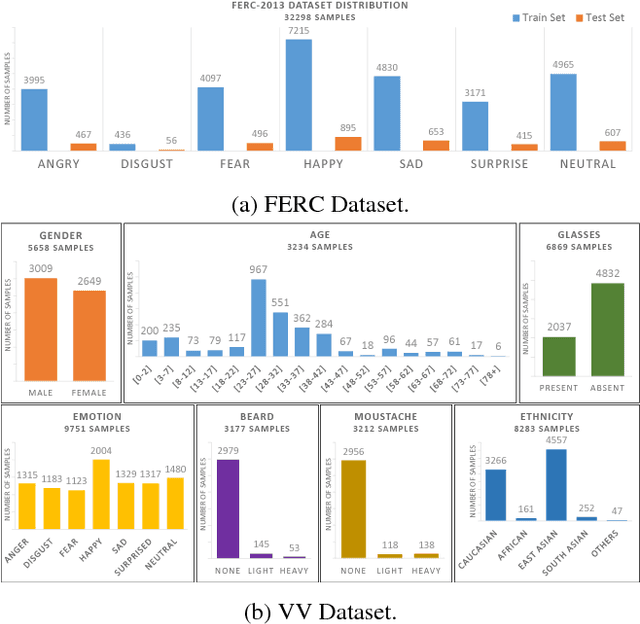

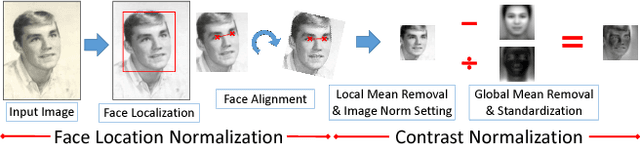

Recognizing Semantic Features in Faces using Deep Learning

Oct 19, 2016

Abstract: The human face constantly conveys information, both consciously and subconsciously. However, as basic as it is for humans to visually interpret this information, it is quite a big challenge for machines. Conventional semantic facial feature recognition and analysis techniques are already in use and are based on physiological heuristics, but they suffer from lack of robustness and high computation time. This thesis aims to explore ways for machines to learn to interpret semantic information available in faces in an automated manner without requiring manual design of feature detectors, using the approach of Deep Learning. This thesis provides a study of the effects of various factors and hyper-parameters of deep neural networks in the process of determining an optimal network configuration for the task of semantic facial feature recognition. This thesis explores the effectiveness of the system in recognizing the various semantic features (like emotions, age, gender, ethnicity etc.) present in faces. Furthermore, the effect of high-level concepts on low-level features is explored through an analysis of the similarities in low-level descriptors of different semantic features. This thesis also demonstrates a novel idea of using a deep network to generate 3-D Active Appearance Models of faces from real-world 2-D images. For a more detailed report on this work, please see [arXiv:1512.00743v1].

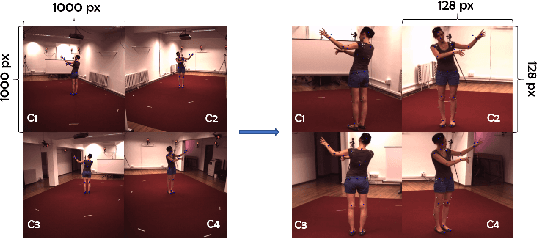

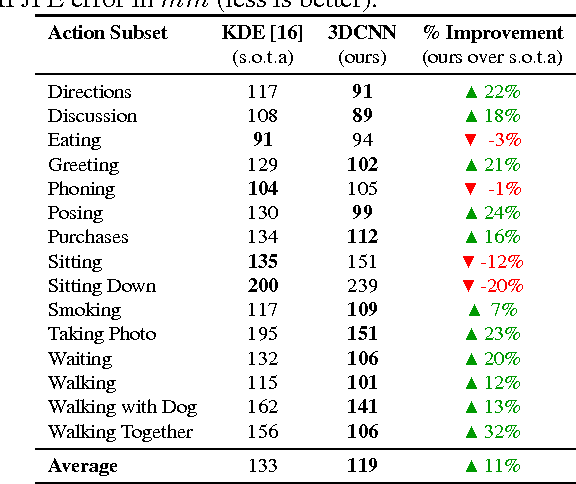

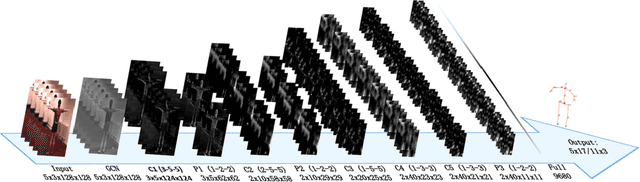

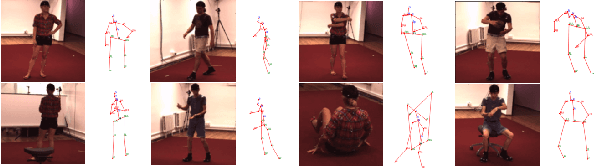

Human Pose Estimation in Space and Time using 3D CNN

Oct 19, 2016

Abstract: This paper explores the capabilities of convolutional neural networks to deal with a task that is easily manageable for humans: perceiving 3D pose of a human body from varying angles. However, in our approach, we are restricted to using a monocular vision system. For this purpose, we apply a convolutional neural network approach on RGB videos and extend it to three-dimensional convolutions. This is done via encoding the time dimension in videos as the third dimension in convolutional space, and directly regressing to human body joint positions in 3D coordinate space. This research shows the ability of such a network to achieve state-of-the-art performance on the selected Human3.6M dataset, thus demonstrating the possibility of successfully representing temporal data with an additional dimension in the convolutional operation.
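
The idea of treating time as a third convolutional dimension can be sketched with a naive NumPy implementation. The function `conv3d_valid` below is a hypothetical helper for a single-channel clip, not the network from the paper; like most CNN frameworks it computes cross-correlation (no kernel flip) with no padding.

```python
import numpy as np

def conv3d_valid(video, kernel):
    """Slide a (kt, kh, kw) kernel over a (T, H, W) clip, 'valid' mode."""
    T, H, W = video.shape
    kt, kh, kw = kernel.shape
    ot, oh, ow = T - kt + 1, H - kh + 1, W - kw + 1
    out = np.zeros((ot, oh, ow), dtype=float)
    for t in range(kt):
        for y in range(kh):
            for x in range(kw):
                # Each kernel weight multiplies a shifted view of the clip.
                out += kernel[t, y, x] * video[t:t + ot, y:y + oh, x:x + ow]
    return out

clip = np.ones((4, 5, 5))            # 4 frames of 5 x 5 pixels
kernel = np.ones((2, 3, 3))          # spans 2 frames and a 3 x 3 patch
features = conv3d_valid(clip, kernel)
print(features.shape)
```

Each output value mixes pixels from neighbouring frames as well as neighbouring positions, so a stack of such layers can pick up motion cues that a per-frame 2D convolution cannot see.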
