Recognizing Semantic Features in Faces using Deep Learning

Paper and Code

Oct 19, 2016

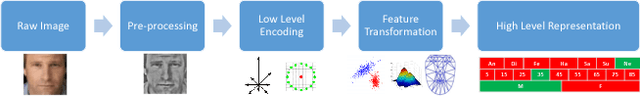

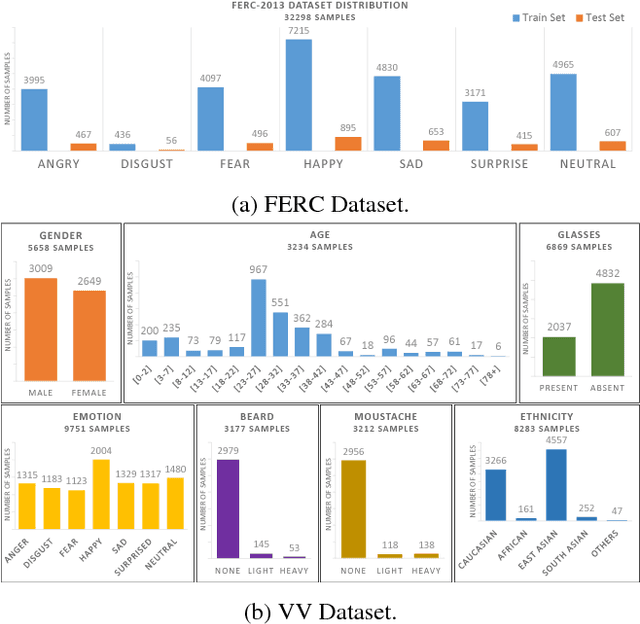

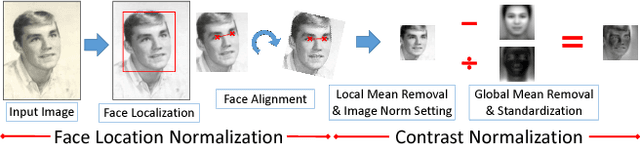

The human face constantly conveys information, both consciously and subconsciously. Although humans interpret this information visually with ease, it remains a considerable challenge for machines. Conventional techniques for semantic facial feature recognition and analysis are already in use and are based on physiological heuristics, but they suffer from a lack of robustness and high computation time. This thesis explores ways for machines to learn to interpret the semantic information available in faces automatically, using Deep Learning, without requiring manually designed feature detectors. It studies the effects of various factors and hyper-parameters of deep neural networks in order to determine an optimal network configuration for semantic facial feature recognition, and it evaluates how effectively the resulting system recognizes the semantic features present in faces, such as emotion, age, gender, and ethnicity. Furthermore, the effect of high-level concepts on low-level features is explored through an analysis of the similarities in the low-level descriptors of different semantic features. The thesis also demonstrates a novel idea: using a deep network to generate 3-D Active Appearance Models of faces from real-world 2-D images. For a more detailed report on this work, please see [arXiv:1512.00743v1].
