Amitava Banerjee

Link inference of noisy delay-coupled networks: Machine learning and opto-electronic experimental tests

Oct 29, 2020

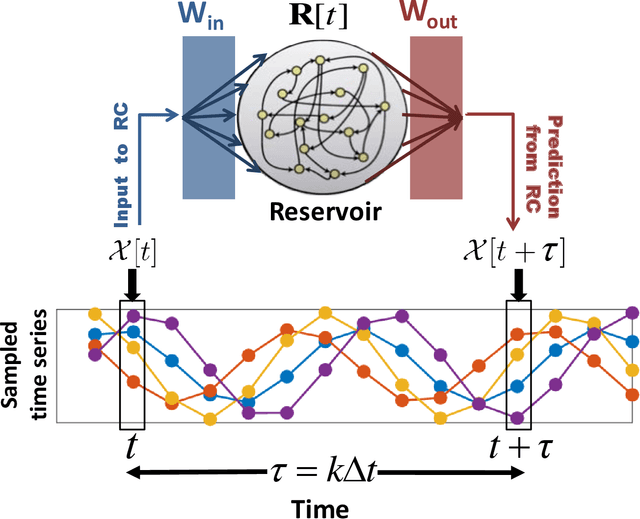

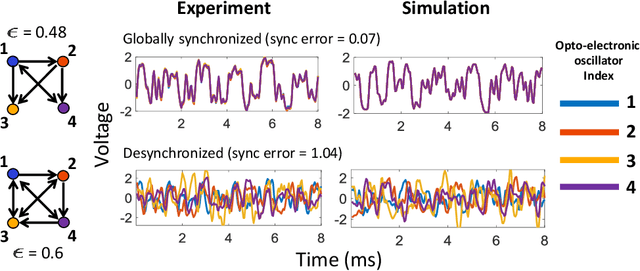

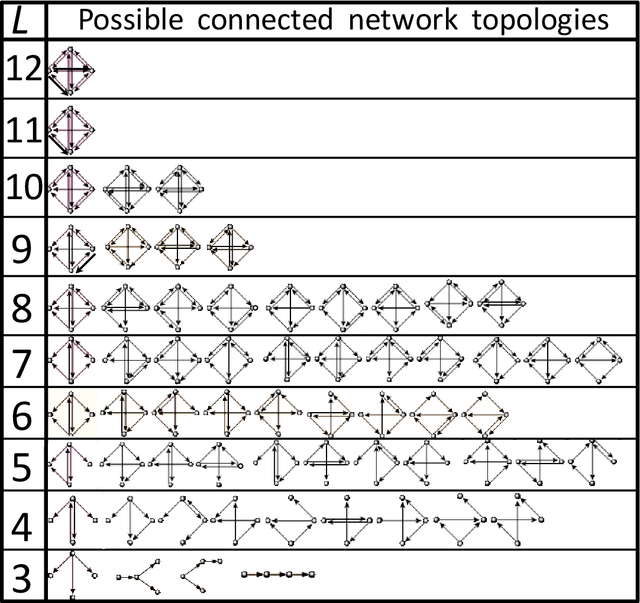

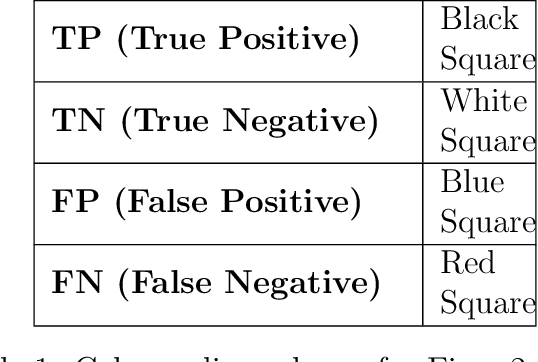

Abstract: We devise a machine learning technique to solve the general problem of inferring network links that have time delays. The goal is to do this purely from time-series data of the network nodal states. This task has applications in fields ranging from applied physics and engineering to neuroscience and biology. To achieve this, we first train a type of machine learning system known as reservoir computing to mimic the dynamics of the unknown network. We formulate and test a technique that uses the trained parameters of the reservoir system output layer to deduce an estimate of the unknown network structure. Our technique, by its nature, is non-invasive, but is motivated by the widely used invasive network inference method whereby the responses to active perturbations applied to the network are observed and employed to infer network links (e.g., knocking down genes to infer gene regulatory networks). We test this technique on experimental and simulated data from delay-coupled opto-electronic oscillator networks. We show that the technique often yields very good results, particularly if the system does not exhibit synchrony. We also find that the presence of dynamical noise can strikingly enhance the accuracy and ability of our technique, especially in networks that exhibit synchrony.
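The invasive perturbation-response idea that the abstract cites as motivation can be sketched on a toy system. This is not the paper's non-invasive reservoir technique, and the coupled logistic map, the names `simulate` and `infer_delayed_links`, the coupling strength, and the kick size are all assumptions made for illustration. A node is kicked at time `t0`, and any other node whose state differs from the unperturbed run at exactly `t0 + delay + 1` is marked as a direct target.

```python
def logistic(x, a=3.8):
    """Local node dynamics (a hypothetical stand-in for a real oscillator)."""
    return a * x * (1.0 - x)

def simulate(history, adjacency, delay, n_steps, coupling=0.2, kick=None):
    """Iterate a delay-coupled map; adjacency[i][j] == 1 means a link j -> i.

    history holds the states at times 0..delay. kick is an optional
    (time, node, size) perturbation added to one node's state.
    """
    states = [list(s) for s in history]
    n = len(states[0])
    start = len(states) - 1
    for t in range(start, start + n_steps):
        if kick is not None and kick[0] == t:
            states[t][kick[1]] += kick[2]
        now, past = states[t], states[t - delay]
        nxt = []
        for i in range(n):
            own = logistic(now[i])
            in_deg = sum(adjacency[i])
            if in_deg:
                # Delayed input: average over the nodes that link into i.
                drive = sum(adjacency[i][j] * logistic(past[j]) for j in range(n)) / in_deg
            else:
                drive = own
            nxt.append((1.0 - coupling) * own + coupling * drive)
        states.append(nxt)
    return states

def infer_delayed_links(history, adjacency, delay, kick_times, kick_size=1e-3, threshold=1e-9):
    """Kick each node in turn and record which other nodes respond one delay later."""
    n = len(history[0])
    n_steps = max(kick_times) + 1
    baseline = simulate(history, adjacency, delay, n_steps)
    inferred = [[0] * n for _ in range(n)]
    for source in range(n):
        for t0 in kick_times:
            kicked = simulate(history, adjacency, delay, n_steps, kick=(t0, source, kick_size))
            t_obs = t0 + delay + 1  # first time a direct target can feel the kick
            for target in range(n):
                response = abs(kicked[t_obs][target] - baseline[t_obs][target])
                if target != source and response > threshold:
                    inferred[target][source] = 1
    return inferred

# Hypothetical 4-node network with a coupling delay of 3 steps.
delay = 3
hidden_links = [[0, 1, 0, 0], [0, 0, 1, 1], [1, 0, 0, 0], [0, 0, 1, 0]]
history = [[0.11, 0.37, 0.52, 0.83], [0.21, 0.64, 0.45, 0.30],
           [0.72, 0.18, 0.91, 0.56], [0.40, 0.77, 0.26, 0.63]]
kick_times = [10, 17, 25]
recovered = infer_delayed_links(history, hidden_links, delay, kick_times)
```

In this sketch `hidden_links` is used only inside `simulate`, which plays the role of the black-box system. The inference step sees nothing but the baseline and kicked trajectories. Observing at a single time offset works here because indirect paths need at least two delays to arrive.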

Using Machine Learning to Assess Short Term Causal Dependence and Infer Network Links

Dec 05, 2019

Abstract: We introduce and test a general machine-learning-based technique for the inference of short-term causal dependence between state variables of an unknown dynamical system from time series measurements of its state variables. Our technique leverages the results of a machine learning process for short-time prediction to achieve our goal. The basic idea is to use machine learning to estimate the elements of the Jacobian matrix of the dynamical flow along an orbit. The type of machine learning that we employ is reservoir computing. We present numerical tests on link inference of a network of interacting dynamical nodes. It is seen that dynamical noise can greatly enhance the effectiveness of our technique, while observational noise degrades the effectiveness. We believe that the competition between these two opposing types of noise will be the key factor determining the success of causal inference in many of the most important application situations.
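The Jacobian-based scoring idea in this abstract can be illustrated with a small sketch. It assumes the one-step map is known exactly, whereas the paper estimates it with a trained reservoir computer, so the map `step`, the finite-difference `jacobian`, the threshold, and the example network below are all hypothetical stand-ins. The score for a candidate link j -> i is the time average of |J_ij| along an orbit.

```python
def logistic(x, a=3.8):
    """Local node dynamics (an assumed toy model)."""
    return a * x * (1.0 - x)

def step(x, adjacency, coupling=0.2):
    """One step of a coupled-map network; adjacency[i][j] == 1 means j -> i."""
    n = len(x)
    f = [logistic(v) for v in x]
    nxt = []
    for i in range(n):
        in_deg = sum(adjacency[i])
        if in_deg:
            drive = sum(adjacency[i][j] * f[j] for j in range(n)) / in_deg
        else:
            drive = f[i]
        nxt.append((1.0 - coupling) * f[i] + coupling * drive)
    return nxt

def jacobian(x, adjacency, eps=1e-6):
    """Central-difference estimate of d step_i / d x_j at the state x."""
    n = len(x)
    jac = [[0.0] * n for _ in range(n)]
    for j in range(n):
        up, down = list(x), list(x)
        up[j] += eps
        down[j] -= eps
        f_up, f_down = step(up, adjacency), step(down, adjacency)
        for i in range(n):
            jac[i][j] = (f_up[i] - f_down[i]) / (2.0 * eps)
    return jac

def infer_links(adjacency, x0, n_steps=200, threshold=1e-3):
    """Average |J_ij| along an orbit and threshold the off-diagonal scores."""
    n = len(x0)
    score = [[0.0] * n for _ in range(n)]
    x = list(x0)
    for _ in range(n_steps):
        jac = jacobian(x, adjacency)
        for i in range(n):
            for j in range(n):
                score[i][j] += abs(jac[i][j]) / n_steps
        x = step(x, adjacency)
    return [[1 if i != j and score[i][j] > threshold else 0 for j in range(n)]
            for i in range(n)]

# Hypothetical 4-node network used only to exercise the sketch.
hidden_links = [[0, 1, 0, 0], [0, 0, 1, 1], [1, 0, 0, 0], [0, 0, 1, 0]]
x0 = [0.11, 0.37, 0.52, 0.83]
recovered = infer_links(hidden_links, x0)
```

With an exact, noise-free map the off-diagonal Jacobian entries of absent links are identically zero, so any small threshold separates the two groups. With a learned predictor the scores of absent links would be small but nonzero, and the choice of threshold would matter.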

Liquid State Machine with Dendritically Enhanced Readout for Low-power, Neuromorphic VLSI Implementations

Nov 20, 2014

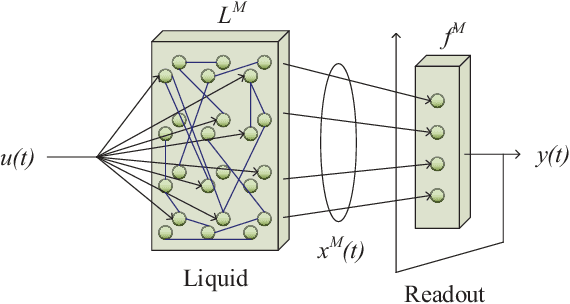

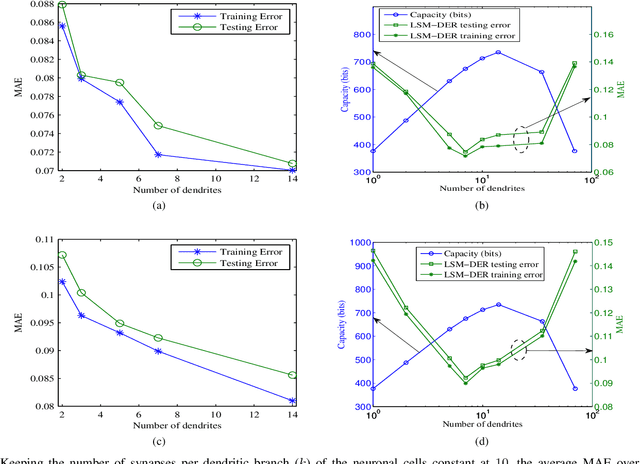

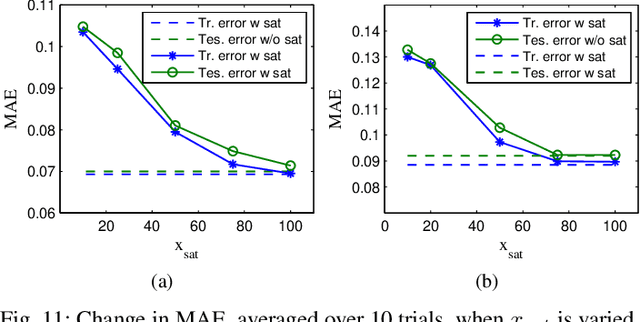

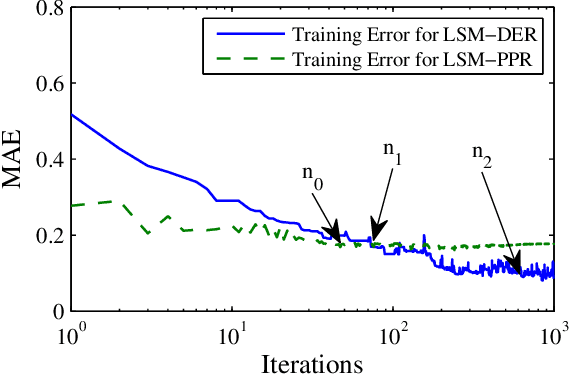

Abstract: In this paper, we describe a new neuro-inspired, hardware-friendly readout stage for the liquid state machine (LSM), a popular model for reservoir computing. Compared to the parallel perceptron architecture trained by the p-delta algorithm, which is the state of the art in terms of performance of readout stages, our readout architecture and learning algorithm can attain better performance with significantly fewer synaptic resources, making it attractive for VLSI implementation. Inspired by the nonlinear properties of dendrites in biological neurons, our readout stage incorporates neurons having multiple dendrites with a lumped nonlinearity. The number of synaptic connections on each branch is significantly lower than the total number of connections from the liquid neurons, and the learning algorithm tries to find the best 'combination' of input connections on each branch to reduce the error. Hence, the learning involves network rewiring (NRW) of the readout network, similar to structural plasticity observed in its biological counterparts. We show that compared to a single perceptron using analog weights, this architecture for the readout can attain, even by using the same number of binary-valued synapses, up to 3.3 times less error for a two-class spike train classification problem and 2.4 times less error for an input rate approximation task. Even with 60 times larger synapses, a group of 60 parallel perceptrons cannot attain the performance of the proposed dendritically enhanced readout. An additional advantage of this method for hardware implementations is that the 'choice' of connectivity can be easily implemented by exploiting address event representation (AER) protocols commonly used in current neuromorphic systems where the connection matrix is stored in memory. Also, due to the use of binary synapses, our proposed method is more robust against statistical variations.

* 14 pages, 19 figures, Journal
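The readout described in the abstract above, with sparse binary connections per dendritic branch and learning by rewiring, can be sketched as a toy model. The quadratic branch nonlinearity, the greedy accept-if-better rewiring rule, the regression task, and every name below are assumptions for illustration. The abstract does not specify the actual NRW update rule, and this is not it.

```python
import random

def branch_nonlinearity(z, scale=4.0):
    """Lumped nonlinearity applied per dendritic branch (quadratic form is assumed)."""
    return z * z / scale

def readout(liquid_state, branches):
    """Sum the nonlinear branch outputs.

    Each branch is a list of input indices; a listed index is a binary
    synapse of weight 1, and an index may appear more than once.
    """
    return sum(branch_nonlinearity(sum(liquid_state[i] for i in idx)) for idx in branches)

def total_error(samples, targets, branches):
    """Summed squared error of the readout over a data set."""
    return sum((readout(s, branches) - y) ** 2 for s, y in zip(samples, targets))

def rewire(samples, targets, branches, n_inputs, n_trials, rng):
    """Greedy rewiring: move one synapse to a random input, keep it only if error drops."""
    branches = [list(b) for b in branches]
    best = total_error(samples, targets, branches)
    for _ in range(n_trials):
        b = rng.randrange(len(branches))
        s = rng.randrange(len(branches[b]))
        old = branches[b][s]
        branches[b][s] = rng.randrange(n_inputs)
        err = total_error(samples, targets, branches)
        if err < best:
            best = err
        else:
            branches[b][s] = old  # revert the unhelpful swap
    return branches, best

# Hypothetical setup: 20 liquid outputs, 4 branches with 3 binary synapses each.
rng = random.Random(0)
n_inputs, n_branches, per_branch = 20, 4, 3

def random_wiring():
    return [[rng.randrange(n_inputs) for _ in range(per_branch)] for _ in range(n_branches)]

teacher = random_wiring()  # hidden wiring that generates the targets
samples = [[rng.random() for _ in range(n_inputs)] for _ in range(30)]
targets = [readout(s, teacher) for s in samples]

initial = random_wiring()
initial_error = total_error(samples, targets, initial)
learned, final_error = rewire(samples, targets, initial, n_inputs, 300, rng)
```

Because a swap is kept only when the error decreases, `final_error` can never exceed `initial_error`. The connectivity stays a list of indices throughout, which is the property the abstract ties to storing the connection matrix in memory.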
