Aly Megahed

Incorporating Experts' Judgment into Machine Learning Models

Apr 29, 2023

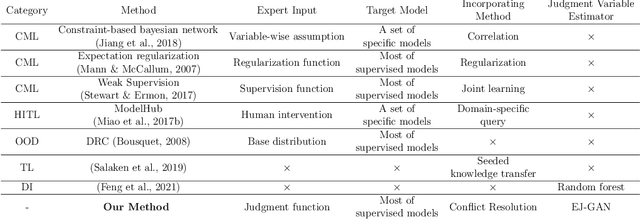

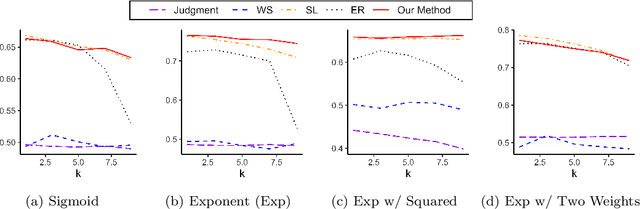

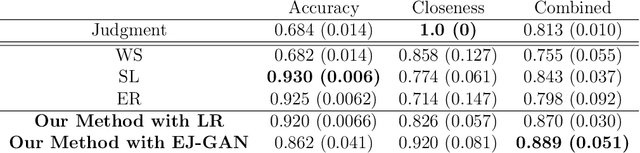

Abstract: Machine learning (ML) models have been quite successful in predicting outcomes in many applications. However, in some cases, domain experts might have a judgment about the expected outcome that conflicts with the prediction of the ML model. One main reason for this is that the training data might not be fully representative of the population. In this paper, we present a novel framework that leverages experts' judgment to mitigate this conflict. The underlying idea is that we first determine, using a generative adversarial network, the degree to which an unlabeled data point is represented in the training data. Then, based on that degree, we correct the machine learning model's prediction by incorporating the experts' judgment into it: the higher the degree of representation, the less weight we put on the experts' judgment in the corrected output, and vice versa. We perform multiple numerical experiments on synthetic data as well as two real-world case studies (one from the IT services industry and the other from the financial industry). All results show the effectiveness of our framework; it yields much higher closeness to the experts' judgment with minimal sacrifice in prediction accuracy when compared to multiple baseline methods. We also develop a new evaluation metric that combines prediction accuracy with closeness to experts' judgment. Our framework yields statistically significant results when evaluated on that metric.
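The weighting idea in the abstract can be illustrated with a minimal sketch. The abstract does not give the correction formula, so the linear blend below is an assumption made only for illustration, as are the function name `corrected_prediction` and its parameters. The `representation` score stands in for the degree of representation that the paper obtains from a generative adversarial network; here it is simply passed in as a number in [0, 1].

```python
def corrected_prediction(ml_pred: float, expert_judgment: float,
                         representation: float) -> float:
    """Blend a model prediction with an expert's judgment.

    Hypothetical illustration: a linear (convex) blend is assumed here,
    it is not taken from the paper. `representation` is an assumed score
    in [0, 1] for how well the data point is covered by the training data.
    """
    if not 0.0 <= representation <= 1.0:
        raise ValueError("representation must lie in [0, 1]")
    # High representation: trust the model more.
    # Low representation: lean more on the expert's judgment.
    return representation * ml_pred + (1.0 - representation) * expert_judgment


if __name__ == "__main__":
    # A well-represented point keeps the model's prediction almost unchanged.
    print(corrected_prediction(ml_pred=0.8, expert_judgment=0.2, representation=0.9))
    # A poorly represented point moves most of the way to the expert's value.
    print(corrected_prediction(ml_pred=0.8, expert_judgment=0.2, representation=0.1))
```

Any monotone weighting would express the same qualitative behavior; the linear form is chosen only because it is the simplest one to read.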

Predicting Loss Risks for B2B Tendering Processes

Sep 14, 2021

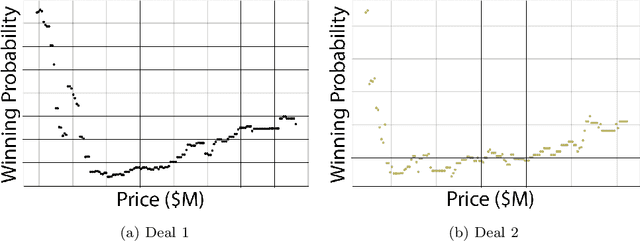

Abstract: Sellers and executives who maintain a bidding pipeline of sales engagements with multiple clients for many opportunities benefit significantly from data-driven insight into the health of each of their bids. Many predictive models offer likelihood insights and win prediction for these opportunities. Currently, these win prediction models take the form of binary classification and only predict the likelihood of a win or a loss. The binary formulation cannot offer any insight into why a particular deal might be predicted as a loss. This paper offers a multi-class classification model to predict win probability, with three loss classes that give specific reasons why a loss is predicted: no bid, customer did not pursue, and lost to competition. These classes indicate how an opportunity might be handled given the nature of the prediction. Besides offering baseline results on the multi-class classification, this paper also offers results on the model after class imbalance handling, achieving an accuracy of 85% and an average AUC score of 0.94.
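The abstract does not say which class imbalance technique was used. As one common option, and purely as an assumption for illustration, the sketch below computes inverse-frequency class weights for the four outcome classes named above. The function name `balanced_class_weights` and the label strings are hypothetical.

```python
from collections import Counter
from typing import Dict, Sequence


def balanced_class_weights(labels: Sequence[str]) -> Dict[str, float]:
    """Return inverse-frequency weights: n_samples / (n_classes * class_count).

    Rare classes receive larger weights, so a weighted loss pays more
    attention to them. This is one common heuristic, not necessarily
    the method used in the paper.
    """
    counts = Counter(labels)
    n_samples = len(labels)
    n_classes = len(counts)
    return {cls: n_samples / (n_classes * count) for cls, count in counts.items()}


if __name__ == "__main__":
    # Hypothetical, made-up label distribution for the four outcome classes.
    labels = (["win"] * 10 + ["no_bid"] * 5
              + ["customer_did_not_pursue"] * 3 + ["lost_to_competition"] * 2)
    for cls, weight in balanced_class_weights(labels).items():
        print(f"{cls}: {weight:.2f}")
```

Weights of this kind are typically passed to a classifier's loss function; resampling the training set is an alternative that serves the same purpose.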
