Alphonsus Adu-Bredu

ZEST: Zero-shot Embodied Skill Transfer for Athletic Robot Control

Jan 30, 2026

Abstract:Achieving robust, human-like whole-body control on humanoid robots for agile, contact-rich behaviors remains a central challenge, demanding heavy per-skill engineering and a brittle process of tuning controllers. We introduce ZEST (Zero-shot Embodied Skill Transfer), a streamlined motion-imitation framework that trains policies via reinforcement learning from diverse sources -- high-fidelity motion capture, noisy monocular video, and non-physics-constrained animation -- and deploys them to hardware zero-shot. ZEST generalizes across behaviors and platforms while avoiding contact labels, reference or observation windows, state estimators, and extensive reward shaping. Its training pipeline combines adaptive sampling, which focuses training on difficult motion segments, and an automatic curriculum using a model-based assistive wrench, together enabling dynamic, long-horizon maneuvers. We further provide a procedure for selecting joint-level gains from approximate analytical armature values for closed-chain actuators, along with a refined model of actuators. Trained entirely in simulation with moderate domain randomization, ZEST demonstrates remarkable generality. On Boston Dynamics' Atlas humanoid, ZEST learns dynamic, multi-contact skills (e.g., army crawl, breakdancing) from motion capture. It transfers expressive dance and scene-interaction skills, such as box-climbing, directly from videos to Atlas and the Unitree G1. Furthermore, it extends across morphologies to the Spot quadruped, enabling acrobatics, such as a continuous backflip, through animation. Together, these results demonstrate robust zero-shot deployment across heterogeneous data sources and embodiments, establishing ZEST as a scalable interface between biological movements and their robotic counterparts.
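The abstract mentions adaptive sampling that focuses training on difficult motion segments but gives no details. The sketch below is only one plausible reading, not the ZEST implementation: it assumes each reference motion is split into segments, tracks a per-segment failure rate with an exponential moving average, and samples segments in proportion to that rate. The names `update_failure_rate`, `sampling_probabilities`, `sample_segment`, and the parameters `alpha` and `floor` are all hypothetical.

```python
import random


def update_failure_rate(rate, failed, alpha=0.1):
    """Exponential moving average of a segment's failure indicator (assumed metric)."""
    return (1.0 - alpha) * rate + alpha * (1.0 if failed else 0.0)


def sampling_probabilities(failure_rates, floor=0.05):
    """Map per-segment failure rates to sampling probabilities.

    The floor keeps every segment reachable, so segments the policy
    already handles well are still revisited occasionally.
    """
    weights = [floor + r for r in failure_rates]
    total = sum(weights)
    return [w / total for w in weights]


def sample_segment(failure_rates, rng=random):
    """Draw the index of the segment to start the next training episode from."""
    probs = sampling_probabilities(failure_rates)
    return rng.choices(range(len(probs)), weights=probs, k=1)[0]


# Three segments, all initially assumed easy; segment 2 then records a failure.
failure_rates = [0.0, 0.0, 0.0]
failure_rates[2] = update_failure_rate(failure_rates[2], failed=True)
probs = sampling_probabilities(failure_rates)
```

After the recorded failure, segment 2 receives a larger share of the sampling mass than the two segments that have not failed, which is the qualitative behavior the abstract describes.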

Exploring Kinodynamic Fabrics for Reactive Whole-Body Control of Underactuated Humanoid Robots

Mar 09, 2023

Abstract:For bipedal humanoid robots to successfully operate in the real world, they must be competent at simultaneously executing multiple motion tasks while reacting to unforeseen external disturbances in real time. We propose Kinodynamic Fabrics as an approach for the specification, solution and simultaneous execution of multiple motion tasks in real time while being reactive to dynamism in the environment. Kinodynamic Fabrics allows for the specification of prioritized motion tasks as forced spectral semi-sprays and solves for desired robot joint accelerations at real-time frequencies. We evaluate the capabilities of Kinodynamic Fabrics on diverse physically challenging whole-body control tasks with a bipedal humanoid robot both in simulation and in the real world. Kinodynamic Fabrics outperforms the state-of-the-art Quadratic Program-based whole-body controller on a variety of whole-body control tasks on run-time and reactivity metrics in our experiments. Our open-source implementation of Kinodynamic Fabrics as well as robot demonstration videos can be found at this url: https://adubredu.github.io/kinofabs.

Optimal Constrained Task Planning as Mixed Integer Programming

Nov 17, 2022

Abstract:For robots to successfully execute tasks assigned to them, they must be capable of planning the right sequence of actions. These actions must be both optimal with respect to a specified objective and satisfy whatever constraints exist in their world. We propose an approach for robot task planning that is capable of planning the optimal sequence of grounded actions to accomplish a task given a specific objective function while satisfying all specified numerical constraints. Our approach accomplishes this by encoding the entire task planning problem as a single mixed integer convex program, which it then solves using an off-the-shelf Mixed Integer Programming solver. We evaluate our approach on several mobile manipulation tasks both in simulation and on a physical humanoid robot. Our approach is able to consistently produce optimal plans while accounting for all specified numerical constraints in the mobile manipulation tasks. Open-source implementations of the components of our approach as well as videos of robots executing planned grounded actions in both simulation and the physical world can be found at this url: https://adubredu.github.io/gtpmip

* International Conference on Intelligent Robots and Systems (IROS), 2022

Contact simulation of a 2D Bipedal Robot kicking a ball

Dec 15, 2021

Abstract:This report describes an approach for simulating multi-body contacts of actively-controlled systems. In this work, we focus on the controls and contact simulation of a 2-dimensional bipedal robot kicking a circular ball.

Probabilistic Inference in Planning for Partially Observable Long Horizon Problems

Oct 18, 2021

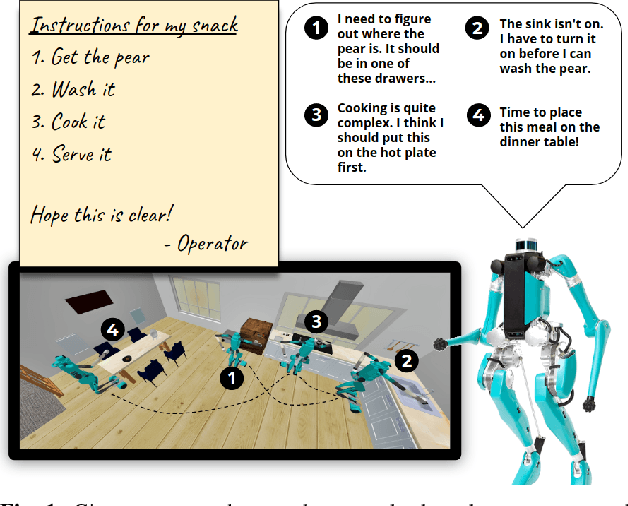

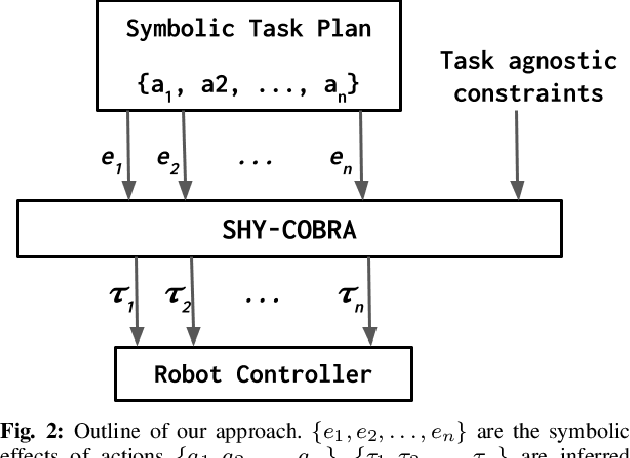

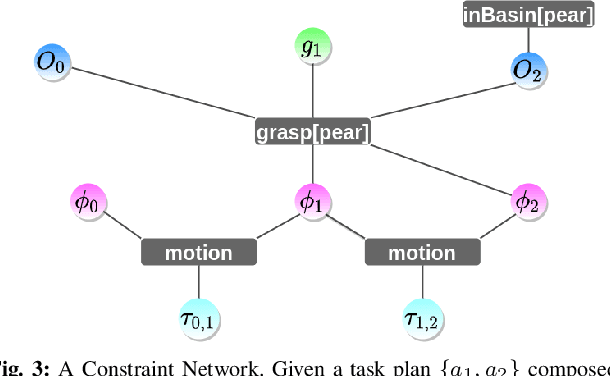

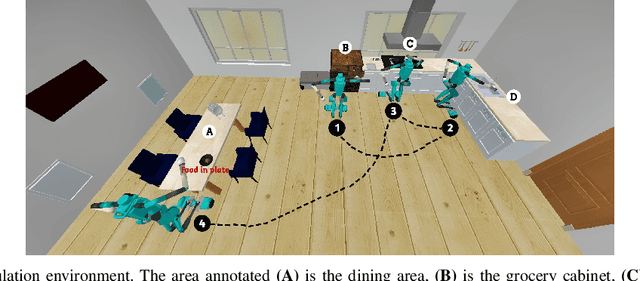

Abstract:For autonomous service robots to successfully perform long horizon tasks in the real world, they must act intelligently in partially observable environments. Most Task and Motion Planning approaches assume full observability of their state space, making them ineffective in stochastic and partially observable domains that reflect the uncertainties in the real world. We propose an online planning and execution approach for performing long horizon tasks in partially observable domains. Given the robot's belief and a plan skeleton composed of symbolic actions, our approach grounds each symbolic action by inferring continuous action parameters needed to execute the plan successfully. To achieve this, we formulate the problem of joint inference of action parameters as a Hybrid Constraint Satisfaction Problem (H-CSP) and solve the H-CSP using Belief Propagation. The robot executes the resulting parameterized actions, updates its belief of the world and replans when necessary. Our approach is able to efficiently solve partially observable tasks in a realistic kitchen simulation environment. Our approach outperformed an adaptation of the state-of-the-art method across our experiments.

GODSAC*: Graph Optimized DSAC* for Robot Relocalization

May 14, 2021

Abstract:Deep learning based camera pose estimation from monocular camera images has seen a recent uptake in Visual SLAM research. Even though such pose estimation approaches have excellent results in small confined areas like offices and apartment buildings, they tend to do poorly when applied to larger areas like outdoor settings, mainly because of the scarcity of distinctive features. We propose GODSAC* as a camera pose estimation approach that augments pose predictions from a trained neural network with noisy odometry data through the optimization of a pose graph. GODSAC* outperforms the state-of-the-art approaches in pose estimation accuracy, as we demonstrate in our experiments.
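To illustrate the general idea of refining network pose predictions with odometry through a pose graph, here is a deliberately simplified sketch. It is not the GODSAC* implementation: poses are 1D scalars rather than full camera poses, the weights `w_pred` and `w_odom` are made-up values, the odometry in the example is noise-free, and the least-squares problem is solved with plain Gauss-Seidel sweeps instead of a dedicated graph optimizer. The function name `fuse_poses_1d` is hypothetical.

```python
def fuse_poses_1d(predicted, odometry, w_pred=1.0, w_odom=10.0, iters=500):
    """Minimize  sum_i w_pred * (x_i - predicted_i)^2
               + sum_i w_odom * (x_{i+1} - x_i - odometry_i)^2
    over a chain of 1D poses, using Gauss-Seidel coordinate updates.
    """
    n = len(predicted)
    assert len(odometry) == n - 1, "need one odometry edge per consecutive pose pair"
    x = list(predicted)  # start from the network predictions
    for _ in range(iters):
        for i in range(n):
            # Unary term: stay close to the network's prediction for pose i.
            num = w_pred * predicted[i]
            den = w_pred
            if i > 0:
                # Edge from the previous pose: x_i should equal x_{i-1} + odometry.
                num += w_odom * (x[i - 1] + odometry[i - 1])
                den += w_odom
            if i < n - 1:
                # Edge to the next pose: x_i should equal x_{i+1} - odometry.
                num += w_odom * (x[i + 1] - odometry[i])
                den += w_odom
            x[i] = num / den
    return x


# Toy data: the prediction for pose 2 is off by one unit; odometry is consistent.
truth = [0.0, 1.0, 2.0, 3.0, 4.0]
odometry = [1.0, 1.0, 1.0, 1.0]
predicted = [0.0, 1.0, 3.0, 3.0, 4.0]
fused = fuse_poses_1d(predicted, odometry)
```

In this toy setup the odometry edges pull the outlying prediction back toward its neighbors, so the fused estimate for pose 2 ends up closer to the true value than the raw prediction. The same edges also spread part of that error onto neighboring poses, which is why the relative weighting of the two terms matters.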

Robot Task Planning for Low Entropy Belief States

Nov 18, 2020

Abstract:Recent advances in computational perception have significantly improved the ability of autonomous robots to perform state estimation with low entropy. Such advances motivate a reconsideration of robot decision-making under uncertainty. Current approaches to solving sequential decision-making problems model states as inhabiting the extremes of the perceptual entropy spectrum. As such, these methods are either incapable of overcoming perceptual errors or asymptotically inefficient in solving problems with low perceptual entropy. With low entropy perception in mind, we aim to explore a happier medium that balances computational efficiency with the forms of uncertainty we now observe from modern robot perception. We propose FastDownward Replanner (FD-Replan) as an efficient task planning method for goal-directed robot reasoning. FD-Replan combines belief space representation with the fast, goal-directed features of classical planning to efficiently plan for low entropy goal-directed reasoning tasks. We compare FD-Replan with current classical planning and belief space planning approaches by solving low entropy goal-directed grocery packing tasks in simulation. FD-Replan shows positive results and promise with respect to planning time, execution time, and task success rate in our simulation experiments.
