Allison Cohen

Montreal AI Ethics Institute, AI Global, Mila

The Right to AI

Jan 29, 2025

Abstract: This paper proposes a Right to AI, which asserts that individuals and communities should meaningfully participate in the development and governance of the AI systems that shape their lives. Motivated by the increasing deployment of AI in critical domains and inspired by Henri Lefebvre's concept of the Right to the City, we reconceptualize AI as a societal infrastructure, rather than merely a product of expert design. In this paper, we critically evaluate how generative agents, large-scale data extraction, and diverse cultural values bring new complexities to AI oversight. The paper proposes that grassroots participatory methodologies can mitigate biased outcomes and enhance social responsiveness. It asserts that data is socially produced and should be managed and owned collectively. Drawing on Sherry Arnstein's Ladder of Citizen Participation and analyzing nine case studies, the paper develops a four-tier model for the Right to AI that situates the current paradigm and envisions an aspirational future. It proposes recommendations for inclusive data ownership, transparent design processes, and stakeholder-driven oversight. We also discuss market-led and state-centric alternatives and argue that participatory approaches offer a better balance between technical efficiency and democratic legitimacy.

Subtle Misogyny Detection and Mitigation: An Expert-Annotated Dataset

Nov 15, 2023

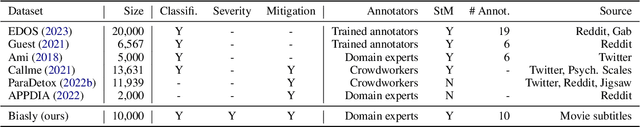

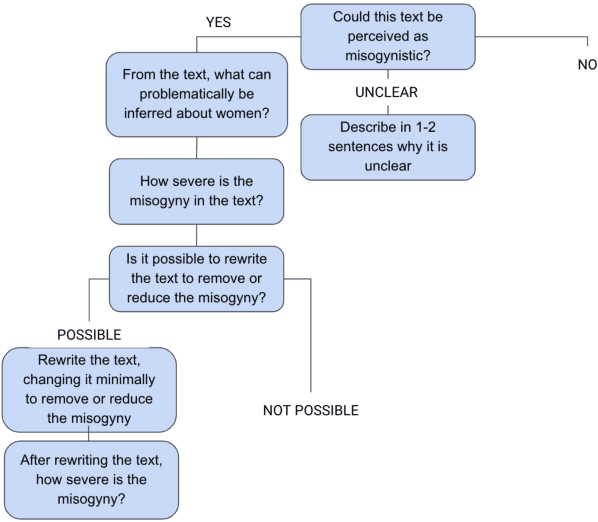

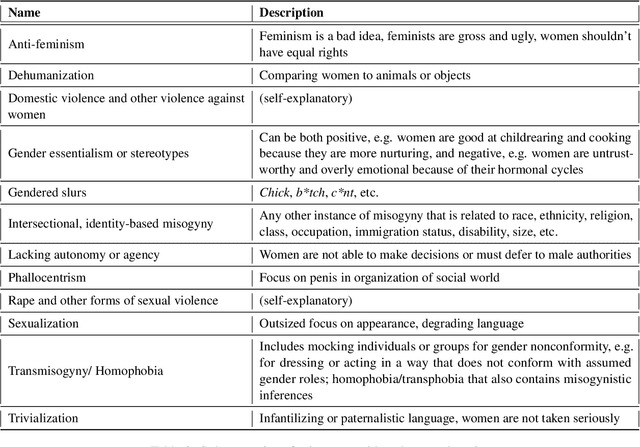

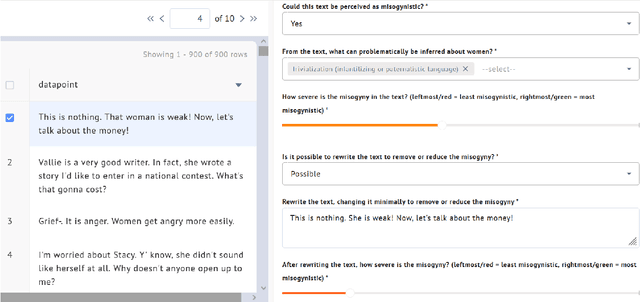

Abstract: Using novel approaches to dataset development, the Biasly dataset captures the nuance and subtlety of misogyny in ways that are unique within the literature. Built in collaboration with multi-disciplinary experts and annotators themselves, the dataset contains annotations of movie subtitles, capturing colloquial expressions of misogyny in North American film. The dataset can be used for a range of NLP tasks, including classification, severity score regression, and text generation for rewrites. In this paper, we discuss the methodology used, analyze the annotations obtained, and provide baselines using common NLP algorithms in the context of misogyny detection and mitigation. We hope this work will promote AI for social good in NLP for bias detection, explanation, and removal.

The State of AI Ethics Report

Nov 05, 2020

Abstract: The 2nd edition of the Montreal AI Ethics Institute's The State of AI Ethics captures the most relevant developments in the field of AI Ethics since July 2020. This report aims to help anyone, from machine learning experts to human rights activists and policymakers, quickly digest and understand the ever-changing developments in the field. Through research and article summaries, as well as expert commentary, this report distills the research and reporting surrounding various domains related to the ethics of AI, including: AI and society, bias and algorithmic justice, disinformation, humans and AI, labor impacts, privacy, risk, and future of AI ethics. In addition, The State of AI Ethics includes exclusive content written by world-class AI Ethics experts from universities, research institutes, consulting firms, and governments. These experts include: Danit Gal (Tech Advisor, United Nations), Amba Kak (Director of Global Policy and Programs, NYU's AI Now Institute), Rumman Chowdhury (Global Lead for Responsible AI, Accenture), Brent Barron (Director of Strategic Projects and Knowledge Management, CIFAR), Adam Murray (U.S. Diplomat working on tech policy, Chair of the OECD Network on AI), Thomas Kochan (Professor, MIT Sloan School of Management), and Katya Klinova (AI and Economy Program Lead, Partnership on AI). This report should be used not only as a point of reference and insight on the latest thinking in the field of AI Ethics, but should also be used as a tool for introspection as we aim to foster a more nuanced conversation regarding the impacts of AI on the world.

Report prepared by the Montreal AI Ethics Institute In Response to Mila's Proposal for a Contact Tracing App

Aug 11, 2020

Abstract: Contact tracing has grown in popularity as a promising solution to the COVID-19 pandemic. The benefits of automated contact tracing are two-fold. Contact tracing promises to reduce the number of infections by being able to: 1) systematically identify all of those that have been in contact with someone who has had COVID; and, 2) ensure those that have been exposed to the virus do not unknowingly infect others. "COVI" is the name of a recent contact tracing app developed by Mila and was proposed to help combat COVID-19 in Canada. The app was designed to inform each individual of their relative risk of being infected with the virus, which Mila claimed would empower citizens to make informed decisions about their movement and allow for a data-driven approach to public health policy; all the while ensuring data is safeguarded from governments, companies, and individuals. This article will provide a critical response to Mila's COVI White Paper. Specifically, this article will discuss: the extent to which diversity has been considered in the design of the app, assumptions surrounding users' interaction with the app and the app's utility, as well as unanswered questions surrounding transparency, accountability, and security. We see this as an opportunity to supplement the excellent risk analysis done by the COVI team to surface insights that can be applied to other contact- and proximity-tracing apps that are being developed and deployed across the world. Our hope is that, through a meaningful dialogue, we can ultimately help organizations develop better solutions that respect the fundamental rights and values of the communities these solutions are meant to serve.
