Ali Samadani

A Comparative Analysis of Machine Learning Models for Early Detection of Hospital-Acquired Infections

Nov 15, 2023

Abstract: As more infection-specific machine learning models are developed and planned for clinical deployment, running them simultaneously may produce overlapping or even conflicting predictions. It is therefore important to understand the concordance and behavior of parallel models in deployment. In this study, we focus on two models for the early detection of hospital-acquired infections (HAIs): 1) the Infection Risk Index (IRI) and 2) the Ventilator-Associated Pneumonia (VAP) prediction model. The IRI model was built to predict all HAIs, whereas the VAP model identifies patients at risk of developing ventilator-associated pneumonia. By detecting infections early and thereby enabling early interventions, these models could improve patient outcomes and hospital infection management. The two models differ in infection label definition, cohort selection, and prediction schema. In this work, we present a comparative analysis of the two models to characterize concordances and confusions in their HAI predictions. The findings will inform how to deploy multiple concurrent disease-specific models in the future.
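As a rough illustration of what measuring concordance between two concurrently running models can involve, the sketch below cross-tabulates two binary alert sequences and computes Cohen's kappa. This is not the analysis used in the study: the function name `concordance`, the per-patient 0/1 alert encoding, and the toy inputs are all assumptions made for the example.

```python
from collections import Counter

def concordance(alerts_a, alerts_b):
    """Cross-tabulate two binary alert sequences and compute Cohen's kappa.

    Hypothetical helper: each sequence holds one 0/1 alert per patient,
    aligned by position.
    """
    if len(alerts_a) != len(alerts_b) or not alerts_a:
        raise ValueError("sequences must be non-empty and of equal length")
    n = len(alerts_a)
    table = Counter(zip(alerts_a, alerts_b))
    observed = (table[(1, 1)] + table[(0, 0)]) / n
    p_a = sum(alerts_a) / n
    p_b = sum(alerts_b) / n
    # Agreement expected by chance if the two models alerted independently.
    expected = p_a * p_b + (1 - p_a) * (1 - p_b)
    kappa = (observed - expected) / (1 - expected) if expected < 1 else 1.0
    return {
        "both": table[(1, 1)],
        "only_a": table[(1, 0)],
        "only_b": table[(0, 1)],
        "neither": table[(0, 0)],
        "agreement": observed,
        "kappa": kappa,
    }

# Made-up alerts for eight patients (illustrative only, not study data).
iri_alerts = [1, 1, 0, 0, 1, 0, 0, 0]
vap_alerts = [1, 0, 0, 0, 1, 0, 1, 0]
result = concordance(iri_alerts, vap_alerts)
print(result)
```

Because the two models differ in label definition and cohort, a real comparison would first need to align patients and prediction times; the sketch assumes that alignment has already been done.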

Pervasive Lying Posture Tracking

Jun 19, 2020

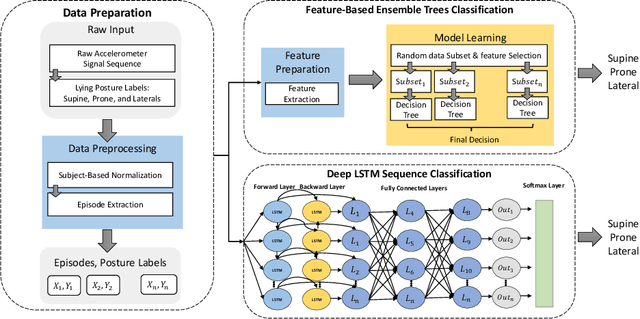

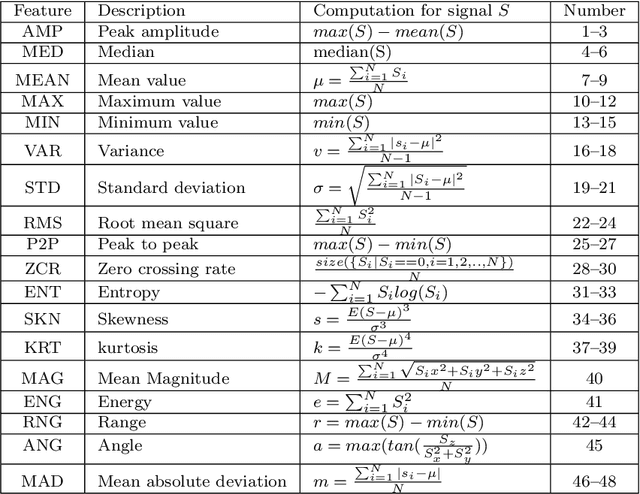

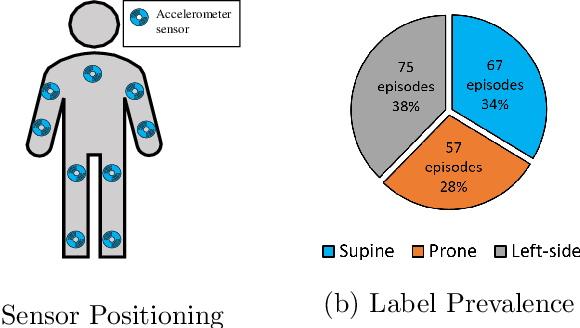

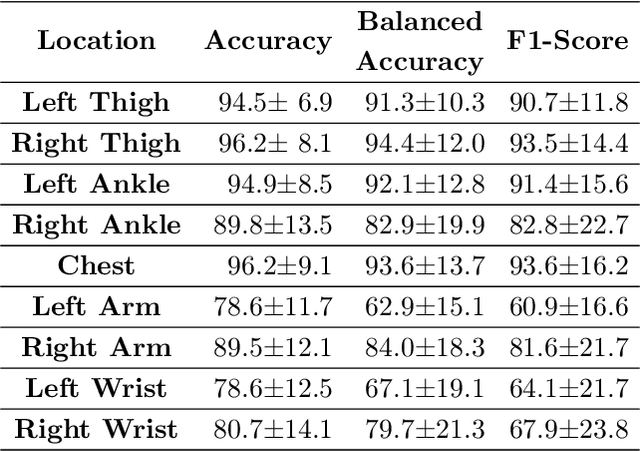

Abstract: There are significant gaps in research on how to design efficient in-bed lying posture tracking systems. These gaps can be articulated through three research questions. First, can we design a single-sensor, pervasive, and inexpensive system that accurately detects lying postures? Second, which computational models are most effective for accurate detection of lying postures? Finally, which physical configuration of the sensor system is most effective for lying posture tracking? To answer these questions, in this article we propose a comprehensive approach to designing a sensor system that uses a single accelerometer along with machine learning algorithms for in-bed lying posture classification. We design two categories of machine learning algorithms, one based on deep learning and one on traditional classification with handcrafted features, to detect lying postures. We also investigate which wearing sites are most effective for accurate detection of lying postures. We extensively evaluate the performance of the proposed algorithms on nine different body locations and four human lying postures using two datasets. Our results show that a system with a single accelerometer can be used with either deep learning or traditional classifiers to accurately detect lying postures. The best models in our approach achieve an F-Score that ranges from 95.2% to 97.8% with a coefficient of variation of 0.03 to 0.05. The results also identify the thighs and chest as the most salient body sites for lying posture tracking. Our findings suggest that because accelerometers are ubiquitous and inexpensive sensors, they can be a viable source of information for pervasive monitoring of in-bed postures.
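To make the "traditional classification with handcrafted features" idea concrete, here is a minimal sketch that uses the per-axis mean of a window of accelerometer samples as the feature vector and a nearest-centroid rule as the classifier. It is not the article's pipeline: the feature choice, the classifier, the posture names, and the toy readings are all assumptions for illustration.

```python
import math

def mean_features(window):
    """Handcrafted features: per-axis mean of a window of (x, y, z) samples."""
    n = len(window)
    return tuple(sum(sample[i] for sample in window) / n for i in range(3))

def fit_centroids(windows, labels):
    """Average the feature vectors of each posture label into one centroid."""
    sums, counts = {}, {}
    for window, label in zip(windows, labels):
        feats = mean_features(window)
        acc = sums.setdefault(label, [0.0, 0.0, 0.0])
        for i in range(3):
            acc[i] += feats[i]
        counts[label] = counts.get(label, 0) + 1
    return {label: tuple(v / counts[label] for v in acc)
            for label, acc in sums.items()}

def predict(centroids, window):
    """Return the label whose centroid is closest to the window's features."""
    feats = mean_features(window)
    return min(centroids, key=lambda label: math.dist(feats, centroids[label]))

# Made-up readings in units of g; posture names and axes are assumed.
train_windows = [
    [(0.0, 0.0, 1.0), (0.1, 0.0, 0.9)],
    [(0.0, 0.0, -1.0), (0.0, 0.1, -0.9)],
    [(1.0, 0.0, 0.0), (0.9, 0.1, 0.0)],
    [(-1.0, 0.0, 0.0), (-0.9, 0.0, 0.1)],
]
train_labels = ["supine", "prone", "left", "right"]

centroids = fit_centroids(train_windows, train_labels)
prediction = predict(centroids, [(0.05, 0.0, 0.95), (0.0, 0.05, 1.0)])
print(prediction)
```

The sketch relies on the fact that a stationary accelerometer mostly measures gravity, so the mean reading reflects sensor orientation; how well that separates postures would depend on the wearing site.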

Affective Movement Generation using Laban Effort and Shape and Hidden Markov Models

Jun 10, 2020

Abstract: Body movements are an important communication medium through which affective states can be discerned. Movements that convey affect can also give machines life-like attributes and help create more engaging human-machine interaction. This paper presents an approach for automatic affective movement generation that makes use of two movement abstractions: 1) Laban movement analysis (LMA), and 2) hidden Markov modeling (HMM). LMA provides a systematic tool for an abstract representation of the kinematic and expressive characteristics of movements. Given a desired motion path on which a target emotion is to be overlaid, the proposed approach searches a labeled dataset in the LMA Effort and Shape space for movements that are similar to the desired motion path and convey the target emotion. An HMM abstraction of the identified movements is obtained and used with the desired motion path to generate a novel movement: a modulated version of the desired motion path that conveys the target emotion. The extent of modulation can be varied, trading off kinematic against affective constraints in the generated movement. The proposed approach is tested using a full-body movement dataset. Its efficacy in generating movements with recognizable target emotions is assessed using a validated automatic recognition model and a user study. The target emotions were correctly recognized from the generated movements at a rate of 72% using the recognition model. Furthermore, participants in the user study were able to correctly perceive the target emotions from a sample of generated movements, although some cases of confusion were also observed.
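The retrieval step described in the abstract (finding labeled movements that are close to the desired motion path and carry the target emotion) can be pictured as a filtered nearest-neighbor search. The sketch below is only that picture: the two-dimensional descriptors stand in for LMA Effort and Shape features, and the function name, distance metric, and dataset entries are hypothetical. The HMM stage is not shown.

```python
import math

def nearest_affective_movements(query, dataset, target_emotion, k=2):
    """Return names of the k movements closest to `query` that carry the emotion.

    `dataset` is a list of (name, descriptor, emotion) tuples; descriptors
    are placeholder vectors, not real LMA Effort and Shape values.
    """
    candidates = [
        (math.dist(query, descriptor), name)
        for name, descriptor, emotion in dataset
        if emotion == target_emotion
    ]
    return [name for _, name in sorted(candidates)[:k]]

# Made-up labeled movements (illustrative only).
dataset = [
    ("m1", (0.1, 0.2), "happy"),
    ("m2", (0.9, 0.8), "happy"),
    ("m3", (0.15, 0.25), "sad"),
    ("m4", (0.3, 0.1), "happy"),
]
matches = nearest_affective_movements((0.1, 0.1), dataset, "happy", k=2)
print(matches)
```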
