Ali Goli

Adaptive Locally Linear Embedding

Apr 09, 2025

Abstract: Manifold learning techniques, such as locally linear embedding (LLE), are designed to preserve the local neighborhood structures of high-dimensional data during dimensionality reduction. Traditional LLE employs Euclidean distance to define neighborhoods, which can struggle to capture the intrinsic geometric relationships within complex data. A novel approach, adaptive locally linear embedding (ALLE), is introduced to address this limitation by incorporating a dynamic, data-driven metric that enhances topological preservation. This method redefines the concept of proximity by focusing on topological neighborhood inclusion rather than fixed distances. By adapting the metric based on the local structure of the data, it achieves superior neighborhood preservation, particularly for datasets with complex geometries and high-dimensional structures. Experimental results demonstrate that ALLE significantly improves the alignment between neighborhoods in the input and feature spaces, resulting in more accurate and topologically faithful embeddings. This approach advances manifold learning by tailoring distance metrics to the underlying data, providing a robust solution for capturing intricate relationships in high-dimensional datasets.

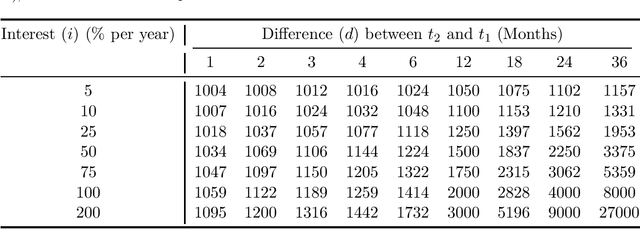

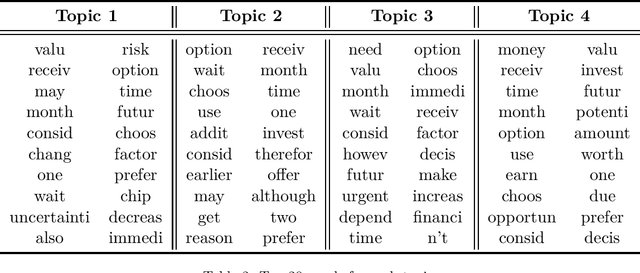
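The abstract's notion of alignment between neighborhoods in the input and feature spaces can be made concrete with a k-nearest-neighbor overlap score. The sketch below is illustrative only: the function names `knn` and `neighborhood_preservation` are hypothetical, and it uses plain Euclidean neighbors on both sides because the abstract does not specify ALLE's adaptive metric. It is not the paper's evaluation procedure.

```python
import math

def knn(points, k):
    """Return, for each point, the set of indices of its k nearest neighbors
    under Euclidean distance (an assumption; ALLE's metric is not given here)."""
    result = []
    for i, p in enumerate(points):
        others = (j for j in range(len(points)) if j != i)
        order = sorted(others, key=lambda j: math.dist(p, points[j]))
        result.append(set(order[:k]))
    return result

def neighborhood_preservation(high, low, k):
    """Average fraction of each point's k neighbors in the input space
    that are also among its k neighbors in the embedding."""
    n_high, n_low = knn(high, k), knn(low, k)
    shared = sum(len(a & b) for a, b in zip(n_high, n_low))
    return shared / (k * len(high))

# Toy data: four collinear points in 3-D and two candidate 1-D embeddings.
high = [(0, 0, 0), (1, 0, 0), (2, 0, 0), (3, 0, 0)]
faithful = [(0,), (1,), (2,), (3,)]    # keeps the original ordering
scrambled = [(0,), (3,), (1,), (2,)]   # breaks the original ordering

score_faithful = neighborhood_preservation(high, faithful, k=1)
score_scrambled = neighborhood_preservation(high, scrambled, k=1)
```

A score near 1 means the embedding keeps most input-space neighbors together; a lower score means neighborhoods were torn apart.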

Language, Time Preferences, and Consumer Behavior: Evidence from Large Language Models

May 04, 2023

Abstract: Language has a strong influence on our perceptions of time and rewards. This raises the question of whether large language models, when asked in different languages, show different preferences for rewards over time and if their choices are similar to those of humans. In this study, we analyze the responses of GPT-3.5 (hereafter referred to as GPT) to prompts in multiple languages, exploring preferences between smaller, sooner rewards and larger, later rewards. Our results show that GPT displays greater patience when prompted in languages with weak future tense references (FTR), such as German and Mandarin, compared to languages with strong FTR, like English and French. These findings are consistent with existing literature and suggest a correlation between GPT's choices and the preferences of speakers of these languages. However, further analysis reveals that the preference for earlier or later rewards does not systematically change with reward gaps, indicating a lexicographic preference for earlier payments. While GPT may capture intriguing variations across languages, our findings indicate that the choices made by these models do not correspond to those of human decision-makers.

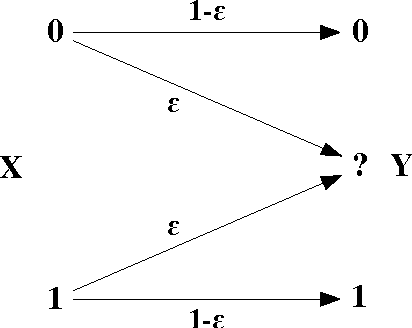

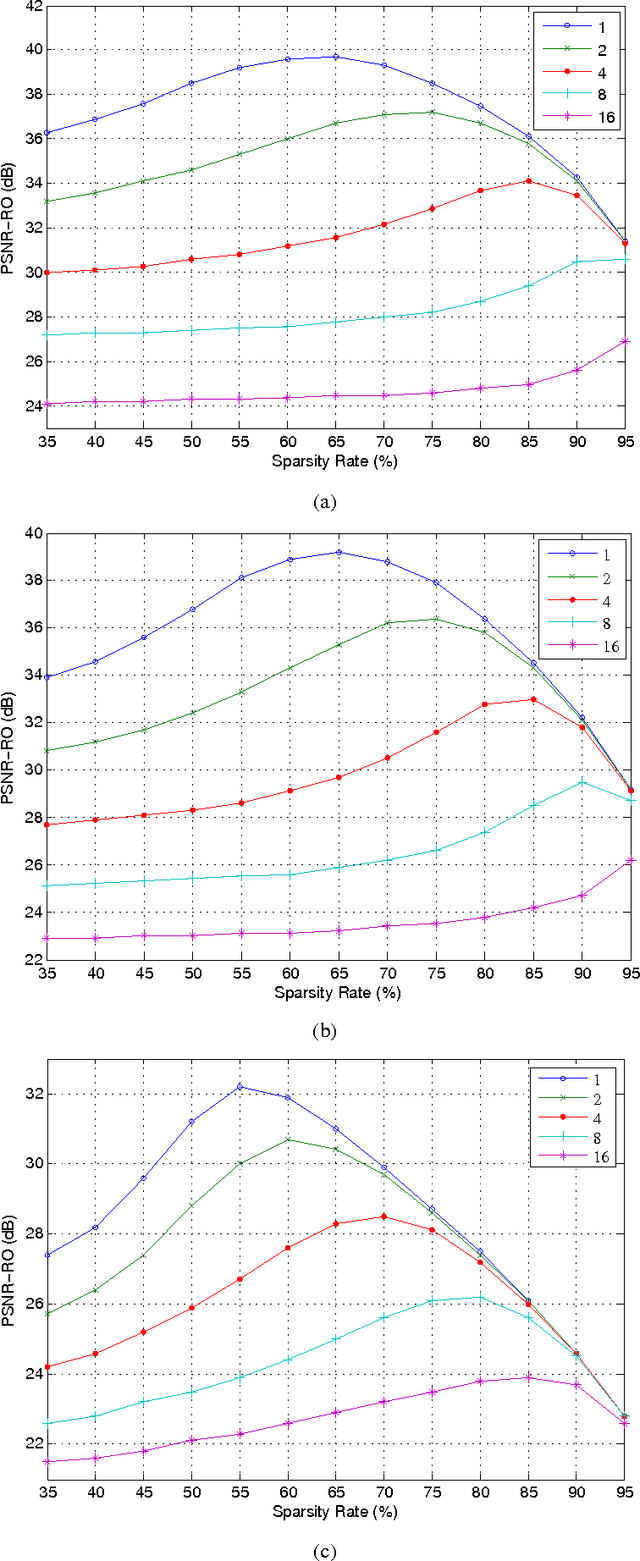

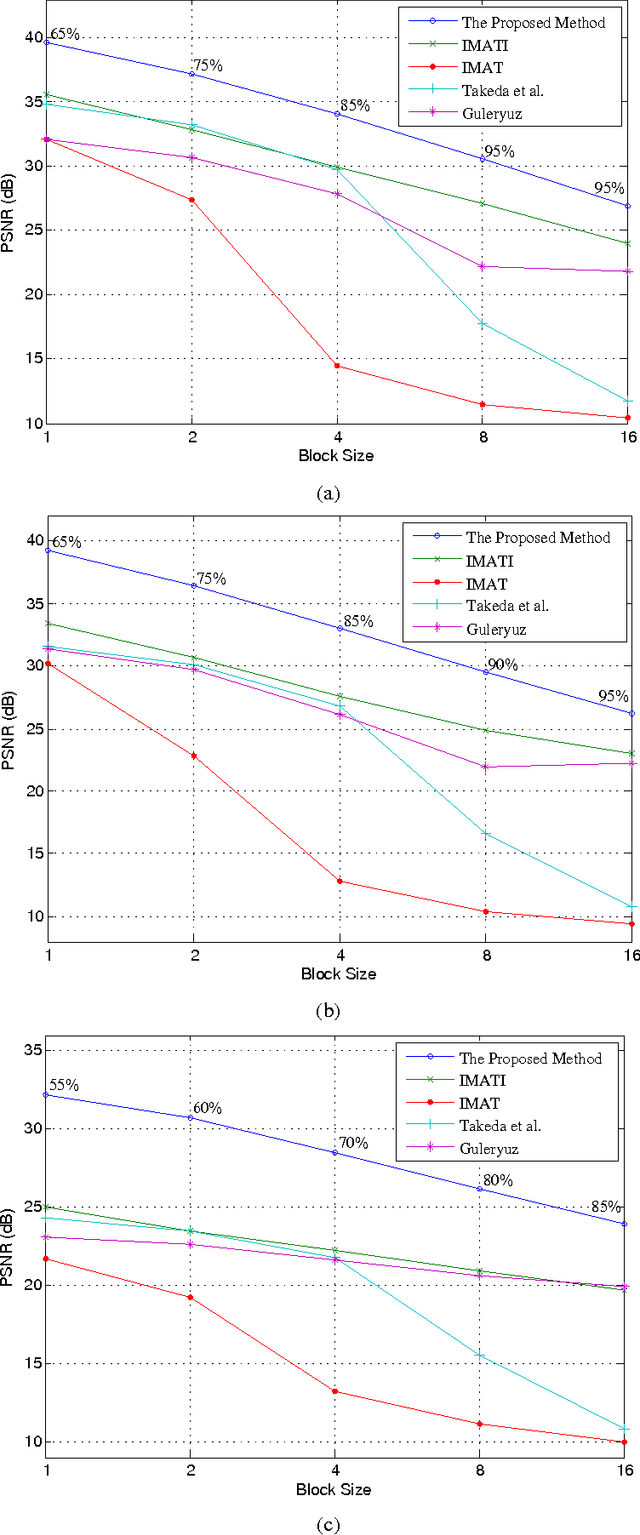

Image Block Loss Restoration Using Sparsity Pattern as Side Information

Aug 27, 2016

Abstract: In this paper, we propose a method for image block loss restoration based on the notion of sparse representation. We use the sparsity pattern as side information to efficiently restore block losses by iteratively imposing the constraints of spatial and transform domains on the corrupted image. Two novel features, including a pre-interpolation and a criterion for stopping the iterations, are proposed to improve the performance. Also, to deal with practical applications, we develop a technique to transmit the side information along with the image. In this technique, we first compress the side information and then embed its LDPC coded version in the least significant bits of the image pixels. This technique ensures the error-free transmission of the side information, while causing only a small perturbation on the transmitted image. Mathematical analysis and extensive simulations are performed to validate the method and investigate the efficiency of the proposed techniques. The results verify that the proposed method outperforms its counterparts for image block loss restoration.
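The least-significant-bit embedding step mentioned in the abstract can be illustrated with a minimal sketch. It assumes a flat list of 8-bit grayscale pixel values and an already-prepared bit payload; the compression and LDPC coding stages described in the abstract are omitted, and the helper names `embed_bits` and `extract_bits` are hypothetical rather than taken from the paper.

```python
def embed_bits(pixels, bits):
    """Overwrite the least significant bit of the first len(bits) pixels."""
    if len(bits) > len(pixels):
        raise ValueError("payload is longer than the carrier image")
    out = list(pixels)
    for i, bit in enumerate(bits):
        out[i] = (out[i] & ~1) | bit   # clear the LSB, then set it to the payload bit
    return out

def extract_bits(pixels, n):
    """Read back the least significant bit of the first n pixels."""
    return [p & 1 for p in pixels[:n]]

# Toy carrier: six 8-bit pixel values, and a 4-bit payload.
pixels = [200, 13, 77, 254, 0, 255]
payload = [1, 0, 0, 1]

stego = embed_bits(pixels, payload)
recovered = extract_bits(stego, len(payload))

# Each pixel changes by at most one gray level.
max_change = max(abs(a - b) for a, b in zip(pixels, stego))
```

Because only the lowest bit of each carrier pixel is touched, the perturbation is bounded by one gray level per pixel, which is consistent with the abstract's claim of a small perturbation on the transmitted image.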
