Alexandru Tifrea

Uniform versus uncertainty sampling: When being active is less efficient than staying passive

Dec 01, 2022

Abstract: It is widely believed that given the same labeling budget, active learning algorithms like uncertainty sampling achieve better predictive performance than passive learning (i.e. uniform sampling), albeit at a higher computational cost. Recent empirical evidence suggests that this added cost might be in vain, as uncertainty sampling can sometimes perform even worse than passive learning. While existing works offer different explanations in the low-dimensional regime, this paper shows that the underlying mechanism is entirely different in high dimensions: we prove for logistic regression that passive learning outperforms uncertainty sampling even for noiseless data and when using the uncertainty of the Bayes optimal classifier. Insights from our proof indicate that this high-dimensional phenomenon is exacerbated when the separation between the classes is small. We corroborate this intuition with experiments on 20 high-dimensional datasets spanning a diverse range of applications, from finance and histology to chemistry and computer vision.
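To make the two querying strategies compared in this abstract concrete, here is a minimal sketch of how each might choose which pool points to label. The function names, the toy probabilities, and the "closest to 0.5" uncertainty score are illustrative assumptions, not the paper's code; the paper's analysis concerns logistic regression in high dimensions, which this toy example does not reproduce.

```python
import numpy as np

def uniform_query(n_pool, budget, rng):
    """Passive learning: pick `budget` pool indices uniformly at random, without replacement."""
    return rng.choice(n_pool, size=budget, replace=False)

def uncertainty_query(probs, budget):
    """Uncertainty sampling: pick the `budget` points whose predicted
    P(y=1 | x) is closest to 0.5, i.e. closest to the decision boundary."""
    margin = np.abs(np.asarray(probs, dtype=float) - 0.5)
    return np.argsort(margin, kind="stable")[:budget]

# Hypothetical predicted probabilities for a pool of six unlabeled points.
rng = np.random.default_rng(0)
probs = np.array([0.02, 0.48, 0.91, 0.55, 0.30, 0.99])

picked_uncertain = uncertainty_query(probs, budget=2)  # points nearest the boundary
picked_uniform = uniform_query(len(probs), budget=2, rng=rng)  # random points
```

Uncertainty sampling concentrates the labeling budget near the current decision boundary, while uniform sampling spreads it across the pool; the abstract's claim is that in high dimensions the former can end up less label-efficient.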

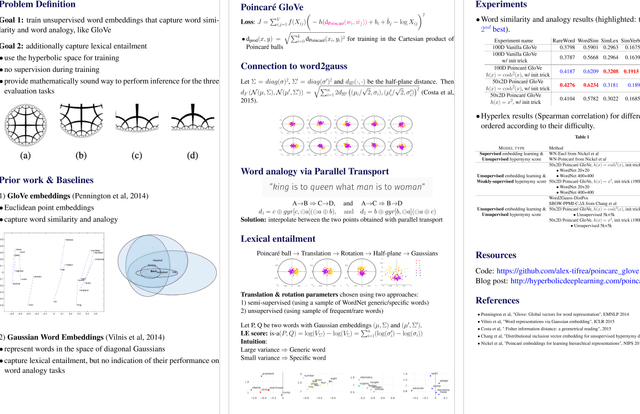

Poincaré GloVe: Hyperbolic Word Embeddings

Oct 15, 2018

Abstract: Words are not created equal. In fact, they form an aristocratic graph with a latent hierarchical structure that the next generation of unsupervised learned word embeddings should reveal. In this paper, driven by the notion of delta-hyperbolicity or tree-likeliness of a space, we propose to embed words in a Cartesian product of hyperbolic spaces which we theoretically connect with the Gaussian word embeddings and their Fisher distance. We adapt the well-known GloVe algorithm to learn unsupervised word embeddings in this type of Riemannian manifold. We explain how concepts from the Euclidean space such as parallel transport (used to solve analogy tasks) generalize to this new type of geometry. Moreover, we show that our embeddings exhibit hierarchical and hypernymy detection capabilities. We back up our findings with extensive experiments in which we outperform strong and popular baselines on the tasks of similarity, analogy and hypernymy detection.
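As a rough illustration of the hyperbolic geometry this abstract refers to, the sketch below computes the standard geodesic distance in the Poincaré ball model. It assumes the unit ball with curvature -1 and a hypothetical helper name `poincare_distance`; it is not taken from the paper's implementation, and it does not cover the Cartesian-product construction or the GloVe objective.

```python
import numpy as np

def poincare_distance(x, y):
    """Geodesic distance between two points strictly inside the unit Poincare ball.

    d(x, y) = arcosh(1 + 2 * ||x - y||^2 / ((1 - ||x||^2) * (1 - ||y||^2)))
    """
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    sq_dist = np.sum((x - y) ** 2)
    denom = (1.0 - np.sum(x ** 2)) * (1.0 - np.sum(y ** 2))
    return np.arccosh(1.0 + 2.0 * sq_dist / denom)

origin = np.zeros(2)
point = np.array([0.5, 0.0])
d_origin = poincare_distance(origin, point)  # equals 2 * artanh(0.5) = ln(3)
d_self = poincare_distance(point, point)     # a point is at distance 0 from itself
```

Distances grow rapidly as points approach the boundary of the ball, which is what lets tree-like, hierarchical structure embed with low distortion.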
