Poincaré GloVe: Hyperbolic Word Embeddings


Oct 15, 2018

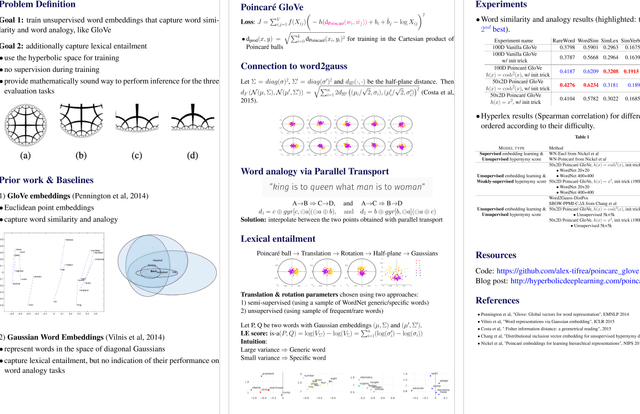

Words are not created equal. In fact, they form an aristocratic graph with a latent hierarchical structure that the next generation of unsupervised word embeddings should reveal. In this paper, driven by the notion of delta-hyperbolicity, or tree-likeness, of a space, we propose to embed words in a Cartesian product of hyperbolic spaces, which we theoretically connect to Gaussian word embeddings and their Fisher distance. We adapt the well-known GloVe algorithm to learn unsupervised word embeddings in this type of Riemannian manifold. We explain how concepts from Euclidean space, such as parallel transport (used to solve analogy tasks), generalize to this new type of geometry. Moreover, we show that our embeddings exhibit hierarchical and hypernymy detection capabilities. We back up our findings with extensive experiments in which we outperform strong and popular baselines on the tasks of similarity, analogy and hypernymy detection.
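To make "a Cartesian product of hyperbolic spaces" concrete, here is a minimal sketch of a distance on such a product, assuming each factor is a Poincaré ball with curvature -1. The function names `poincare_distance` and `product_distance` are hypothetical and are not taken from the paper's code. The per-factor distances are combined with the standard product Riemannian metric (square root of the sum of squared distances); the paper's actual training objective may use a different function of these distances.

```python
import math


def poincare_distance(x, y):
    """Geodesic distance between two points strictly inside the unit Poincare ball."""
    sq_diff = sum((a - b) ** 2 for a, b in zip(x, y))
    sq_norm_x = sum(a * a for a in x)
    sq_norm_y = sum(b * b for b in y)
    # d(x, y) = arcosh(1 + 2 ||x - y||^2 / ((1 - ||x||^2) (1 - ||y||^2)))
    arg = 1.0 + 2.0 * sq_diff / ((1.0 - sq_norm_x) * (1.0 - sq_norm_y))
    return math.acosh(arg)


def product_distance(xs, ys):
    """Distance on a Cartesian product of Poincare balls.

    xs and ys are lists of points, one point per factor space.
    Assumption: factor distances are combined with the product
    Riemannian metric, i.e. sqrt of the sum of squared distances.
    """
    return math.sqrt(sum(poincare_distance(x, y) ** 2 for x, y in zip(xs, ys)))


# Illustrative word vectors living in a product of two 2-dimensional balls.
word_a = [[0.0, 0.0], [0.0, 0.0]]
word_b = [[0.5, 0.0], [0.0, 0.5]]
print(product_distance(word_a, word_b))
```

Points near the boundary of a ball are far from everything else, which is what lets a hierarchy spread out: general words can sit near the origin and specific ones near the edge.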
