Alexandre Péré

Autonomous Goal Exploration using Learned Goal Spaces for Visuomotor Skill Acquisition in Robots

Jun 10, 2019

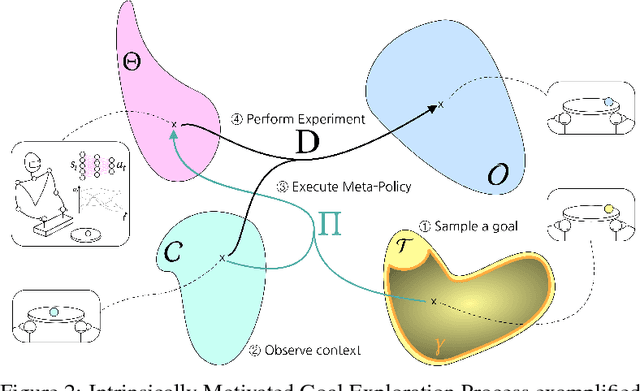

Abstract: The automatic and efficient discovery of skills, without supervision, by long-lived autonomous agents remains a challenge for Artificial Intelligence. Intrinsically Motivated Goal Exploration Processes give learning agents a human-inspired mechanism to sequentially select goals to achieve. This approach offers a new perspective on the lifelong learning problem, with promising results in both simulated and real-world experiments. Until recently, these algorithms were restricted to domains where experimenter knowledge was available, since the Goal Space used by the agents was built on engineered feature extractors. Recent advances in deep representation learning enable new ways of designing those feature extractors directly from the agent's experience. Recent work has shown the potential of these methods on simple yet challenging simulated domains. In this paper, we present recent results showing that these principles apply to a real-world robotic setup, where a 6-joint robotic arm learns to manipulate a ball inside an arena by choosing goals in a space learned from its past experience.
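
As a rough illustration of how a goal exploration process sequentially selects goals, here is a minimal Python sketch of a random goal exploration loop. It is not the authors' implementation: the 2-joint planar arm `toy_arm`, the function `goal_exploration`, the nearest-neighbour inverse model, the Gaussian perturbation width `sigma` and the hand-defined goal box are all hypothetical choices made for illustration. In particular, the goal space here is the raw end-effector position, whereas the paper learns the goal space from the agent's experience.

```python
import math
import random


def toy_arm(params):
    """Hypothetical 2-joint planar arm with unit-length links: maps two joint
    angles to the (x, y) position of the end effector."""
    a1, a2 = params
    return (math.cos(a1) + math.cos(a1 + a2), math.sin(a1) + math.sin(a1 + a2))


def goal_exploration(env, n_params, n_bootstrap=20, n_iter=300, sigma=0.05, seed=0):
    """Random goal exploration with a nearest-neighbour inverse model (a sketch)."""
    rng = random.Random(seed)
    memory = []  # (policy parameters, outcome) pairs observed so far
    # Bootstrap: random motor babbling to obtain a first set of outcomes.
    for _ in range(n_bootstrap):
        theta = [rng.uniform(-math.pi, math.pi) for _ in range(n_params)]
        memory.append((theta, env(theta)))
    for _ in range(n_iter):
        # 1. Self-generate a goal, here uniformly in a box covering the reachable disk.
        goal = (rng.uniform(-2.0, 2.0), rng.uniform(-2.0, 2.0))
        # 2. Reuse the parameters whose past outcome was closest to the goal.
        theta, _ = min(memory, key=lambda m: math.dist(m[1], goal))
        # 3. Try a small random variation of those parameters and record the result.
        new_theta = [t + rng.gauss(0.0, sigma) for t in theta]
        memory.append((new_theta, env(new_theta)))
    return memory


memory = goal_exploration(toy_arm, n_params=2)
```

In a learned-goal-space setting, `env` would instead return a latent encoding of the observed scene (for example, camera images of the arena passed through a trained encoder), and goals would be sampled in that latent space rather than in a hand-defined box.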

Curiosity Driven Exploration of Learned Disentangled Goal Spaces

Nov 04, 2018

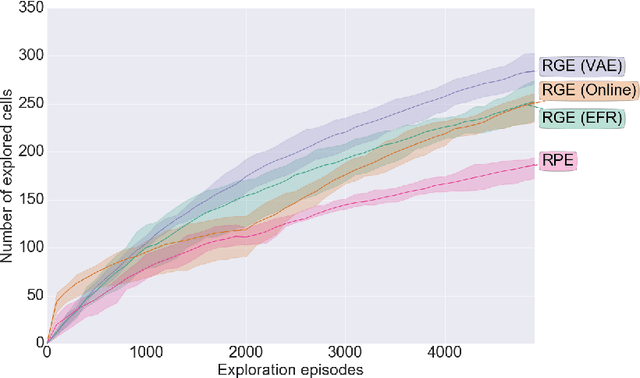

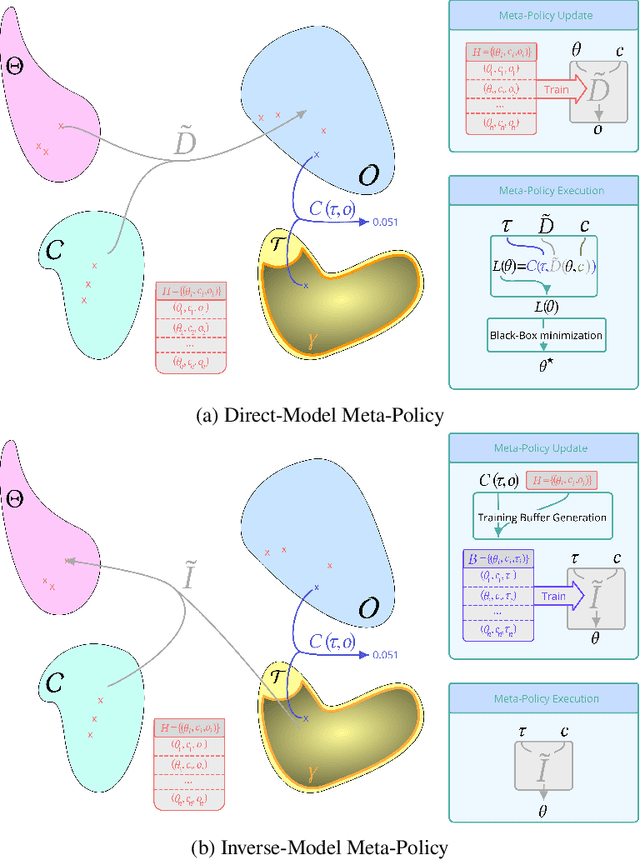

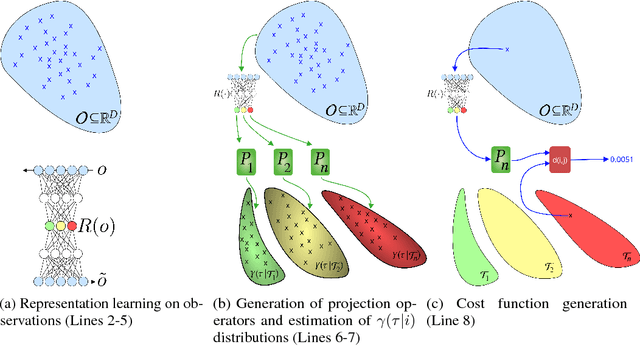

Abstract: Intrinsically motivated goal exploration processes enable agents to autonomously sample goals in order to efficiently explore complex environments with high-dimensional continuous actions. They have been applied successfully to real-world robots to discover repertoires of policies producing a wide diversity of effects. These algorithms have often relied on engineered goal spaces, but it was recently shown that deep representation learning algorithms can learn an adequate goal space in simple environments. However, in more complex environments containing multiple objects or distractors, efficient exploration requires that the structure of the goal space reflect that of the environment. In this paper we show that using a disentangled goal space leads to better exploration performance than an entangled goal space. We further show that when the representation is disentangled, one can leverage it by sampling goals that maximize learning progress in a modular manner. Finally, we show that the measure of learning progress used to drive curiosity-driven exploration can simultaneously be used to discover abstract, independently controllable features of the environment.

* The code used in the experiments is available at https://github.com/flowersteam/Curiosity_Driven_Goal_Exploration
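
The modular, learning-progress-based goal sampling described in this abstract can be illustrated with a small sketch. It is a simplified version built on stated assumptions, not the code from the linked repository: the class `ModularLPSampler`, the sliding-window estimate of learning progress (absolute difference between the mean competence over the older and newer halves of a window), and the `eps` random-exploration rate are hypothetical. Each module stands for a group of latent dimensions of the disentangled representation, and competence is assumed to be a score such as the negative distance between a goal and the reached outcome in that module.

```python
import random
from collections import deque


class ModularLPSampler:
    """Hypothetical sampler that picks which goal-space module (a group of latent
    dimensions) to target next, with probability proportional to its recent
    absolute learning progress."""

    def __init__(self, n_modules, window=20, eps=0.2, seed=0):
        self.n_modules = n_modules
        self.window = window
        self.eps = eps  # probability of choosing a module uniformly at random
        self.rng = random.Random(seed)
        # Per-module history of the last 2 * window competence scores.
        self.history = [deque(maxlen=2 * window) for _ in range(n_modules)]

    def progress(self, k):
        """Absolute change in mean competence between the older and newer half-window."""
        h = list(self.history[k])
        if len(h) < 2 * self.window:
            return 0.0  # not enough data yet to estimate progress
        old = sum(h[: self.window]) / self.window
        new = sum(h[self.window:]) / self.window
        return abs(new - old)

    def choose_module(self):
        lps = [self.progress(k) for k in range(self.n_modules)]
        if sum(lps) == 0.0 or self.rng.random() < self.eps:
            return self.rng.randrange(self.n_modules)
        return self.rng.choices(range(self.n_modules), weights=lps, k=1)[0]

    def update(self, k, competence):
        self.history[k].append(competence)


sampler = ModularLPSampler(n_modules=3, window=5, eps=0.0)
for step in range(10):
    sampler.update(0, step / 10)  # module 0: competence keeps improving
    sampler.update(1, 0.5)        # modules 1 and 2: competence stays flat
    sampler.update(2, 0.5)
```

A module whose competence stays flat, for instance one tied to a distractor the agent cannot control, gets near-zero learning progress and is rarely chosen when `eps` is small. This gives one intuition for why the same learning-progress signal can also be used to flag independently controllable features.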

Unsupervised Learning of Goal Spaces for Intrinsically Motivated Goal Exploration

Oct 09, 2018

Abstract: Intrinsically motivated goal exploration algorithms enable machines to discover repertoires of policies that produce a diversity of effects in complex environments. These exploration algorithms have been shown to allow real-world robots to acquire skills such as tool use in high-dimensional continuous state and action spaces. However, they have so far assumed that self-generated goals are sampled in a specifically engineered feature space, which limits their autonomy. In this work, we propose to use deep representation learning algorithms to learn an adequate goal space. This is a two-stage developmental approach: first, in a perceptual learning stage, deep learning algorithms use passive raw sensor observations of world changes to learn a corresponding latent space; then, in a second stage, goal exploration proceeds by sampling goals in this latent space. We present experiments where a simulated robot arm interacts with an object, and we show that exploration algorithms using such learned representations can match the performance obtained using engineered representations.
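
The two-stage approach can be sketched in pure Python. As an assumption, principal component analysis (computed by power iteration with deflation) stands in for the deep representation learning algorithms used in the paper, and goals are sampled uniformly in the bounding box of the encoded observations. The names `fit_linear_goal_space`, `encode` and `sample_goal` are hypothetical, and the synthetic observations are toy data, not experimental results.

```python
import math
import random


def fit_linear_goal_space(observations, k, n_iter=200, seed=0):
    """Stage 1 (perceptual learning): learn a k-dimensional latent space from
    passive observations. PCA is a linear stand-in for a deep encoder."""
    rng = random.Random(seed)
    n, d = len(observations), len(observations[0])
    mu = [sum(x[i] for x in observations) / n for i in range(d)]
    # Empirical covariance matrix of the centred observations.
    cov = [[sum((x[i] - mu[i]) * (x[j] - mu[j]) for x in observations) / n
            for j in range(d)] for i in range(d)]
    components = []
    for _ in range(k):
        # Power iteration converges to the leading eigenvector of cov.
        v = [rng.gauss(0.0, 1.0) for _ in range(d)]
        for _ in range(n_iter):
            w = [sum(cov[i][j] * v[j] for j in range(d)) for i in range(d)]
            norm = math.sqrt(sum(x * x for x in w)) or 1.0
            v = [x / norm for x in w]
        eigval = sum(v[i] * cov[i][j] * v[j] for i in range(d) for j in range(d))
        components.append(v)
        # Deflate so the next pass finds the next principal direction.
        cov = [[cov[i][j] - eigval * v[i] * v[j] for j in range(d)] for i in range(d)]
    return mu, components


def encode(x, mu, components):
    """Project an observation onto the learned latent (goal) space."""
    return [sum(c[i] * (x[i] - mu[i]) for i in range(len(mu))) for c in components]


def sample_goal(latents, rng):
    """Stage 2: sample a goal uniformly in the bounding box of the encoded observations."""
    k = len(latents[0])
    lo = [min(z[i] for z in latents) for i in range(k)]
    hi = [max(z[i] for z in latents) for i in range(k)]
    return [rng.uniform(lo[i], hi[i]) for i in range(k)]


# Toy usage: noisy 3-D observations that vary along a single direction.
rng = random.Random(1)
direction = [1 / 3, 2 / 3, 2 / 3]
observations = []
for _ in range(200):
    t = rng.uniform(-1.0, 1.0)
    observations.append([t * u + rng.gauss(0.0, 0.01) for u in direction])

mu, components = fit_linear_goal_space(observations, k=1)
latents = [encode(x, mu, components) for x in observations]
goal = sample_goal(latents, rng)
```

Swapping PCA for a nonlinear encoder such as a variational autoencoder would change only the first stage; the second stage still samples goals in whatever latent space the first stage produces.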
