Alexandre Heuillet

Efficient Automation of Neural Network Design: A Survey on Differentiable Neural Architecture Search

May 01, 2023

Abstract: In the past few years, Differentiable Neural Architecture Search (DNAS) has rapidly established itself as the leading approach to automating the discovery of deep neural network architectures. This rise is mainly due to the popularity of DARTS, one of the first major DNAS methods. In contrast with previous works based on Reinforcement Learning or Evolutionary Algorithms, DNAS is faster by several orders of magnitude and uses fewer computational resources. In this comprehensive survey, we focus specifically on DNAS and review recent approaches in this field. Furthermore, we propose a novel challenge-based taxonomy to classify DNAS methods. We also discuss the contributions brought to DNAS in the past few years and its impact on the global NAS field. Finally, we conclude by giving some insights into future research directions for the DNAS field.

NASiam: Efficient Representation Learning using Neural Architecture Search for Siamese Networks

Jan 31, 2023

Abstract: Siamese networks are among the most popular methods for self-supervised visual representation learning (SSL). Since hand labeling is costly, SSL can play a crucial part by allowing deep learning to train on large unlabeled datasets. Meanwhile, Neural Architecture Search (NAS) is becoming increasingly important as a technique to discover novel deep learning architectures. However, early NAS methods based on reinforcement learning or evolutionary algorithms suffered from prohibitive computational and memory costs. In contrast, differentiable NAS, a gradient-based approach, is much more efficient and has thus attracted most of the attention in the past few years. In this article, we present NASiam, a novel approach that uses differentiable NAS for the first time to improve the multilayer perceptron projector and predictor (encoder/predictor pair) architectures inside siamese-network-based contrastive learning frameworks (e.g., SimCLR, SimSiam, and MoCo) while preserving the simplicity of previous baselines. We crafted a search space designed explicitly for multilayer perceptrons, inside which we explored several alternatives to the standard ReLU activation function. We show that these new architectures allow ResNet backbone convolutional models to learn strong representations efficiently. NASiam reaches competitive performance on both small-scale (i.e., CIFAR-10/CIFAR-100) and large-scale (i.e., ImageNet) image classification datasets while costing only a few GPU hours. We discuss the composition of the NAS-discovered architectures and offer hypotheses on why they manage to prevent collapsing behavior. Our code is available at https://github.com/aheuillet/NASiam.

Collective eXplainable AI: Explaining Cooperative Strategies and Agent Contribution in Multiagent Reinforcement Learning with Shapley Values

Oct 04, 2021

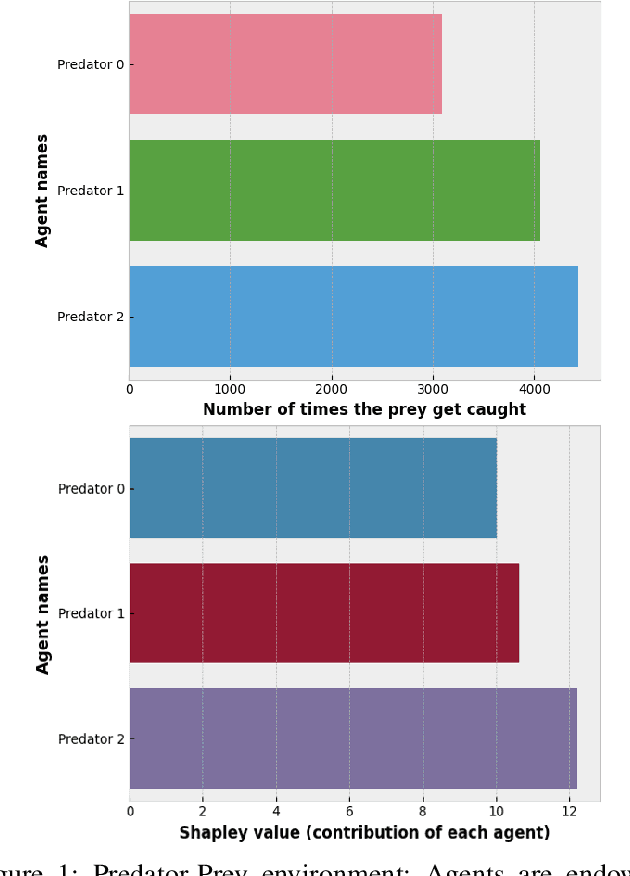

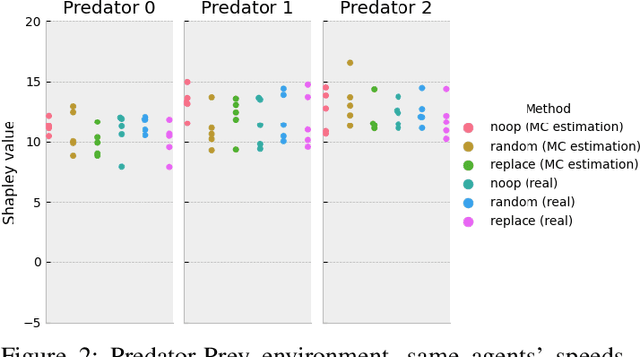

Abstract: While Explainable Artificial Intelligence (XAI) is expanding into more and more areas of application, little has been done to make deep Reinforcement Learning (RL) more comprehensible. As RL becomes ubiquitous and is used in critical and general public applications, it is essential to develop methods that make it better understood and more interpretable. This study proposes a novel approach to explain cooperative strategies in multiagent RL using Shapley values, a game theory concept used in XAI that successfully explains the rationale behind decisions taken by Machine Learning algorithms. Through testing common assumptions of this technique in two cooperation-centered, socially challenging multi-agent environments, this article argues that Shapley values are a pertinent way to evaluate the contribution of players in a cooperative multi-agent RL context. To mitigate the high overhead of this method, Shapley values are approximated using Monte Carlo sampling. Experimental results on Multiagent Particle and Sequential Social Dilemmas show that Shapley values succeed at estimating the contribution of each agent. These results could have implications that go beyond games in economics (e.g., for non-discriminatory decision making, ethical and responsible AI-derived decisions, or policy making under fairness constraints). They also expose how Shapley values only give general explanations about a model and cannot explain a single run or episode, nor justify precise actions taken by agents. Future work should focus on addressing these critical aspects.
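
The Monte Carlo approximation mentioned in this abstract can be illustrated with a minimal, generic sketch of permutation sampling. This is not the paper's implementation: the function `monte_carlo_shapley`, the toy reward `toy_team_reward`, and the agent names and weights are all hypothetical, chosen only to show how averaging marginal contributions over random agent orderings estimates each agent's Shapley value.

```python
import random
from typing import Callable, Dict, FrozenSet, Sequence


def monte_carlo_shapley(
    players: Sequence[str],
    value_fn: Callable[[FrozenSet[str]], float],
    num_samples: int = 2000,
    seed: int = 0,
) -> Dict[str, float]:
    """Estimate Shapley values by averaging marginal contributions
    over randomly sampled orderings of the players."""
    rng = random.Random(seed)
    totals = {p: 0.0 for p in players}
    for _ in range(num_samples):
        order = list(players)
        rng.shuffle(order)
        coalition: FrozenSet[str] = frozenset()
        previous = value_fn(coalition)
        for p in order:
            # Marginal contribution of p when joining the current coalition.
            coalition = coalition | {p}
            current = value_fn(coalition)
            totals[p] += current - previous
            previous = current
    return {p: total / num_samples for p, total in totals.items()}


# Hypothetical cooperative game (an assumption for illustration only):
# each agent adds a fixed reward, and agents "a" and "b" earn a bonus together.
WEIGHTS = {"a": 1.0, "b": 2.0, "c": 3.0}


def toy_team_reward(coalition: FrozenSet[str]) -> float:
    reward = sum(WEIGHTS[p] for p in coalition)
    if "a" in coalition and "b" in coalition:
        reward += 3.0  # synergy bonus shared by a and b
    return reward


estimates = monte_carlo_shapley(list(WEIGHTS), toy_team_reward)
print(estimates)
```

In this toy game the exact Shapley values are 2.5, 3.5, and 3.0 for `a`, `b`, and `c`, so the estimates for `a` and `b` should approach those values as `num_samples` grows. Note that the result describes average contribution across orderings, which matches the abstract's caveat that Shapley values give general explanations rather than justifying individual actions.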

D-DARTS: Distributed Differentiable Architecture Search

Aug 20, 2021

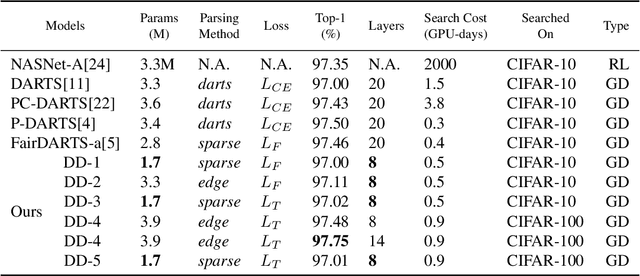

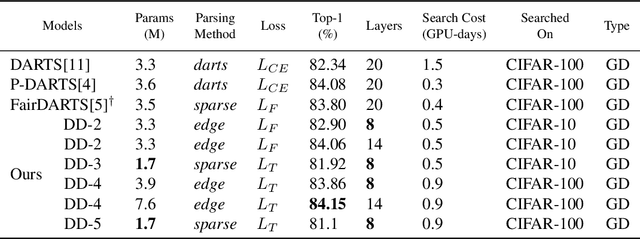

Abstract: Differentiable ARchiTecture Search (DARTS) is one of the most popular Neural Architecture Search (NAS) methods, drastically reducing search cost by resorting to Stochastic Gradient Descent (SGD) and weight-sharing. However, it also greatly reduces the search space, thus excluding potentially promising architectures from being discovered. In this paper, we propose D-DARTS, a novel solution that addresses this problem by nesting several neural networks at the cell level instead of using weight-sharing to produce more diversified and specialized architectures. Moreover, we introduce a novel algorithm which can derive deeper architectures from a few trained cells, increasing performance and saving computation time. Our solution is able to provide state-of-the-art results on CIFAR-10, CIFAR-100 and ImageNet while using significantly fewer parameters than previous baselines, resulting in more hardware-efficient neural networks.
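
As background for why DARTS can be optimized with SGD, the sketch below illustrates the general idea of a softmax-weighted mixture of candidate operations, which makes the choice of operation differentiable. It is a toy, scalar illustration of the original DARTS relaxation and not the D-DARTS method described in this abstract; the names `mixed_op`, `discretize`, and `CANDIDATE_OPS` are hypothetical.

```python
import math
from typing import Callable, List, Sequence


def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax over architecture parameters."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def mixed_op(
    x: float, ops: Sequence[Callable[[float], float]], alphas: Sequence[float]
) -> float:
    """Output of one edge: softmax-weighted sum of all candidate operations."""
    weights = softmax(alphas)
    return sum(w * op(x) for w, op in zip(weights, ops))


def discretize(op_names: Sequence[str], alphas: Sequence[float]) -> str:
    """After search, keep only the operation with the largest parameter."""
    best = max(range(len(alphas)), key=lambda i: alphas[i])
    return op_names[best]


# Hypothetical scalar stand-ins for real candidate layers (illustration only).
CANDIDATE_OPS = {
    "identity": lambda x: x,
    "zero": lambda x: 0.0,
    "double": lambda x: 2.0 * x,
}

names = list(CANDIDATE_OPS)
ops = list(CANDIDATE_OPS.values())
alphas = [0.1, -1.0, 2.0]  # assumed values; in practice these are learned by SGD

print(mixed_op(2.0, ops, alphas))
print(discretize(names, alphas))
```

In a real search, the architecture parameters and the network weights would be updated by gradient descent, and the operations would be layers such as convolutions or pooling rather than scalar functions.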

Explainability in Deep Reinforcement Learning

Aug 20, 2020

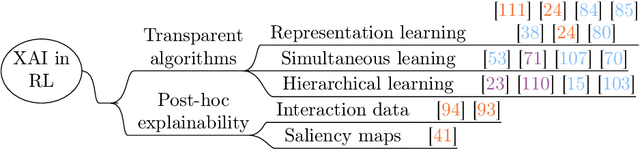

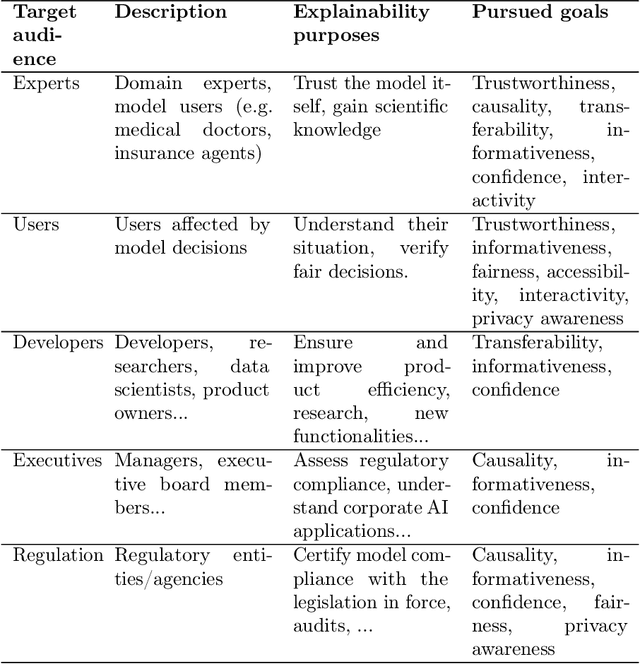

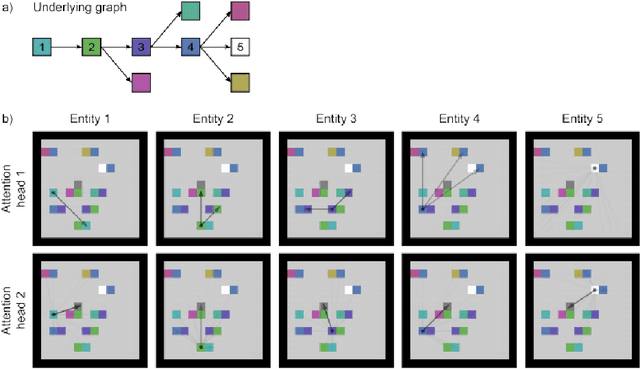

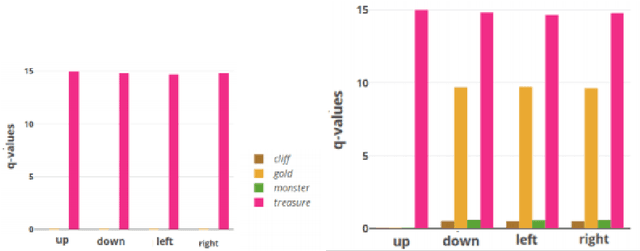

Abstract: A large body of explainable Artificial Intelligence (XAI) literature is emerging on feature relevance techniques that explain a deep neural network (DNN) output or explain models that ingest image source data. However, assessing how XAI techniques can help understand models beyond classification tasks, e.g. for reinforcement learning (RL), has not been extensively studied. We review recent works aimed at attaining Explainable Reinforcement Learning (XRL), a relatively new subfield of Explainable Artificial Intelligence, intended to be used in general public applications, with diverse audiences, requiring ethical, responsible and trustworthy algorithms. In critical situations where it is essential to justify and explain the agent's behaviour, better explainability and interpretability of RL models could help gain scientific insight into the inner workings of what is still considered a black box. We evaluate mainly studies directly linking explainability to RL, and split these into two categories according to the way the explanations are generated: transparent algorithms and post-hoc explainability. We also review the most prominent XAI works through the lens of how they could potentially inform the further deployment of the latest advances in RL, in the demanding present and future of everyday problems.
