Alexander Fonarev

Riemannian Optimization for Skip-Gram Negative Sampling

Apr 26, 2017

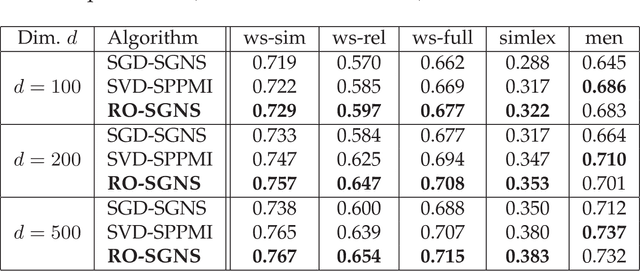

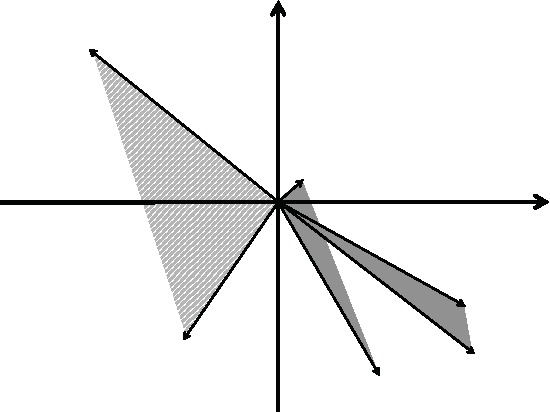

Abstract: The Skip-Gram Negative Sampling (SGNS) word embedding model, well known from its implementation in the "word2vec" software, is usually optimized by stochastic gradient descent. However, the optimization of the SGNS objective can be viewed as a search for a good matrix under a low-rank constraint. The standard way to solve this type of problem is to apply the Riemannian optimization framework and optimize the SGNS objective over the manifold of the required low-rank matrices. In this paper, we propose an algorithm that optimizes the SGNS objective using Riemannian optimization and demonstrate its superiority over popular competitors, such as the original method for training SGNS and SVD over the SPPMI matrix.

Efficient Rectangular Maximal-Volume Algorithm for Rating Elicitation in Collaborative Filtering

Oct 16, 2016

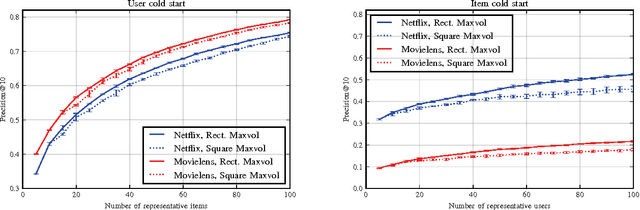

Abstract: The cold start problem in collaborative filtering can be solved by asking new users to rate a small seed set of representative items or by asking representative users to rate a new item. The question is how to build a seed set that gives enough preference information to make good recommendations. One of the most successful approaches, called Representative Based Matrix Factorization, is based on the Maxvol algorithm. Unfortunately, this approach has one important limitation: a seed set of a particular size requires a rating matrix factorization of fixed rank that must coincide with that size. This is not necessarily optimal in the general case. In the current paper, we introduce a fast algorithm for an analytical generalization of this approach that we call Rectangular Maxvol. It allows the rank of the factorization to be lower than the required size of the seed set. Moreover, the paper includes a theoretical analysis of the method's error, a complexity analysis of the existing methods, and a comparison with state-of-the-art approaches.
