Alex Smith

Demo: Untethered Haptic Teleoperation for Nuclear Decommissioning using a Low-Power Wireless Control Technology

Jun 27, 2022

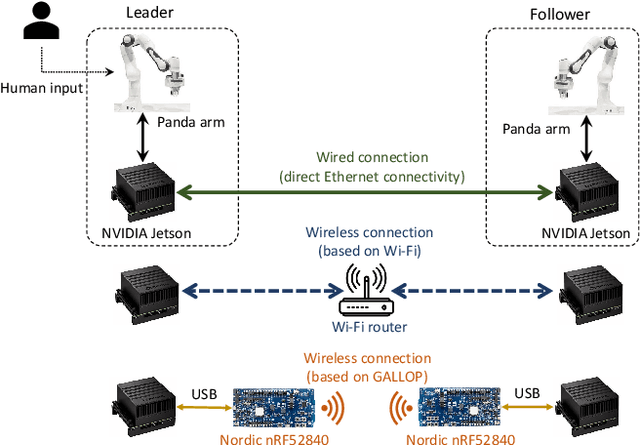

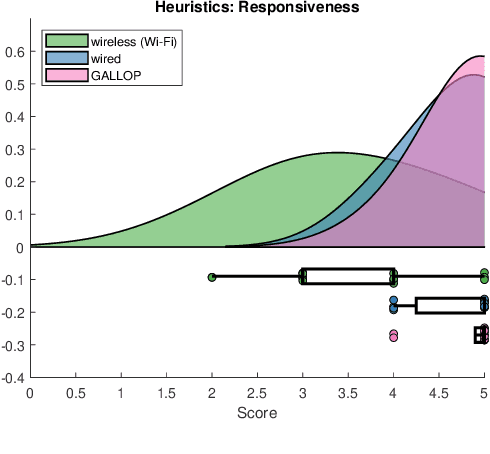

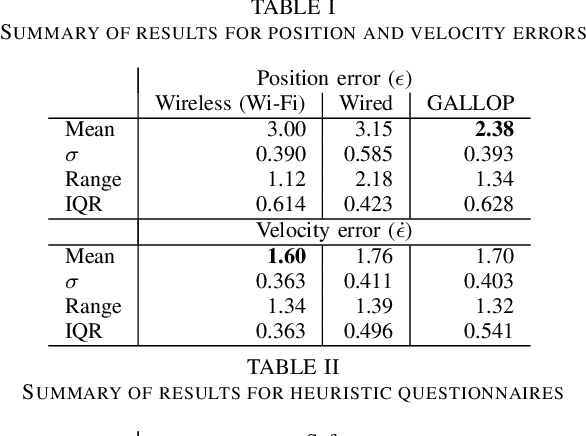

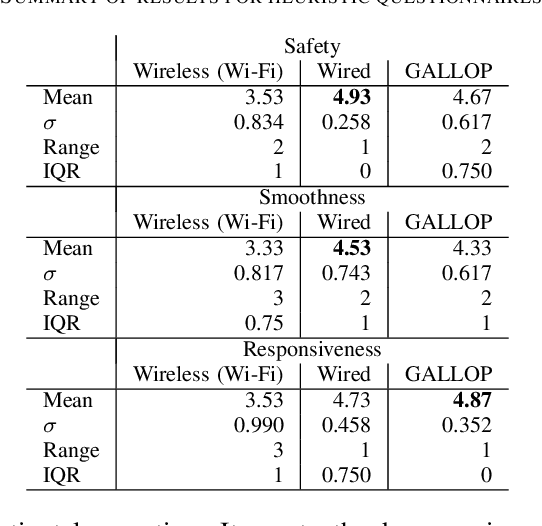

Abstract: Haptic teleoperation is typically realized through wired networking technologies (e.g., Ethernet), which guarantee the performance of control loops closed over the communication medium, particularly in terms of latency, jitter, and reliability. This demonstration shows the capability of conducting haptic teleoperation over a novel low-power wireless control technology, called GALLOP, in a nuclear decommissioning use case, and shows that GALLOP is viable for meeting the latency, timeliness, and safety requirements of haptic teleoperation. Evaluation conducted as part of the demonstration reveals that GALLOP, which has been implemented on an off-the-shelf Bluetooth 5.0 chipset, can replace a conventional wired TCP/IP connection and outperforms a WiFi-based wireless solution in the same use case.
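
The abstract evaluates the control link in terms of latency, jitter, and reliability. As a rough illustration of what those three numbers mean for a single packet stream, here is a minimal sketch. It is not the evaluation method used in the demonstration. It assumes one-way timestamps taken from synchronized clocks and keyed by a hypothetical packet sequence number, defines jitter simply as the mean absolute difference between consecutive latencies (a simplification, not the smoothed RFC 3550 estimator), and defines reliability as the fraction of sent packets that arrived. The function name `loop_metrics` is hypothetical.

```python
import math
from statistics import mean


def loop_metrics(sent_ms, recv_ms, expected):
    """Summarize latency, jitter, and reliability for one control stream.

    Hypothetical helper, assuming:
      sent_ms:  dict of sequence number -> send timestamp (ms)
      recv_ms:  dict of sequence number -> receive timestamp (ms), only for
                packets that actually arrived
      expected: total number of packets that were sent
    Clocks on both ends are assumed to be synchronized.
    """
    # Only packets that have both a send and a receive timestamp count as delivered.
    delivered = sorted(seq for seq in recv_ms if seq in sent_ms)
    # One-way latency of each delivered packet, in sequence order.
    latencies = [recv_ms[s] - sent_ms[s] for s in delivered]
    # Simplified jitter: mean absolute change between consecutive latencies.
    diffs = [abs(b - a) for a, b in zip(latencies, latencies[1:])]
    return {
        "mean_latency_ms": mean(latencies) if latencies else math.nan,
        "jitter_ms": mean(diffs) if diffs else 0.0,
        "reliability": len(delivered) / expected if expected else 0.0,
    }


# Example: four packets sent 1 ms apart, packet 2 lost.
print(loop_metrics({0: 0.0, 1: 1.0, 2: 2.0, 3: 3.0}, {0: 2.0, 1: 3.5, 3: 5.0}, 4))
```

A haptic control loop cares about all three numbers at once. A low mean latency with high jitter or frequent losses can still destabilize force feedback, which is why the abstract reports them together.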

SpaceEdit: Learning a Unified Editing Space for Open-Domain Image Editing

Nov 30, 2021

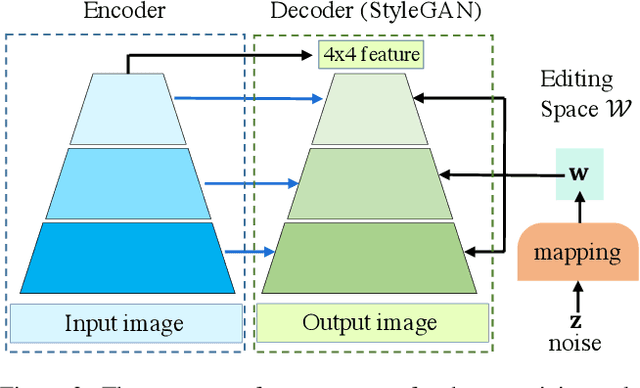

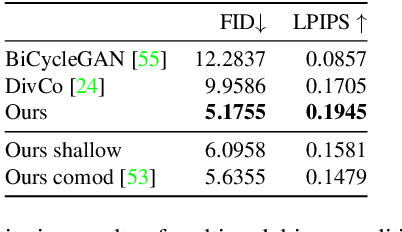

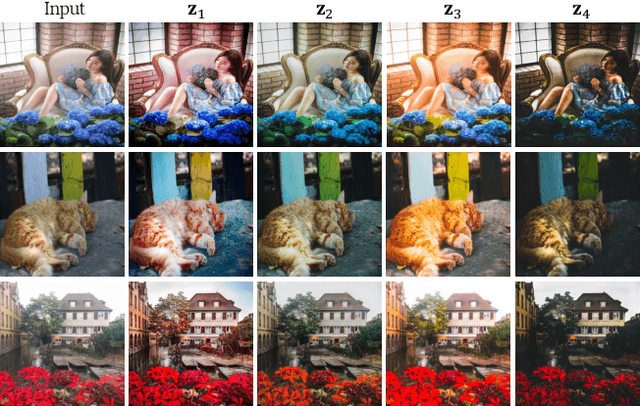

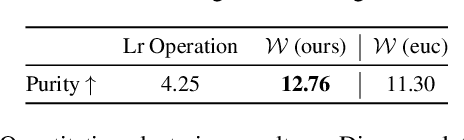

Abstract: Recently, large pretrained models (e.g., BERT, StyleGAN, CLIP) have shown great knowledge transfer and generalization capability on various downstream tasks within their domains. Inspired by these efforts, in this paper we propose a unified model for open-domain image editing that focuses on color and tone adjustment of open-domain images while keeping their original content and structure. Our model learns a unified editing space that is more semantic, intuitive, and easy to manipulate than the operation space (e.g., contrast, brightness, color curve) used in many existing photo editing software packages. Our model follows the image-to-image translation framework, consisting of an image encoder and decoder, and is trained on pairs of before- and after-images to produce multimodal outputs. We show that by inverting image pairs into latent codes of the learned editing space, our model can be leveraged for various downstream editing tasks such as language-guided image editing, personalized editing, editing-style clustering, and retrieval. We extensively study the unique properties of the editing space in experiments and demonstrate superior performance on the aforementioned tasks.
