Alessio Arcudi

Function Based Isolation Forest (FuBIF): A Unifying Framework for Interpretable Isolation-Based Anomaly Detection

Nov 08, 2025

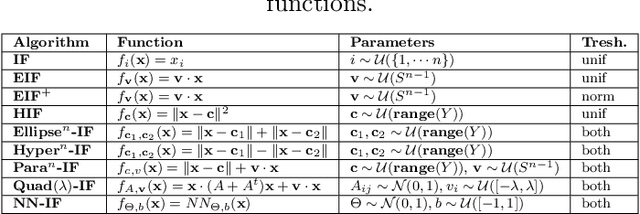

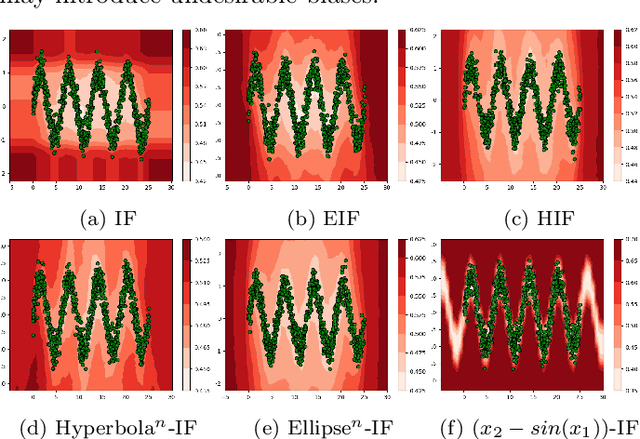

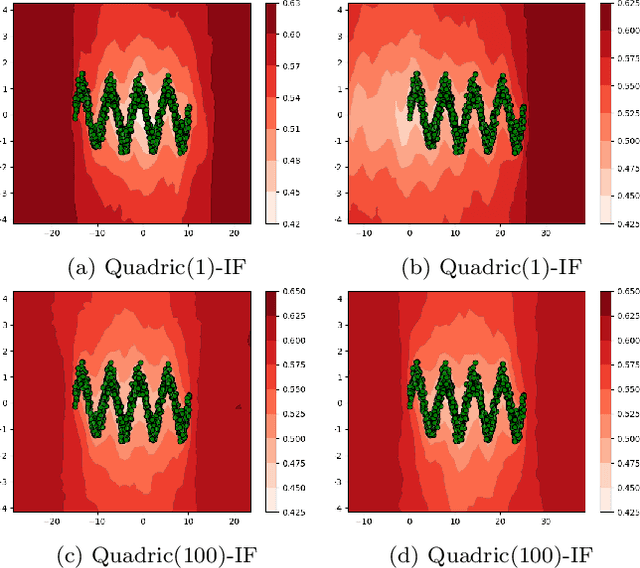

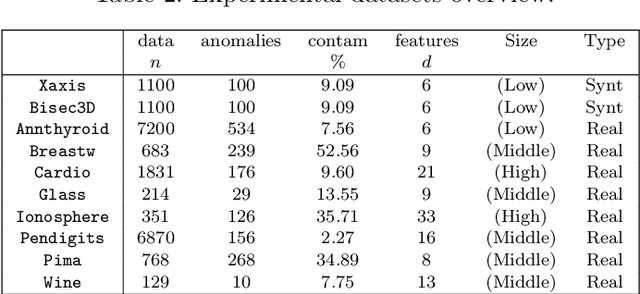

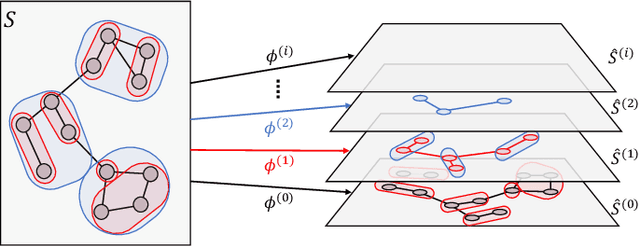

Abstract: Anomaly Detection (AD) is evolving through algorithms capable of identifying outliers in complex datasets. The Isolation Forest (IF), a pivotal AD technique, exhibits adaptability limitations and biases. This paper introduces the Function-based Isolation Forest (FuBIF), a generalization of IF that enables the use of real-valued functions for dataset branching, significantly enhancing the flexibility of evaluation tree construction. Complementing this, the FuBIF Feature Importance (FuBIFFI) algorithm extends the interpretability in IF-based approaches by providing feature importance scores across possible FuBIF models. This paper details the operational framework of FuBIF, evaluates its performance against established methods, and explores its theoretical contributions. An open-source implementation is provided to encourage further research and ensure reproducibility.
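The idea of branching on a real-valued function can be illustrated with a minimal sketch. This is not the authors' implementation: all names here (`build_tree`, `axis_function`, `hyperplane_function`, `fit_forest`, `anomaly_score`) are hypothetical, and the sketch only assumes that each tree node draws a function f, evaluates it on the points at that node, and splits at a random threshold within the observed range of f. Under that assumption, a coordinate projection gives the classic axis-parallel Isolation Forest split, and a random linear form gives an oblique split in the style of the Extended Isolation Forest.

```python
import math
import random

EULER_GAMMA = 0.5772156649


def c_factor(n):
    # Expected path length of an unsuccessful binary-search-tree lookup over
    # n points; the usual Isolation Forest depth normalizer.
    if n <= 1:
        return 0.0
    if n == 2:
        return 1.0
    return 2.0 * (math.log(n - 1) + EULER_GAMMA) - 2.0 * (n - 1) / n


def axis_function(rng, dim):
    # Projection on one random coordinate: the axis-parallel IF split.
    j = rng.randrange(dim)
    return lambda x: x[j]


def hyperplane_function(rng, dim):
    # Random linear form w . x: an oblique, EIF-style split.
    w = [rng.gauss(0.0, 1.0) for _ in range(dim)]
    return lambda x: sum(wi * xi for wi, xi in zip(w, x))


def build_tree(points, sample_function, rng, depth=0, max_depth=8):
    # Stop when the node is isolated or the depth limit is reached.
    if len(points) <= 1 or depth >= max_depth:
        return {"size": len(points)}
    f = sample_function(rng, len(points[0]))
    values = [f(p) for p in points]
    lo, hi = min(values), max(values)
    if lo == hi:  # f cannot separate these points
        return {"size": len(points)}
    threshold = rng.uniform(lo, hi)
    left = [p for p, v in zip(points, values) if v < threshold]
    right = [p for p, v in zip(points, values) if v >= threshold]
    return {
        "f": f,
        "threshold": threshold,
        "left": build_tree(left, sample_function, rng, depth + 1, max_depth),
        "right": build_tree(right, sample_function, rng, depth + 1, max_depth),
    }


def path_length(node, x, depth=0):
    # Leaves store only their size; add the expected remaining depth.
    if "f" not in node:
        return depth + c_factor(node["size"])
    side = "left" if node["f"](x) < node["threshold"] else "right"
    return path_length(node[side], x, depth + 1)


def fit_forest(data, sample_function, n_trees=100, subsample=64, seed=0):
    rng = random.Random(seed)
    psi = min(subsample, len(data))
    trees = [build_tree(rng.sample(data, psi), sample_function, rng)
             for _ in range(n_trees)]
    return trees, psi


def anomaly_score(forest, x):
    # Standard IF score: shorter average paths give scores closer to 1.
    trees, psi = forest
    mean_depth = sum(path_length(t, x) for t in trees) / len(trees)
    return 2.0 ** (-mean_depth / c_factor(psi))


# Toy data (an assumption for illustration): a Gaussian cluster plus one far point.
data_rng = random.Random(1)
data = [(data_rng.gauss(0, 1), data_rng.gauss(0, 1)) for _ in range(200)]
data.append((10.0, 10.0))
forest = fit_forest(data, hyperplane_function)
inlier_score = anomaly_score(forest, (0.0, 0.0))
outlier_score = anomaly_score(forest, (10.0, 10.0))
```

Swapping `hyperplane_function` for `axis_function` (or any other sampler that returns a real-valued function of a point) changes the family of splits without touching the tree-building or scoring code, which is the kind of flexibility the abstract describes.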

Multi-layer Abstraction for Nested Generation of Options (MANGO) in Hierarchical Reinforcement Learning

Aug 25, 2025

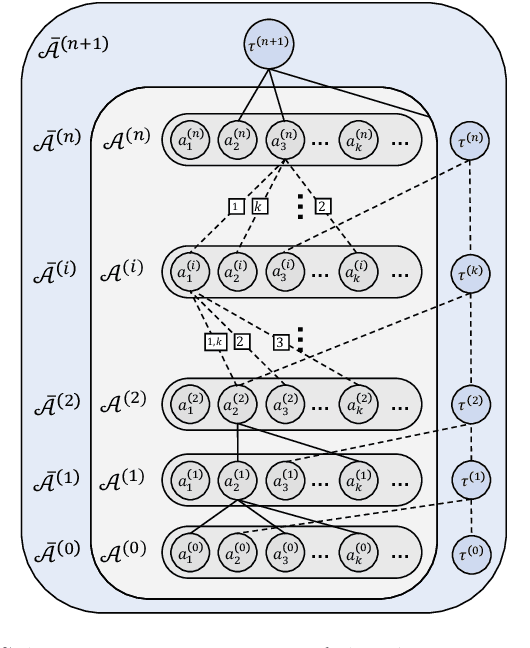

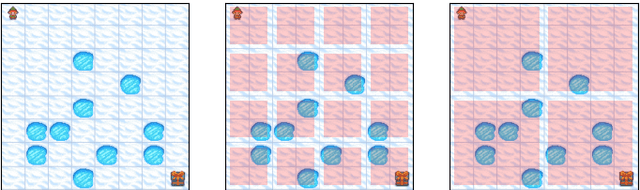

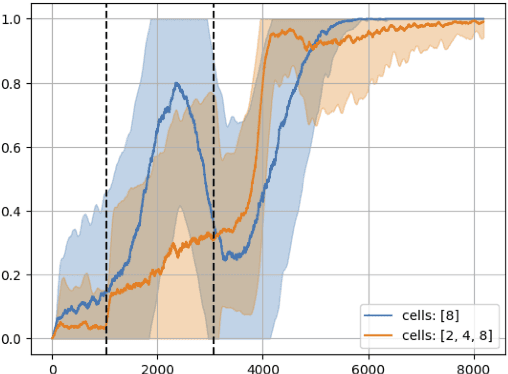

Abstract: This paper introduces MANGO (Multilayer Abstraction for Nested Generation of Options), a novel hierarchical reinforcement learning framework designed to address the challenges of long-term sparse reward environments. MANGO decomposes complex tasks into multiple layers of abstraction, where each layer defines an abstract state space and employs options to modularize trajectories into macro-actions. These options are nested across layers, allowing for efficient reuse of learned movements and improved sample efficiency. The framework introduces intra-layer policies that guide the agent's transitions within the abstract state space, and task actions that integrate task-specific components such as reward functions. Experiments conducted in procedurally-generated grid environments demonstrate substantial improvements in both sample efficiency and generalization capabilities compared to standard RL methods. MANGO also enhances interpretability by making the agent's decision-making process transparent across layers, which is particularly valuable in safety-critical and industrial applications. Future work will explore automated discovery of abstractions and abstract actions, adaptation to continuous or fuzzy environments, and more robust multi-layer training strategies.

Interpretable Data-driven Anomaly Detection in Industrial Processes with ExIFFI

May 02, 2024

Abstract: Anomaly detection (AD) is a crucial process often required in industrial settings. Anomalies can signal underlying issues within a system, prompting further investigation. Industrial processes aim to streamline operations as much as possible, encompassing the production of the final product, making AD an essential means to reach this goal. Conventional anomaly detection methodologies typically classify observations as either normal or anomalous without providing insight into the reasons behind these classifications. Consequently, in light of the emergence of Industry 5.0, a more desirable approach involves providing interpretable outcomes, enabling users to understand the rationale behind the results. This paper presents the first industrial application of ExIFFI, a recently developed approach focused on the production of fast and efficient explanations for the Extended Isolation Forest (EIF) anomaly detection method. ExIFFI is tested on two publicly available industrial datasets, demonstrating superior effectiveness in explanations and computational efficiency with respect to other state-of-the-art explainable AD models.

ExIFFI and EIF+: Interpretability and Enhanced Generalizability to Extend the Extended Isolation Forest

Oct 09, 2023

Abstract: Anomaly detection, an essential unsupervised machine learning task, involves identifying unusual behaviors within complex datasets and systems. While machine learning algorithms and decision support systems (DSSs) offer effective solutions for this task, simply pinpointing anomalies often falls short in real-world applications. Users of these systems often require insight into the underlying reasons behind predictions to facilitate Root Cause Analysis and foster trust in the model. However, due to the unsupervised nature of anomaly detection, creating interpretable tools is challenging. This work introduces EIF+, an enhanced variant of Extended Isolation Forest (EIF), designed to improve generalization capabilities. Additionally, we present ExIFFI, a novel approach that equips Extended Isolation Forest with interpretability features, specifically feature rankings. Experimental results provide a comprehensive comparative analysis of isolation-based approaches for anomaly detection, including synthetic and real dataset evaluations that demonstrate ExIFFI's effectiveness in providing explanations. We also illustrate how ExIFFI serves as a valid feature selection technique in unsupervised settings. To facilitate further research and reproducibility, we also provide open-source code to replicate the results.
