Alessandro Montanari

OmniBuds: A Sensory Earable Platform for Advanced Bio-Sensing and On-Device Machine Learning

Oct 07, 2024

Abstract:Sensory earables have evolved from basic audio enhancement devices into sophisticated platforms for clinical-grade health monitoring and wellbeing management. This paper introduces OmniBuds, an advanced sensory earable platform integrating multiple biosensors and onboard computation powered by a machine learning accelerator, all within a real-time operating system (RTOS). The platform's dual-ear symmetric design, equipped with precisely positioned kinetic, acoustic, optical, and thermal sensors, enables highly accurate and real-time physiological assessments. Unlike conventional earables that rely on external data processing, OmniBuds leverage real-time onboard computation to significantly enhance system efficiency, reduce latency, and safeguard privacy by processing data locally. This capability includes executing complex machine learning models directly on the device. We provide a comprehensive analysis of OmniBuds' design, hardware and software architecture demonstrating its capacity for multi-functional applications, accurate and robust tracking of physiological parameters, and advanced human-computer interaction.

SensiX++: Bringing MLOPs and Multi-tenant Model Serving to Sensory Edge Devices

Sep 08, 2021

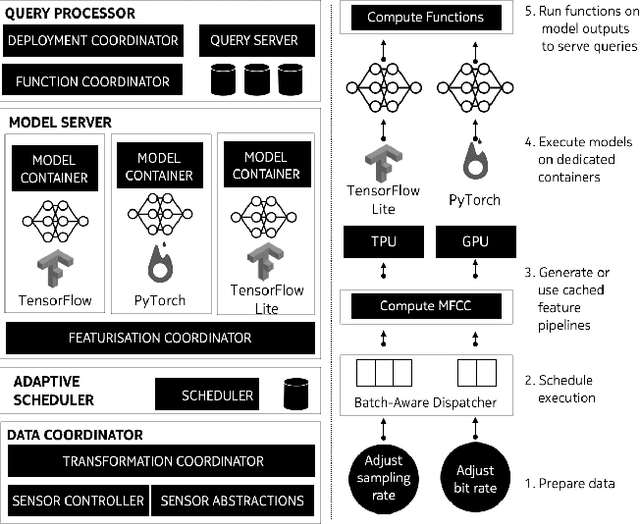

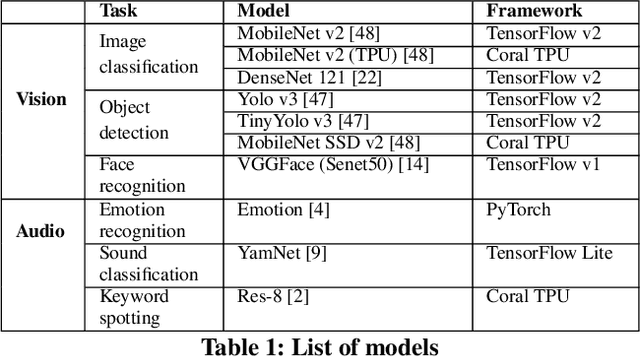

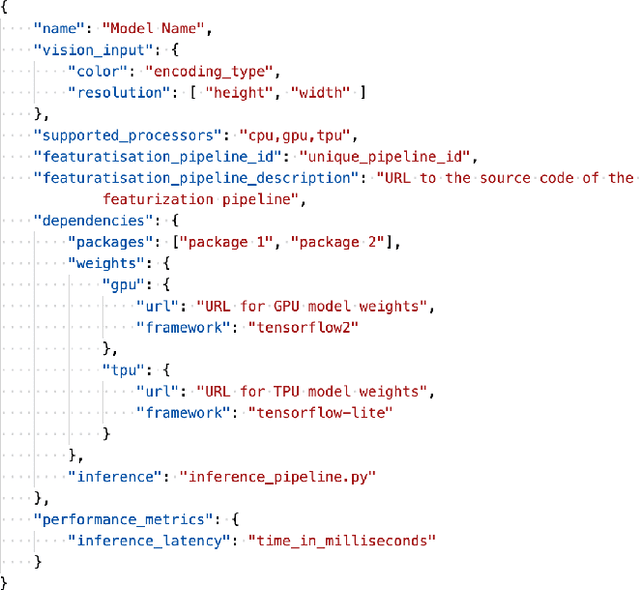

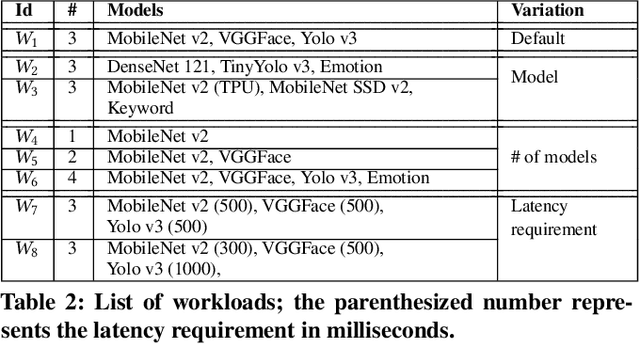

Abstract:We present SensiX++, a multi-tenant runtime for adaptive model execution with integrated MLOps on edge devices, e.g., a camera, a microphone, or IoT sensors. SensiX++ operates on two fundamental principles: highly modular componentisation to externalise data operations with clear abstractions, and document-centric manifestation for system-wide orchestration. First, a data coordinator manages the lifecycle of sensors and serves models with correct data through automated transformations. Next, a resource-aware model server executes multiple models in isolation through model abstraction, pipeline automation and feature sharing. An adaptive scheduler then orchestrates the best-effort executions of multiple models across heterogeneous accelerators, balancing latency and throughput. Finally, microservices with REST APIs serve synthesised model predictions, system statistics, and continuous deployment. Collectively, these components enable SensiX++ to serve multiple models efficiently with fine-grained control on edge devices while minimising data operation redundancy, managing data and device heterogeneity, reducing resource contention and removing manual MLOps. We benchmark SensiX++ with ten different vision and acoustics models across various multi-tenant configurations on different edge accelerators (Jetson AGX and Coral TPU) designed for sensory devices. We report on the overall throughput and quantified benefits of various automation components of SensiX++ and demonstrate its efficacy in significantly reducing operational complexity and lowering the effort to deploy, upgrade, reconfigure and serve embedded models on edge devices.

SensiX: A Platform for Collaborative Machine Learning on the Edge

Dec 04, 2020

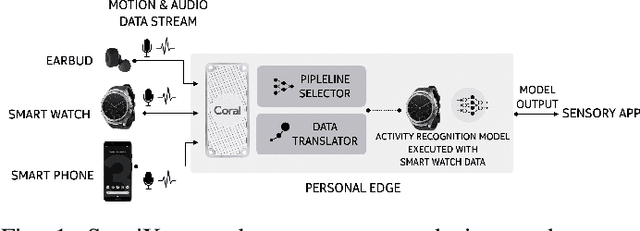

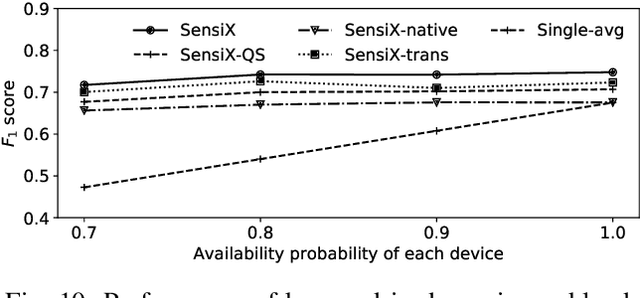

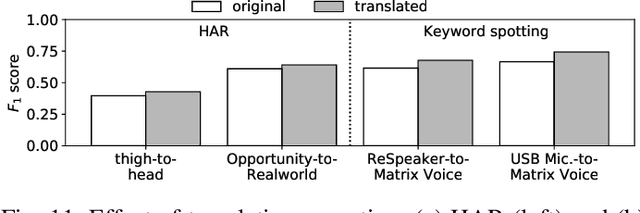

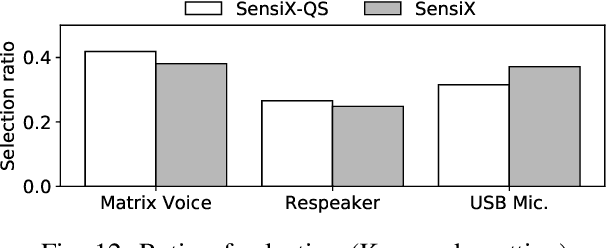

Abstract:The emergence of multiple sensory devices on or near a human body is uncovering new dynamics of extreme edge computing. In this, a powerful and resource-rich edge device such as a smartphone or a Wi-Fi gateway is transformed into a personal edge, collaborating with multiple devices to offer remarkable sensory applications, while harnessing the power of locality, availability, and proximity. Naturally, this transformation pushes us to rethink how to construct accurate, robust, and efficient sensory systems at the personal edge. For instance, how do we build a reliable activity tracker with multiple on-body IMU-equipped devices? While the accuracy of sensing models is improving, their runtime performance still suffers, especially in these emerging multi-device, personal edge environments. Two prime caveats that impact their performance are device and data variabilities, contributed by several runtime factors, including device availability, data quality, and device placement. To this end, we present SensiX, a personal edge platform that stays between sensor data and sensing models, and ensures best-effort inference under any condition while coping with device and data variabilities without demanding model engineering. SensiX externalises model execution away from applications, and comprises two essential functions: a translation operator for principled mapping of device-to-device data and a quality-aware selection operator to systematically choose the right execution path as a function of model accuracy. We report the design and implementation of SensiX and demonstrate its efficacy in developing motion and audio-based multi-device sensing systems. Our evaluation shows that SensiX offers a 7-13% increase in overall accuracy and up to 30% increase across different environment dynamics at the expense of 3 mW power overhead.
