Albara Ah Ramli

Harnessing FFT for Rapid Community Travel Distance and Step Estimation in Children with DMD

Apr 04, 2025

Abstract:Accurate estimation of gait characteristics, including step length, step velocity, and travel distance, is critical for assessing mobility in toddlers, children and teens with Duchenne muscular dystrophy (DMD) and typically developing (TD) peers. This study introduces a novel method leveraging Fast Fourier Transform (FFT)-derived step frequency from a single waist-worn consumer-grade accelerometer to predict gait parameters efficiently. The proposed FFT-based step frequency detection approach, combined with regression-derived stride length estimation, enables precise measurement of temporospatial gait features across various walking and running speeds. Our model, developed from a diverse cohort of children aged 3-16, demonstrated high accuracy in step length estimation (R^2 = 0.92, RMSE = 0.06) using only step frequency and height as inputs. Comparative analysis with ground-truth observations and AI-driven Walk4Me models validated the FFT-based method, showing strong agreement across step count, step frequency, step length, step velocity, and travel distance metrics. The results highlight the feasibility of using widely available mobile devices for gait assessment in real-world settings, offering a scalable solution for monitoring disease progression and mobility changes in individuals with DMD. Future work will focus on refining model performance and expanding applicability to additional movement disorders.
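As a rough illustration of the idea in this abstract, the sketch below estimates step frequency as the dominant spectral peak of an acceleration signal and then derives step count, step velocity, and travel distance from it. It is not the authors' implementation: the function names, the 0.5 to 5 Hz search band, the synthetic signal, and the step length passed in are all assumptions made for the example. The paper's regression for step length (from step frequency and height) is not reproduced here because its form and coefficients are not given in the abstract.

```python
import cmath
import math


def fft(x):
    """Recursive radix-2 Cooley-Tukey FFT; len(x) must be a power of two."""
    n = len(x)
    if n == 1:
        return [complex(x[0])]
    even = fft(x[0::2])
    odd = fft(x[1::2])
    out = [0j] * n
    for k in range(n // 2):
        t = cmath.exp(-2j * math.pi * k / n) * odd[k]
        out[k] = even[k] + t
        out[k + n // 2] = even[k] - t
    return out


def step_frequency_hz(accel, fs, fmin=0.5, fmax=5.0):
    """Dominant frequency (Hz) of the mean-removed signal within [fmin, fmax].

    The search band is an assumption for this sketch, not a value from the paper.
    """
    n = len(accel)
    mean = sum(accel) / n
    spectrum = fft([a - mean for a in accel])
    candidates = [k for k in range(1, n // 2) if fmin <= k * fs / n <= fmax]
    best_k = max(candidates, key=lambda k: abs(spectrum[k]))
    return best_k * fs / n


def gait_summary(step_freq_hz, duration_s, step_length_m):
    """Derive step count, velocity (m/s), and distance (m) from step frequency."""
    steps = step_freq_hz * duration_s
    velocity = step_freq_hz * step_length_m
    distance = steps * step_length_m
    return steps, velocity, distance


# Synthetic signal: a 2 Hz "step" component plus a weaker 1 Hz component.
fs = 64.0   # sampling rate in Hz (assumed)
n = 512     # number of samples (power of two, 8 seconds)
signal = [
    math.sin(2 * math.pi * 2.0 * i / fs) + 0.3 * math.sin(2 * math.pi * 1.0 * i / fs)
    for i in range(n)
]

freq = step_frequency_hz(signal, fs)
# The 0.5 m step length is a placeholder, not a measured or modeled value.
steps, velocity, distance = gait_summary(freq, n / fs, step_length_m=0.5)
```

In practice the step length would come from a fitted model rather than a constant, and real accelerometer data would likely need filtering and axis selection before the spectral peak is reliable.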

Automated Detection of Gait Events and Travel Distance Using Waist-worn Accelerometers Across a Typical Range of Walking and Running Speeds

Jul 10, 2023

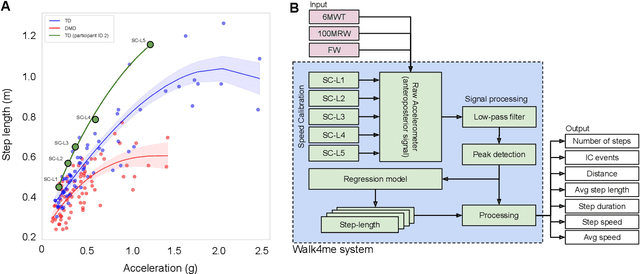

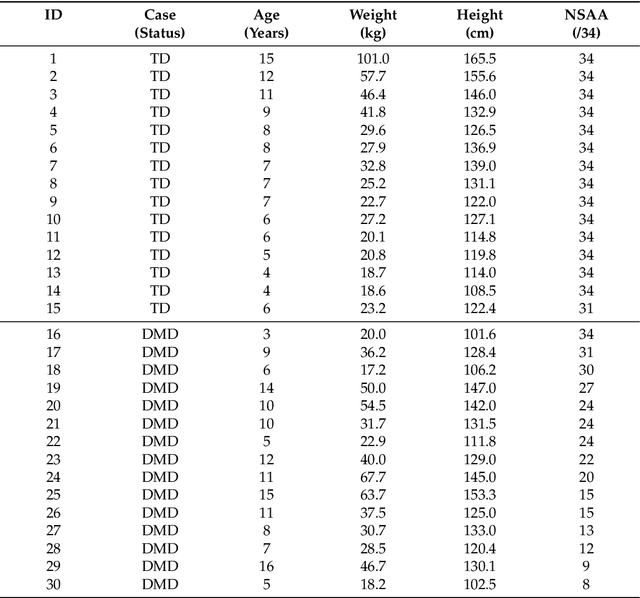

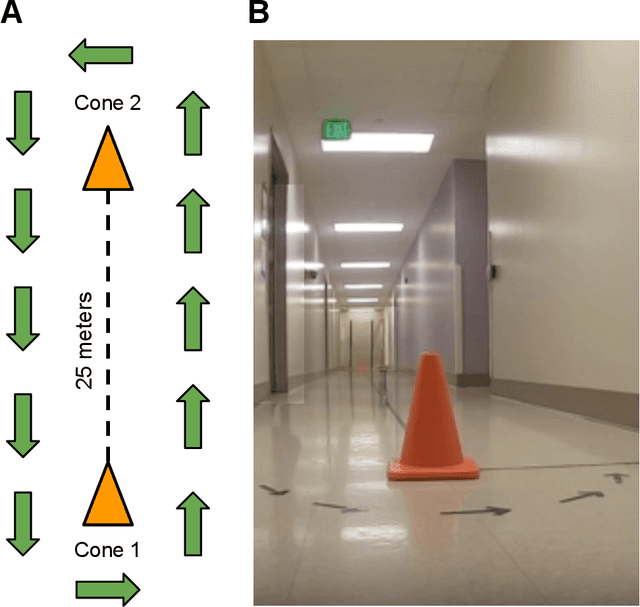

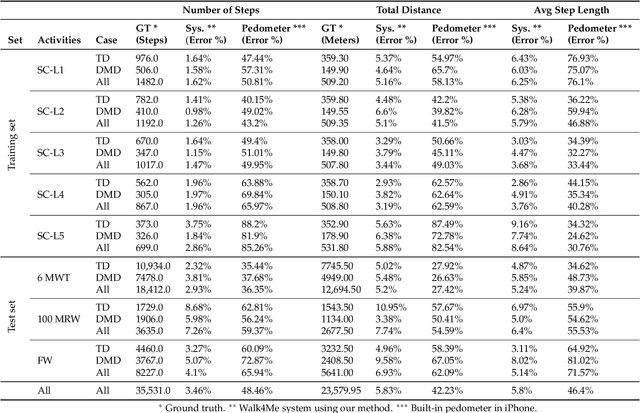

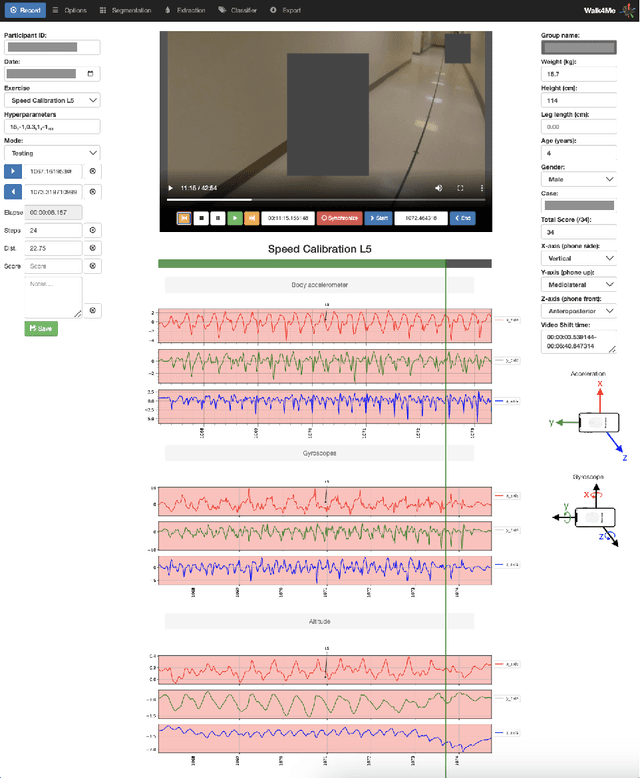

Abstract:Background: Estimation of temporospatial clinical features of gait (CFs), such as step count and length, step duration, step frequency, gait speed, and distance traveled, is an important component of community-based mobility evaluation using wearable accelerometers. However, challenges arising from device complexity and availability, cost, and analytical methodology have limited widespread application of such tools. Research Question: Can accelerometer data from commercially available smartphones be used to extract gait CFs across a broad range of attainable gait velocities in children with Duchenne muscular dystrophy (DMD) and typically developing controls (TDs) using machine learning (ML)-based methods? Methods: Fifteen children with DMD and 15 TDs underwent supervised clinical testing across a range of gait speeds using 10 or 25m run/walk (10MRW, 25MRW), 100m run/walk (100MRW), 6-minute walk (6MWT) and free-walk (FW) evaluations while wearing a mobile phone-based accelerometer at the waist near the body's center of mass. Gait CFs were extracted from the accelerometer data using a multi-step machine learning-based process and results were compared to ground-truth observation data. Results: Model predictions vs. observed values for step counts, distance traveled, and step length showed a strong correlation (Pearson's r = -0.9929 to 0.9986, p<0.0001). The estimates demonstrated a mean (SD) percentage error of 1.49% (7.04%) for step counts, 1.18% (9.91%) for distance traveled, and 0.37% (7.52%) for step length compared to ground truth observations for the combined 6MWT, 100MRW, and FW tasks. Significance: The study findings indicate that a single accelerometer placed near the body's center of mass can accurately measure CFs across different gait speeds in both TD and DMD peers, suggesting that there is potential for accurately measuring CFs in the community with consumer-level smartphones.
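The "mean (SD) percentage error" reported in this abstract can be read as a summary of per-trial errors between predicted and observed values. The sketch below shows one plausible way to compute it, assuming a signed percentage error and the sample standard deviation; the abstract does not say which variants the authors used, and the step counts in the example are made up for illustration.

```python
import statistics


def percentage_errors(predicted, observed):
    """Signed percentage error of each prediction relative to its observation."""
    if len(predicted) != len(observed):
        raise ValueError("predicted and observed must have the same length")
    return [100.0 * (p - o) / o for p, o in zip(predicted, observed)]


def mean_sd(values):
    """Mean and sample standard deviation (n - 1 in the denominator)."""
    return statistics.mean(values), statistics.stdev(values)


# Hypothetical step counts for three trials (not data from the study).
predicted_steps = [102, 98, 100]
observed_steps = [100, 100, 100]

errors = percentage_errors(predicted_steps, observed_steps)
mean_err, sd_err = mean_sd(errors)
```

A signed error lets over- and under-estimates cancel in the mean, which is why a small mean can sit alongside a noticeably larger SD, as in the figures quoted above.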

Walk4Me: Telehealth Community Mobility Assessment, An Automated System for Early Diagnosis and Disease Progression

May 05, 2023

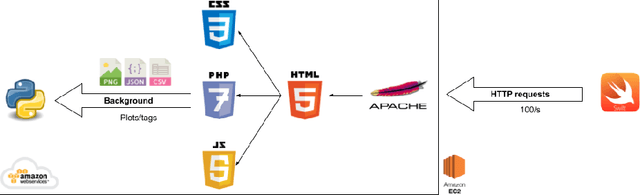

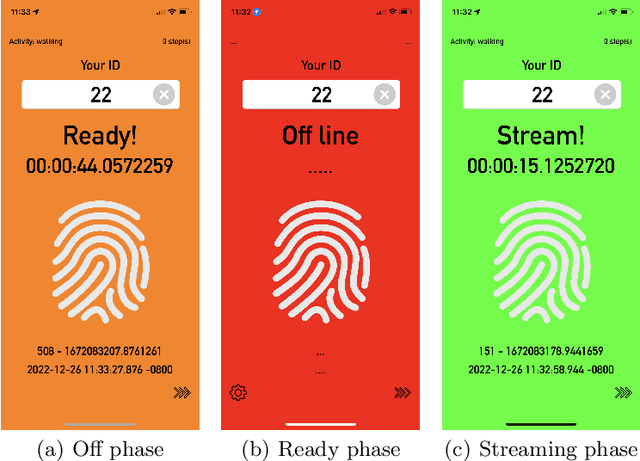

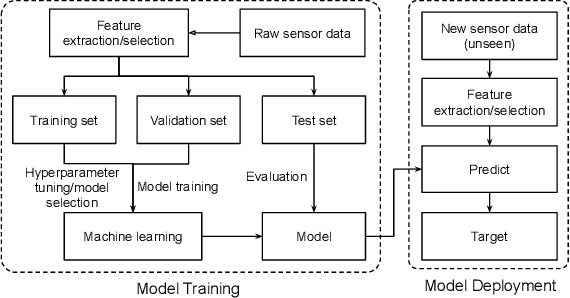

Abstract:We introduce Walk4Me, a telehealth community mobility assessment system designed to facilitate early diagnosis and the identification of disease severity and progression. Our system achieves this by 1) enabling early diagnosis, 2) identifying early indicators of clinical severity, and 3) quantifying and tracking the progression of the disease across its ambulatory phase. To accomplish this, we employ Artificial Intelligence (AI)-based detection of gait characteristics in patients and typically developing peers. Our system collects data from device sensors (e.g., acceleration from a mobile device) remotely and in real time using our novel Walk4Me API. Our web application extracts temporal/spatial gait characteristics and raw data signal characteristics and then employs traditional machine learning and deep learning techniques to identify patterns that can 1) identify patients with gait disturbances associated with disease, 2) describe the degree of mobility limitation, and 3) identify characteristics that change over time with disease progression. We have identified several machine learning techniques that differentiate between patients and typically developing subjects with 100% accuracy across the age range studied, and we have also identified corresponding temporal/spatial gait characteristics associated with each group. Our work demonstrates the potential of utilizing the latest advances in mobile device and machine learning technology to measure clinical outcomes regardless of the point of care, inform early clinical diagnosis and treatment decision-making, and monitor disease progression.

Early Mobility Recognition for Intensive Care Unit Patients Using Accelerometers

Jun 28, 2021

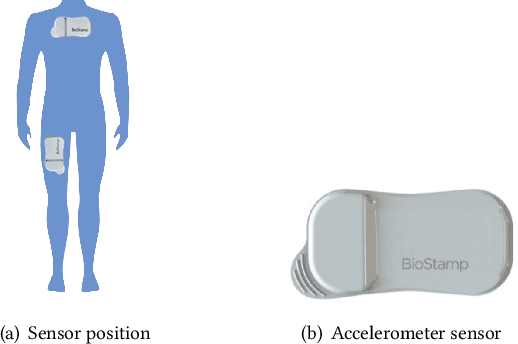

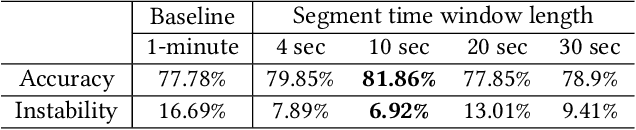

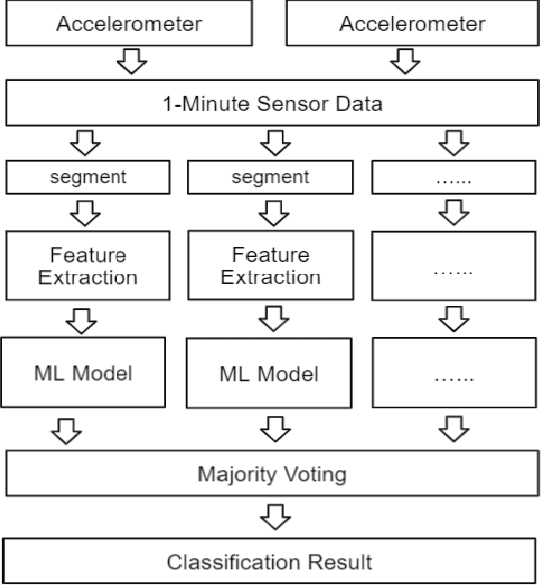

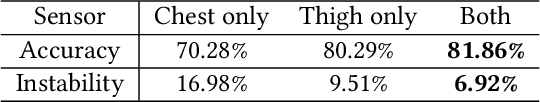

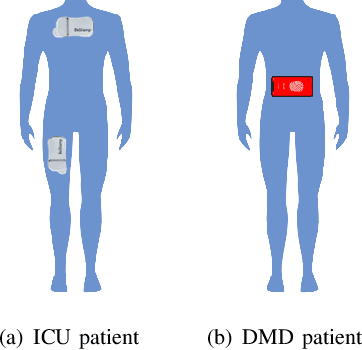

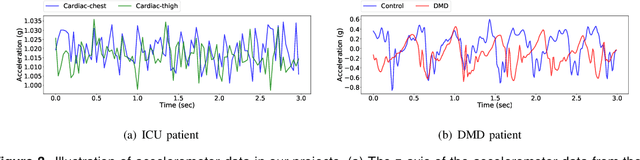

Abstract:With the development of Internet of Things (IoT) and Artificial Intelligence (AI) technologies, human activity recognition has enabled various applications, such as smart homes and assisted living. In this paper, we target a new healthcare application of human activity recognition: early mobility recognition for Intensive Care Unit (ICU) patients. Early mobility is essential for ICU patients who suffer from prolonged immobilization. Our system includes accelerometer-based data collection from ICU patients and an AI model to recognize patients' early mobility. To improve the model accuracy and stability, we identify features that are insensitive to sensor orientations and propose a segment voting process that leverages a majority voting strategy to recognize each segment's activity. Our results show that our system improves model accuracy from 77.78% to 81.86% and reduces the model instability (standard deviation) from 16.69% to 6.92%, compared to the same AI model without our feature engineering and segment voting process.
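The segment voting idea in this abstract can be sketched as follows: a classifier labels short windows, and each segment takes the label that most of its windows received. This is a minimal illustration under assumptions, not the paper's procedure. The function names, the fixed number of windows per segment, the activity labels, and the tie-breaking rule (first label encountered wins) are all hypothetical.

```python
from collections import Counter


def vote_segment(window_labels):
    """Return the most frequent label among one segment's window predictions.

    Ties are resolved in favor of the label that appears first, which is how
    Counter.most_common orders equal counts.
    """
    if not window_labels:
        raise ValueError("a segment needs at least one window label")
    return Counter(window_labels).most_common(1)[0][0]


def vote_segments(window_labels, windows_per_segment):
    """Split window-level predictions into fixed-size segments and vote in each."""
    return [
        vote_segment(window_labels[i:i + windows_per_segment])
        for i in range(0, len(window_labels), windows_per_segment)
    ]


# Hypothetical window-level predictions from some classifier.
window_predictions = ["sit", "sit", "lie", "sit", "lie", "lie", "lie", "sit", "lie"]
segment_predictions = vote_segments(window_predictions, windows_per_segment=3)
```

Voting of this kind smooths out isolated misclassified windows, which is consistent with the reduction in standard deviation the abstract reports.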

Gait Characterization in Duchenne Muscular Dystrophy (DMD) Using a Single-Sensor Accelerometer: Classical Machine Learning and Deep Learning Approaches

May 12, 2021

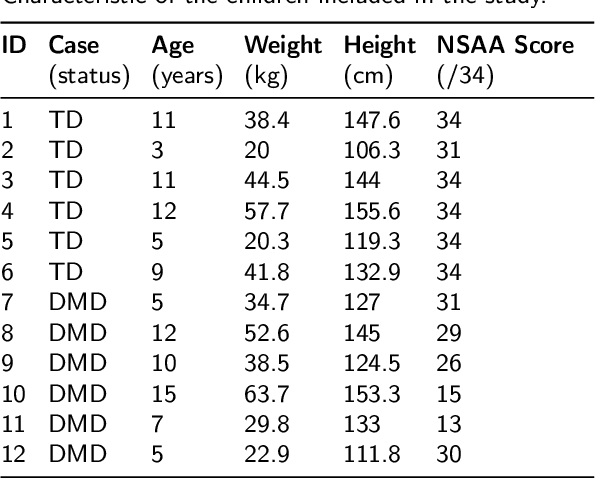

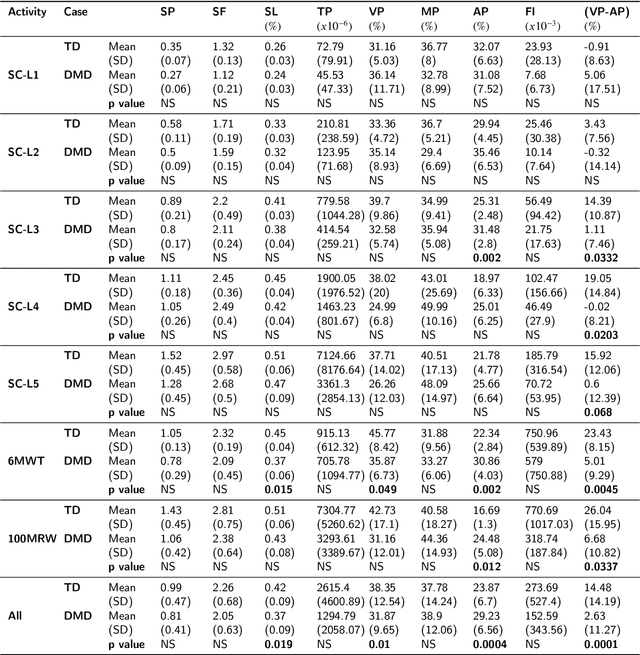

Abstract:Differences in gait patterns of children with Duchenne muscular dystrophy (DMD) and typically developing (TD) peers are visible to the eye, but quantification of those differences outside of the gait laboratory has been elusive. We measured vertical, mediolateral, and anteroposterior acceleration using a waist-worn iPhone accelerometer during ambulation across a typical range of velocities. Six TD and six DMD children from 3-15 years of age underwent seven walking/running tasks, including five 25m walk/run tests at speeds ranging from a slow walk to a run, a 6-minute walk test (6MWT), and a 100-meter run/walk (100MRW). We extracted temporospatial clinical gait features (CFs) and applied multiple Artificial Intelligence (AI) tools to differentiate between DMD and TD control children using extracted features and raw data. Extracted CFs showed reduced step length and a greater mediolateral component of total power (TP), consistent with the shorter strides and Trendelenburg-like gait commonly observed in DMD. AI methods using CFs and raw data varied in effectiveness at differentiating between DMD and TD controls at different speeds, with the accuracy of some methods exceeding 91%. We demonstrate that by using AI tools with accelerometer data from a consumer-level smartphone, we can identify DMD gait disturbance in toddlers to early teens.

An Overview of Human Activity Recognition Using Wearable Sensors: Healthcare and Artificial Intelligence

Mar 29, 2021

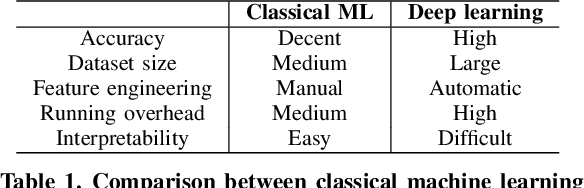

Abstract:With the rapid development of Internet of Things (IoT) and artificial intelligence (AI) technologies, human activity recognition (HAR) has been applied in a variety of domains such as security and surveillance, human-robot interaction, and entertainment. Even though a number of surveys and review papers have been published, there is a lack of HAR overview papers focusing on healthcare applications that use wearable sensors. We fill this gap by presenting this overview paper. In particular, we present our emerging HAR projects for healthcare: identification of human activities for intensive care unit (ICU) patients and Duchenne muscular dystrophy (DMD) patients. Our HAR systems include hardware design to collect sensor data from ICU patients and DMD patients and accurate AI models to recognize patients' activities. This overview paper covers considerations and settings for building a HAR healthcare system, including sensor factors, AI model comparison, and system challenges.

BWCNN: Blink to Word, a Real-Time Convolutional Neural Network Approach

Jun 01, 2020

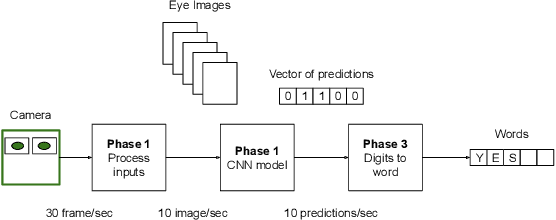

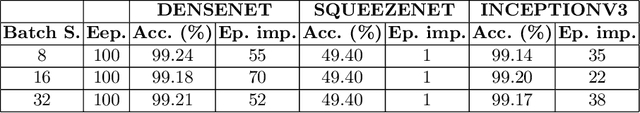

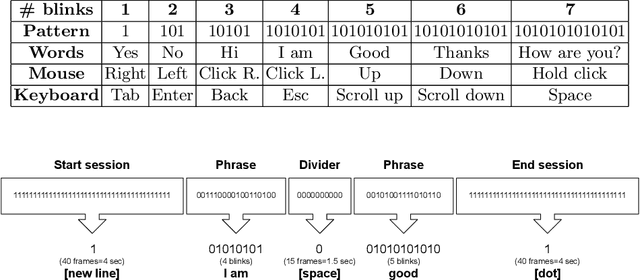

Abstract:Amyotrophic lateral sclerosis (ALS) is a progressive neurodegenerative disease of the brain and the spinal cord, which leads to paralysis of motor functions. Patients retain their ability to blink, which can be used for communication. Here, we present an Artificial Intelligence (AI) system that uses eye-blinks to communicate with the outside world, running on real-time Internet-of-Things (IoT) devices. The system uses a Convolutional Neural Network (CNN) to find the blinking pattern, which is defined as a series of Open and Closed states. Each pattern is mapped to a collection of words that manifest the patient's intent. To find the best trade-off between accuracy and latency, we investigated several Convolutional Network architectures, such as ResNet, SqueezeNet, DenseNet, and InceptionV3, and evaluated their performance. We found that the InceptionV3 architecture, after hyper-parameter fine-tuning on the specific task, led to the best performance with an accuracy of 99.20% and 94ms latency. This work demonstrates how the latest advances in deep learning architectures can be adapted for clinical systems that ameliorate the patient's quality of life regardless of the point-of-care.
