Alan Bundy

Exploring the Meta-level Reasoning of Large Language Models via a Tool-based Multi-hop Tabular Question Answering Task

Jan 12, 2026

Abstract: Recent advancements in Large Language Models (LLMs) are increasingly focused on "reasoning" ability, a concept with many overlapping definitions in the LLM discourse. We take a more structured approach, distinguishing meta-level reasoning (the process of reasoning about the intermediate steps required to solve a task) from object-level reasoning (the low-level execution of those steps). We design a novel question answering task based on the values of geopolitical indicators for various countries over various years. Questions must be broken down into intermediate steps and require retrieval of data and mathematical operations over that data. We analyse the meta-level reasoning ability of LLMs by examining their selection of appropriate tools for answering questions. To take the analysis beyond final-answer accuracy, our task contains 'essential actions' against which we compare the tool calls made by LLMs to infer the strength of their reasoning. We find that LLMs demonstrate good meta-level reasoning on our task, yet are flawed in some aspects of task understanding. We also find that n-shot prompting has little effect on accuracy and that encountering error messages rarely degrades performance, and we provide additional evidence for the poor numeracy of LLMs. Finally, we discuss how far our findings generalise to other task domains, and their limitations.
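The comparison against 'essential actions' can be pictured with a small sketch. Everything in it is a hypothetical illustration rather than the authors' metric or tool schema: the `ToolCall` record, the `retrieve` and `subtract` tool names, the placeholder country and indicator, and the coverage score (the fraction of essential actions the model actually issued) are all assumptions.

```python
from typing import NamedTuple


class ToolCall(NamedTuple):
    """One tool invocation: a tool name and its arguments (hypothetical schema)."""
    tool: str
    args: tuple


def essential_action_coverage(
    essential: list[ToolCall], calls: list[ToolCall]
) -> tuple[float, list[ToolCall]]:
    """Return (fraction of essential actions the model issued, essential actions it missed).

    Matching is exact and order-insensitive; this scoring rule is an assumption.
    """
    made = set(calls)
    missing = [action for action in essential if action not in made]
    if not essential:
        return 1.0, missing
    return 1 - len(missing) / len(essential), missing


# Hypothetical question: "By how much did IndicatorX for CountryA change from 2010 to 2020?"
essential = [
    ToolCall("retrieve", ("CountryA", "IndicatorX", 2010)),
    ToolCall("retrieve", ("CountryA", "IndicatorX", 2020)),
    ToolCall("subtract", ("value_2020", "value_2010")),
]
# The model retrieved both values but did the subtraction itself instead of calling a tool.
calls = [
    ToolCall("retrieve", ("CountryA", "IndicatorX", 2010)),
    ToolCall("retrieve", ("CountryA", "IndicatorX", 2020)),
]

score, missing = essential_action_coverage(essential, calls)
print(round(score, 3), [action.tool for action in missing])  # 0.667 ['subtract']
```

Matching on a set of calls ignores order and repeated calls; a stricter variant could also check that each retrieval precedes the operation that consumes its result.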

Advancing the Scientific Method with Large Language Models: From Hypothesis to Discovery

May 22, 2025

Abstract: With recent Nobel Prizes recognising AI contributions to science, Large Language Models (LLMs) are transforming scientific research by enhancing productivity and reshaping the scientific method. LLMs are now involved in experimental design, data analysis, and workflows, particularly in chemistry and biology. However, challenges such as hallucination and limited reliability persist. In this contribution, we review how LLMs are redefining the scientific method and explore their potential applications across different stages of the scientific cycle, from hypothesis testing to discovery. We conclude that, for LLMs to serve as relevant and effective creative engines and productivity enhancers, their deep integration into all steps of the scientific process should be pursued in collaboration and alignment with human scientific goals, with clear evaluation metrics. The transition to AI-driven science raises ethical questions about creativity, oversight, and responsibility. With careful guidance, LLMs could evolve into creative engines, driving transformative breakthroughs across scientific disciplines responsibly and effectively. However, the scientific community must also decide how much of the driving of science it leaves to LLMs, even when associations with 'reasoning', mostly undeserved at present, are made in exchange for the potential to explore hypothesis and solution regions that human exploration alone might leave unexplored.

* 45 pages

ALIST: Associative Logic for Inference, Storage and Transfer. A Lingua Franca for Inference on the Web

Mar 12, 2023

Abstract: Recent developments in support for constructing knowledge graphs have led to a rapid rise in their creation, both on the Web and within organisations. Together with existing data sources such as relational databases and APIs, this creates a strong demand for techniques to query these diverse sources of knowledge. While formal query languages such as SPARQL exist for querying some knowledge graphs, users must know which knowledge graphs to query and the uniform resource identifiers (URIs) of the resources they need. Although alternative techniques in neural information retrieval embed the content of knowledge graphs in vector spaces, they fail to provide the necessary representational and query expressivity (e.g. they cannot handle non-trivial aggregation functions such as regression). We believe that a lingua franca, i.e. a formalism, that offers such representational flexibility will increase the ability of intelligent automated agents to combine diverse data sources by inference. Our work proposes a flexible representation (alists) to support intelligent federated querying of diverse knowledge sources. Our contributions include (1) a formalism that abstracts the representation of queries from the specific query language of a knowledge graph; (2) a representation to dynamically curate data and functions (operations) to perform non-trivial inference over diverse knowledge sources; and (3) a demonstration of the expressiveness of alists in representing diverse representational formalisms, including SPARQL queries and, more generally, first-order logic expressions.

Investigating the use of Paraphrase Generation for Question Reformulation in the FRANK QA system

Jun 06, 2022

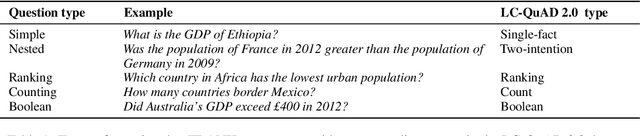

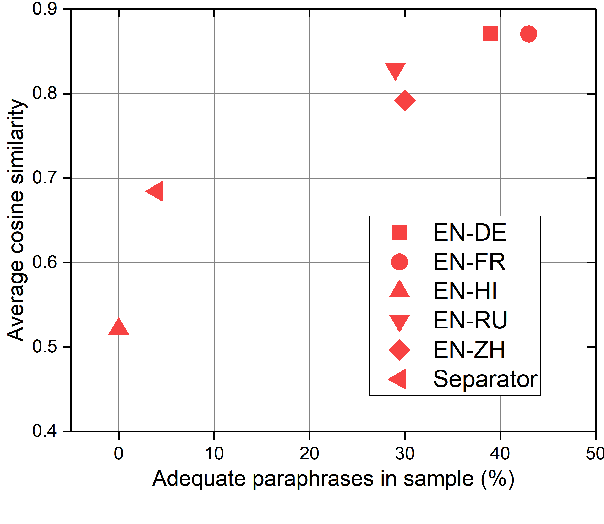

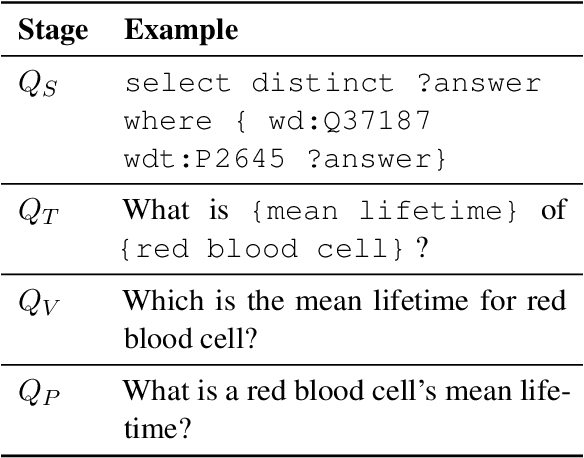

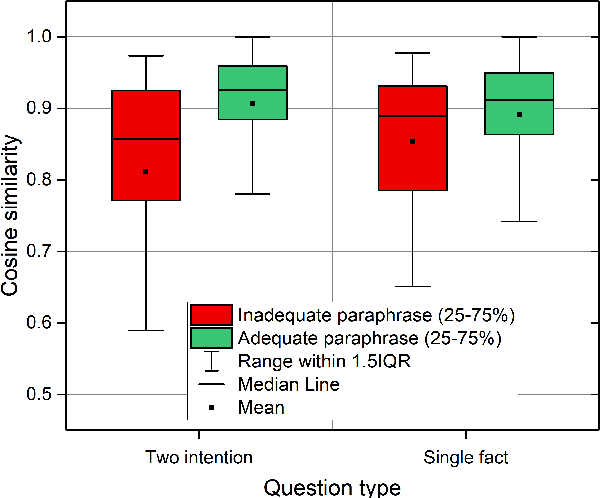

Abstract: We present a study of the ability of paraphrase generation methods to increase the variety of natural language questions that the FRANK Question Answering system can answer. We first evaluate paraphrase generation methods on the LC-QuAD 2.0 dataset using both automatic metrics and human judgement, and discuss their correlation. We also perform error analysis on the dataset using both automatic and manual approaches, and discuss how paraphrase generation and evaluation are affected by data points that contain errors. We then simulate an integration of the best-performing paraphrase generation method (English-French backtranslation) into FRANK in order to test our original hypothesis, using a small challenge dataset. Our two main conclusions are that LC-QuAD 2.0 requires cleaning, as the errors it contains can affect evaluation; and that, due to limitations of FRANK's parser, paraphrase generation is not a method we can rely on to increase the variety of natural language questions that FRANK can answer.

Signature Entrenchment and Conceptual Changes in Automated Theory Repair

Jan 20, 2022

Abstract: Human beliefs change, but so do the concepts that underpin them. The recent Abduction, Belief Revision and Conceptual Change (ABC) repair system combines several methods from automated theory repair to expand, contract, or reform logical structures representing conceptual knowledge in artificial agents. In this paper we focus on conceptual change: repair not only of the membership of logical concepts, such as which animals can fly, but also of the concepts themselves, for example dividing birds into flightless and flying birds by changing the signature of the logical theory used to represent them. We offer a method for automatically evaluating entrenchment in the signature of a Datalog theory, in order to constrain automated theory repair to succinct and intuitive outcomes. Formally, signature entrenchment measures the inferential contribution of every logical language element used to express conceptual knowledge, i.e., predicates and their arguments, and ranks possible repairs so as to retain valuable logical concepts and reject redundant or implausible alternatives. This quantitative measure of signature entrenchment offers a guide to the plausibility of conceptual changes, which we aim to contrast with human judgements of concept entrenchment in future work.

Automating change of representation for proofs in discrete mathematics

May 10, 2015

Abstract: Representation determines how we can reason about a specific problem. Sometimes one representation makes a proof easier to find than others do. Most current automated reasoning tools focus on reasoning within a single representation. There is, therefore, a need for better tools to mechanise and automate formal, logically sound changes of representation. In this paper we look at examples of representational transformations in discrete mathematics, and show how we have used Isabelle's Transfer tool to automate the use of these transformations in proofs. We give a brief overview of a general theory of transformations that we consider appropriate for thinking about the matter, and explain how it relates to the Transfer package. We show our progress towards developing a general tactic that incorporates automatic search for representations within the proving process.

Incidence Calculus: A Mechanism for Probabilistic Reasoning

Mar 27, 2013

Abstract: Mechanisms for automating reasoning under uncertainty are required for expert systems. Sometimes these mechanisms need to obey the properties of probabilistic reasoning. A purely numeric mechanism, like those proposed so far, cannot provide a probabilistic logic with truth-functional connectives. We propose an alternative mechanism, Incidence Calculus, which represents uncertainty using sets of points, which might represent situations, models or possible worlds. Incidence Calculus does provide a probabilistic logic with truth-functional connectives.
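A minimal Python sketch of the idea, assuming a finite set of equally weighted points and that the incidence of every formula (the set of points where it holds) is fully known; the point names, weights and helper functions are illustrative only, and the bounds the calculus derives when incidences are only partly known are omitted. The connectives act on sets, so the incidence of a compound formula is a function of the incidences of its parts, whereas its probability is not a function of the parts' probabilities alone.

```python
from fractions import Fraction

# Illustrative example: four equally weighted points (e.g. possible worlds).
WEIGHTS = {"w1": Fraction(1, 4), "w2": Fraction(1, 4), "w3": Fraction(1, 4), "w4": Fraction(1, 4)}
WORLDS = frozenset(WEIGHTS)


def prob(incidence: frozenset) -> Fraction:
    """Probability of a formula: total weight of the points in its incidence."""
    return sum((WEIGHTS[w] for w in incidence), Fraction(0))


# Connectives are truth-functional at the level of incidences (sets of points).
def i_not(a: frozenset) -> frozenset:
    return WORLDS - a


def i_and(a: frozenset, b: frozenset) -> frozenset:
    return a & b


def i_or(a: frozenset, b: frozenset) -> frozenset:
    return a | b


# Two pairs of formulas whose members all have probability 1/2 ...
p, q = frozenset({"w1", "w2"}), frozenset({"w2", "w3"})
r, s = frozenset({"w1", "w2"}), frozenset({"w1", "w2"})
# ... but whose conjunctions have different probabilities.
print(prob(i_and(p, q)), prob(i_and(r, s)))  # 1/4 1/2
```

Exact `Fraction` arithmetic keeps the probabilities free of floating-point rounding, which makes equalities between computed probabilities easy to check.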

On Some Equivalence Relations between Incidence Calculus and Dempster-Shafer Theory of Evidence

Mar 27, 2013

Abstract: Incidence Calculus and Dempster-Shafer Theory of Evidence are both theories that describe agents' degrees of belief in propositions, and are therefore suitable for representing uncertainty in reasoning systems. This paper presents a straightforward proof of equivalence between some special cases of these theories.
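To give a feel for why the two theories can be related, the sketch below uses Dempster's standard multivalued-mapping construction: weighted points (as in Incidence Calculus) are each mapped to a set of hypotheses, and the mass, belief and plausibility functions of Dempster-Shafer theory are read off as weights of sets of points. The frame, weights and mapping are assumed example values, and this is a general illustration, not the special cases or the proof given in the paper.

```python
from fractions import Fraction

# Assumed example: a frame of three hypotheses and four weighted points, each
# mapped (by GAMMA) to the set of hypotheses it supports.
THETA = frozenset({"a", "b", "c"})
WEIGHTS = {"w1": Fraction(1, 2), "w2": Fraction(1, 4), "w3": Fraction(1, 8), "w4": Fraction(1, 8)}
GAMMA = {
    "w1": frozenset({"a"}),
    "w2": frozenset({"a", "b"}),
    "w3": frozenset({"c"}),
    "w4": THETA,  # this point carries no specific information
}


def mass(focal: frozenset) -> Fraction:
    """m(A): total weight of the points whose image is exactly A."""
    return sum((WEIGHTS[w] for w, img in GAMMA.items() if img == focal), Fraction(0))


def belief(hyp: frozenset) -> Fraction:
    """Bel(B): weight of the points whose image lies inside B (B is implied)."""
    return sum((WEIGHTS[w] for w, img in GAMMA.items() if img <= hyp), Fraction(0))


def plausibility(hyp: frozenset) -> Fraction:
    """Pl(B): weight of the points whose image meets B (B is not ruled out)."""
    return sum((WEIGHTS[w] for w, img in GAMMA.items() if img & hyp), Fraction(0))


ab = frozenset({"a", "b"})
print(belief(ab), plausibility(ab))  # 3/4 7/8
```

Here `belief` plays the role of a lower bound (the weight of the set of points that certainly support B) and `plausibility` the upper bound, which is the kind of correspondence between sets of points and belief functions that the equivalence concerns.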
