Investigating the use of Paraphrase Generation for Question Reformulation in the FRANK QA system

Paper and Code

Jun 06, 2022

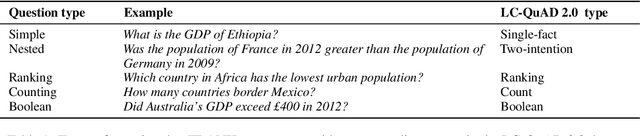

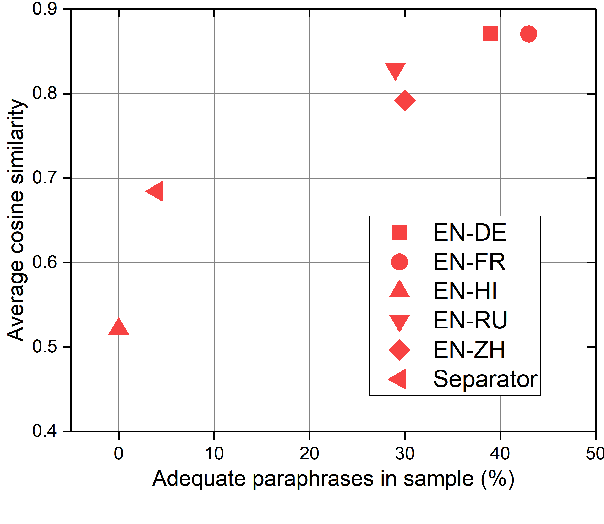

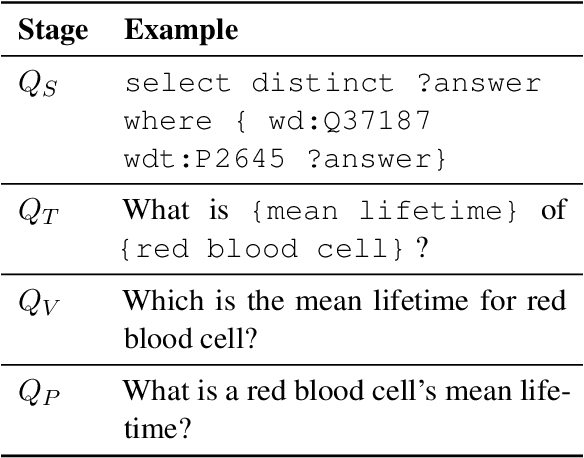

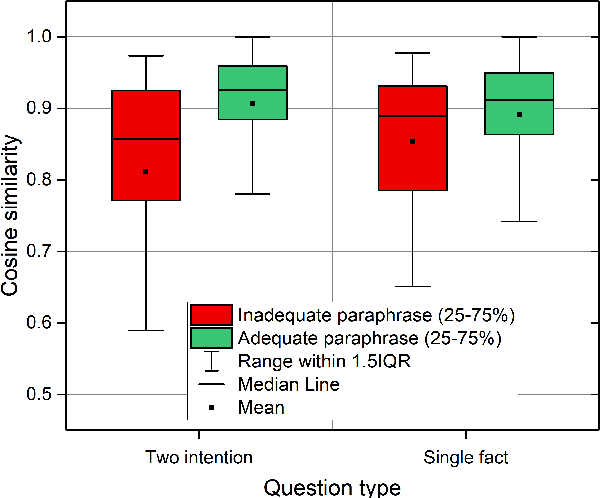

We present a study into the ability of paraphrase generation methods to increase the variety of natural language questions that the FRANK Question Answering system can answer. We first evaluate paraphrase generation methods on the LC-QuAD 2.0 dataset using both automatic metrics and human judgement, and discuss their correlation. Error analysis on the dataset is also performed using both automatic and manual approaches, and we discuss how paraphrase generation and evaluation is affected by data points which contain error. We then simulate an implementation of the best performing paraphrase generation method (an English-French backtranslation) into FRANK in order to test our original hypothesis, using a small challenge dataset. Our two main conclusions are that cleaning of LC-QuAD 2.0 is required as the errors present can affect evaluation; and that, due to limitations of FRANK's parser, paraphrase generation is not a method which we can rely on to improve the variety of natural language questions that FRANK can answer.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge