Aisha Syed

Spatiotemporal Semantic V2X Framework for Cooperative Collision Prediction

Jan 23, 2026

Abstract: Intelligent Transportation Systems (ITS) demand real-time collision prediction to ensure road safety and reduce accident severity. Conventional approaches rely on transmitting raw video or high-dimensional sensory data from roadside units (RSUs) to vehicles, which is impractical under the bandwidth and latency constraints of vehicular communication. In this work, we propose a semantic V2X framework in which RSU-mounted cameras generate spatiotemporal semantic embeddings of future frames using the Video Joint Embedding Predictive Architecture (V-JEPA). To evaluate the system, we construct a digital twin of an urban traffic environment, enabling the generation of diverse traffic scenarios with both safe and collision events. The future-frame embeddings extracted from V-JEPA capture task-relevant traffic dynamics and are transmitted via V2X links to vehicles, where a lightweight attentive probe and classifier decode them to predict imminent collisions. By transmitting only semantic embeddings instead of raw frames, the proposed system significantly reduces communication overhead while maintaining predictive accuracy. Experimental results demonstrate that the framework, with an appropriate processing method, achieves a 10% F1-score improvement for collision prediction while reducing transmission requirements by four orders of magnitude compared to raw video. This validates the potential of semantic V2X communication to enable cooperative, real-time collision prediction in ITS.
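
The attentive probe mentioned above can be pictured as cross-attention pooling followed by a classifier. The sketch below is a simplified, hypothetical illustration, not the authors' implementation: it assumes a single query vector, uses the received embedding tokens directly as keys and values (the learned projections and multiple heads of a full attention block are omitted), and ends in a logistic collision classifier. The names `attentive_pool` and `collision_probability` and all numbers are illustrative.

```python
import math
from typing import List, Sequence

Vector = List[float]


def dot(a: Sequence[float], b: Sequence[float]) -> float:
    """Inner product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def attentive_pool(query: Sequence[float], tokens: Sequence[Sequence[float]]) -> Vector:
    """Pool embedding tokens into one vector with scaled dot-product attention.

    Simplification (assumption): tokens act as both keys and values,
    with no learned projections and a single attention head.
    """
    if not tokens:
        raise ValueError("need at least one token")
    d = len(query)
    scores = [dot(query, t) / math.sqrt(d) for t in tokens]
    m = max(scores)  # subtract the max before exp() for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]  # softmax attention weights, sum to 1
    return [sum(w * t[i] for w, t in zip(weights, tokens)) for i in range(d)]


def collision_probability(pooled: Sequence[float], weight: Sequence[float], bias: float) -> float:
    """Hypothetical linear classifier with a numerically stable sigmoid: P(collision)."""
    z = dot(weight, pooled) + bias
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


if __name__ == "__main__":
    # Toy received embeddings: three spatiotemporal tokens of dimension 4.
    received = [[0.2, -0.1, 0.5, 0.0], [0.4, 0.0, 0.1, 0.3], [-0.3, 0.2, 0.0, 0.1]]
    query = [0.1, 0.1, 0.1, 0.1]        # would be learned in practice
    w, b = [1.0, -0.5, 0.8, 0.2], 0.0   # would be learned in practice
    pooled = attentive_pool(query, received)
    print(f"P(collision) = {collision_probability(pooled, w, b):.3f}")
```

Because only the pooled vector reaches the classifier, the vehicle-side cost stays small regardless of how many tokens are received, which is in the spirit of the "lightweight" decoder the abstract describes.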

Sustainable Task Offloading in Secure UAV-assisted Smart Farm Networks: A Multi-Agent DRL with Action Mask Approach

Jul 29, 2024

Abstract: The integration of unmanned aerial vehicles (UAVs) with mobile edge computing (MEC) and Internet of Things (IoT) technology in smart farms is pivotal for efficient resource management and for sustainably enhancing agricultural productivity. This paper addresses the critical need to optimize task offloading in secure UAV-assisted smart farm networks, aiming to reduce total delay and energy consumption while maintaining robust security in data communications. We propose a multi-agent deep reinforcement learning (DRL)-based approach using a double deep Q-network (DDQN) with an action mask (AM), designed to manage task offloading dynamically and efficiently. The simulation results demonstrate the superior performance of our method in managing task offloading, with significant improvements in operational efficiency through reduced delay and energy consumption. This aligns with the goal of developing sustainable and energy-efficient solutions for next-generation network infrastructures, making our approach an advanced solution for achieving both performance and sustainability in smart farming applications.
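
As a rough illustration of how an action mask interacts with double Q-learning, the sketch below (a simplification under stated assumptions, not the paper's code) shows one agent's masked action selection and the double DQN target: the online network picks the best *valid* next action and the target network evaluates it. Plain lists stand in for network outputs, and the meaning of each action index (compute locally, offload to a UAV, offload to an MEC server) is hypothetical.

```python
from typing import Sequence


def masked_argmax(q_values: Sequence[float], mask: Sequence[bool]) -> int:
    """Return the index of the best action among those the mask marks as valid."""
    valid = [a for a, ok in enumerate(mask) if ok]
    if not valid:
        raise ValueError("the action mask allows no action")
    return max(valid, key=lambda a: q_values[a])


def double_dqn_target(
    reward: float,
    gamma: float,
    next_q_online: Sequence[float],
    next_q_target: Sequence[float],
    next_mask: Sequence[bool],
    done: bool,
) -> float:
    """Target y = r + gamma * Q_target(s', argmax over valid a of Q_online(s', a))."""
    if done:
        return reward  # no bootstrapping from a terminal state
    a_star = masked_argmax(next_q_online, next_mask)  # online net selects
    return reward + gamma * next_q_target[a_star]     # target net evaluates


if __name__ == "__main__":
    # Hypothetical actions: 0 = compute locally, 1 = offload to UAV, 2 = offload to MEC.
    # Action 1 is masked out, e.g. because that UAV's battery is too low.
    online = [5.0, 9.0, 1.0]
    target = [0.5, 10.0, 3.0]
    mask = [True, False, True]
    print(masked_argmax(online, mask))                                    # 0
    print(double_dqn_target(1.0, 0.9, online, target, mask, done=False))  # approx. 1.45
```

Without the mask, the online network would select action 1 and the target would bootstrap from a value the agent can never realize; masking keeps both action selection and the learning target restricted to feasible offloading decisions.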

To Risk or Not to Risk: Learning with Risk Quantification for IoT Task Offloading in UAVs

Feb 14, 2023

Abstract: A deep reinforcement learning technique is presented for task offloading decision-making in a multi-access edge computing (MEC)-assisted unmanned aerial vehicle (UAV) network in a smart farm Internet of Things (IoT) environment. The task offloading technique uses financial concepts such as cost functions and conditional value at risk (CVaR) to quantify the damage that each risky action may cause. By quantifying potential risks, the approach trains the reinforcement learning agent to avoid risky behaviors that lead to irreversible consequences for the farm, such as an undetected fire, a pest infestation, or an unusable UAV. The proposed CVaR-based technique was compared to other deep reinforcement learning techniques and two fixed rule-based techniques. The simulation results show that the CVaR-based risk quantification method eliminated the most dangerous risk, which was exceeding the deadline for a fire detection task. As a result, it reduced the total number of deadline violations with a negligible increase in energy consumption.
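
To make the risk measure concrete: at confidence level α, the conditional value at risk (CVaR) of a cost distribution is the expected cost over the worst (1 − α) fraction of outcomes. The sketch below uses a simple empirical estimator over sampled costs, the mean of the ⌈(1 − α)n⌉ largest samples; it illustrates the concept under that assumption and does not reproduce the paper's cost functions. The sample costs are made up.

```python
import math
from typing import Sequence


def empirical_cvar(costs: Sequence[float], alpha: float = 0.9) -> float:
    """Mean of the worst ceil((1 - alpha) * n) sampled costs (higher cost = worse)."""
    if not costs:
        raise ValueError("need at least one cost sample")
    if not 0.0 < alpha < 1.0:
        raise ValueError("alpha must lie in (0, 1)")
    worst_first = sorted(costs, reverse=True)
    # round() guards against float error such as (1 - 0.7) * 10 = 3.0000000000000004
    k = max(1, math.ceil(round((1.0 - alpha) * len(worst_first), 9)))
    return sum(worst_first[:k]) / k


if __name__ == "__main__":
    # Made-up per-episode costs for two offloading actions.
    safe = [3.0] * 10           # always moderately costly
    risky = [0.0] * 9 + [20.0]  # usually free, occasionally catastrophic (e.g. a missed fire deadline)
    for name, c in (("safe", safe), ("risky", risky)):
        print(name, "mean:", sum(c) / len(c), "CVaR_0.9:", empirical_cvar(c, 0.9))
```

Here a mean-based agent would prefer the risky action (mean 2.0 versus 3.0), whereas a CVaR-based agent prefers the safe one (20.0 versus 3.0), which is the kind of behavior the abstract attributes to risk quantification.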

IoT-Aerial Base Station Task Offloading with Risk-Sensitive Reinforcement Learning for Smart Agriculture

Sep 15, 2022

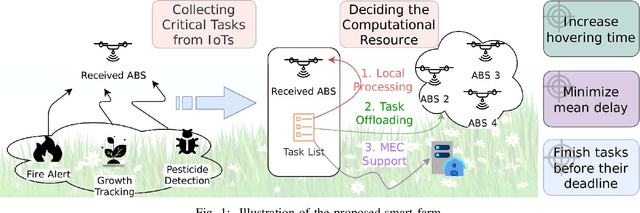

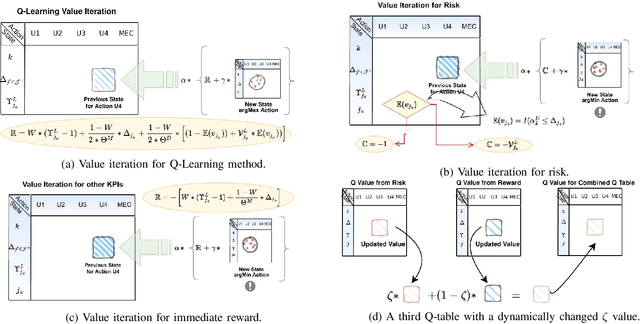

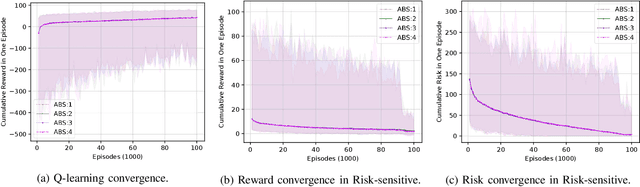

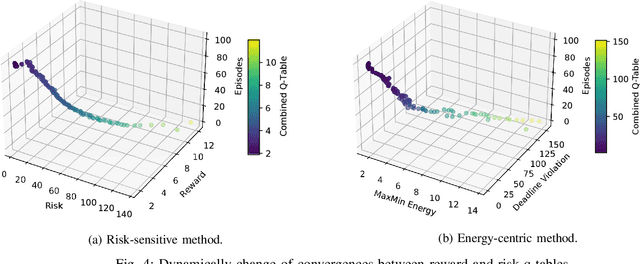

Abstract: Aerial base stations (ABSs) allow smart farms to offload the processing of complex tasks from internet of things (IoT) devices to ABSs. IoT devices have limited energy and computing resources, so a system that relies on the support of ABSs requires an advanced task scheduling solution. This paper introduces a novel multi-actor-based risk-sensitive reinforcement learning approach for ABS task scheduling in smart agriculture. The problem is defined as task offloading with the strict condition that IoT tasks be completed before their deadlines. The algorithm must also consider the limited energy capacity of the ABSs. The results show that our proposed approach outperforms several heuristics and the classic Q-Learning approach. Furthermore, we provide a mixed integer linear programming solution to determine a lower bound on the performance and to clarify the gap between our risk-sensitive solution and the optimal solution. This comparison, together with our extensive simulation results, demonstrates that our method is a promising approach for providing guaranteed task processing services for the IoT tasks in a smart farm while increasing the hovering time of the ABSs.
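
The abstract does not spell out the risk-sensitive update rule. One well-known way to make tabular Q-learning risk-averse, shown here purely as an illustration and not as the authors' multi-actor method, is Mihatsch and Neuneier's transformed temporal-difference error: negative surprises (such as a missed deadline) are scaled by (1 + κ) and positive ones by (1 − κ), with κ ∈ (−1, 1) and κ > 0 giving risk aversion. States, actions, rewards, and hyperparameter values are hypothetical.

```python
from typing import Dict, Hashable, Sequence, Tuple

QTable = Dict[Tuple[Hashable, Hashable], float]


def risk_transform(td_error: float, kappa: float) -> float:
    """Scale TD errors asymmetrically: kappa > 0 weights bad surprises more (risk-averse)."""
    if not -1.0 < kappa < 1.0:
        raise ValueError("kappa must lie in (-1, 1)")
    return (1.0 - kappa) * td_error if td_error > 0 else (1.0 + kappa) * td_error


def risk_sensitive_update(
    q: QTable,
    state: Hashable,
    action: Hashable,
    reward: float,
    next_state: Hashable,
    next_actions: Sequence[Hashable],
    lr: float = 0.1,
    gamma: float = 0.95,
    kappa: float = 0.5,
    done: bool = False,
) -> float:
    """One tabular Q-learning step with a risk-transformed TD error; returns the new Q(s, a)."""
    best_next = 0.0 if done else max(
        (q.get((next_state, a), 0.0) for a in next_actions), default=0.0
    )
    old = q.get((state, action), 0.0)
    td_error = reward + gamma * best_next - old
    q[(state, action)] = old + lr * risk_transform(td_error, kappa)
    return q[(state, action)]


if __name__ == "__main__":
    q: QTable = {}
    # Hypothetical: a late task on an ABS earns reward -10, an on-time task +10.
    print(risk_sensitive_update(q, "s0", "late", -10.0, "s1", [], done=True))     # -1.5
    print(risk_sensitive_update(q, "s0", "on_time", 10.0, "s1", [], done=True))  # 0.5
```

With κ = 0 the rule reduces to ordinary Q-learning; the asymmetric weighting is one way a scheduler can be steered away from actions whose occasional outcome is a deadline violation.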

Deep Reinforcement Learning for Task Offloading in UAV-Aided Smart Farm Networks

Sep 15, 2022

Abstract: The fifth and sixth generations of wireless communication networks are enabling tools such as internet of things devices, unmanned aerial vehicles (UAVs), and artificial intelligence to improve the agricultural landscape, using a network of devices to monitor farmlands automatically. Surveying a large area requires performing many image classification tasks within a specific period of time to prevent damage to the farm in case of an incident such as a fire or flood. UAVs have limited energy and computing power and may not be able to perform all of the computationally intensive image classification tasks locally within an appropriate amount of time. Hence, we assume that the UAVs can partially offload their workload to nearby multi-access edge computing devices. The UAVs need a decision-making algorithm that decides where the tasks will be performed while also considering the time constraints and the energy levels of the other UAVs in the network. In this paper, we introduce a Deep Q-Learning (DQL) approach to solve this multi-objective problem. The proposed method is compared with Q-Learning and three heuristic baselines, and the simulation results show that our proposed DQL-based method achieves comparable results in terms of the UAVs' remaining battery levels and the percentage of deadline violations. In addition, our method converges 13 times faster than Q-Learning.
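
The deadline constraint on partial offloading can be made concrete with a common delay model, assumed here rather than taken from the paper: a fraction of a task is uploaded and run on an edge server while the rest runs on the UAV in parallel, so the task finishes when the slower part does. All parameter names and values below are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Task:
    cycles: float      # CPU cycles needed by the image classification task
    data_bits: float   # input size that must be uploaded if fully offloaded
    deadline_s: float  # time budget in seconds


def completion_time(task: Task, fraction: float, f_uav_hz: float,
                    f_edge_hz: float, uplink_bps: float) -> float:
    """Finish time when `fraction` of the task is offloaded and the rest runs locally.

    Assumes the uploaded share of the input scales with `fraction`, the result
    download time is negligible, and both parts execute in parallel.
    """
    if not 0.0 <= fraction <= 1.0:
        raise ValueError("fraction must lie in [0, 1]")
    local = (1.0 - fraction) * task.cycles / f_uav_hz
    remote = fraction * (task.data_bits / uplink_bps + task.cycles / f_edge_hz)
    return max(local, remote)


def meets_deadline(task: Task, fraction: float, **rates: float) -> bool:
    """True if the partially offloaded task finishes within its deadline."""
    return completion_time(task, fraction, **rates) <= task.deadline_s


if __name__ == "__main__":
    task = Task(cycles=1e9, data_bits=8e6, deadline_s=0.5)
    rates = dict(f_uav_hz=1e9, f_edge_hz=1e10, uplink_bps=1e8)
    for frac in (0.0, 0.5, 1.0):
        t = completion_time(task, frac, **rates)
        print(f"offload {frac:.0%}: {t:.3f} s, meets deadline: {meets_deadline(task, frac, **rates)}")
```

In this toy setting, running everything on the UAV misses the deadline while offloading half or all of the task meets it; a learned policy such as the DQL agent in the abstract must additionally weigh the energy each choice costs.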
