Aikaterini Adam

Has Anything Changed? 3D Change Detection by 2D Segmentation Masks

Dec 02, 2023

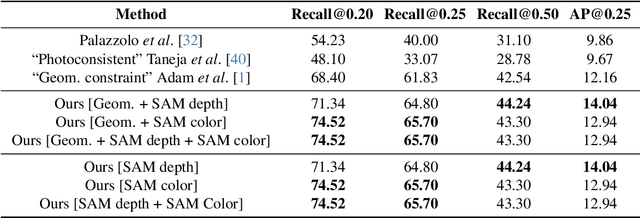

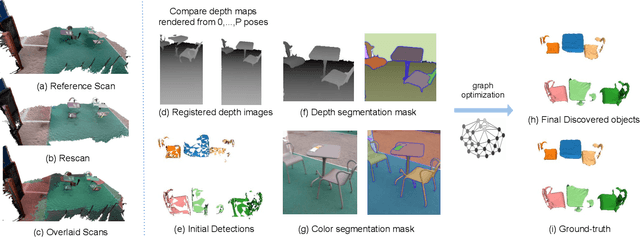

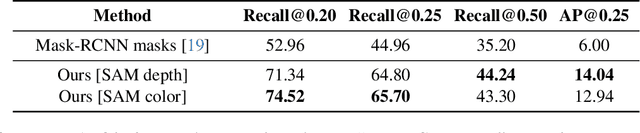

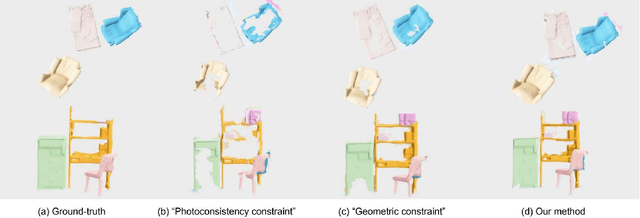

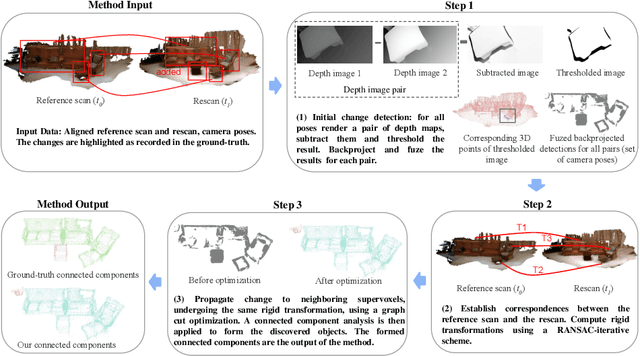

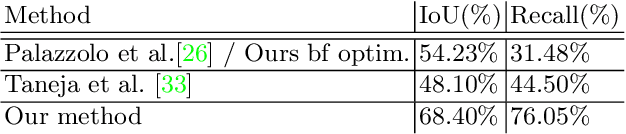

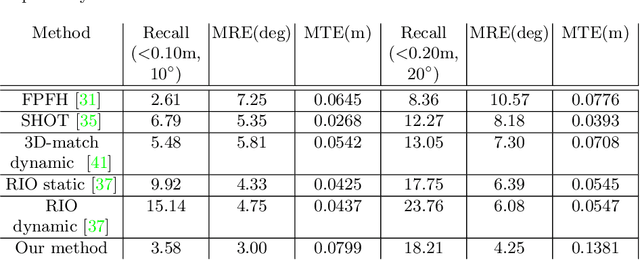

Abstract: As capturing devices become common, 3D scans of interior spaces are acquired on a daily basis. By comparing scans of a scene over time, information about the objects in the scene and their changes can be inferred. This information is important for robots and for AR and VR devices that need to operate in an immersive virtual experience. We thus propose an unsupervised object discovery method that identifies added, moved, or removed objects without any prior knowledge of what objects exist in the scene. We model this problem as a combination of a 3D change detection task and a 2D segmentation task. Our algorithm leverages generic 2D segmentation masks to refine an initial but incomplete set of 3D change detections. The initial changes, acquired through render-and-compare, likely correspond to movable objects. The incomplete detections are refined through graph optimization, which distills the information of the 2D segmentation masks into 3D space. Experiments on the 3RScan dataset show that our method outperforms competitive baselines, achieving state-of-the-art results.
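
As a rough illustration of how segmentation masks can complete an incomplete set of change detections, the sketch below grows the detected set to cover any segment that is already sufficiently covered by initial detections. This is a deliberately simplified stand-in, not the graph optimization the abstract describes, and it works on 2D pixel sets rather than in 3D. The function name `refine_with_masks` and the `min_overlap` threshold are hypothetical choices made for the example.

```python
from typing import Dict, Set, Tuple

Pixel = Tuple[int, int]  # (row, column)


def refine_with_masks(
    initial: Set[Pixel],
    segments: Dict[int, Set[Pixel]],
    min_overlap: float = 0.3,  # hypothetical threshold, not from the paper
) -> Set[Pixel]:
    """Extend initial change detections to whole segments they partly cover."""
    refined = set(initial)
    for pixels in segments.values():
        if not pixels:
            continue
        # Fraction of this segment already flagged as changed.
        overlap = len(pixels & initial) / len(pixels)
        if overlap >= min_overlap:
            # Treat the whole segment as one changed object.
            refined |= pixels
    return refined


# Toy example: segment 1 is half covered by detections, segment 2 is untouched.
initial = {(0, 0), (0, 1)}
segments = {
    1: {(0, 0), (0, 1), (0, 2), (1, 0)},
    2: {(5, 5), (5, 6)},
}
refined = refine_with_masks(initial, segments)
```

In the toy example, segment 1 is absorbed in full while segment 2 is left out, which mirrors the idea of masks filling in the parts of a moved object that the initial detection missed.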

Objects Can Move: 3D Change Detection by Geometric Transformation Consistency

Aug 21, 2022

Abstract: AR/VR applications and robots need to know when the scene has changed, for example when objects are moved, added, or removed. We propose a 3D object discovery method that is based only on scene changes. Our method does not need to encode any assumptions about what an object is, but rather discovers objects by exploiting their coherent motion. Changes are initially detected as differences in the depth maps and are segmented as objects if they undergo rigid motions. A graph cut optimization then propagates the change labels to geometrically consistent regions. Experiments show that our method achieves state-of-the-art performance on the 3RScan dataset against competitive baselines. The source code of our method can be found at https://github.com/katadam/ObjectsCanMove.
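
The initial detection step, differences in depth maps, can be sketched as a per-pixel comparison. This is a minimal illustration and is not taken from the linked repository: the function name `depth_change_mask`, the 0.05 threshold, the assumption that depths are in metres, and the convention that non-positive depth marks an invalid pixel are all assumptions made for the example.

```python
from typing import List

Depth = List[List[float]]


def depth_change_mask(
    rendered: Depth,
    observed: Depth,
    threshold: float = 0.05,  # assumed tolerance in metres, not from the paper
) -> List[List[bool]]:
    """Flag pixels whose depth differs by more than `threshold`.

    Pixels with non-positive depth in either map are treated as invalid
    (assumed convention) and are never flagged.
    """
    mask = []
    for row_r, row_o in zip(rendered, observed):
        mask.append([
            r > 0 and o > 0 and abs(r - o) > threshold
            for r, o in zip(row_r, row_o)
        ])
    return mask


# Toy example: a flat wall at 2 m, with something 0.5 m closer in the new scan.
rendered = [[2.0, 2.0, 2.0], [2.0, 2.0, 2.0]]
observed = [[2.0, 1.5, 1.5], [2.0, 0.0, 2.01]]
mask = depth_change_mask(rendered, observed)
```

Only the two pixels at 1.5 m are flagged. The missing reading (0.0) and the small 1 cm deviation are ignored, which is why a later step is needed to decide whether flagged regions move rigidly and to propagate labels to the rest of each object.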
