Ahmed Eldawy

Can SAM recognize crops? Quantifying the zero-shot performance of a semantic segmentation foundation model on generating crop-type maps using satellite imagery for precision agriculture

Dec 04, 2023

Abstract: Climate change is increasingly disrupting worldwide agriculture, making global food production less reliable. To tackle the growing challenges in feeding the planet, cutting-edge management strategies, such as precision agriculture, empower farmers and decision-makers with rich and actionable information to increase the efficiency and sustainability of their farming practices. Crop-type maps are key information for decision-support tools but are challenging and costly to generate. We investigate the capabilities of Meta AI's Segment Anything Model (SAM) for the crop-map prediction task, acknowledging its recent successes at zero-shot image segmentation. However, SAM accepts at most three input channels, and its zero-shot usage is class-agnostic; both limitations pose unique challenges in using it directly for crop-type mapping. We propose using clustering consensus metrics to assess SAM's zero-shot performance in segmenting satellite imagery and producing crop-type maps. Although direct crop-type mapping is challenging using SAM in a zero-shot setting, experiments reveal SAM's potential for swiftly and accurately outlining fields in satellite images, serving as a foundation for subsequent crop classification. This paper attempts to highlight a use case of state-of-the-art image segmentation models like SAM for crop-type mapping and the related specific needs of the agriculture industry, offering a potential avenue for automatic, efficient, and cost-effective data products for precision agriculture practices.
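The abstract does not say which clustering consensus metrics are used. As an illustration only, the sketch below computes the adjusted Rand index (ARI), one widely used consensus metric, between two flattened label maps. ARI compares how pixels are grouped rather than what the groups are called, which is why this kind of metric suits class-agnostic segments. The function name and the toy inputs are hypothetical and are not taken from the paper.

```python
from collections import Counter
from math import comb


def adjusted_rand_index(labels_a, labels_b):
    """Adjusted Rand index between two flat label sequences of equal length.

    Illustrative only: ARI is assumed here as an example of a clustering
    consensus metric; the paper's actual metrics are not given in the abstract.
    """
    if len(labels_a) != len(labels_b):
        raise ValueError("label sequences must have the same length")
    n = len(labels_a)
    if n < 2:
        return 1.0  # fewer than two pixels: no pairs to disagree on

    # Contingency counts: pixels sharing a (segment, class) pair, and marginals.
    pair_counts = Counter(zip(labels_a, labels_b))
    a_counts = Counter(labels_a)
    b_counts = Counter(labels_b)

    sum_pairs = sum(comb(c, 2) for c in pair_counts.values())
    sum_a = sum(comb(c, 2) for c in a_counts.values())
    sum_b = sum(comb(c, 2) for c in b_counts.values())

    expected = sum_a * sum_b / comb(n, 2)  # agreement expected by chance
    max_index = (sum_a + sum_b) / 2
    if max_index == expected:
        return 1.0  # degenerate case, e.g. both maps are a single cluster
    return (sum_pairs - expected) / (max_index - expected)


# Hypothetical toy data: segment ids from a model vs. crop-type ids.
segments = [0, 0, 1, 1]
crop_types = [5, 5, 7, 7]
score = adjusted_rand_index(segments, crop_types)
```

Because the score depends only on co-membership, the arbitrary segment ids need no mapping to crop classes before the comparison.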

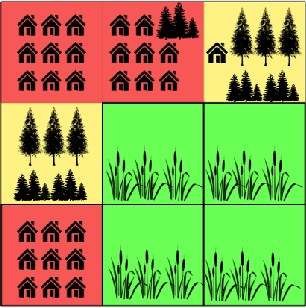

Object Delineation in Satellite Images

Dec 14, 2022

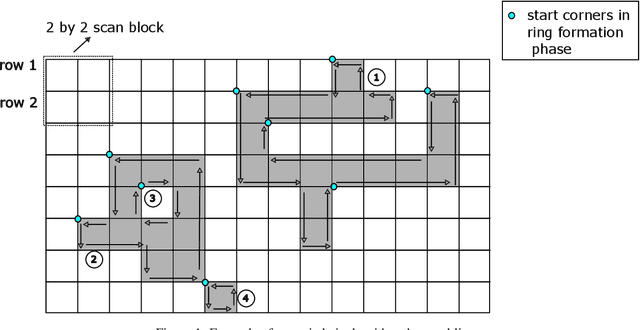

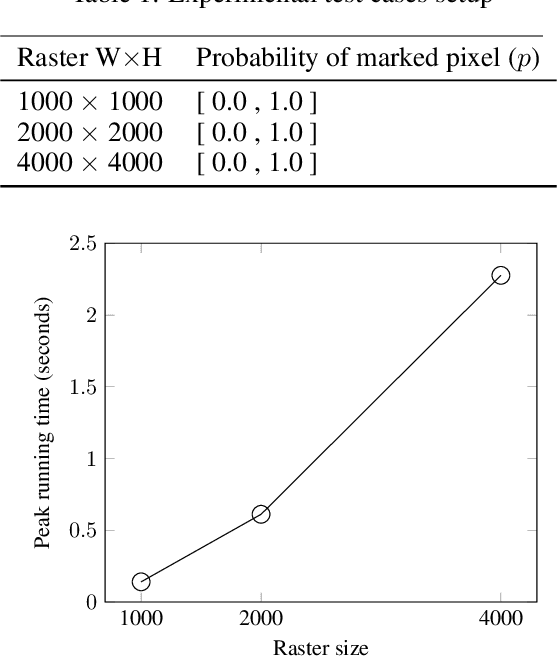

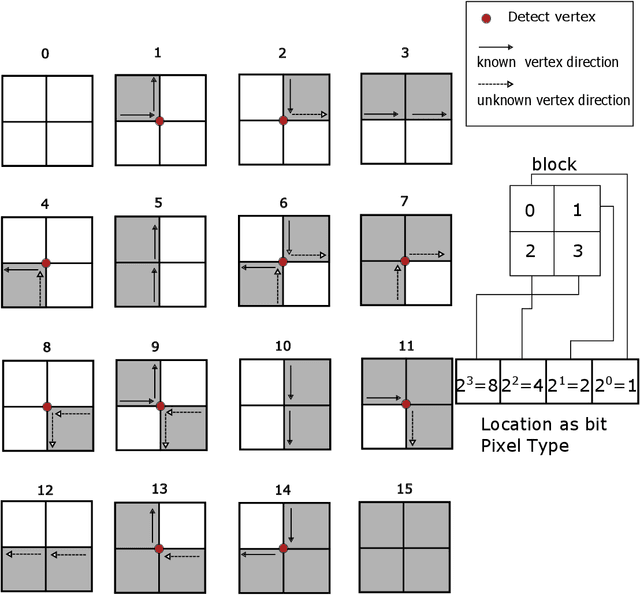

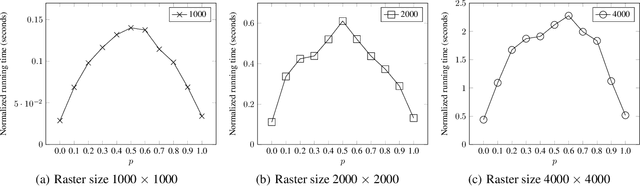

Abstract: Machine learning is being widely applied to analyze satellite data for problems such as classification and feature detection. Unlike traditional image processing algorithms, geospatial applications need to convert the detected objects from a raster form to a geospatial vector form to analyze them further. This gem delivers a simple and lightweight algorithm for delineating the pixels that are marked by ML algorithms to extract geospatial objects from satellite images. The proposed algorithm is exact, and users can further apply simplification and approximation based on the application's needs.
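The abstract gives no details of the algorithm itself. The following is a minimal sketch of one common way to turn marked pixels into exact vector rings, assuming a small in-memory grid of 0/1 values: emit the four directed edges of every marked pixel, cancel edges shared by two marked pixels, and chain what remains into closed rings. The function name `boundary_rings` is hypothetical, and this is not claimed to be the paper's method.

```python
def boundary_rings(mask):
    """Trace the exact pixel-edge boundaries of truthy cells in a 2D grid.

    Returns a list of rings; each ring is a list of (x, y) corner coordinates
    in pixel units, with the closing vertex omitted. Collinear vertices are
    kept, so simplification is left to the caller.
    """
    edges = set()
    for r, row in enumerate(mask):
        for c, marked in enumerate(row):
            if not marked:
                continue
            # Corners of this pixel, walked in a fixed direction.
            corners = [(c, r), (c + 1, r), (c + 1, r + 1), (c, r + 1)]
            for i in range(4):
                a, b = corners[i], corners[(i + 1) % 4]
                if (b, a) in edges:
                    # Same edge seen from a neighboring marked pixel:
                    # it is interior, so the two copies cancel.
                    edges.remove((b, a))
                else:
                    edges.add((a, b))

    # Index the surviving boundary edges by their start vertex.
    outgoing = {}
    for a, b in edges:
        outgoing.setdefault(a, []).append(b)

    # Every vertex has as many incoming as outgoing edges, so following
    # edges from any start vertex always returns to that vertex.
    rings = []
    while outgoing:
        start = next(iter(outgoing))
        ring, current = [], start
        while True:
            ring.append(current)
            targets = outgoing[current]
            nxt = targets.pop()
            if not targets:
                del outgoing[current]
            current = nxt
            if current == start:
                break
        rings.append(ring)
    return rings


# Hypothetical example: a 3x3 block with a hole in the middle
# yields one outer ring and one inner ring.
donut = [
    [1, 1, 1],
    [1, 0, 1],
    [1, 1, 1],
]
rings = boundary_rings(donut)
```

The output follows pixel edges exactly; converting pixel coordinates to geographic coordinates and simplifying the rings would be separate steps.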

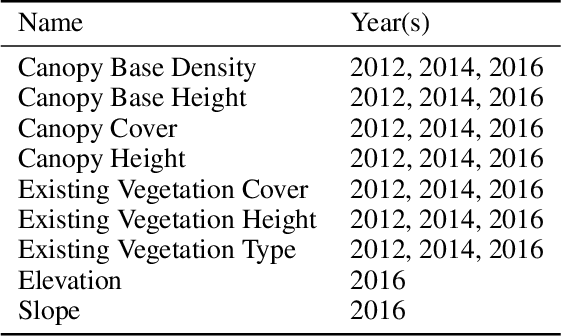

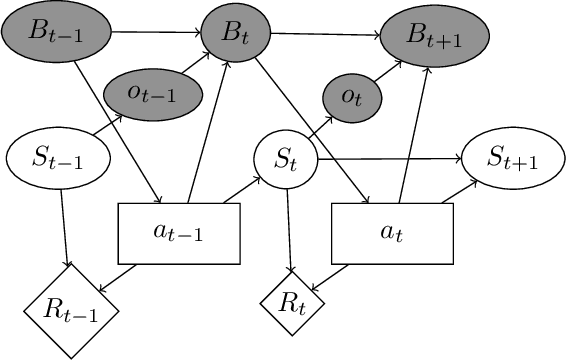

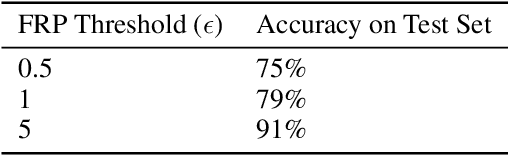

Uncertainty Aware Wildfire Management

Oct 15, 2020

Abstract: Recent wildfires in the United States have resulted in loss of life and billions of dollars in damage, destroying countless structures and forests. Fighting wildfires is extremely complex. It is difficult to observe the true state of fires due to smoke and the risk associated with ground surveillance. There are limited resources to be deployed over a massive area, and the spread of the fire is challenging to predict. This paper proposes a decision-theoretic approach to combat wildfires. We model the resource allocation problem as a partially observable Markov decision process. We also present a data-driven model that lets us simulate how fires spread as a function of relevant covariates. A major problem in using data-driven models to combat wildfires is the lack of comprehensive data sources that relate fires with relevant covariates. We present an algorithmic approach based on large-scale raster and vector analysis that can be used to create such a dataset. Our data with over 2 million data points is the first open-source dataset that combines existing fire databases with covariates extracted from satellite imagery. Through experiments using real-world wildfire data, we demonstrate that our forecasting model can accurately model the spread of wildfires. Finally, we use simulations to demonstrate that our response strategy can significantly reduce response times compared to baseline methods.

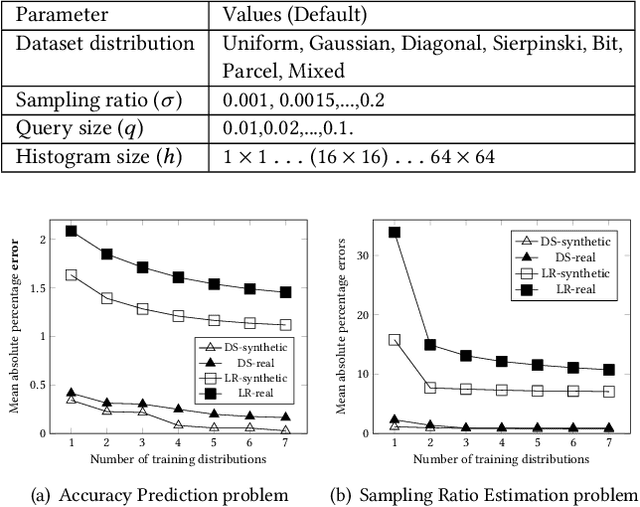

DeepSampling: Selectivity Estimation with Predicted Error and Response Time

Aug 16, 2020

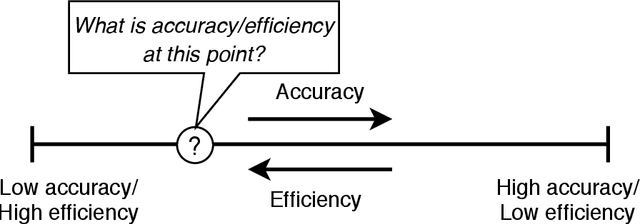

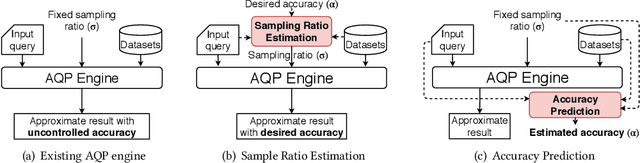

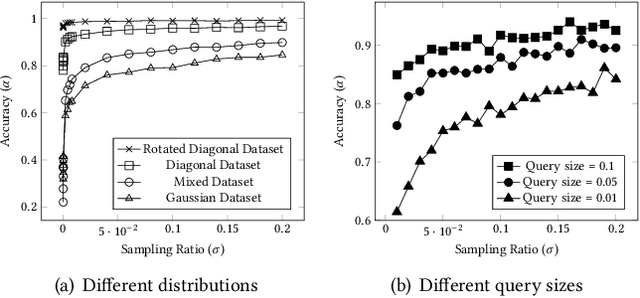

Abstract: The rapid growth of spatial data urges the research community to find efficient processing techniques for interactive queries on large volumes of data. Approximate Query Processing (AQP) is the most prominent technique that can provide real-time answers for ad-hoc queries based on a random sample. Unfortunately, existing AQP methods provide an answer without providing any accuracy metrics due to the complex relationship between the sample size, the query parameters, the data distribution, and the result accuracy. This paper proposes DeepSampling, a deep-learning-based model that predicts the accuracy of a sample-based AQP algorithm, specifically selectivity estimation, given the sample size, the input distribution, and query parameters. The model can also be reversed to determine the sample size that would produce a desired accuracy. DeepSampling is the first system that provides a reliable tool for existing spatial databases to control the accuracy of AQP.

* 9 pages, published in DeepSpatial 2020
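For context on the DeepSampling abstract above, the sketch below shows plain sample-based selectivity estimation for a rectangular range query over 2D points, the kind of AQP answer whose accuracy the model is said to predict. It does not implement DeepSampling. The function name, the `(xmin, ymin, xmax, ymax)` query layout, and the toy data are all assumptions made for illustration.

```python
import random


def estimate_selectivity(points, query, sample_size, seed=0):
    """Estimate the fraction of points inside a rectangle from a random sample.

    points: list of (x, y) tuples.
    query: (xmin, ymin, xmax, ymax), inclusive bounds (assumed layout).
    """
    if not points:
        return 0.0
    xmin, ymin, xmax, ymax = query
    rng = random.Random(seed)  # seeded so the sketch is reproducible
    sample = rng.sample(points, min(sample_size, len(points)))
    hits = sum(1 for x, y in sample if xmin <= x <= xmax and ymin <= y <= ymax)
    return hits / len(sample)


# Hypothetical data: a 100x100 integer grid; the query covers half of it.
grid = [(x, y) for x in range(100) for y in range(100)]
half = (0, 0, 49, 99)
approx = estimate_selectivity(grid, half, sample_size=1000)
exact = estimate_selectivity(grid, half, sample_size=len(grid))
```

The estimate on its own carries no error bound, which is the gap the abstract describes: the error depends on the sample size, the query, and the data distribution.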
