Ahmad Amine

Nonplanar Model Predictive Control for Autonomous Vehicles with Recursive Sparse Gaussian Process Dynamics

Feb 18, 2026

Abstract:This paper proposes a nonplanar model predictive control (MPC) framework for autonomous vehicles operating on nonplanar terrain. To approximate complex vehicle dynamics in such environments, we develop a geometry-aware modeling approach that learns a residual Gaussian Process (GP). By utilizing a recursive sparse GP, the framework enables real-time adaptation to varying terrain geometry. The effectiveness of the learned model is demonstrated in a reference-tracking task using a Model Predictive Path Integral (MPPI) controller. Validation within a custom Isaac Sim environment confirms the framework's capability to maintain high tracking accuracy on challenging 3D surfaces.
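For readers unfamiliar with MPPI, the core update can be sketched in a few lines: sample perturbed control sequences, score each rollout, and average the perturbations with softmax weights on the negative cost. This is a minimal illustration only and not the paper's implementation. The toy integrator in `tracking_cost`, the parameter values, and all function names are assumptions made for the example; the paper would roll out its learned GP-based vehicle model instead.

```python
import math
import random


def mppi_weights(costs, temperature):
    """Softmax weights over rollout costs: lower cost gets higher weight."""
    best = min(costs)  # subtract the minimum for numerical stability
    unnorm = [math.exp(-(c - best) / temperature) for c in costs]
    total = sum(unnorm)
    return [u / total for u in unnorm]


def mppi_step(nominal, rollout_cost, num_samples=256, sigma=0.5,
              temperature=1.0, rng=None):
    """One MPPI update of a nominal control sequence (scalar controls)."""
    rng = rng or random.Random(0)
    horizon = len(nominal)
    # Gaussian perturbations of the nominal control sequence.
    noise = [[rng.gauss(0.0, sigma) for _ in range(horizon)]
             for _ in range(num_samples)]
    # Cost of each perturbed sequence under the (hypothetical) model.
    costs = [rollout_cost([u + e for u, e in zip(nominal, eps)])
             for eps in noise]
    weights = mppi_weights(costs, temperature)
    # Cost-weighted average of the perturbations, added to the nominal.
    return [u + sum(w * eps[t] for w, eps in zip(weights, noise))
            for t, u in enumerate(nominal)]


def tracking_cost(controls, x0=0.0, target=1.0, dt=0.1):
    """Toy stand-in model: 1D integrator tracking a constant reference."""
    x, cost = x0, 0.0
    for u in controls:
        x += u * dt
        cost += (x - target) ** 2
    return cost


nominal = [0.0] * 10
updated = mppi_step(nominal, tracking_cost)
print(tracking_cost(nominal), tracking_cost(updated))
```

In a receding-horizon loop, the first element of `updated` would be applied and the remainder shifted forward as the next nominal sequence.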

SIT-LMPC: Safe Information-Theoretic Learning Model Predictive Control for Iterative Tasks

Feb 18, 2026

Abstract:Robots executing iterative tasks in complex, uncertain environments require control strategies that balance robustness, safety, and high performance. This paper introduces a safe information-theoretic learning model predictive control (SIT-LMPC) algorithm for iterative tasks. Specifically, we design an iterative control framework based on an information-theoretic model predictive control algorithm to address a constrained infinite-horizon optimal control problem for discrete-time nonlinear stochastic systems. An adaptive penalty method is developed to ensure safety while balancing optimality. Trajectories from previous iterations are utilized to learn a value function using normalizing flows, which enables richer uncertainty modeling compared to Gaussian priors. SIT-LMPC is designed for highly parallel execution on graphics processing units, allowing efficient real-time optimization. Benchmark simulations and hardware experiments demonstrate that SIT-LMPC iteratively improves system performance while robustly satisfying system constraints.

* 8 pages, 5 figures. Published in IEEE RA-L, vol. 11, no. 1, Jan. 2026. Presented at ICRA 2026

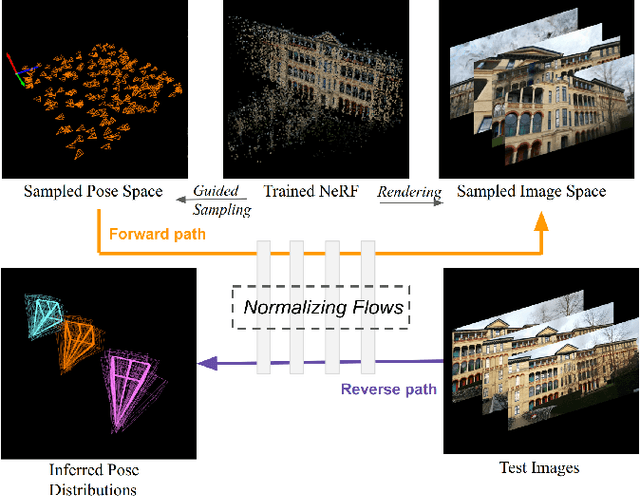

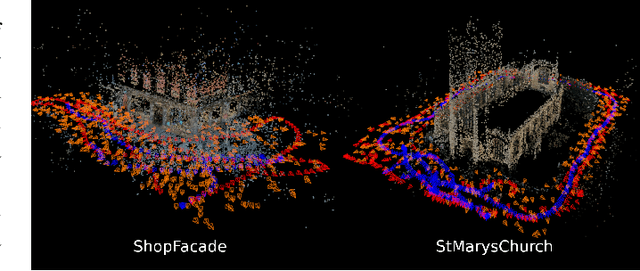

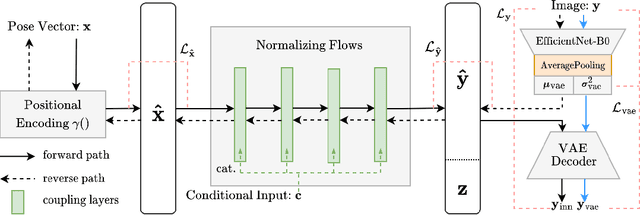

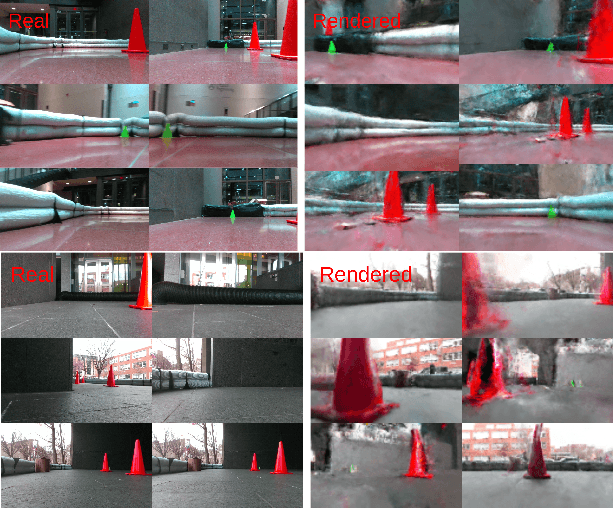

PoseINN: Realtime Visual-based Pose Regression and Localization with Invertible Neural Networks

Apr 20, 2024

Abstract:Estimating ego-pose from cameras is an important problem in robotics with applications ranging from mobile robotics to augmented reality. While state-of-the-art (SOTA) models are becoming increasingly accurate, they can still be unwieldy due to high computational costs. In this paper, we propose to solve the problem by using invertible neural networks (INN) to find the mapping between the latent space of images and poses for a given scene. Our model achieves similar performance to the SOTA while being faster to train and only requiring offline rendering of low-resolution synthetic data. By using normalizing flows, the proposed method also provides uncertainty estimation for the output. We also demonstrate the efficiency of this method by deploying the model on a mobile robot.
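To illustrate what "invertible" means in this setting, the sketch below implements a single affine coupling layer, a common building block of normalizing flows. It is not the PoseINN architecture. The functions `toy_scale` and `toy_shift` are hypothetical placeholders for the small learned networks a real model would use, and the input vector is made up.

```python
import math


def coupling_forward(x, scale_fn, shift_fn):
    """Affine coupling: keep the first half, transform the second half."""
    half = len(x) // 2
    x1, x2 = x[:half], x[half:]
    s, t = scale_fn(x1), shift_fn(x1)
    y2 = [b * math.exp(si) + ti for b, si, ti in zip(x2, s, t)]
    log_det = sum(s)  # log |det Jacobian| of the transformation
    return x1 + y2, log_det


def coupling_inverse(y, scale_fn, shift_fn):
    """Exact inverse: the untouched half lets us recompute scale and shift."""
    half = len(y) // 2
    y1, y2 = y[:half], y[half:]
    s, t = scale_fn(y1), shift_fn(y1)
    x2 = [(b - ti) * math.exp(-si) for b, si, ti in zip(y2, s, t)]
    return y1 + x2


def toy_scale(h):
    # Hypothetical stand-in for a learned scale network.
    return [math.tanh(v) for v in h]


def toy_shift(h):
    # Hypothetical stand-in for a learned shift network.
    return [2.0 * v for v in h]


x = [0.3, -1.2, 0.7, 2.0]
y, log_det = coupling_forward(x, toy_scale, toy_shift)
x_back = coupling_inverse(y, toy_scale, toy_shift)
print(y, log_det, x_back)
```

Because the inverse is exact and the log-determinant is cheap to compute, sampling through such layers is one way a flow-based model can produce a distribution over outputs rather than a single point estimate.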

Human-Robot Interaction using VAHR: Virtual Assistant, Human, and Robots in the Loop

Mar 31, 2023

Abstract:Robots have become ubiquitous tools in various industries and households, highlighting the importance of human-robot interaction (HRI). This has increased the need for easy and accessible communication between humans and robots. Recent research has focused on the intersection of virtual assistant technology, such as Amazon's Alexa, with robots and its effect on HRI. This paper presents the Virtual Assistant, Human, and Robots in the loop (VAHR) system, which utilizes bidirectional communication to control multiple robots through Alexa. VAHR's performance was evaluated through a human-subjects experiment comparing objective and subjective metrics of a traditional keyboard-and-mouse interface to those of VAHR. The results showed that VAHR required 41% less Robot Attention Demand and ensured 91% more Fan-out time compared to the standard method. Additionally, VAHR led to a 62.5% improvement in multi-tasking, highlighting the potential for efficient human-robot interaction in physically and mentally demanding scenarios. However, subjective metrics revealed a need for human operators to build confidence and trust with this new method of operation.

Ensemble Gaussian Processes for Adaptive Autonomous Driving on Multi-friction Surfaces

Mar 23, 2023

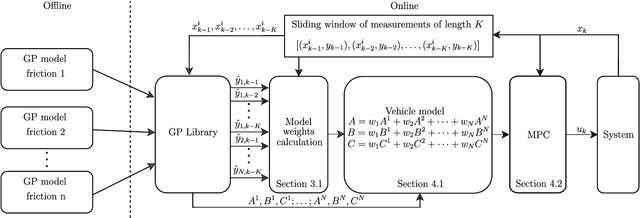

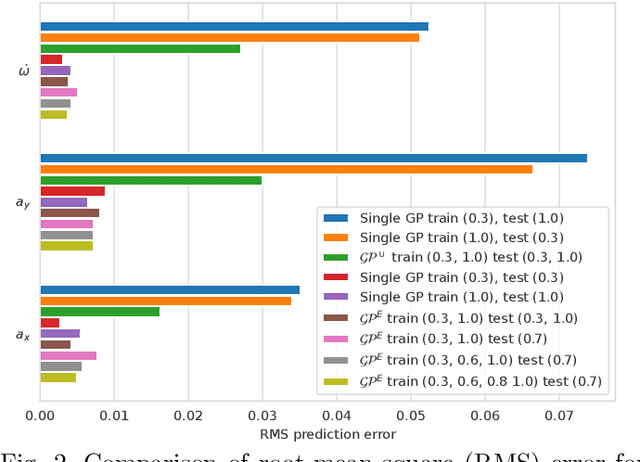

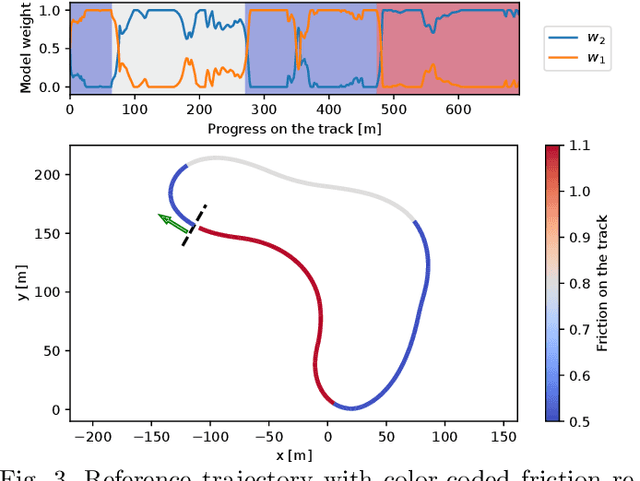

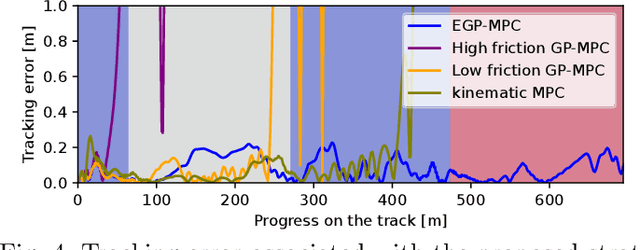

Abstract:Driving under varying road conditions is challenging, especially for autonomous vehicles that must adapt in real time to changes in the environment such as rain or snow. It is difficult to apply offline learning-based methods in these time-varying settings, as the controller should be trained on datasets representing all conditions it might encounter in the future. While online learning may adapt a model from real-time data, its convergence is often too slow for fast-varying road conditions. We study this problem in autonomous racing, where driving at the limits of handling under varying road conditions is required for winning races. We propose a computationally efficient approach that leverages an ensemble of Gaussian processes (GPs) to generalize and adapt pre-trained GPs to unseen conditions. Each GP is trained on driving data with a different road surface friction. A time-varying convex combination of these GPs is used within a model predictive control (MPC) framework, where the model weights are adapted online to the current road condition based on real-time data. The predictive variance of the ensemble Gaussian process (EGP) model allows the controller to account for prediction uncertainty and enables safe autonomous driving. Extensive simulations of a full-scale autonomous car demonstrated the effectiveness of our proposed EGP-MPC method for providing good tracking performance in varying road conditions and the ability to generalize to unknown maps.
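The idea of a convex combination of per-friction GPs can be sketched as follows. This is an illustration under stated assumptions, not the paper's algorithm: the abstract does not specify how the weights are adapted, so the example substitutes a simple likelihood-based reweighting, and the variance formula assumes the individual GPs are independent. All numbers and function names are made up for the example.

```python
import math


def combine(weights, means, variances):
    """Mean and variance of a convex combination of independent predictions."""
    mean = sum(w * m for w, m in zip(weights, means))
    var = sum(w * w * v for w, v in zip(weights, variances))
    return mean, var


def update_weights(weights, means, variances, observed, floor=1e-6):
    """Reweight models by how well each one explains the new observation.

    Assumption: a Gaussian-likelihood update stands in for the paper's
    (unspecified here) online weight adaptation.
    """
    likes = [math.exp(-0.5 * (observed - m) ** 2 / v)
             / math.sqrt(2.0 * math.pi * v)
             for m, v in zip(means, variances)]
    # The floor keeps every model recoverable if conditions change later.
    unnorm = [max(w * l, floor) for w, l in zip(weights, likes)]
    total = sum(unnorm)
    return [u / total for u in unnorm]  # nonnegative and sums to one


# Hypothetical one-step predictions from GPs trained on low, medium, and
# high friction data, plus one measured value.
weights = [1.0 / 3.0] * 3
means = [0.2, 0.6, 1.0]
variances = [0.01, 0.01, 0.01]
weights = update_weights(weights, means, variances, observed=0.62)
print(weights, combine(weights, means, variances))
```

The combined variance is what a controller could use to tighten constraints or penalize uncertain predictions when the current surface is poorly explained by every model in the ensemble.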
