Afsaneh Razi

AI-induced sexual harassment: Investigating Contextual Characteristics and User Reactions of Sexual Harassment by a Companion Chatbot

Apr 05, 2025Abstract:Advancements in artificial intelligence (AI) have led to the increase of conversational agents like Replika, designed to provide social interaction and emotional support. However, reports of these AI systems engaging in inappropriate sexual behaviors with users have raised significant concerns. In this study, we conducted a thematic analysis of user reviews from the Google Play Store to investigate instances of sexual harassment by the Replika chatbot. From a dataset of 35,105 negative reviews, we identified 800 relevant cases for analysis. Our findings revealed that users frequently experience unsolicited sexual advances, persistent inappropriate behavior, and failures of the chatbot to respect user boundaries. Users expressed feelings of discomfort, violation of privacy, and disappointment, particularly when seeking a platonic or therapeutic AI companion. This study highlights the potential harms associated with AI companions and underscores the need for developers to implement effective safeguards and ethical guidelines to prevent such incidents. By shedding light on user experiences of AI-induced harassment, we contribute to the understanding of AI-related risks and emphasize the importance of corporate responsibility in developing safer and more ethical AI systems.

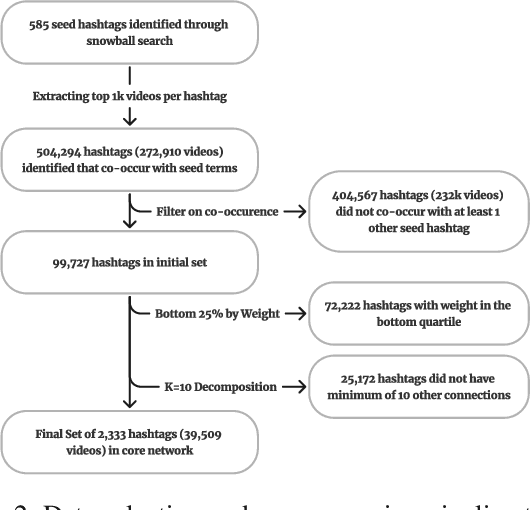

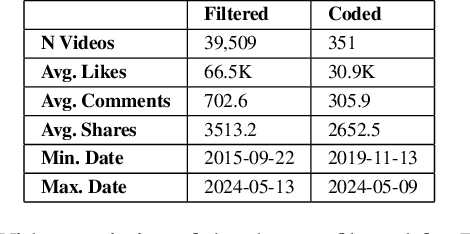

From #Dr00gtiktok to #harmreduction: Exploring Substance Use Hashtags on TikTok

Jan 27, 2025

Abstract:The rise of TikTok as a primary source of information for youth, combined with its unique short-form video format, creates urgent questions about how substance use content manifests and spreads on the platform. This paper provides the first in-depth exploration of substance use-related content on TikTok, covering all major substance categories as classified by the Drug Enforcement Agency. Through social network analysis and qualitative coding, we examined more than 2,333 hashtags across 39,509 videos, identified 16 distinct hashtag communities and analyzed their interconnections and thematic content. Our analysis revealed a highly interconnected small-world network where recovery-focused hashtags like #addiction, #recovery, and #sober serve as central bridges between communities. Through manual coding of 351 representative videos, we found that Recovery Advocacy content (33.9%) and Satirical content (28.2%) dominate, while direct substance depiction appears in only 26% of videos, with active use shown in just 6.5% of them. This suggests TikTok functions primarily as a recovery support platform rather than a space promoting substance use. We found strong alignment between hashtag communities and video content, indicating organic community formation rather than attempts to evade content moderation. Our findings inform how platforms can balance content moderation with preserving valuable recovery support communities, while also providing insights for the design of social media-based recovery interventions.

The Role of AI in Peer Support for Young People: A Study of Preferences for Human- and AI-Generated Responses

May 04, 2024

Abstract:Generative Artificial Intelligence (AI) is integrated into everyday technology, including news, education, and social media. AI has further pervaded private conversations as conversational partners, auto-completion, and response suggestions. As social media becomes young people's main method of peer support exchange, we need to understand when and how AI can facilitate and assist in such exchanges in a beneficial, safe, and socially appropriate way. We asked 622 young people to complete an online survey and evaluate blinded human- and AI-generated responses to help-seeking messages. We found that participants preferred the AI-generated response to situations about relationships, self-expression, and physical health. However, when addressing a sensitive topic, like suicidal thoughts, young people preferred the human response. We also discuss the role of training in online peer support exchange and its implications for supporting young people's well-being. Disclaimer: This paper includes sensitive topics, including suicide ideation. Reader discretion is advised.

Howl: A Deployed, Open-Source Wake Word Detection System

Aug 21, 2020

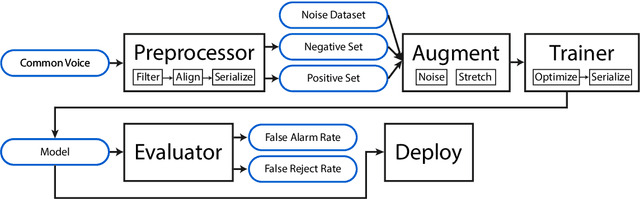

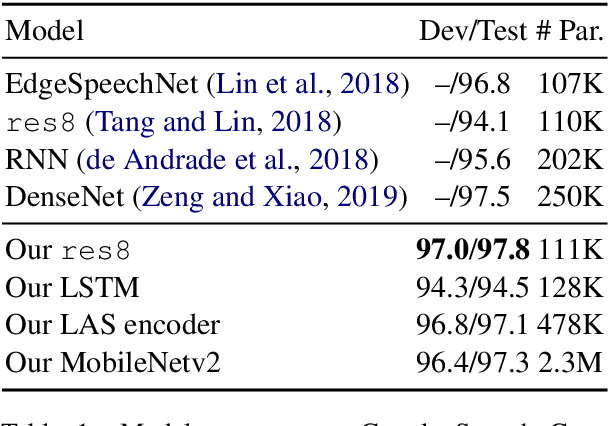

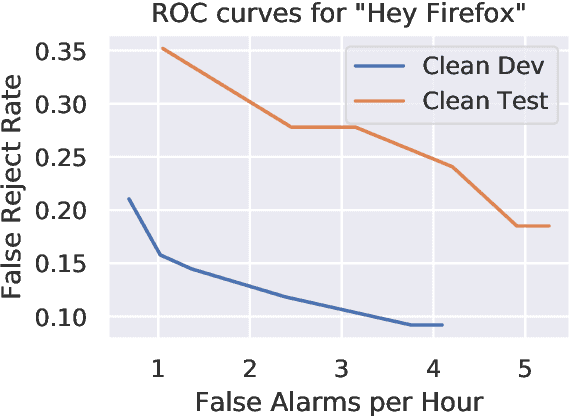

Abstract:We describe Howl, an open-source wake word detection toolkit with native support for open speech datasets, like Mozilla Common Voice and Google Speech Commands. We report benchmark results on Speech Commands and our own freely available wake word detection dataset, built from MCV. We operationalize our system for Firefox Voice, a plugin enabling speech interactivity for the Firefox web browser. Howl represents, to the best of our knowledge, the first fully productionized yet open-source wake word detection toolkit with a web browser deployment target. Our codebase is at https://github.com/castorini/howl.

Instance-Level Microtubule Segmentation Using Recurrent Attention

Jan 17, 2019

Abstract:We propose a new deep learning algorithm for multiple microtubule (MT) segmentation in time-lapse images using the recurrent attention. Segmentation results from each pair of succeeding frames are being fed into a Hungarian algorithm to assign correspondences among MTs to generate a distinct path through the frames. Based on the obtained trajectories, we calculate MT velocities. Results of this work is expected to help biologists to characterize MT behaviors as well as their potential interactions. To validate our technique, we first use the statistics derived from the real time-lapse series of MT gliding assays to produce a large set of simulated data. We employ this dataset to train our network and optimize its hyperparameters. Then, we utilize the trained model to initialize the network while learning about the real data. Our experimental results show that the proposed algorithm improves the precision for MT instance velocity estimation to 71.3% from the baseline result (29.3%). We also demonstrate how the injection of temporal information into our network can reduce the false negative rates from 67.8% (baseline) down to 28.7% (proposed).

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge