Adams Wai Kin Kong

Finite Volume Features, Global Geometry Representations, and Residual Training for Deep Learning-based CFD Simulation

Nov 24, 2023

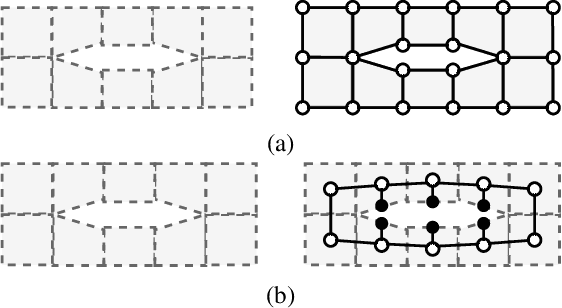

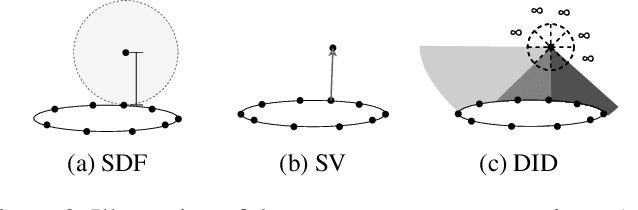

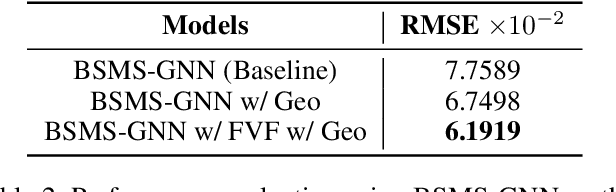

Abstract: Computational fluid dynamics (CFD) simulation is an irreplaceable modelling step in many engineering designs, but it is often computationally expensive. Some graph neural network (GNN)-based CFD methods have been proposed. However, the current methods inherit the weaknesses of traditional numerical simulators and ignore the cell characteristics of the mesh used in the finite volume method, a common method in practical CFD applications. Specifically, the input nodes in these GNN methods have very limited information about any object immersed in the simulation domain and its surrounding environment. Also, cell characteristics of the mesh such as cell volume, face surface area, and face centroid are not included in the message-passing operations of the GNN methods. To address these weaknesses, this work proposes two novel geometric representations: Shortest Vector (SV) and Directional Integrated Distance (DID). Extracted from the mesh, the SV and DID provide a global geometry perspective to each input node, thus removing the need to collect this information through message-passing. This work also introduces the use of Finite Volume Features (FVF) in the graph convolutions as node and edge attributes, enabling the message-passing operations to adjust to different nodes. Finally, this work is the first to demonstrate how residual training, with the availability of low-resolution data, can be adopted to improve the flow field prediction accuracy. Experimental results on two datasets with five different state-of-the-art GNN methods for CFD indicate that SV, DID, FVF and residual training can effectively reduce the predictive error of current GNN-based methods by as much as 41%.
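The residual training idea mentioned in the abstract can be illustrated with a minimal sketch. The abstract does not spell out the formulation, so the following is an assumption: the model is trained to predict the difference between the high-resolution field and a low-resolution field interpolated onto the fine mesh, and the final prediction is the interpolated field plus the predicted residual. The 1-D grid, the use of linear interpolation, and all function names are hypothetical and chosen only for illustration.

```python
import numpy as np


def upsample_to_fine(coarse_x, coarse_values, fine_x):
    """Linearly interpolate a coarse 1-D field onto the fine grid (assumed scheme)."""
    return np.interp(fine_x, coarse_x, coarse_values)


def residual_target(fine_values, coarse_on_fine):
    """Training target under the assumed formulation: fine field minus upsampled coarse field."""
    return fine_values - coarse_on_fine


def reconstruct(coarse_on_fine, predicted_residual):
    """Final prediction: upsampled coarse field plus the residual a model would predict."""
    return coarse_on_fine + predicted_residual


# Toy data standing in for a high-resolution and a low-resolution solution.
fine_x = np.linspace(0.0, 1.0, 9)
coarse_x = np.linspace(0.0, 1.0, 3)
fine_field = np.sin(np.pi * fine_x)
coarse_field = np.sin(np.pi * coarse_x)

coarse_on_fine = upsample_to_fine(coarse_x, coarse_field, fine_x)
target = residual_target(fine_field, coarse_on_fine)

# With a perfect residual prediction, the fine field is recovered exactly.
recovered = reconstruct(coarse_on_fine, target)
```

In this sketch the residual is zero wherever the coarse grid already matches the fine solution, so a learned model only has to account for what the low-resolution data misses.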

Improving Hand Recognition in Uncontrolled and Uncooperative Environments using Multiple Spatial Transformers and Loss Functions

Nov 09, 2023

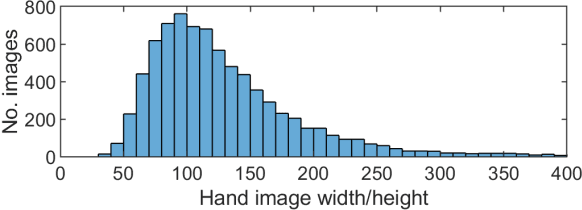

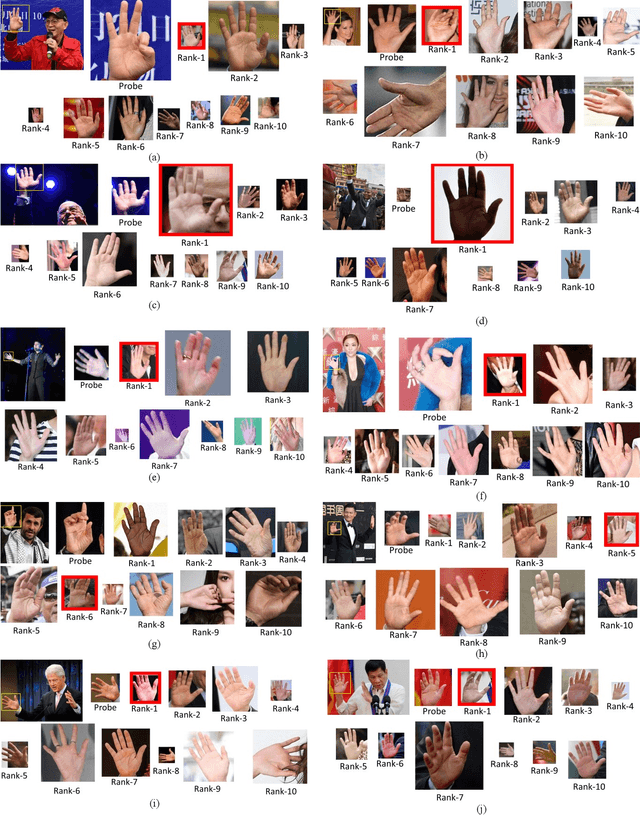

Abstract: The prevalence of smartphones and consumer cameras has led to more evidence in the form of digital images, which are mostly taken in uncontrolled and uncooperative environments. In these images, criminals likely hide or cover their faces while their hands are observable in some cases, creating a challenging use case for forensic investigation. Many existing hand-based recognition methods perform well for hand images collected in controlled environments with user cooperation. However, their performance deteriorates significantly in uncontrolled and uncooperative environments. A recent work has shown the potential of hand recognition in these environments. However, only the palmar regions were considered, and the recognition performance is still far from satisfactory. To improve the recognition accuracy, an algorithm integrating a multi-spatial transformer network (MSTN) and multiple loss functions is proposed to fully utilize the information in full hand images. MSTN is first employed to localize the palms and fingers and estimate the alignment parameters. Then, the aligned images are fed into pretrained convolutional neural networks, where features are extracted. Finally, a training scheme with multiple loss functions is used to train the network end-to-end. To demonstrate the effectiveness of the proposed algorithm, the trained model is evaluated on the NTU-PI-v1 database and six benchmark databases from different domains. Experimental results show that the proposed algorithm performs significantly better than the existing methods in these uncontrolled and uncooperative environments and generalizes well to samples from different domains.

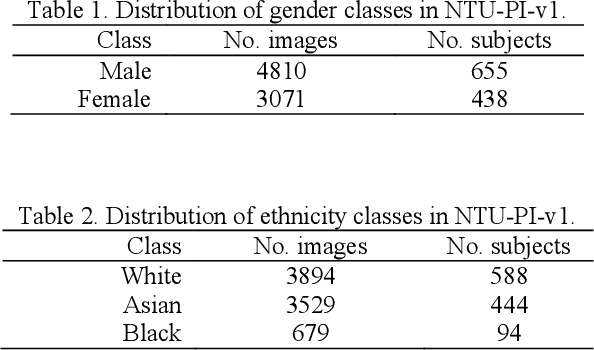

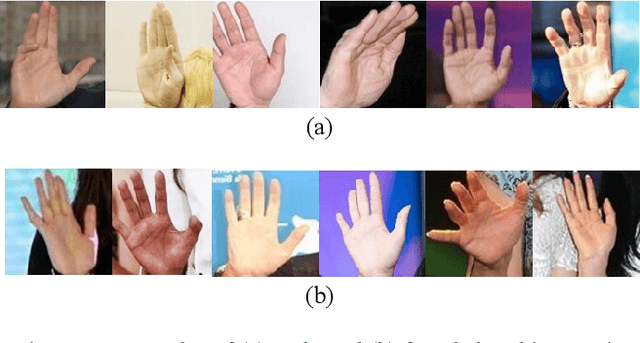

Gender and Ethnicity Classification based on Palmprint and Palmar Hand Images from Uncontrolled Environment

Aug 06, 2020

Abstract: Soft biometric attributes such as gender, ethnicity or age may provide useful information for biometrics and forensics applications. Researchers have used traits such as face, gait, iris, and hand to classify such attributes. Even though the hand has been widely studied for biometric recognition, relatively little attention has been given to soft biometrics from the hand. Previous studies of soft biometrics based on hand images focused on gender and well-controlled imaging environments. In this paper, gender and ethnicity classification in uncontrolled environments is considered. Gender and ethnicity labels are collected and provided for subjects in a publicly available database, which contains hand images from the Internet. Five deep learning models are fine-tuned and evaluated in gender and ethnicity classification scenarios based on palmar 1) full hand, 2) segmented hand and 3) palmprint images. The experimental results indicate that for gender and ethnicity classification in uncontrolled environments, full and segmented hand images are more suitable than palmprint images.

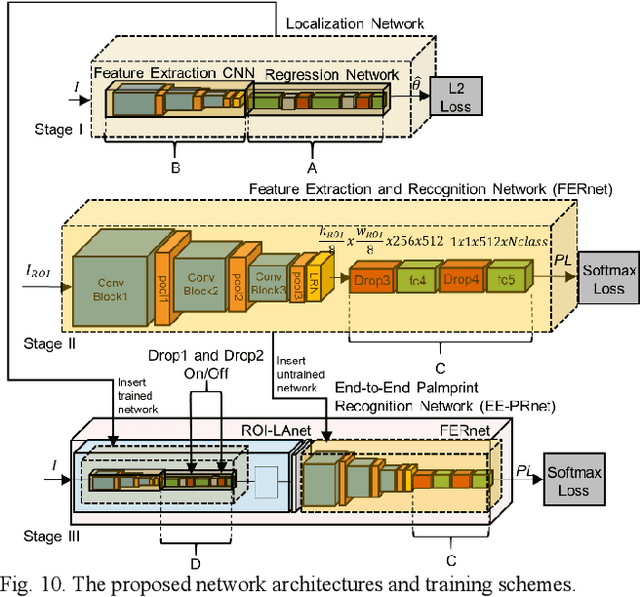

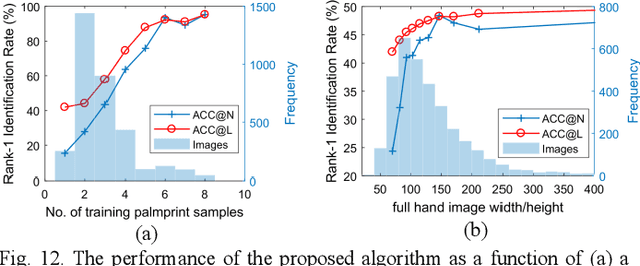

Palmprint Recognition in Uncontrolled and Uncooperative Environment

Nov 28, 2019

Abstract: Online palmprint recognition and latent palmprint identification are two branches of palmprint studies. The former uses middle-resolution images collected by a digital camera in a well-controlled or contact-based environment with user cooperation for commercial applications, and the latter uses high-resolution latent palmprints collected at crime scenes for forensic investigation. However, these two branches do not cover some palmprint images which have potential for forensic investigation. Due to the prevalence of smartphones and consumer cameras, more evidence is in the form of digital images taken in uncontrolled and uncooperative environments, e.g., child pornographic images and terrorist images, where the criminals commonly hide or cover their faces. Their palms, however, may be observable. To study palmprint identification on images collected in uncontrolled and uncooperative environments, a new palmprint database is established and an end-to-end deep learning algorithm is proposed. The new database, named NTU Palmprints from the Internet (NTU-PI-v1), contains 7881 images from 2035 palms collected from the Internet. The proposed algorithm consists of an alignment network and a feature extraction network and is end-to-end trainable. The proposed algorithm is compared with state-of-the-art online palmprint recognition methods and evaluated on three public contactless palmprint databases (IITD, CASIA, and PolyU) and two new databases (NTU-PI-v1 and the NTU contactless palmprint database). The experimental results show that the proposed algorithm outperforms the existing palmprint recognition methods.

A Study on Wrist Identification for Forensic Investigation

Oct 08, 2019

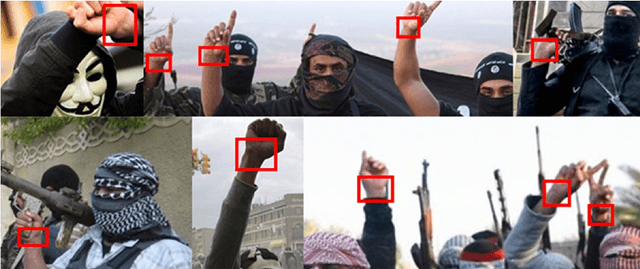

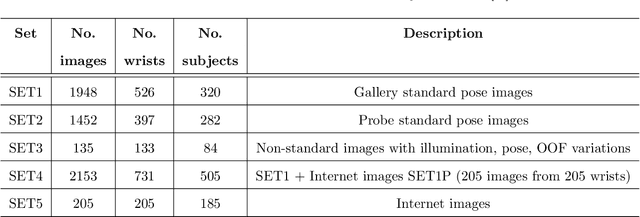

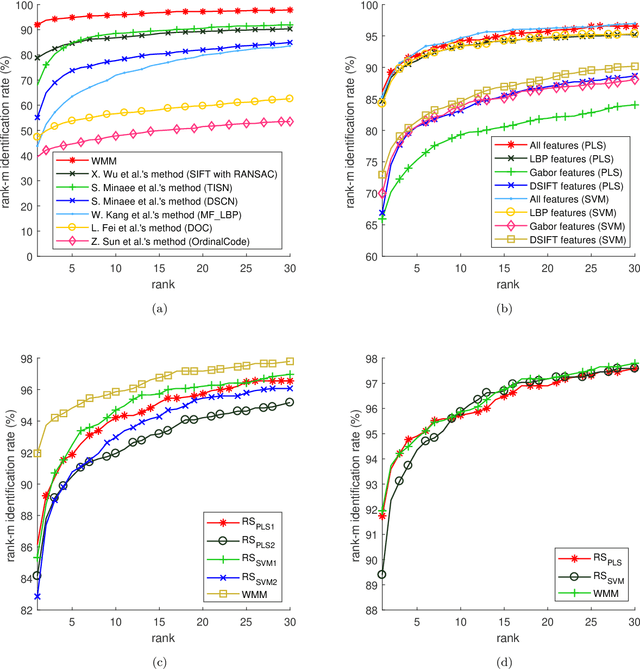

Abstract: Criminal and victim identification based on crime scene images is an important part of forensic investigation. Criminals usually avoid identification by covering their faces and tattoos in the evidence images, which are taken in uncontrolled environments. Existing identification methods, which make use of biometric traits such as vein, skin mark, height, skin color, weight, and race, are considered for solving this problem. The soft biometric traits, including skin color, gender, height, weight and race, provide useful information but are not distinctive enough. Veins and skin marks are limited to high-resolution images, and some body sites may have neither enough skin marks nor clear veins. Terrorists and rioters tend to expose their wrists in a gesture of triumph, greeting or salute, while paedophiles usually show them when touching victims. However, wrists have been neglected by the biometric community for forensic applications. In this paper, a wrist identification algorithm, which includes skin segmentation, key point localization, image-to-template alignment, large feature set extraction, and classification, is proposed. The proposed algorithm is evaluated on NTU-Wrist-Image-Database-v1, which consists of 3945 images from 731 different wrists, including 205 pairs of wrist images collected from the Internet, taken under uneven illumination with different poses and resolutions. The experimental results show that the wrist is a useful clue for criminal and victim identification. Keywords: biometrics, criminal and victim identification, forensics, wrist.

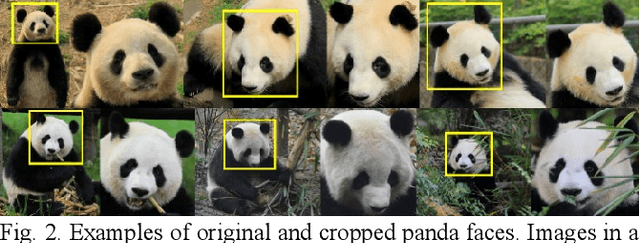

Giant Panda Face Recognition Using Small Dataset

May 27, 2019

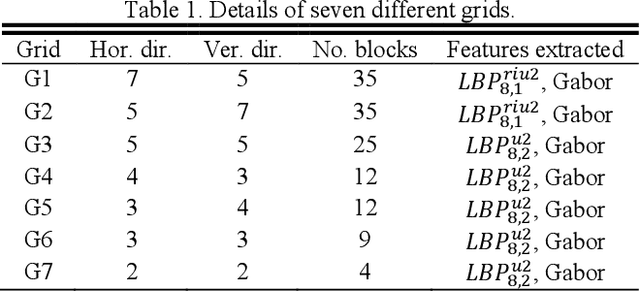

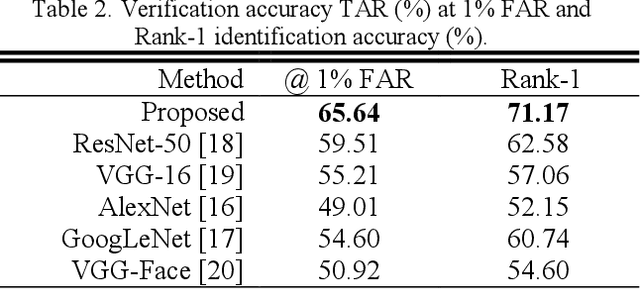

Abstract: The giant panda (panda) is a highly endangered animal. Significant efforts and resources have been put into panda conservation. To measure the effectiveness of conservation schemes, estimating the panda population size in the wild is an important task. The current population estimation approaches, including capture-recapture, human visual identification and collection of DNA from hair or feces, are invasive, subjective, costly or even dangerous to the workers who perform these tasks in the wild. Cameras have been widely installed in the regions where pandas live. This opens a new possibility for non-invasive, image-based panda recognition. Panda face recognition is naturally a small dataset problem, because of the number of pandas in the world and the number of qualified images captured by the cameras in each encounter. In this paper, a panda face recognition algorithm, which includes alignment, large feature set extraction and matching, is proposed and evaluated on a dataset consisting of 163 images. The experimental results are encouraging.
