Adam Wolniakowski

Neural network modelling of kinematic and dynamic features for signature verification

Nov 26, 2024

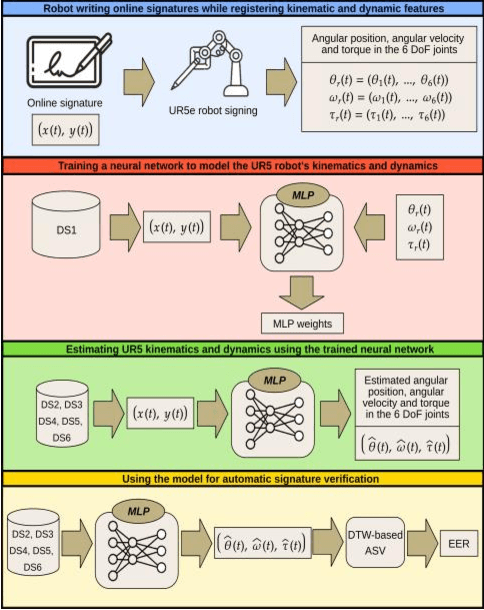

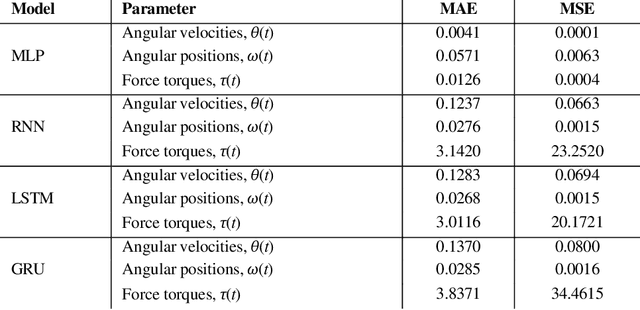

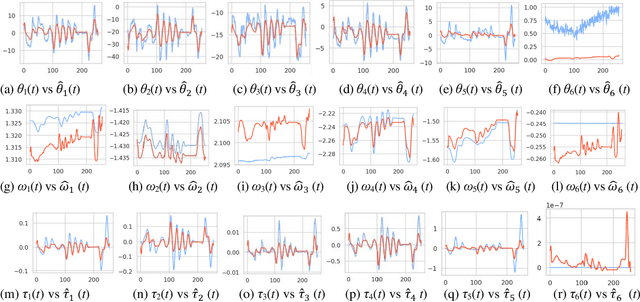

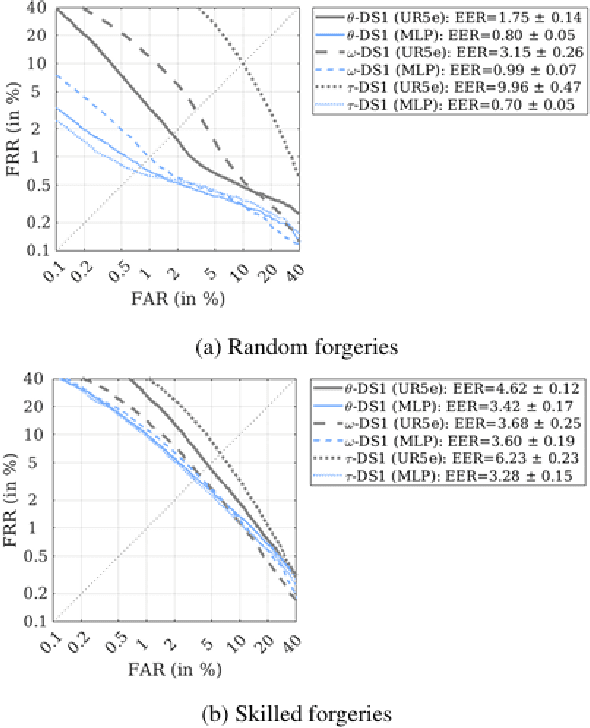

Abstract: Online signature parameters, which are based on human characteristics, broaden the applicability of an automatic signature verifier. Although kinematic and dynamic features have previously been suggested, accurately measuring features such as arm and forearm torques remains challenging. We present two approaches for estimating angular velocities, angular positions, and force torques. The first approach uses a physical UR5e robotic arm to reproduce a signature while capturing those parameters over time. The second, a cost-effective approach, uses a neural network to estimate the same parameters. Our findings demonstrate that a simple neural network model can extract effective parameters for signature verification. Training the neural network with the MCYT300 dataset and cross-validating with other databases, namely BiosecurID, Visual, Blind, OnOffSigDevanagari 75 and OnOffSigBengali 75, confirms the model's generalization capability.

Towards human-like kinematics in industrial robotic arms: a case study on a UR3 robot

Sep 25, 2024

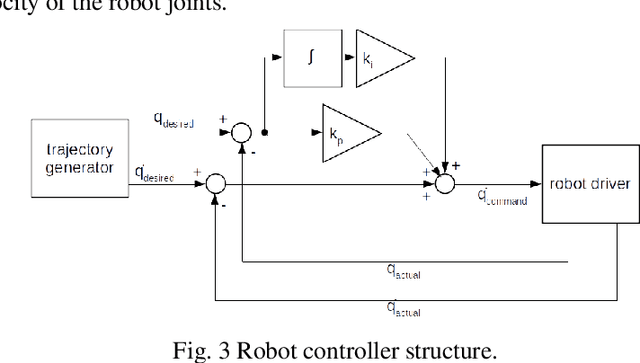

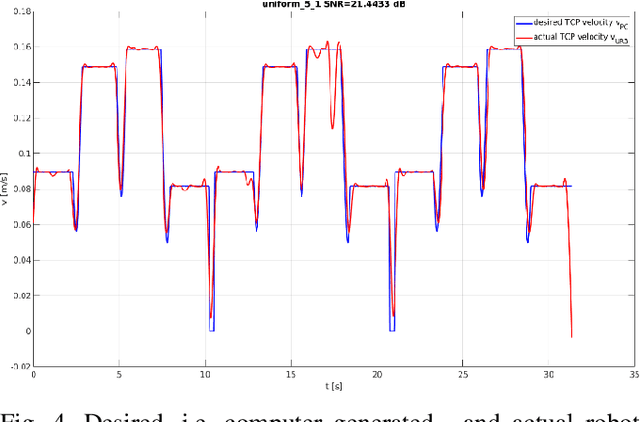

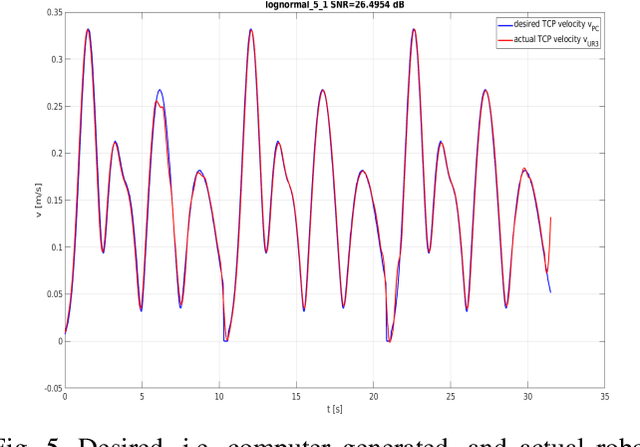

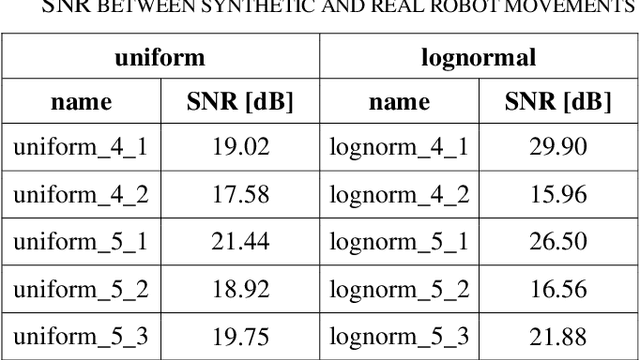

Abstract: Safety in industrial robotic environments is a hot research topic in the area of human-robot interaction (HRI). Until now, a robotic arm on an assembly line has interacted with other machines, away from human workers. Nowadays, robotic arm manufacturers aim for their robots to increasingly perform tasks in collaboration with humans. One way to improve this collaboration is to make the movement of robots more human-like. This would make it easier for a human to foresee the movement of the robot and approach it without fear of contact. The main difference between the movement of a human arm and that of a robotic arm is that the former has a bell-shaped speed profile while the latter has a uniform one. To generate the bell-shaped speed profile, the kinematic theory of rapid human movements and its Sigma-Lognormal model have been used. This model is widely used to explain most of the basic phenomena related to the control of human movements. Both human-like and robotic-like movements are transferred to the UR3 robot. In this paper we detail how the UR3 robot was programmed to produce both kinds of movement. The dissimilarity results between the motion input to the robot and its output motion confirm the possibility of developing human-like velocities in the UR3 robot.

* 6 pages, 5 figures

Uniform vs. Lognormal Kinematics in Robots: Perceptual Preferences for Robotic Movements

May 29, 2024

Abstract: Collaborative robots, or cobots, interact with humans in a common work environment. One under-investigated but important issue in cobots is their movement and how it is perceived by humans. This paper analyzes whether humans prefer a robot moving in a human or in a robotic fashion. To this end, the present work lays out what differentiates the movement performed by an industrial robotic arm from that performed by a human one. The main difference is that the robotic movement has a trapezoidal speed profile, while the speed profile of the human arm is bell-shaped and, during complex movements, can be considered a sum of superimposed bell-shaped movements. Based on the lognormality principle, a procedure was developed for a robotic arm to perform human-like movements. Both speed profiles were implemented in two industrial robots, namely an ABB IRB 120 and a Universal Robot UR3. Three tests were used to study the subjects' preference when seeing both movements, and another analyzed their preference when interacting with the robot by touching its ends with their fingers.
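
The contrast between the two speed profiles can be sketched in a few lines of Python. This is only an illustration, not the procedure used in the paper: it assumes the standard single-stroke lognormal speed equation from the kinematic theory, and the function names and all parameter values (`D`, `t0`, `mu`, `sigma`, `v_max`, `t_acc`, `t_total`) are hypothetical choices made for the example.

```python
import math


def lognormal_speed(t, D=1.0, t0=0.0, mu=-1.0, sigma=0.3):
    """Speed of one lognormal stroke at time t (illustrative parameters).

    D is the stroke amplitude (distance covered), t0 the onset time,
    mu and sigma the log-time delay and log-response time.
    """
    if t <= t0:
        return 0.0
    dt = t - t0
    norm = D / (sigma * dt * math.sqrt(2.0 * math.pi))
    return norm * math.exp(-((math.log(dt) - mu) ** 2) / (2.0 * sigma ** 2))


def trapezoidal_speed(t, v_max=1.0, t_acc=0.2, t_total=1.0):
    """Trapezoidal profile: linear ramp up, constant cruise, linear ramp down."""
    if t < 0.0 or t > t_total:
        return 0.0
    if t < t_acc:
        return v_max * t / t_acc
    if t > t_total - t_acc:
        return v_max * (t_total - t) / t_acc
    return v_max


def integrate(profile, t_end, n=3000):
    """Distance travelled, by the trapezoidal rule on n equal steps."""
    h = t_end / n
    samples = [profile(i * h) for i in range(n + 1)]
    return h * (sum(samples) - 0.5 * (samples[0] + samples[-1]))


if __name__ == "__main__":
    for t in (0.1, 0.2, 0.3, 0.4, 0.5, 0.8):
        print(f"t={t:.1f}  lognormal={lognormal_speed(t):.3f}  "
              f"trapezoidal={trapezoidal_speed(t):.3f}")
```

The lognormal profile rises and falls asymmetrically around a single peak, whereas the trapezoidal one holds a constant cruise speed between its ramps. A complex movement would combine several such strokes with different onsets and directions, which this single-stroke sketch does not attempt.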
