Abu Md Niamul Taufique

SiamReID: Confuser Aware Siamese Tracker with Re-identification Feature

Apr 15, 2021

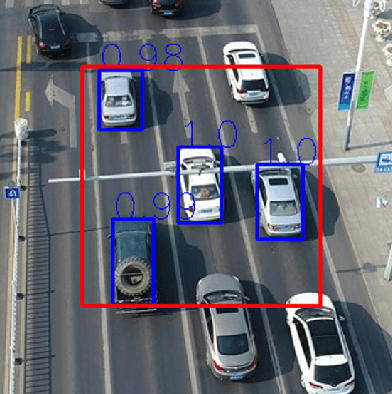

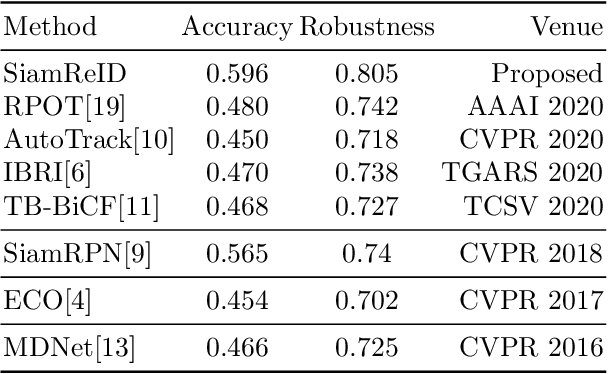

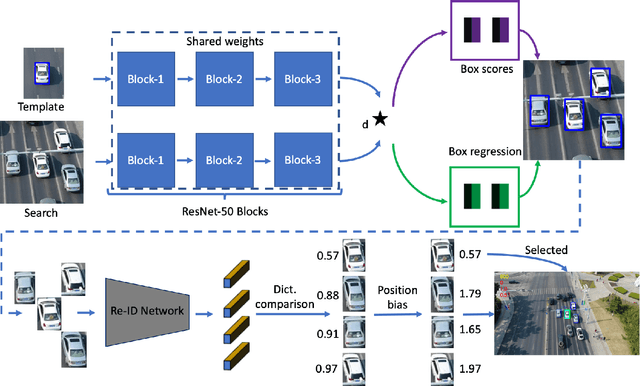

Abstract: Siamese deep-network trackers have received significant attention in recent years due to their real-time speed and state-of-the-art performance. However, Siamese trackers suffer from similar-looking confusers, which are prevalent in aerial imagery and create challenging conditions, particularly after prolonged occlusions when the tracked object re-appears under a different pose and illumination. Our work proposes SiamReID, a novel re-identification framework for Siamese trackers that incorporates confuser rejection during prolonged occlusions and is well suited for aerial tracking. The re-identification feature is trained using both a triplet loss and a class-balanced loss. Our approach achieves state-of-the-art performance on the UAVDT single object tracking benchmark.
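As a rough illustration of the triplet loss mentioned in the abstract, the sketch below uses the common hinge formulation on Euclidean distances. The function names, the margin value, and the use of plain Python lists as embeddings are assumptions made for illustration; the abstract does not specify the exact variant used in SiamReID.

```python
import math


def euclidean(a, b):
    """Euclidean distance between two equal-length embedding vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def triplet_loss(anchor, positive, negative, margin=0.3):
    """Hinge-style triplet loss: max(0, d(a, p) - d(a, n) + margin).

    The margin of 0.3 is an illustrative default, not a value from the paper.
    """
    d_pos = euclidean(anchor, positive)
    d_neg = euclidean(anchor, negative)
    return max(0.0, d_pos - d_neg + margin)


# The target embedding sits close to the anchor, the confuser far away,
# so the loss is zero; swapping them produces a positive penalty.
target_ok = triplet_loss([0.0, 0.0], [0.1, 0.0], [3.0, 4.0])
target_bad = triplet_loss([0.0, 0.0], [3.0, 4.0], [0.1, 0.0])
```

In this toy setting the loss only vanishes once the confuser embedding is at least `margin` farther from the anchor than the true target, which is the property a re-identification feature needs in order to reject confusers.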

Hyperspectral Pigment Analysis of Cultural Heritage Artifacts Using the Opaque Form of Kubelka-Munk Theory

Apr 11, 2021

Abstract: Kubelka-Munk (K-M) theory has been successfully used to estimate pigment concentrations in the pigment mixtures of modern paintings in spectral imagery. In this study the single-constant K-M theory has been utilized for the classification of green pigments in the Selden Map of China, a navigational map of the South China Sea likely created in the early seventeenth century. Hyperspectral data of the map was collected at the Bodleian Library, University of Oxford, and can be used to estimate the pigment diversity, and spatial distribution, within the map. This work seeks to assess the utility of analyzing the data in the K/S space from Kubelka-Munk theory, as opposed to the traditional reflectance domain. We estimate the dimensionality of the data and extract endmembers in the reflectance domain. Then we perform linear unmixing to estimate abundances in the K/S space, and following Bai, et al. (2017), we perform a classification in the abundance space. Finally, due to the lack of ground truth labels, the classification accuracy was estimated by computing the mean spectrum of each class as the representative signature of that class, and calculating the root mean squared error with all the pixels in that class to create a spatial representation of the error. This highlights both the magnitude of, and any spatial pattern in, the errors, indicating if a particular pigment is not well modeled in this approach.

* 11 pages, 9 figures
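To make the K/S space in the abstract above concrete, the following sketch applies the standard opaque-layer Kubelka-Munk relation K/S = (1 - R)^2 / (2R) and its inverse, then mixes two spectra linearly in K/S space as single-constant theory assumes. It shows only the forward mixing model, not the unmixing or classification pipeline of the paper; the function names and the two-band example spectra are hypothetical.

```python
import math


def reflectance_to_ks(r):
    """Opaque-form Kubelka-Munk transform: K/S = (1 - R)^2 / (2 R)."""
    if not 0.0 < r <= 1.0:
        raise ValueError("reflectance must lie in (0, 1]")
    return (1.0 - r) ** 2 / (2.0 * r)


def ks_to_reflectance(ks):
    """Inverse transform: R = 1 + K/S - sqrt((K/S)^2 + 2 K/S)."""
    return 1.0 + ks - math.sqrt(ks * ks + 2.0 * ks)


def mix_spectra(spectra, abundances):
    """Mix reflectance spectra linearly in K/S space, band by band.

    Single-constant theory treats the K/S of a mixture as the
    abundance-weighted sum of the K/S values of its components.
    """
    n_bands = len(spectra[0])
    mixed = []
    for band in range(n_bands):
        ks = sum(a * reflectance_to_ks(s[band])
                 for s, a in zip(spectra, abundances))
        mixed.append(ks_to_reflectance(ks))
    return mixed


# Two made-up pigment spectra with two bands each, mixed half and half.
pigments = [[0.2, 0.5], [0.6, 0.3]]
mixture = mix_spectra(pigments, [0.5, 0.5])
```

Because the mixing is linear in K/S rather than in reflectance, the mixed reflectance is generally not the plain average of the component reflectances, which is the motivation for unmixing in K/S space.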

ConDA: Continual Unsupervised Domain Adaptation

Apr 07, 2021

Abstract: Domain Adaptation (DA) techniques are important for overcoming the domain shift between the source domain used for training and the target domain where testing takes place. However, current DA methods assume that the entire target domain is available during adaptation, which may not hold in practice. This paper considers a more realistic scenario, where target data become available in smaller batches and adaptation on the entire target domain is not feasible. In our work, we introduce a new, data-constrained DA paradigm where unlabeled target samples are received in batches and adaptation is performed continually. We propose a novel source-free method for continual unsupervised domain adaptation that utilizes a buffer for selective replay of previously seen samples. In our continual DA framework, we selectively mix samples from incoming batches with data stored in a buffer using buffer management strategies and use the combination to incrementally update our model. We compare the classification performance of our continual DA approach against state-of-the-art DA methods that use the entire target domain. Our results on three popular DA datasets demonstrate that our method outperforms many existing state-of-the-art DA methods that have access to the entire target domain during adaptation.
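The buffer-based replay described in the abstract could be sketched as follows. The abstract does not name its buffer management strategies, so this example substitutes reservoir sampling as one plausible choice; the class `ReplayBuffer`, the helper `mixed_batches`, and all parameters are hypothetical, and the model update step is left out.

```python
import random


class ReplayBuffer:
    """Fixed-capacity buffer filled by reservoir sampling (an assumed strategy)."""

    def __init__(self, capacity, seed=0):
        self.capacity = capacity
        self.items = []
        self.seen = 0
        self.rng = random.Random(seed)

    def add(self, sample):
        """Keep each sample seen so far with equal probability."""
        self.seen += 1
        if len(self.items) < self.capacity:
            self.items.append(sample)
        else:
            slot = self.rng.randrange(self.seen)
            if slot < self.capacity:
                self.items[slot] = sample

    def sample(self, k):
        """Draw up to k stored samples without replacement."""
        return self.rng.sample(self.items, min(k, len(self.items)))


def mixed_batches(stream, buffer, replay_size):
    """Yield each incoming batch extended with replayed samples."""
    for batch in stream:
        replay = buffer.sample(replay_size)
        yield list(batch) + replay
        # Store the new samples only after the mixed batch was formed.
        for sample in batch:
            buffer.add(sample)


buffer = ReplayBuffer(capacity=4)
stream = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
batches = list(mixed_batches(stream, buffer, replay_size=2))
```

Each element of `batches` would be passed to an adaptation step, so the model sees a mix of new and previously seen target samples without ever holding the whole target domain in memory.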

Benchmarking Deep Trackers on Aerial Videos

Mar 24, 2021

Abstract: In recent years, deep learning-based visual object trackers have achieved state-of-the-art performance on several visual object tracking benchmarks. However, most tracking benchmarks are focused on ground-level videos, whereas aerial tracking presents a new set of challenges. In this paper, we compare ten trackers based on deep learning techniques on four aerial datasets. We choose top-performing trackers utilizing different approaches, specifically tracking by detection, discriminative correlation filters, Siamese networks and reinforcement learning. In our experiments, we use a subset of the OTB2015 dataset with aerial-style videos; the UAV123 dataset without synthetic sequences; the UAV20L dataset, which contains 20 long sequences; and the DTB70 dataset as our benchmark datasets. We compare the advantages and disadvantages of different trackers in different tracking situations encountered in aerial data. Our findings indicate that the trackers perform significantly worse on aerial datasets than on standard ground-level videos. We attribute this effect to smaller target size, camera motion, significant camera rotation with respect to the target, out-of-view movement, and clutter in the form of occlusions or similar-looking distractors near the tracked object.

* 25 pages, 10 figures, 7 tables

Visualization of Deep Transfer Learning In SAR Imagery

Mar 20, 2021

Abstract: Synthetic Aperture Radar (SAR) imagery has diverse applications in land and marine surveillance. Unlike electro-optical (EO) systems, SAR systems are not affected by weather conditions and can be used both day and night. With the growing importance of SAR imagery, it would be desirable if models trained on widely available EO datasets could also be used for SAR images. In this work, we consider transfer learning to leverage deep features from a network trained on an EO ships dataset and generate predictions on SAR imagery. Furthermore, by exploring the network activations in the form of class-activation maps (CAMs), we visualize the transfer learning process to SAR imagery and gain insight into how a deep network interprets a new modality.

* 4 pages, 5 figures

Automatic Quantification of Facial Asymmetry using Facial Landmarks

Mar 20, 2021

Abstract: One-sided facial paralysis causes uneven movements of facial muscles between the two sides of the face. Physicians currently assess facial asymmetry in a subjective manner based on their clinical experience. This paper proposes a novel method to provide an objective and quantitative asymmetry score for frontal faces. Our metric has the potential to help physicians with diagnosis as well as with monitoring the rehabilitation of patients with one-sided facial paralysis. A deep-learning-based landmark detection technique is used to estimate style-invariant facial landmark points, and dense optical flow is used to generate motion maps from a short sequence of frames. Six face regions are considered, corresponding to the left and right parts of the forehead, eyes, and mouth. Motion is computed and compared between the left and the right parts of each region of interest to estimate the symmetry score. For testing, asymmetric sequences are synthetically generated from a facial expression dataset. A score equation is developed to quantify symmetry in both symmetric and asymmetric face sequences.

* 5 pages, 4 figures

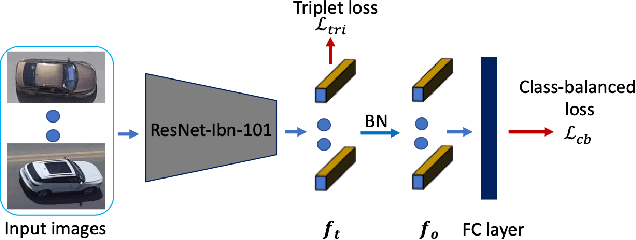

LABNet: Local Graph Aggregation Network with Class Balanced Loss for Vehicle Re-Identification

Nov 29, 2020

Abstract: Vehicle re-identification is an important computer vision task where the objective is to identify a specific vehicle among a set of vehicles seen at various viewpoints. Recent methods based on deep learning utilize a global average pooling layer after the backbone feature extractor; however, this ignores any spatial reasoning on the feature map. In this paper, we propose local graph aggregation on the backbone feature map to learn associations of local information and hence improve feature learning as well as reduce the effects of partial occlusion and background clutter. Our local graph aggregation network considers spatial regions of the feature map as nodes and builds a local neighborhood graph that performs local feature aggregation before the global average pooling layer. We further utilize a batch normalization layer to improve the system effectiveness. Additionally, we introduce a class-balanced loss to compensate for the imbalance in the sample distributions found in the most widely used vehicle re-identification datasets. Finally, we evaluate our method on three popular benchmarks and show that our approach outperforms many state-of-the-art methods.
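One common way to build a class-balanced loss is to reweight each class by the inverse of its "effective number" of samples, (1 - beta^n) / (1 - beta). The abstract does not state which formulation LABNet uses, so the sketch below is only an assumed example of the general idea; the function name, the value of `beta`, and the sample counts are all hypothetical.

```python
def class_balanced_weights(samples_per_class, beta=0.999):
    """Per-class loss weights from the effective number of samples.

    Raw weight for a class with n samples: (1 - beta) / (1 - beta ** n).
    Weights are rescaled so that they sum to the number of classes.
    """
    raw = [(1.0 - beta) / (1.0 - beta ** n) for n in samples_per_class]
    scale = len(raw) / sum(raw)
    return [w * scale for w in raw]


# A vehicle identity with 1000 images versus one with only 10 images.
weights = class_balanced_weights([1000, 10])
```

Multiplying each sample's classification loss by the weight of its class gives rare identities a larger contribution, which counteracts the imbalance in the sample distribution.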
